Bridging the Divide: Traditional and Modern Architectures

We are in the midst of a significant shift in application architectures. According to Cisco (Global Cloud Index: 2016-2021), 85% of new app workload instances are container-based. This implies they are being designed and developed using modern app architectures that rely heavily on API-first, microservices principles. This architecture is markedly different from the traditional three-tier web architecture that has dominated the application space since the Internet emerged as a reliable means of doing business.

But traditional applications are not going away, either. Studies have shown that traditional (legacy) applications have lifespans longer than many careers, reaching into the 20-year range. Indeed, research from BMC found that more than 51% of respondents had more than half of their data residing on a mainframe. Respondents further indicated increases in transaction volumes (59%), data volumes (55%), and speed of application change (45%).

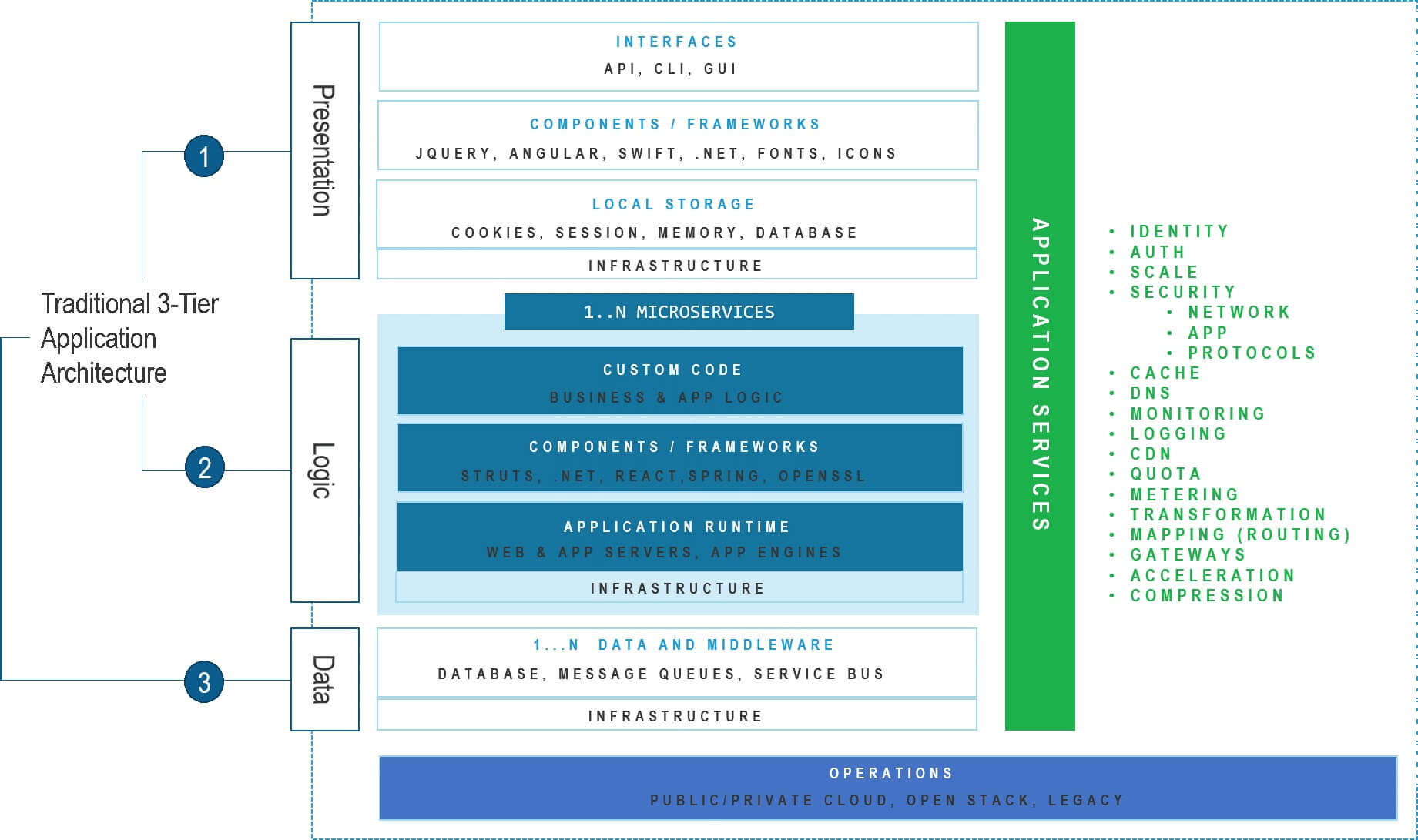

These architectures are divided by many characteristics, including technology choices.

Traditional apps rely on web servers, app servers, and relational databases. Containers, app engines, and NoSQL databases are the go-to choices for modern development. Traditional apps reside primarily on-premises and take advantage of shared resources. Modern apps are in the cloud and expect resources to be dedicated just for them.

We also find contrast in the architectures used to deliver the services all apps rely on to enforce security, ensure availability, and improve performance. To be clear, the divide isn't really in the use of application services, but in the efficiency of the platforms that deliver them.

Capacity and Connections

Efficiency is the number one outcome desired from digital transformation, according to our State of Application Services 2019. 70% of organizations cited IT optimization (efficiency) over more ‘buzzword bingo’ benefits like competitive advantage (46%) and new business opportunities (45%). In the 2019 State of the CIO survey, 40% of respondents said that increasing operational efficiency will be the most significant initiative driving IT investment this year.

Efficiency is important. The problem is that along with the divide between traditional and modern architectures comes a divide in the way we define efficiency.

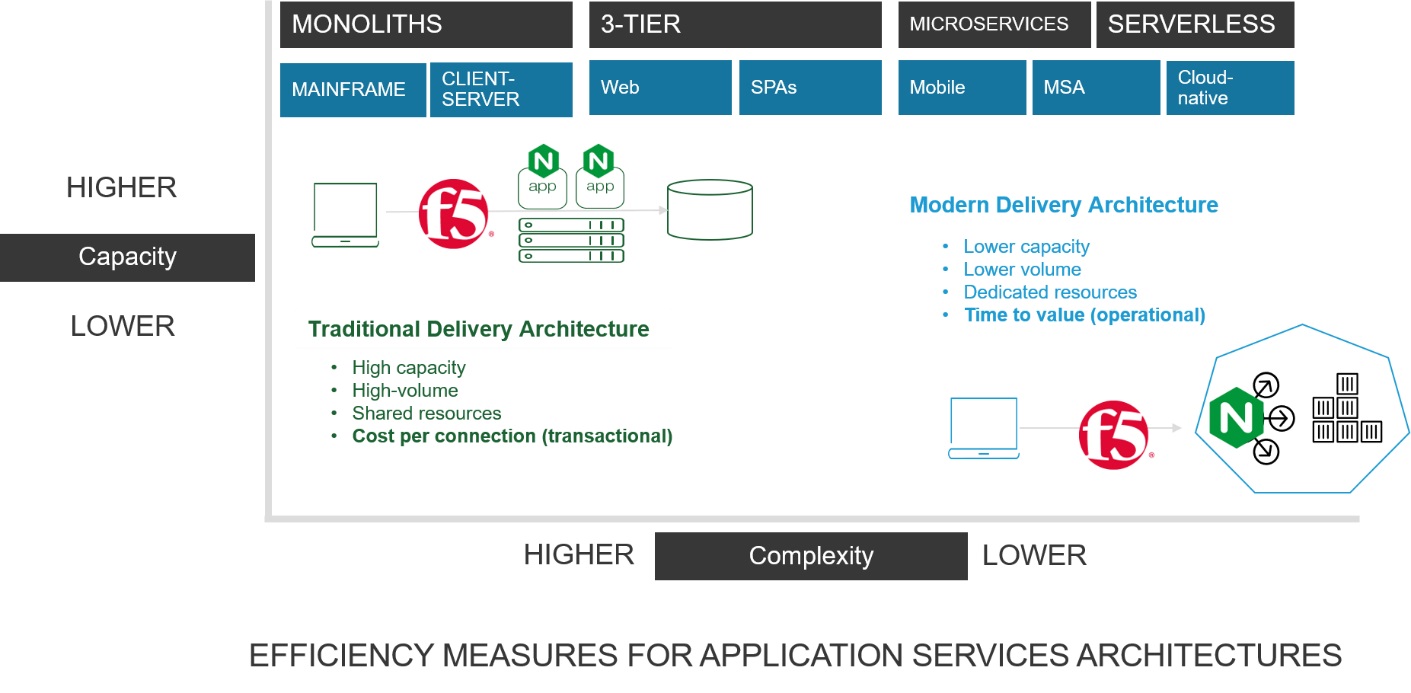

Traditional delivery architectures measure efficiency based on transactional values like cost per connection. Systems were built on the premise of shared infrastructure and resources that demanded highly reliable, scalable platforms from which to deliver application services. A single application delivery platform acts as the gateway to an average of over 130 different applications. Its efficiency is based on cost per connection (capacity), and generally speaking the lower the better. Complexity is acceptable as long as high capacity is achieved.
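To make the cost-per-connection measure concrete, here is a minimal sketch in Python. The function name and the dollar and capacity figures are hypothetical, chosen only for illustration; they are not drawn from any survey or product data.

```python
def cost_per_connection(platform_cost: float, max_connections: int) -> float:
    """Return the cost of one unit of connection capacity on a platform."""
    if max_connections <= 0:
        raise ValueError("max_connections must be positive")
    return platform_cost / max_connections


# Hypothetical figures: a shared platform costing 100,000 that can
# handle 2,000,000 concurrent connections across all the apps it fronts.
shared = cost_per_connection(platform_cost=100_000.0, max_connections=2_000_000)
print(f"cost per connection: {shared:.4f}")
```

Under this measure, doubling capacity at the same cost halves the result, which is why traditional platforms tolerate complexity in exchange for capacity.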

Today’s cloud-native and container-based approach to applications points to an explosion of “applications” that require the same security and scale as their monolithic predecessors. A single application is no longer expected to scale to millions of connections. Rather, those million connections will be distributed across hundreds (or thousands) of smaller applications. Application services don’t need to scale to millions of connections because “applications” don’t scale vertically anymore; they scale horizontally. Each one is responsible for only a small slice of the overall connections. The application services that distribute those connections, then, do not require as high a capacity either.
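The arithmetic behind that claim can be sketched in a few lines of Python. The function name and the instance count of 300 are assumptions made for illustration, and the sketch assumes connections are spread as evenly as possible, which real load distribution only approximates.

```python
from typing import List


def connections_per_instance(total_connections: int, instances: int) -> List[int]:
    """Spread connections as evenly as possible across instances."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    base, extra = divmod(total_connections, instances)
    # The first `extra` instances each take one additional connection.
    return [base + 1 if i < extra else base for i in range(instances)]


# Hypothetical: one million connections across 300 small applications.
load = connections_per_instance(1_000_000, 300)
print(max(load), min(load))
```

No single instance in this sketch sees more than a few thousand connections, so the service in front of each one can be sized for that slice rather than for the full million.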

Speed, Simplicity, and Security

In modern architectures, efficiency is instead measured based on simplicity and speed. Fast, frequent changes need to be made to satisfy the growing appetite of the digital economy. A whopping 62% of respondents to the 2017 SDxCentral Container and Cloud Orchestration Report deploy containers for “faster spin up and down” speed. Nearly half (47%) deploy containers specifically because of easier management. Application services, then, must be easy and fast to obtain, deploy, and operate within modern architectures. This is why open source dominates the CI/CD toolchain and why NGINX is such an attractive tool for much of the developer and DevOps communities.

Modern delivery platforms aren’t that efficient in traditional architectures, and traditional delivery platforms aren't that efficient in modern architectures. This is particularly evident when we look at application security. Application security is primarily designed to prevent external (public) attacks from reaching applications, servers, and data sources. Effective—and efficient—application security does that as close to the source of the attack as possible. By the time an attack or malicious payload is seen by an application, it’s generally too late. Resources have been consumed. The malware has been delivered. The malicious code has already implanted itself.

In terms of architecture, that generally means application security is most efficiently deployed in a traditional north-south (N-S) architecture, where it can prevent malicious traffic from reaching applications executing in either type of environment (that is, modern or traditional).

Bridging the Divide

Briefly, that sums up the existing architectural divide we aim to bridge with the acquisition of NGINX. Customers need options for both traditional and modern delivery architectures to satisfy their own efficiency equation. We see a need for both to enable customers to bridge the divide between traditional (N-S) and modern east-west (E-W) architectures.

Today, both architectures are valid and necessary for a business to succeed in delivering digital capabilities faster, more frequently, and, most importantly, in the most efficient way possible to support its most valuable asset: a multi-generational portfolio of applications. We believe the time is now to bridge that divide by bringing together the best of both traditional and modern delivery architectures.

For more about the advantages of bringing F5 and NGINX together, check out a post from F5’s CEO introducing the ‘Bridging the Divide’ blog series.