Which ones do you need and where should they go?

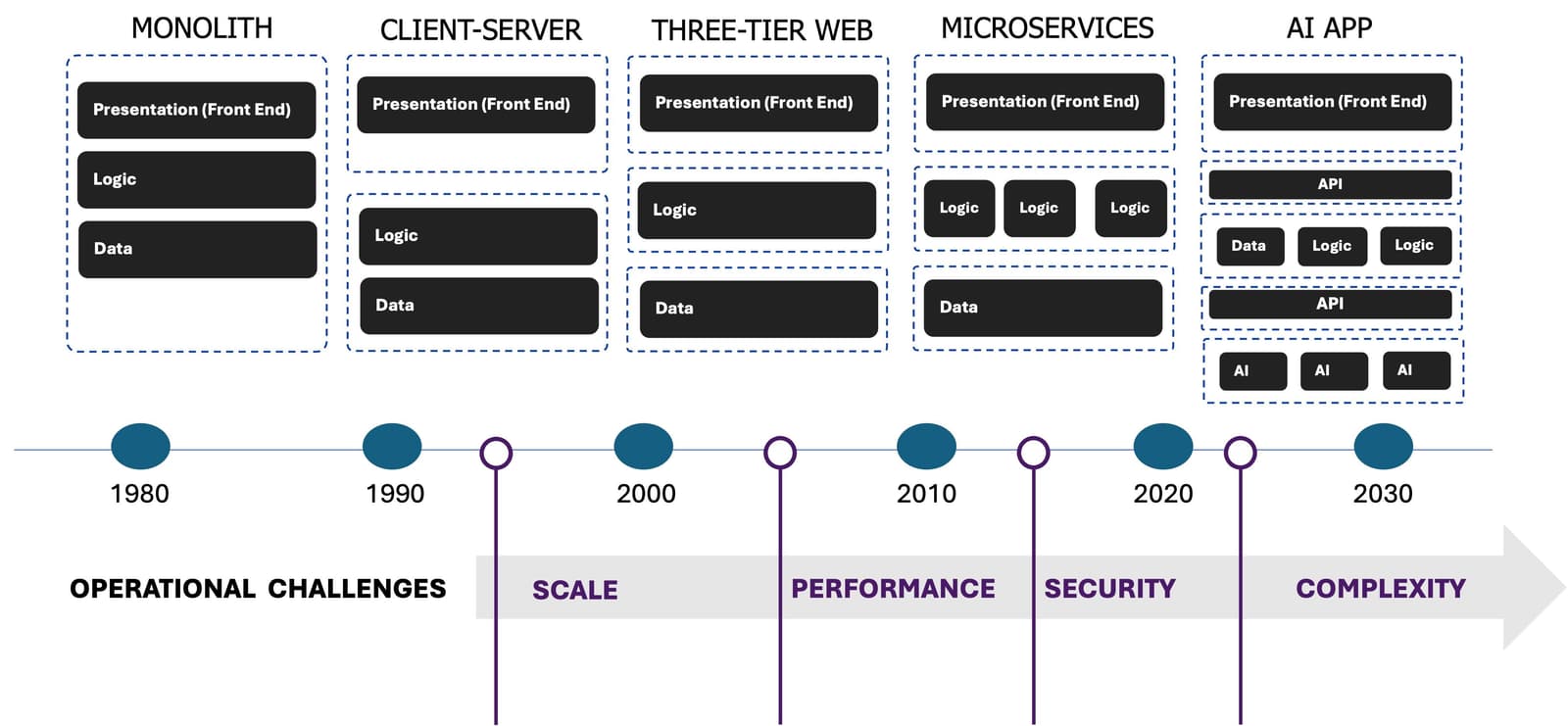

Every new generation of application architecture has an impact on network traffic. We pay attention to this because every significant shift in application architecture is followed by a complementary shift in application delivery and security to address the challenges that arise.

Looking at those shifts and the network responses to them, it is worth noting that AI applications introduce no truly new challenges. Scale, performance, security, and complexity all intensify, of course, but these are the same challenges we’ve been solving for more than a decade.

What AI does change is the distribution of workloads and traffic patterns. That matters because the bulk of network traffic is application traffic and, increasingly, API traffic. Understanding the new traffic patterns and their distribution across core, cloud, and edge provides insight into which application delivery and security services you will need, and where you can put them for maximum impact and efficiency.

New Traffic Patterns

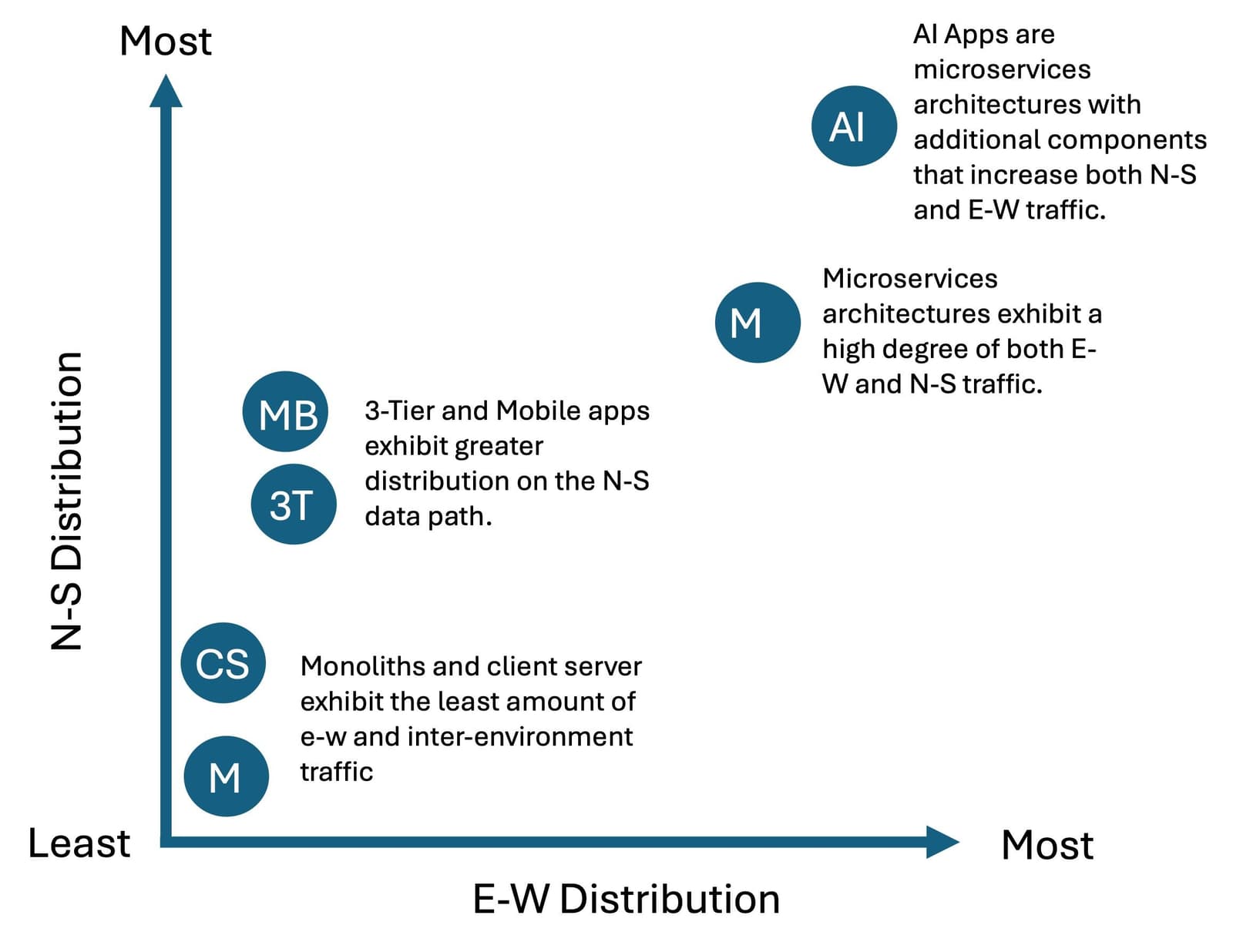

One of the most significant consequences of AI applications will be an increase in both east-west (E-W) and north-south (N-S) traffic. Much of the new N-S traffic will originate from the AI itself, which makes the outbound N-S data path a strategic point of control alongside the traditional inbound N-S data path.
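To make the three data paths concrete, here is a minimal Python sketch that labels a flow as E-W, inbound N-S, or outbound N-S from its source and destination addresses. It assumes the RFC 1918 private ranges stand in for everything inside the corporate boundary; that is a simplification, since E-W traffic between clouds may well use public addresses. The names `classify_flow` and `DataPath` are hypothetical.

```python
import ipaddress
from enum import Enum

# Assumption: RFC 1918 private ranges represent "inside the boundary".
INTERNAL_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


class DataPath(Enum):
    EAST_WEST = "E-W"
    NORTH_SOUTH_INBOUND = "N-S inbound"
    NORTH_SOUTH_OUTBOUND = "N-S outbound"
    EXTERNAL = "external"  # neither endpoint is inside the boundary


def is_internal(address: str) -> bool:
    """Return True when the address falls in one of the internal ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in INTERNAL_NETWORKS)


def classify_flow(source: str, destination: str) -> DataPath:
    """Label a flow by which side of the boundary each endpoint sits on."""
    src_in, dst_in = is_internal(source), is_internal(destination)
    if src_in and dst_in:
        return DataPath.EAST_WEST
    if dst_in:
        return DataPath.NORTH_SOUTH_INBOUND
    if src_in:
        # For example, an AI application calling an external API or tool.
        return DataPath.NORTH_SOUTH_OUTBOUND
    return DataPath.EXTERNAL
```

A call such as `classify_flow("10.0.0.5", "203.0.113.7")` lands on the outbound N-S path, which is the path AI-originated traffic makes newly important.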

AI applications will be additive to existing portfolios for the next 2-3 years, with consolidation occurring as organizations come to understand consumer demand for natural language interfaces (NLI).

Increasing distribution on the N-S data path will drive greater demand for security as a service at the corporate boundary, while increasing distribution on the E-W data path across environments is driving the need for multicloud networking. Internally, the sensitivity of data on the E-W data path is accelerating the need for security and access capabilities.

The result is two new insertion points in AI application architectures where application delivery and security will be valuable, and an opportunity to reconsider where application delivery and security are deployed with an eye toward efficiency, cost reduction, and efficacy.

This is important given that we’re starting to see CVEs logged against inference servers. The inference server is the part of the “model” tier that communicates with clients via an API. API security matters here in the overall AI security strategy because this is where capabilities to inspect, detect, and protect AI models and servers against exploitation are best deployed. It is the “last line of defense” and, given a programmable API security solution, the fastest means of mitigating new attacks against AI models.
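As a rough illustration of what inspecting requests in front of an inference server could look like, the Python sketch below checks a request before it is forwarded. Everything in it is an assumption made for illustration: the endpoint path, the `prompt` field, the size limits, and the function name `inspect_inference_request` do not come from any particular product or inference server.

```python
import json
from dataclasses import dataclass

# Hypothetical policy values; real limits depend on the model and server.
ALLOWED_PATHS = {"/v1/generate"}
MAX_BODY_BYTES = 64 * 1024
MAX_PROMPT_CHARS = 8_000


@dataclass(frozen=True)
class Verdict:
    allowed: bool
    reason: str


def inspect_inference_request(method: str, path: str, body: bytes) -> Verdict:
    """Decide whether a request may be forwarded to the inference server."""
    if method != "POST":
        return Verdict(False, "method not allowed")
    if path not in ALLOWED_PATHS:
        return Verdict(False, "unknown endpoint")
    if len(body) > MAX_BODY_BYTES:
        return Verdict(False, "body too large")
    try:
        payload = json.loads(body)
    except ValueError:
        # Covers both invalid JSON and undecodable bytes.
        return Verdict(False, "malformed JSON")
    if not isinstance(payload, dict):
        return Verdict(False, "payload must be a JSON object")
    prompt = payload.get("prompt")
    if not isinstance(prompt, str) or not prompt:
        return Verdict(False, "missing prompt")
    if len(prompt) > MAX_PROMPT_CHARS:
        return Verdict(False, "prompt too long")
    return Verdict(True, "ok")
```

The value of programmability is that a check like this can be extended with a new rule as soon as a new attack against an inference server is disclosed, without waiting to patch the server itself.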

Insertion Points for App Delivery and Security

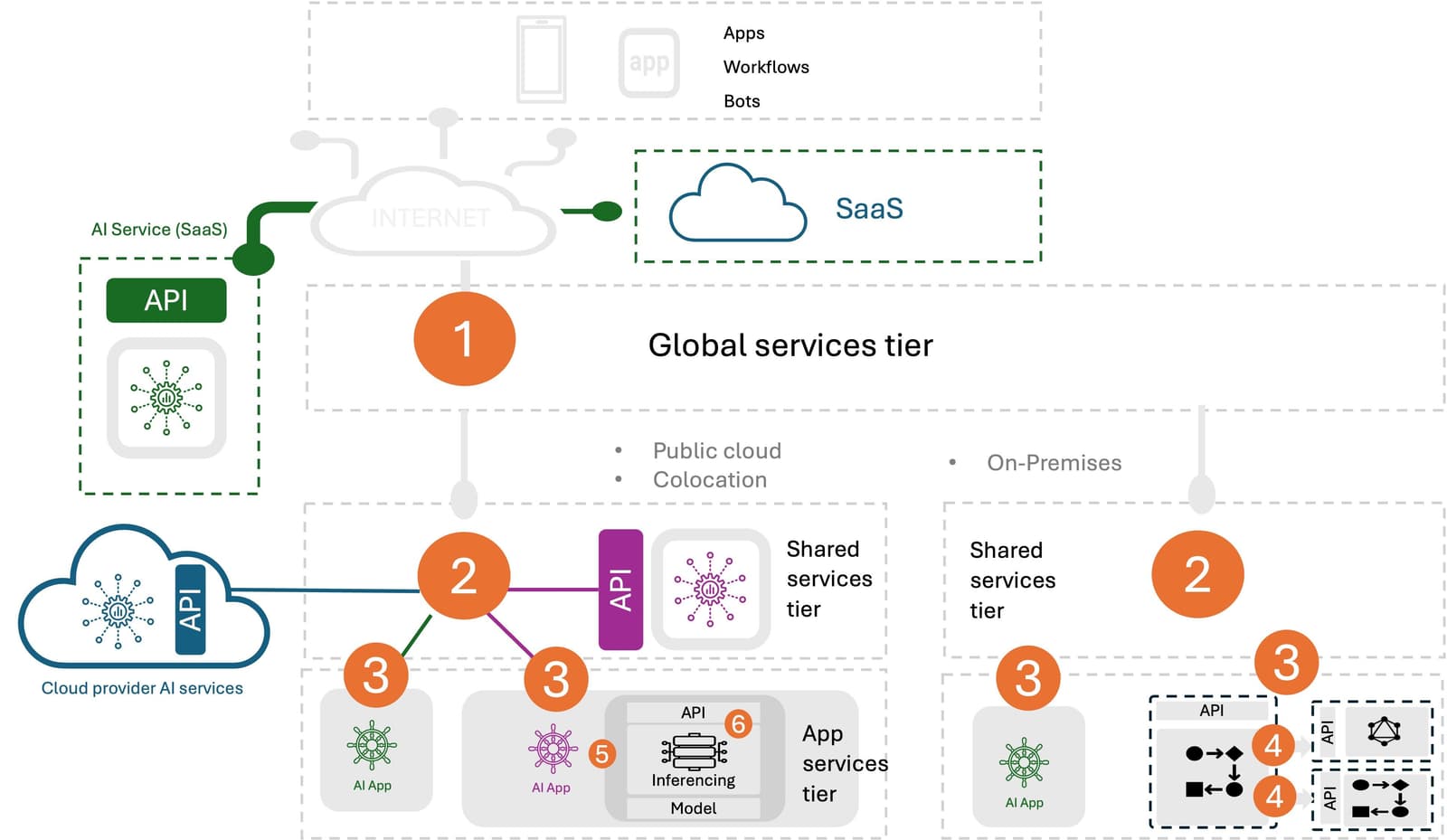

You may recall this post on AI inferencing patterns, in which we demonstrate the three main deployment patterns for AI inferencing today. Based on those patterns, we can identify six distinct insertion points in this expanded architecture for application services and identify where those services are best deployed to optimize for security, scale, and efficiency.

- Global Services (per company): Application delivery and security at this tier are generally security services but also include company-level delivery services such as DNS, GSLB, and multicloud networking. Security services such as DDoS and bot protection are well suited here because they prevent attackers from consuming critical (and costly) resources deeper in the IT estate, especially those applications hosted in the public cloud.

- Shared Services (per location): Application delivery and security at this tier provide further protection against attackers as well as availability services such as load balancing for applications, APIs, and infrastructure services (firewall, SSL VPN, etc.).

- Application Services (per application): Application delivery and security at this insertion point are more closely tied to the application or API they deliver and protect. These include app services such as WAF, local load balancing, and ingress control for modern applications. These app services deliver and secure “user to app” communications.

- Microservices Networking (per cluster): Application delivery and security at this insertion point are typically deployed as part of the Kubernetes infrastructure and include mTLS and service mesh. These services deliver and secure “app to app” communications.

- AI Inferencing Services (per AI compute complex): This new insertion point is specific to AI applications and includes delivery and security capabilities designed specifically to deliver and protect AI inferencing services. Load balancing is common, as is application layer rate limiting to protect AI inferencing APIs. See “The Impact of AI Inferencing on Data Center Architecture” for more details.

- AI Infrastructure Services (per AI server): This new insertion point is embedded in the AI network fabric, with application delivery and security deployed on DPUs to offload those services from the CPU. This improves the efficiency of inferencing investments by allowing the inferencing servers to “just serve.” “F5 is Scaling Inferencing from the Inside Out” provides more detail on this tier.
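The application layer rate limiting mentioned for AI inferencing services is often implemented as a token bucket. The Python sketch below shows one possible per-client version; the class names, the `cost` parameter (which could be used to weight a request by prompt size), and the injectable `clock` are all assumptions made for illustration rather than a description of any specific product.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = capacity
        self.updated_at = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.updated_at
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.updated_at = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class RateLimiter:
    """Keeps one bucket per client identifier (for example, an API key)."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, cost: float = 1.0) -> bool:
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second,
                                 self.clock)
            self._buckets[client_id] = bucket
        return bucket.allow(cost)
```

A proxy in front of the inferencing API would call `limiter.allow(api_key)` on each request and return an HTTP 429 response when it gets `False`. Keeping the limiter outside the inference server means rejected requests never consume inferencing capacity.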

Now, the truth is that most application delivery and security services can be deployed at any of these insertion points. The exception would be those services specifically designed to integrate with an environment, such as ingress controllers and service mesh, which are bound to Kubernetes deployments.
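One way to reason about this is to write the six insertion points down as data. The Python sketch below encodes each point with its scope and the services named above, and treats ingress control and service mesh as the Kubernetes-bound exceptions. The structure and the `candidate_points` helper are hypothetical, and restricting bound services to the points that list them is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class InsertionPoint:
    name: str
    scope: str
    typical_services: Tuple[str, ...]


# Names, scopes, and services are taken from the list of insertion points.
INSERTION_POINTS = (
    InsertionPoint("Global Services", "per company",
                   ("DNS", "GSLB", "multicloud networking",
                    "DDoS protection", "bot protection")),
    InsertionPoint("Shared Services", "per location", ("load balancing",)),
    InsertionPoint("Application Services", "per application",
                   ("WAF", "local load balancing", "ingress control")),
    InsertionPoint("Microservices Networking", "per cluster",
                   ("mTLS", "service mesh")),
    InsertionPoint("AI Inferencing Services", "per AI compute complex",
                   ("load balancing", "application layer rate limiting")),
    InsertionPoint("AI Infrastructure Services", "per AI server",
                   ("DPU offload of delivery and security",)),
)

# Services described as bound to Kubernetes deployments.
KUBERNETES_BOUND = {"ingress control", "service mesh"}


def candidate_points(service: str) -> List[str]:
    """Return the insertion points where a service could be deployed.

    Assumption: Kubernetes-bound services are limited to the points that
    list them; every other service is deployable at any point.
    """
    if service in KUBERNETES_BOUND:
        return [p.name for p in INSERTION_POINTS
                if service in p.typical_services]
    return [p.name for p in INSERTION_POINTS]
```

Here `candidate_points("WAF")` returns all six points, while `candidate_points("service mesh")` returns only Microservices Networking. Choosing among the candidates is then a question of efficacy, efficiency, and cost.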

The key is to identify the insertion point at which you can best balance three variables: efficacy, efficiency, and cost. Cost includes not just the operational cost of the services themselves, but also the cost of processing that traffic deeper in the IT estate.

And while there are best practices for matching application delivery and security with insertion points (hence the mention of specific services for each), there are always reasons to deviate because no two enterprise architectures are the same. This is also one of the primary reasons for making application delivery and security programmable: no two environments, applications, or networks are the same, and the ability to customize for unique use cases is a critical capability.

The need for application delivery and security across environments and insertion points is why F5 insists on supporting their deployment at as many insertion points as possible, in every environment. That is how we ensure that organizations can optimize for efficacy, efficiency, and cost regardless of how they’ve architected their environment, applications, and networks.
