Executive Summary

Creating an encrypted HTTPS website depends on a lot more than simply throwing a digital certificate at it and hoping for the best. As old protocols prove to be insecure and new standards emerge, it has never been more important to keep HTTPS configurations up to date. In fact, Transport Layer Security (TLS) and HTTPS misconfigurations are now so commonplace that in the 2021 OWASP Top 10, Cryptographic Failures now comes in second place.1

As this report shows, the issue is not so much the lack of adopting new ciphers and security features but the rate at which old and vulnerable protocols are removed. Attackers know there is a correlation between poor HTTPS configurations and a vulnerable web server. Websites that routinely fail to follow TLS best practices are also found to be running old (and likely vulnerable) web servers.

On top of that is the potential use or abuse of web encryption for malicious purposes. Attackers have learned how to use TLS to their advantage in phishing campaigns, governments worldwide seek to subvert encryption to their benefit, and fingerprinting techniques raise questions about the prevalence of malware servers in the top one million sites on the web.

In order to collect the data for this report, we have continued to develop our own TLS scanning tool, Cryptonice, which is now free and open source. Security teams and website operators can use this to evaluate the cryptographic posture of their own sites and even bake it into their DevSecOps workflows for fully automated HTTPS auditing.

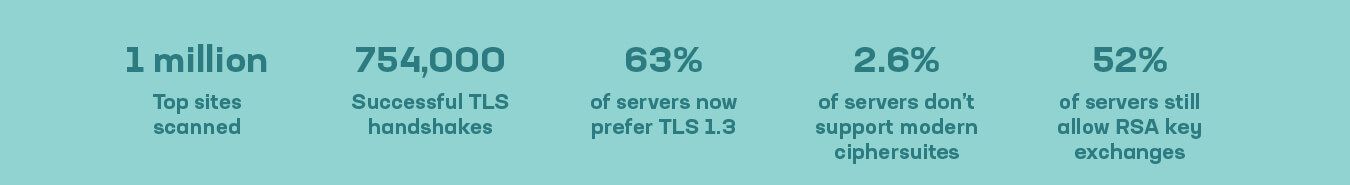

Here are some detailed stats on what’s good, what’s bad, and what’s troubling in the world of TLS:

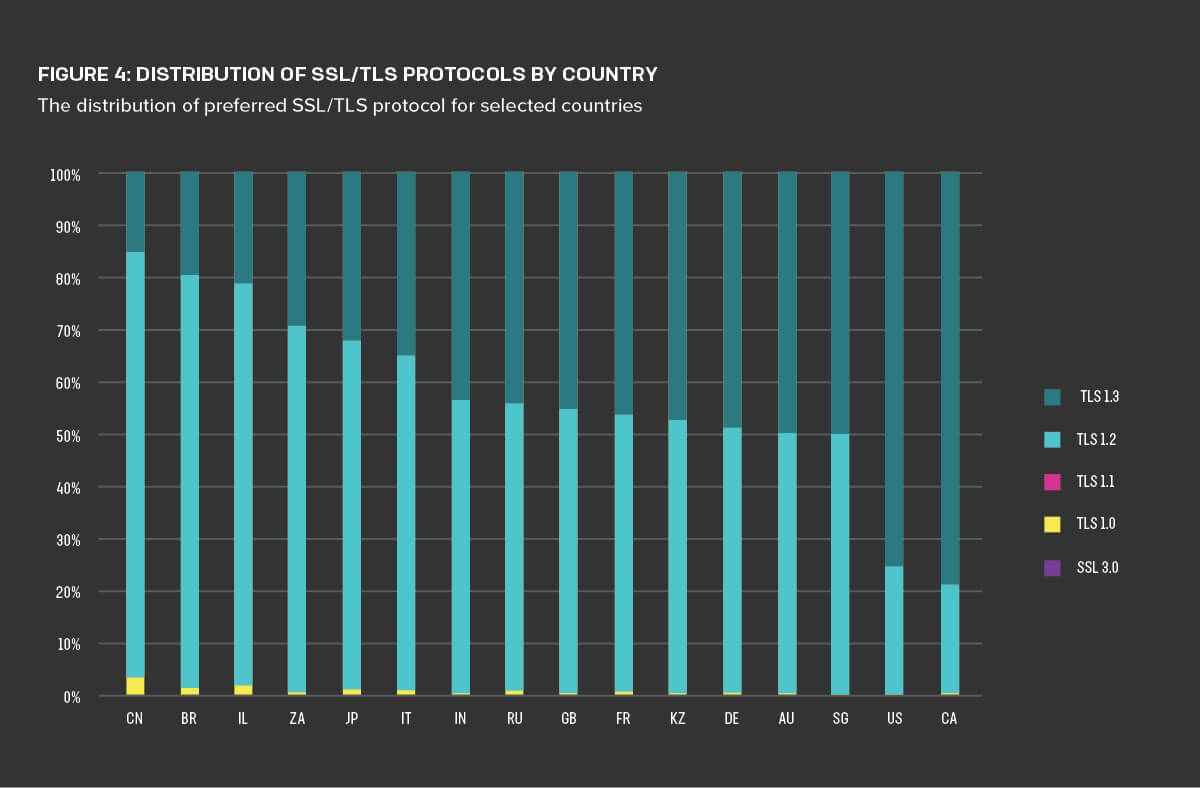

- TLS 1.3, now just over two years old, has risen to become the preferred protocol for 63 percent of the top one million web servers on the Internet. Support can vary drastically, however. In some countries, such as the United States and Canada, as many as 80 percent of web servers choose it, while in others, such as China and Israel, only 15 percent of servers support it.

- The move to elliptic curve cryptography is slow but steady, with 25 percent of certificates now signed with the Elliptic Curve Digital Signature Algorithm (ECDSA) and over 99 percent of servers choosing non-RSA handshakes when possible.

- Despite widespread TLS 1.3 adoption, old and vulnerable protocols are being left enabled. RSA handshakes are allowed by 52 percent of web servers, SSL v3 is enabled on 2 percent of sites, and 2.5 percent of certificates had expired.

- TLS 1.0 and 1.1 are now officially deprecated due to known security flaws. They have largely disappeared from use across the top one million sites, although a small number of web servers, 0.4 percent, still select one of them during an HTTPS connection.

- Encryption continues to be abused. The proportion of phishing sites using HTTPS and valid certificates has risen to 83 percent, with roughly 80 percent of malicious sites coming from just 3.8 percent of the hosting providers.

- Recent research has found active SSLStrip attacks successfully stealing user logon credentials, indicating the growing need for using HTTP Strict Transport Security (HSTS) headers or completely disabling HTTP services.

- Certificate revocation methods are almost entirely broken, driving a growing desire across the certificate authority (CA) and browser industries to move toward extremely short-term certificates.

By comparing themselves with the top one million sites, security teams can perform a gap analysis of their own web servers to determine areas of improvement to prioritize. We’ve also included relevant stories from the past 18 months to illustrate how lapses in TLS can have very real-world consequences.

Introduction

It is now Autumn 2021, which means that eighteen months have passed since F5 Labs last revisited encryption—everyone’s favorite dusty corner of Internet infrastructure. Even though encryption can feel like a “solved problem,” the devil is still in the details after all these years, and it remains possible to mess this up, solved problem or not. As a result, we analyze how successful the Internet’s busiest properties have been at implementing the known best practices around HTTPS and TLS. This report presents those findings and our assessments of devilish encryption details that still need attention in too many places.

Figure 1: A snapshot of key TLS telemetry findings

Report Structure

We start with the good news: progress we’ve seen toward everyone reaching a minimum level of security. Then we talk about the bad news: stagnation or even regression in encryption practices. Finally, we turn to the ugly side of encryption—how it is being subverted by organized crime and how governments around the world look to weaken or even ban encryption entirely.

Methodology

The majority of data in this study comes from our scans of sites found in the Tranco Top 1 Million list.2 For the 2019 TLS Telemetry Report,3 we developed the free and open-source tool Cryptonice. Over the past 18 months, we’ve continued to develop and expand the capabilities of this tool to help us capture even more relevant data from Internet-wide probing. We perform scans of the top 1 million sites once per quarter and average results for any given 12-month period. Rather than scanning every IP address, we focus on the most popular websites on the web, since doing so allows us to perform more accurate scans of web configuration. It also helps us provide insight into differences between various industries. We also look at phishing sites as reported by OpenPhish and use their data to investigate which sites are using encryption and which industries are most targeted. Finally, we supplement our findings with client (browser) data captured by Shape Security to clearly understand the most frequently used browsers and bots.

The Good News

You’re supposed to start with a compliment, right? Let’s start with the good news revealed by our research. There’s plenty to discuss, from the evolution away from old protocols to more secure certificate management.

The Shift to TLS 1.3

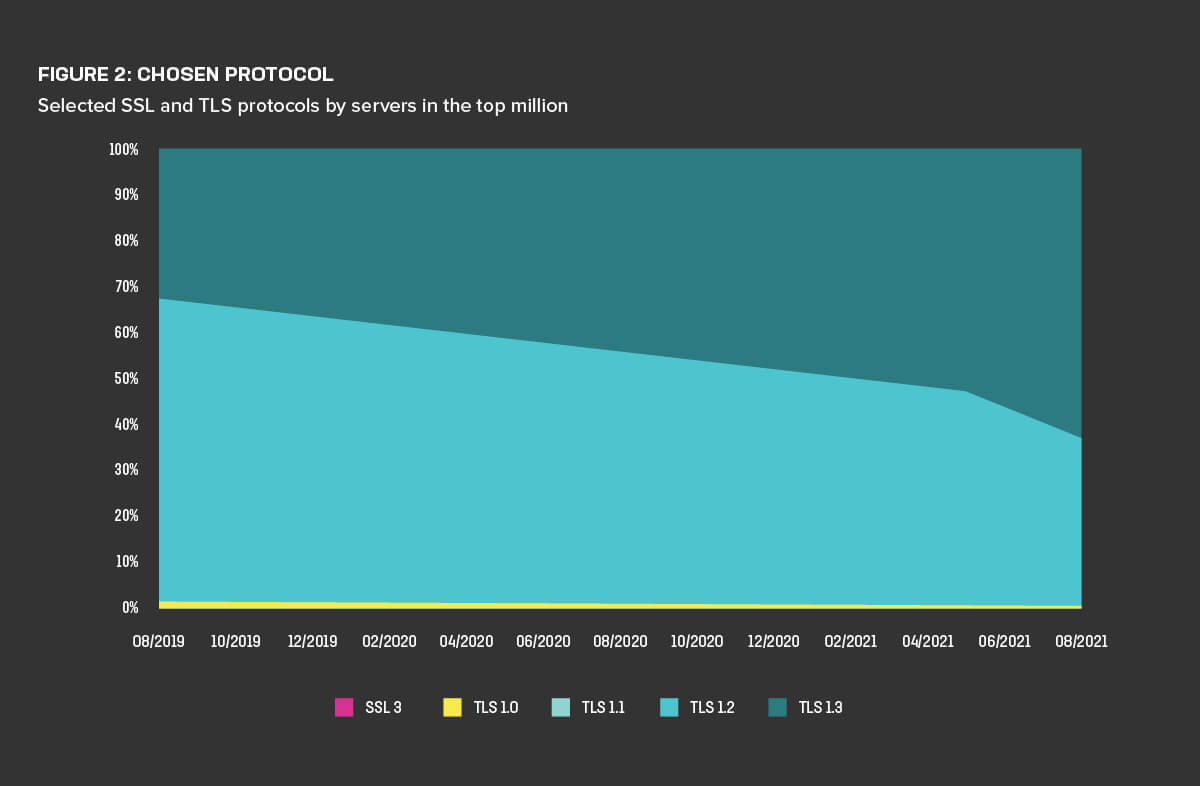

The very good news is that for the first time, TLS 1.3 is the chosen encryption protocol for the majority of web servers among the top million (Figure 2). While TLS 1.3 has been gradually growing in prevalence, two years ago only 32% of servers defaulted to TLS 1.3, and it only climbed to the number one spot in May 2021. The protocol has seen big jumps in popularity following its adoption by large hosting and CDN providers such as Amazon Cloudfront. Almost 63% of servers prefer TLS 1.3 to other protocols as of August 2021.

Of those sites supporting TLS 1.3, the proportion using the “early data” capability—which allows the server to save time by proactively pushing data to the client—grew from 8.4% in 2019 to 9.2% in 2021.4

The IETF officially deprecated TLS 1.0 and TLS 1.1 in March 2021.5 Despite this, SSL 3.0 and TLS 1.0 are still the preferred protocols for a small number of sites, as is barely perceptible in Figure 2. TLS 1.0 is preferred by 0.4% of sites, while SSL 3 preference accounts for just 0.002%. On the client side, data from Shape Security show that Chrome is by far the most prevalent browser. At the time of data collection, Chrome 91 was used by almost 34% of connections, with Chrome 90 accounted for 6.5% of connections. Versions of Mobile Safari were in second and third place with a combined total of 23.5%. In total, well over 95% of all browsers in active use support TLS 1.3.

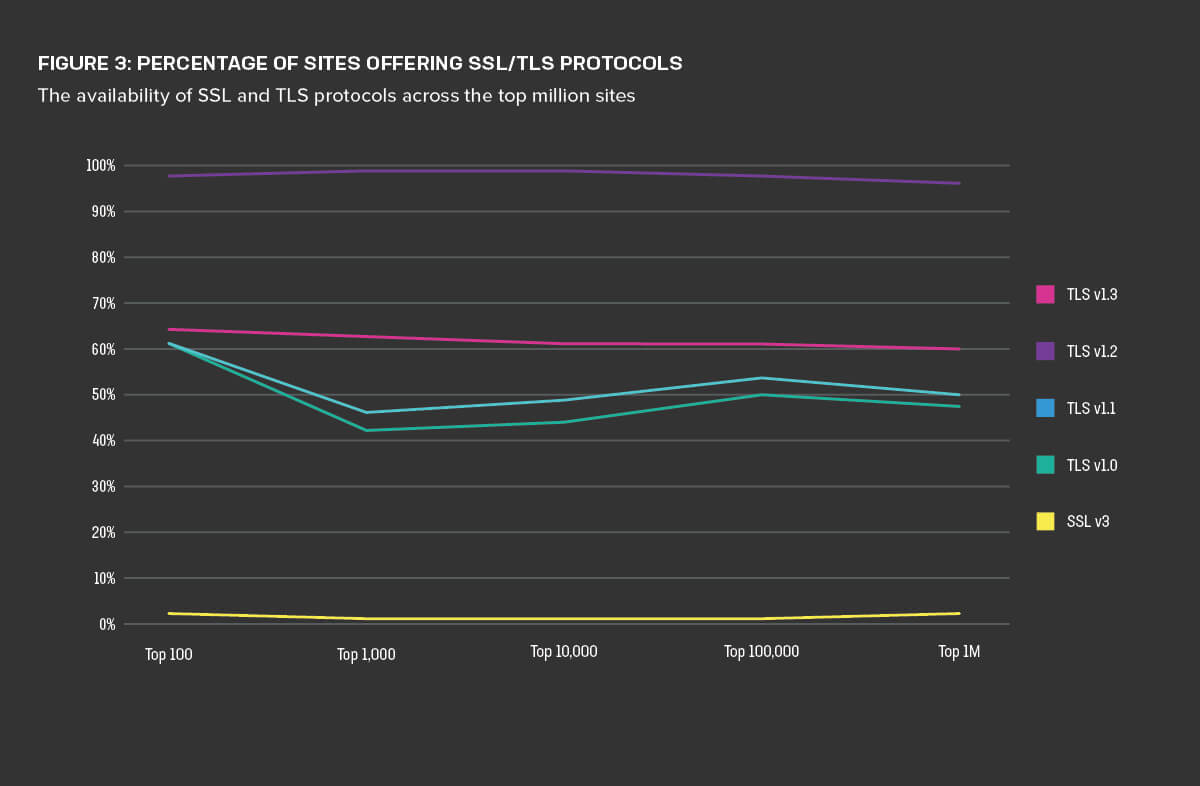

Simply looking at the preferred protocol a server selects for TLS handshakes does not reveal the whole story, however. Support for older, deprecated protocols continues unabated across the entire range of sites (Figure 3). We found no relationship between the amount of traffic a site receives and the protocols it supports. In other words, more popular sites aren’t necessarily stricter when it comes to offering TLS protocols. In fact, the top 100 sites were more likely to still support SSL 3, TLS 1.0, and TLS 1.1 than servers with much less traffic.

In the 2019 report, we analyzed servers’ preferred TLS protocols by the country in which they were based, using country code top level domains (ccTLDs) such as *.co.uk or *.jp. In 2019, the TLDs with a greater prevalence of TLS 1.3 than the .com TLD were .co for Colombia and .id for Indonesia. At the other end of the spectrum, .cn (China) and .jp (Japan) had the lowest prevalence of TLS 1.3. While Chinese domains were marginally better than Japanese domains in supporting TLS 1.3, they also supported TLS 1.0 at a higher rate.

For this report, we supplanted TLD analysis in favor of geolocation lookups on the servers in question. This had the advantage of allowing us to reveal the true location of the servers, not their putative registration information.6 Figure 4 shows the distribution of SSL/TLS protocols by country. Canada and the United States are significantly ahead of the pack in TLS 1.3 preference, with Canadian servers in particular preferring TLS 1.3 nearly 80% of the time. At the other end of the spectrum, Chinese servers show little support for TLS 1.3 and also had the highest occurrence of TLS 1.0 protocols.

Simplifying Cipher Suites

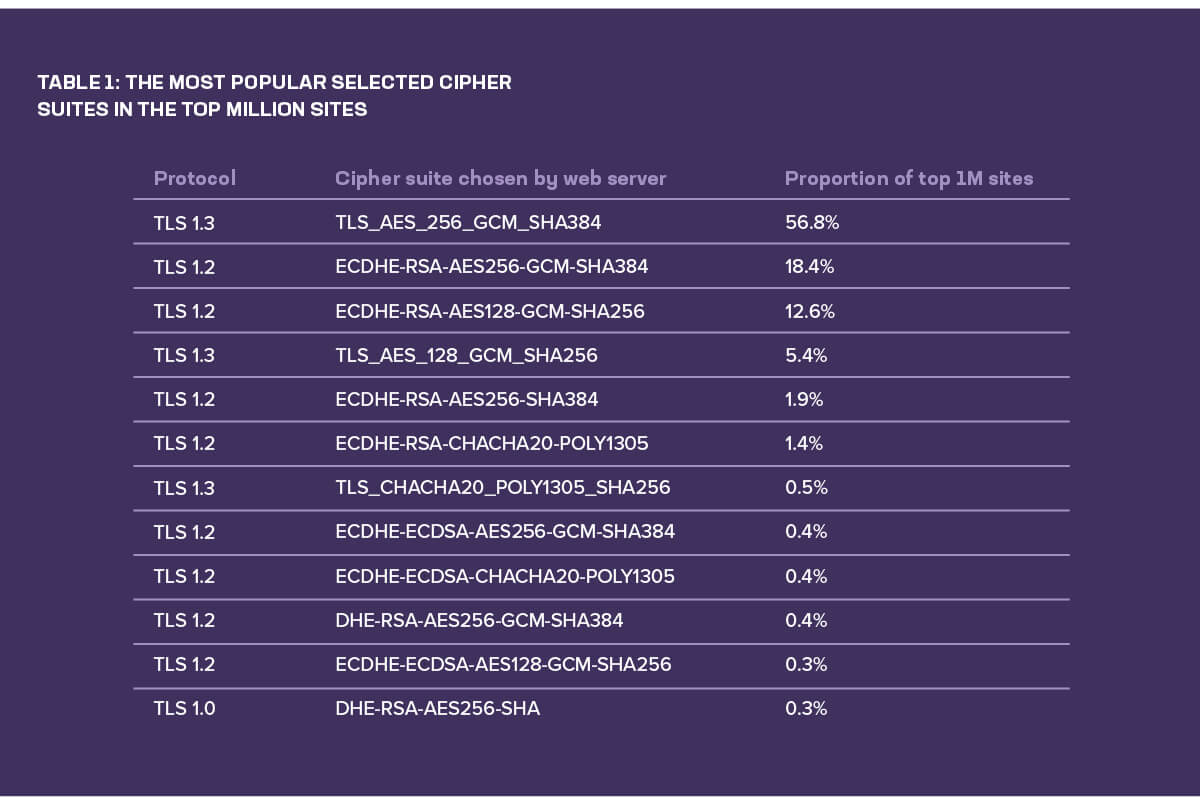

When we switched attention from protocols to cipher suites, the scans revealed that sites in the top million offered, on average, nearly 20 separate cipher suites. This illustrates one reason TLS 1.3 is such an important step forward: It simplifies which suites are available. While we found all five TLS 1.3 cipher suites available across the top million sites, our scans found only three being chosen by servers during TLS handshakes: TLS_AES_256_GCM_SHA384, TLS_AES_128_GCM_SHA256, and TLS_CHACHA20_POLY1305_SHA256.

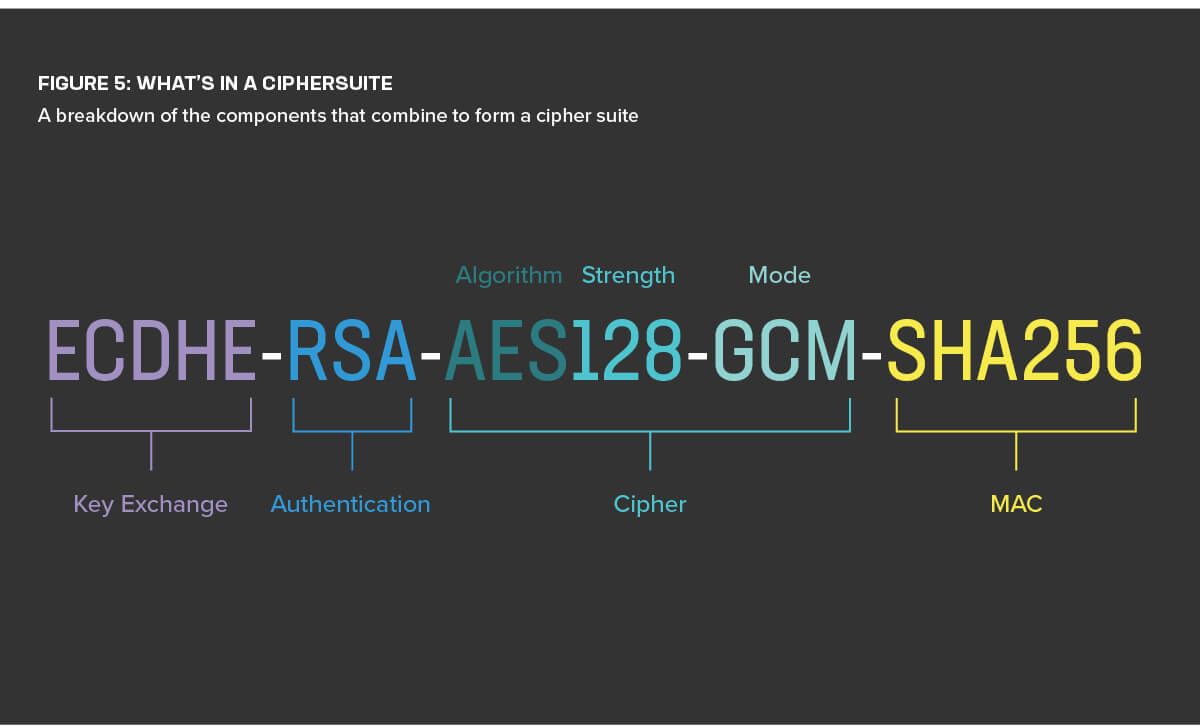

It’s common to refer to the cryptography a server and client agree to use as a cipher, although this isn’t quite accurate. A cipher is the cryptographic algorithm which, when combined with a secret key, scrambles and protects some data. But to send encrypted data over the insecure Internet we need more than a cipher. The client and server must agree:

- How to safely exchange keys (the key agreement).

- How the keys will be authenticated (signed).

- How to use the cipher (the mode of operation).

Finally, they also need to identify which hashing algorithm to use to ensure encrypted messages have not been tampered with. All of these factors combined represent the suite of agreements known as a cipher suite. (See Figure 5.)

Table 1 shows how just over three-quarters of every TLS connection across the top million sites make use of AES (with a 256-bit key) and the SHA-2 hashing algorithm (with a 384-bit output size). This is true for servers that support TLS 1.3 and those which still use TLS 1.2.

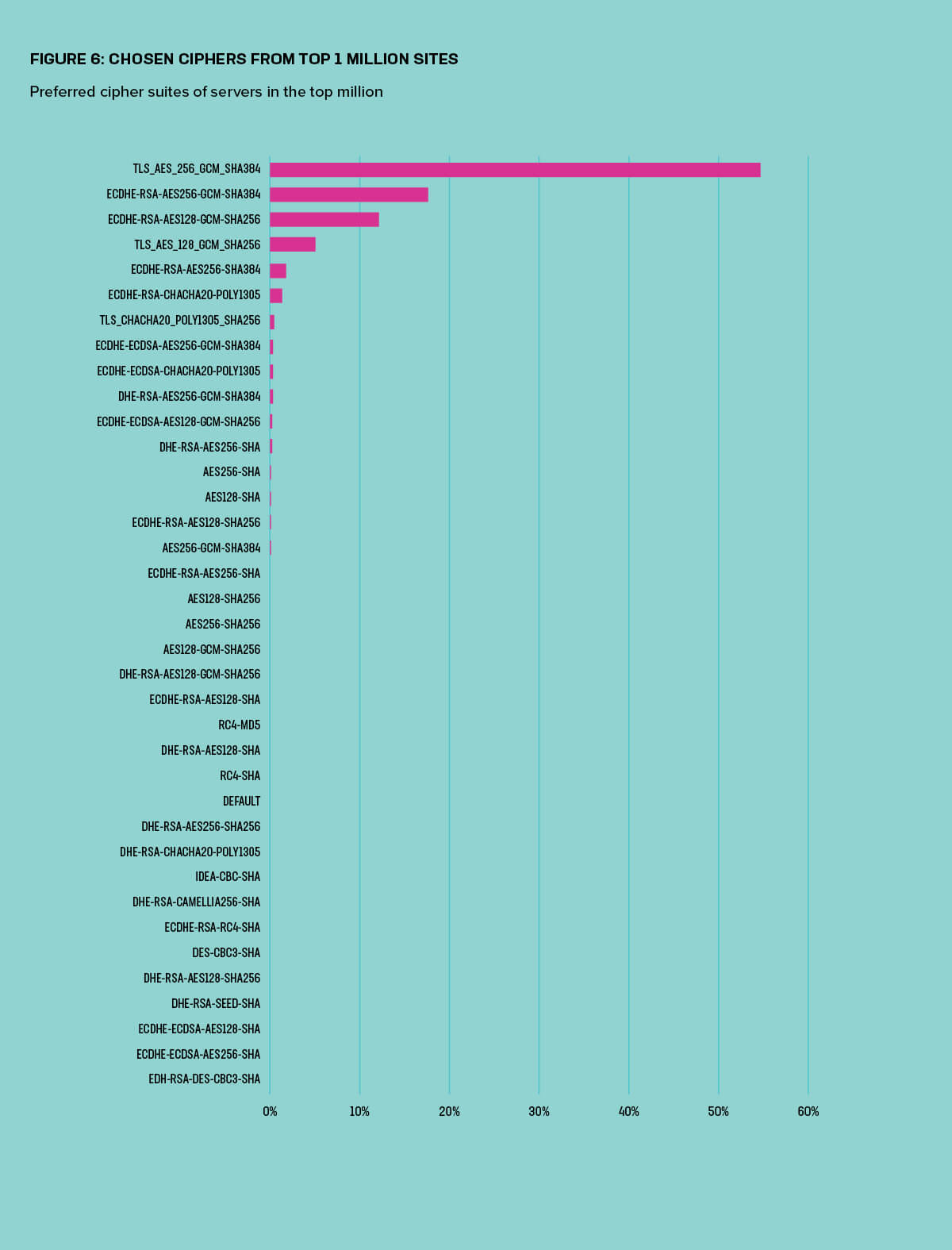

The scan also revealed 58 distinct TLS 1.2 cipher suites available across all servers in the top million. If the rate at which SSL 3 is dying is anything to go by, it will be years before we all benefit from the simplification TLS 1.3 was partially designed to bring. However, as Figure 6 shows, most of those 58 cipher suites were infrequent and made up the long tail, with 84% of servers running TLS 1.2 preferring just one of two cipher suites.

The Shift to Elliptic Curve Crypto

To further improve the security of encrypted communications, most TLS implementations implement forward secrecy, which is achieved by the use of ephemeral (one-time use) keys with elliptic curve cryptography. The final E in the abbreviation DHE and ECDHE indicates the use of ephemeral keys. (See Figure 6).

TLS 1.3 removes the risk of using RSA key exchange, since it only permits ECDHE key agreements. Between the widespread use of TLS 1.3 and older protocols configured to prefer non-RSA key exchanges, almost every site—99.3% in the top million—chooses not to use RSA to exchange keys during the TLS handshake. This is comforting to see.

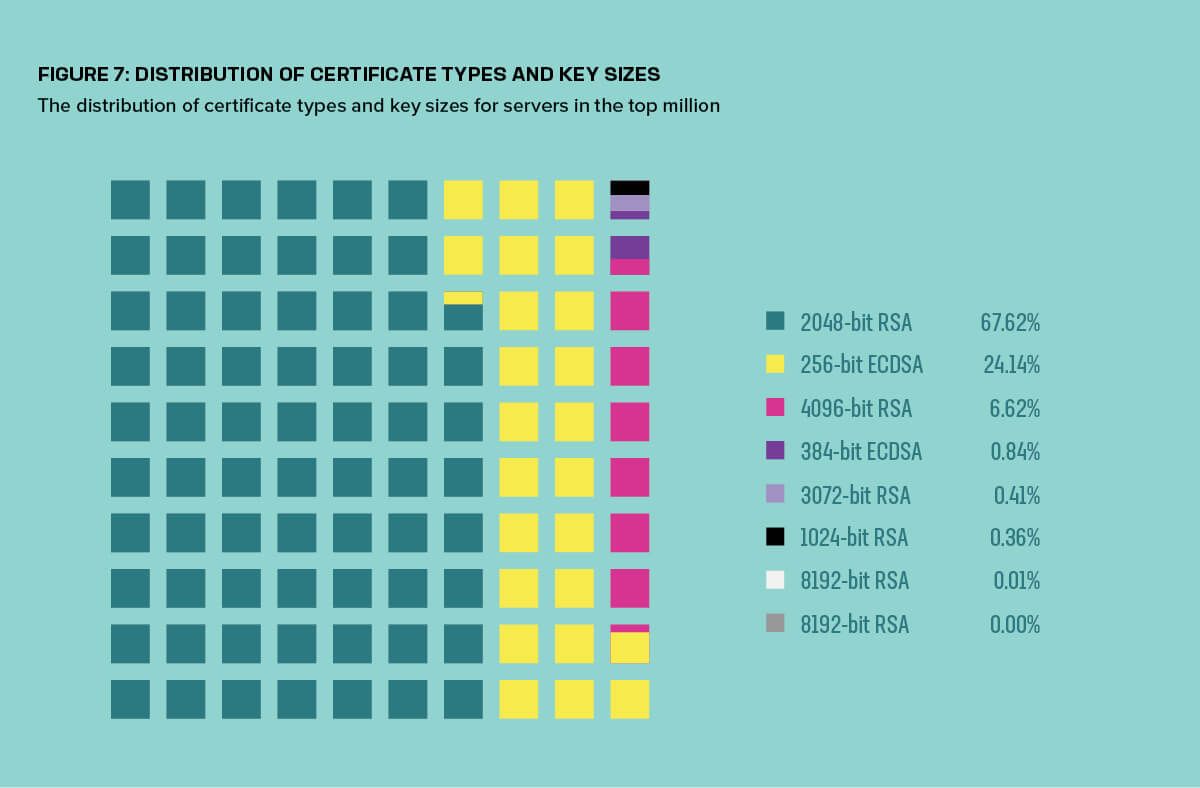

The move away from RSA-based certificates to elliptic curve cryptography (ECC) variants has been slower, since RSA is still believed to be a secure way of cryptographically signing data. Nevertheless, ECC certificates using the elliptic curve digital signature algorithm (ECDSA) are increasing. Just over 24% of top sites make use of 256-bit ECDSA certificates, while around 1% use 384-bit ECDSA certificates.

Decreasing Certificate Lifespans

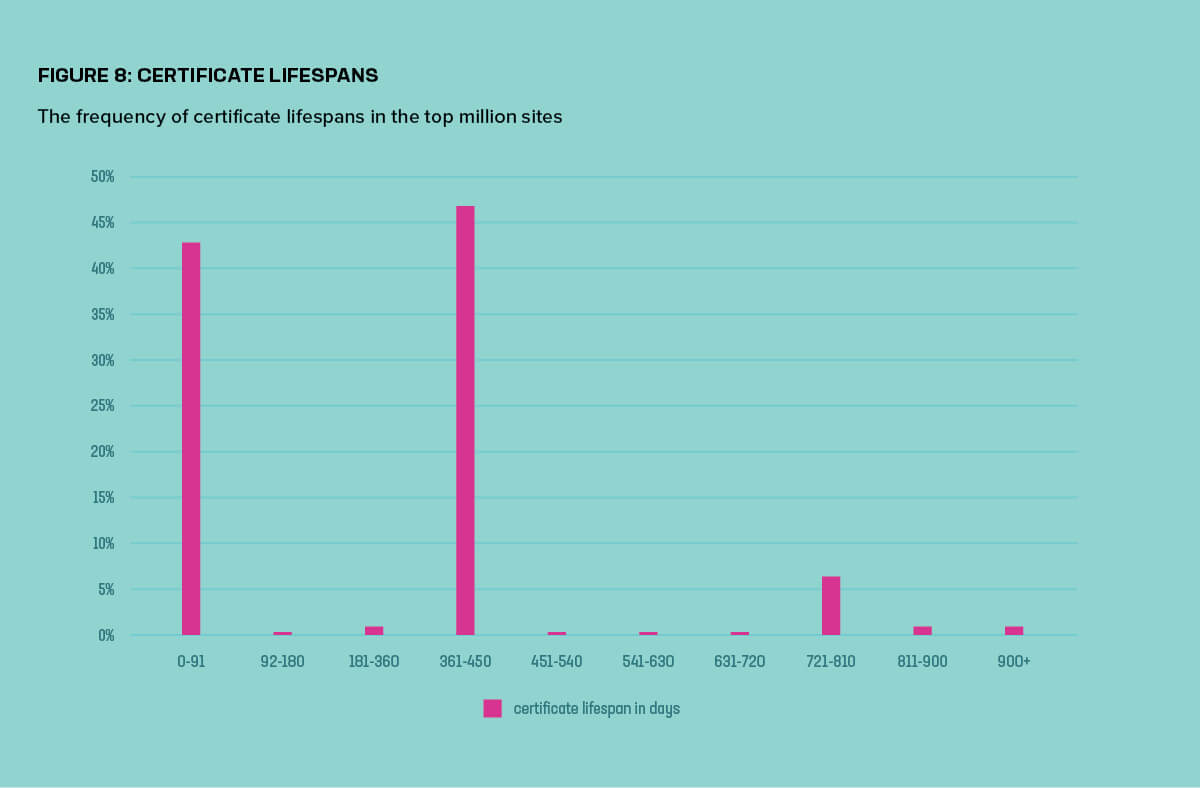

Regardless of the type of certificate in use, certificate revocation methods are almost entirely broken. That’s why desire is growing across the certificate authorities (CAs) and browser industry to move toward extremely short-term certificates. Revoking a stolen certificate becomes much less of an issue if it will expire in just a few weeks.

After repeated failed ballots in the CA/Browser Forum to cap the maximum certificate lifespan at one year, the major browsers instead chose to enforce a 398-day limit. The maximum lifespan of new certificates issued after September 2020 has dropped significantly from three years to only 398 days.7 The ACME protocol, used to automate requests and issuance of free certificates, defines a maximum certificate age of 90 days. The popularity of these short-term certificates is revealed in the data: The single most common certificate— accounting for 38% of the total—has a lifespan of 90 days. Figure 8 combines 90-day certificates with those accidentally issued for 91 days, bringing the total for 90-91 day certs to 42%.

Short lifespan certificates also help partially solve the issue of changes in domain name ownership. Should the owner of a domain change, administrators must ensure the old owner can’t continue using their certificate to perform active attacks and decrypt traffic intended for the new owner. In 2018, security researchers showed pre-existing certificates for 1.5 million domains (0.45% of the Internet), a problem they dubbed BygoneSSL.8 Some 25% of those pre-existing certificates had not expired.9

This Is the End… (of Some Protocols)

Over the years, the industry has developed new supporting protocols designed to help close the gaps a basic TLS configuration can leave open. Some protocols are becoming well established standards, such as DNS CAA records and the HTTP Strict Transport Security (HSTS) header. Not all new security protocols survive, however, and while their deaths are rarely to be celebrated, in many instances something much better takes their place.

HTTP Strict Transport Security

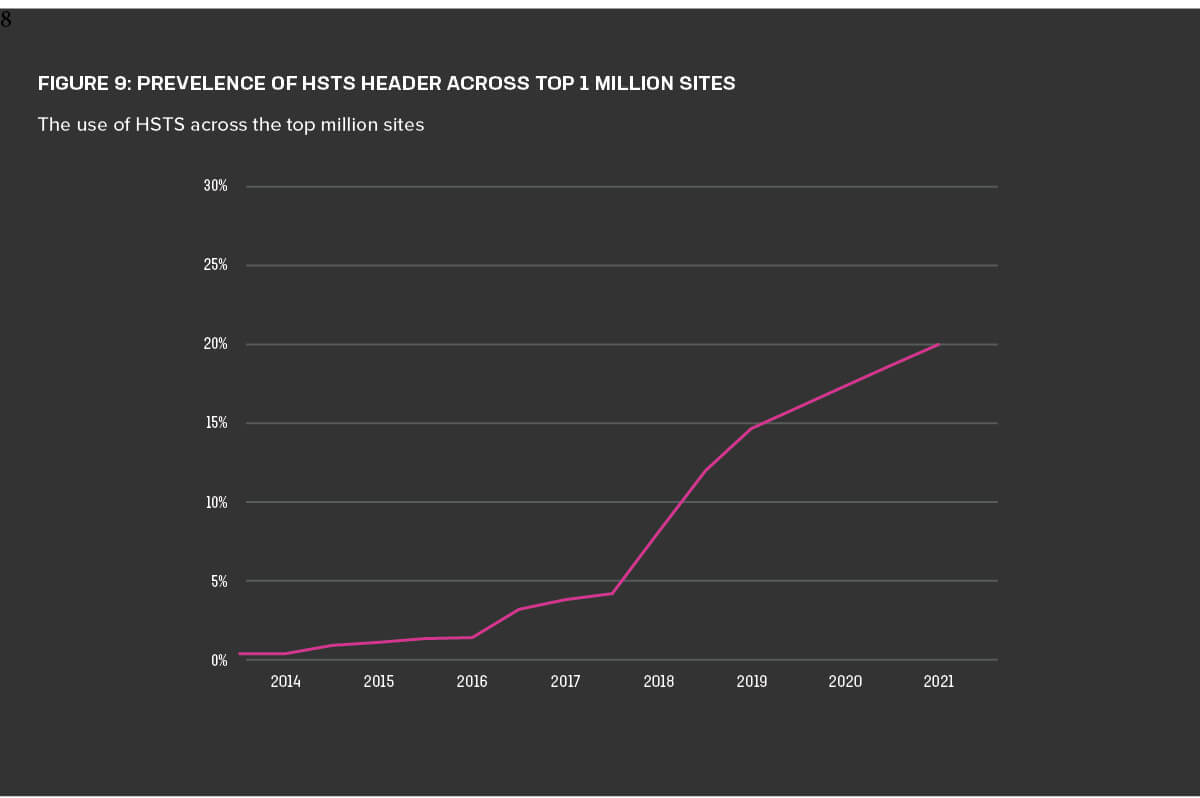

Our scans showed slowed growth of the HSTS response header. At the time of our 2019 report, roughly 15% of sites used it, and in mid-2021, about 20% of scanned sites returned an HSTS header (Figure 9). A number of factors could account for the reduced growth rate. For instance, the majority of website owners who understand HSTS may have already configured it. Perhaps the others simply haven’t heard of it or don’t believe it’s necessary.

Major browsers are beginning to default to HTTPS, however, and web browsers could conceivably disable HTTP altogether within a few years. Such a move to an HTTPS-only web would certainly negate the need for the HSTS header.

HTTP Public Key Pinning

Our scans also showed that use of HTTP Public Key Pinning (HPKP), the deprecated mechanism in which the server delivers hashes of public keys that must match keys in the certificate chain for future connections, has largely if not completely died out. Only 0.06% of servers in our 2021 scans had HPKP configured, although this is up slightly from our 0.05% finding 18 months ago.

Extended Validation Certificates

Sites can pay to undergo enhanced vetting to ensure the domain, the site, and the organization purporting to run it on that domain all match. The outcome of that additional vetting is an extended validation (EV) certificate. EV certificates don’t provide additional technical security; they are intended to help signal to users that a certificate is trustworthy. However, their efficacy is marginal. Most users don’t know what they are or how to check for them and don’t miss them when they aren’t present. Furthermore, research for our 2020 Phishing and Fraud Report found that many phishing sites are hosted on well-known blogging platforms that used EV certs.10 Cryptonice scans of the top million sites revealed that only 1.8% of web servers use EV certs, down from the 2.2% of servers with EV certs noted in our last report.

Overall, this year’s research documented continued, if sometimes slow, progress toward more sophisticated (but higher-performance) security tactics. It also captured evidence that organizations—at least those responsible for the top million sites—are gradually weeding out older, less secure protocols, practices, and certificate management. Older, less secure protocols and cryptography still exist, but mostly in a very small percentage of sites.

Of course, that doesn’t make them all right, and our 2021 research also revealed problems that need to be addressed.

The Bad News

It wouldn’t be a security report without some bad news, right? The most glaring sign that all is not right within the world of web encryption comes not from our scan data but from OWASP. In the latest OWASP Top 10 ranking of security issues, cryptographic failures (A02:2021) moved up from third position to second position, behind broken access control. This increasingly frequent failure, previously known as sensitive data exposure, was also renamed to emphasize the technical root cause rather than symptoms or outcomes.11 More than anything else, its growth illustrates why something that can appear as dry and abstruse as cryptography still requires everyone’s attention: we simply aren’t doing it well enough to ignore it. With that said, Cryptonice also revealed interesting (and troubling) findings that help supply details for understanding why OWASP made the change they did.

To put these findings in context a TLS configuration using less-than-great encryption is not an automatic disaster. It’s virtually unheard of for organized cybercriminals to use TLS weaknesses to attack an organization. (Nation states are a different matter.) So it’s unlikely that an organization’s ongoing support for TLS 1.0, HTTPS misconfigurations, or failing to follow TLS best practices will be responsible for a breach. But such TLS weaknesses may indicate to attackers that the rest of the web server is very likely out of date and therefore vulnerable.

CAs Behaving Badly

Let’s start with the problems that primarily result from malicious or negligent behaviour by certificate authorities. The web depends on a chain of trust, and that chain is anchored with the certificate authorities. There are hundreds of CAs that web browsers inherently trust, despite many of them being completely unknown to everyday users of the web. So it’s imperative that the CAs are completely trustworthy and held accountable for their actions.

If a web server is still using a certificate signed by a CA whose certificate has been removed from a browser’s root store, then TLS handshakes will fail. The most recently distrusted CA, Camerfirma, accounts for four certificates found in the top million sites. Another 114 are still signed by Symantec, WoSign and StartCom, other recently distrusted CAs. All are untrusted and will result in a failed connection.

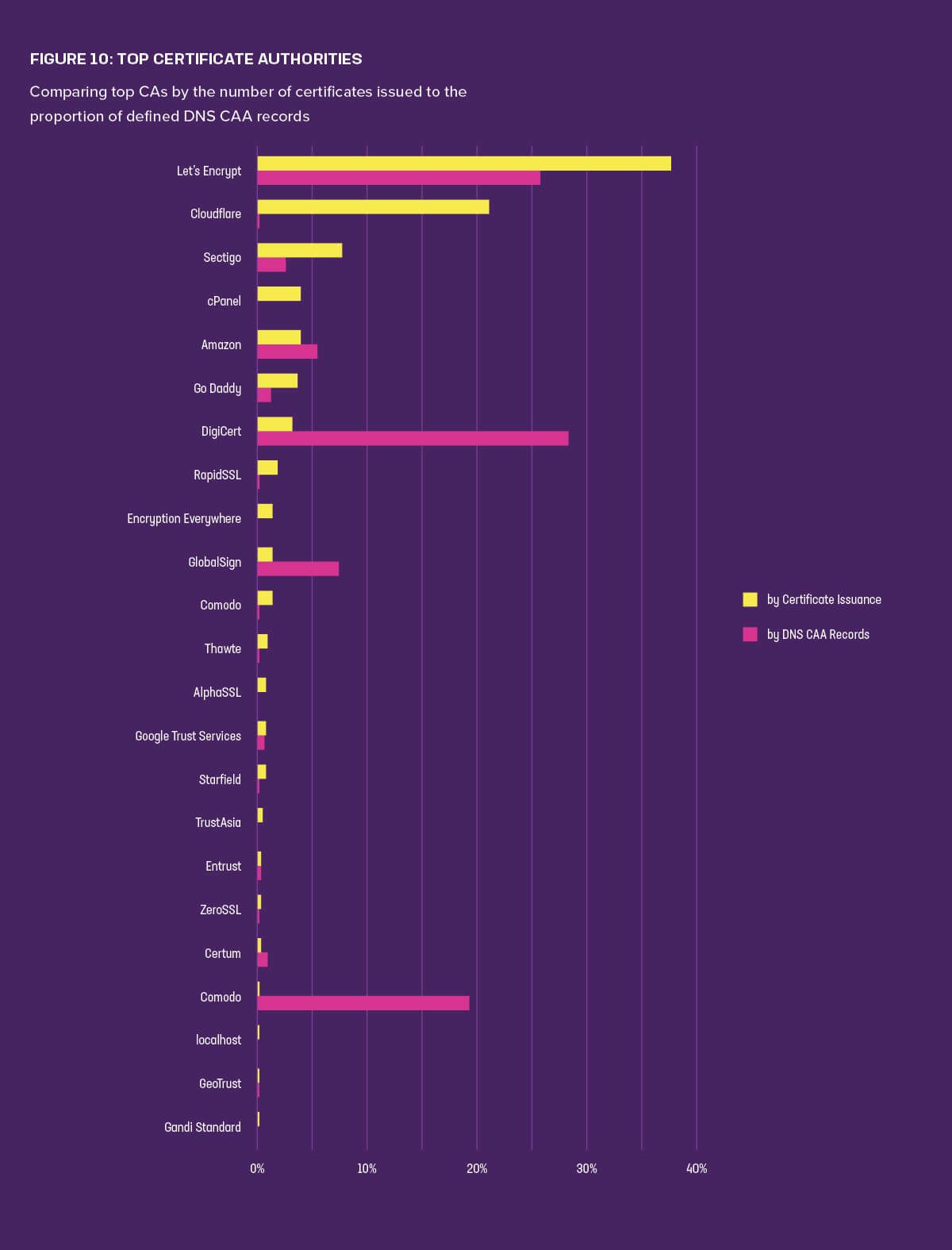

One of the best ways to protect against misbehaving CAs is to create DNS certificate authority authorization (CAA) records. In such records, site owners maintain information about which CAs are permitted to issue certificates for that domain.

Figure 10 shows the most popular certificate authorities as measured both by the number of certificates they issue and their prevalence within the CAA records. While Let’s Encrypt appeared marginally less often in the CAA records than DigiCert, Let’s Encrypt was still by far the busiest CA in terms of issuing certificates; nearly 40% of the web’s certificates currently come from them. Only Cloudflare, at 21%, even comes close, with Sectigo third at 8%.

DNS CAA records grew in popularity between 2019 and 2021. Of the top million sites, 3.5% are now using CAA, up from 1.8% a few years ago. Despite this positive and steady increase, this finding demonstrates how few sites still actually use them. The average number of CAs defined in CAA records was 5.8, with some sites defining up to 20.

When ALPACAs Attack

Although there have been a number of worrying Man-in-the-Middle (MITM) vulnerabilities discovered in TLS implementations recently (see GnuTLS CVE-2020-1377712 and WolfSSL CVE-2020-2461313) the protocol itself is rarely found to have serious problems.

This is what makes the ALPACA attack so interesting14. We are, of course, referring to the Application Layer Protocol Confusion-Analyzing and Mitigating Cracks (ALPACA attack), not the furry llama-like animal, which also would be interesting. This attack takes advantage of wildcard certificates and exposes a vulnerability even when TLS is working correctly. TLS is an application-independent protocol, which is to say that TLS can be used to secure HTTP, SMTP and many other applications. There is nothing within a TLS handshake which defines and restricts the secure session to a specific application, and the ALPACA attack takes advantage of this.

While the vulnerability is not trivial to exploit, the researchers were keen to point out that they were only able to test some specific scenarios. Others may exist. The US National Security Agency (NSA) deems this issue severe enough to have recently issued guidance around it.15

In our scans of the top 1M sites we found over 40% of sites make use of certificates which contain wildcards, e.g., *.example.com. More specifically, we found over 6.5% of web servers use certificates which are also used with mail servers as they contained mail. or smtp. In the SubjectAltName field.

The NSA guidance document suggests that administrators carefully consider the use of wildcard certificates and make use of web application firewalls, even for non-HTTP servers.16

Fat Fingers and Dusty Configurations

Misconfigurations and outdated configurations represent a significant source of TLS issues. Since HTTPS is contingent on several components, this section of this report starts with outdated encryption protocols and ciphers, moves on to weak or expired certificates, and finally touches on invalid certificate chains and the transmission of trust.

Legacy Protocols and Weak Ciphers

We’ve seen plenty of good progress made with the relatively rapid adoption of TLS 1.3 and move to elliptic curve cryptography. But as much as new protocols can improve a website’s security, its legacy protocols, frequently left enabled, are a much bigger factor in overall cryptographic strength. Neglecting to turn off SSL 3 leaves web servers vulnerable to the POODLE attack; some TLS 1.0 and TLS 1.1 configurations may also be vulnerable to POODLE v2.17 Offering RSA key exchanges or allowing export-grade cipher suites can enable attacks on the supposedly secure TLS connection.

While cryptographers believe that using RSA to sign digital certificates is still secure, exchanging keys over the Internet using RSA has proven vulnerable to attack time18 and time19 (and time20) again. For years cryptographers and the wider security community have called for an end to RSA as a key exchange method recommending instead, the use of Diffie-Hellman (DH) key agreement. While DH, like RSA, may be used with large prime numbers, in current practice DH is more often used with elliptic curves, which results in much smaller keys. These smaller keys have the same security as larger, prime-based crypto and they are computationally far more efficient.

While more than 99% of servers in the top million prefer the use of Diffie-Hellman or elliptic curve Diffie-Hellman key agreements, 52% still allow the use of RSA key exchanges, should that be all the client supports.

During TLS handshakes, 2,991 web servers (0.4% of scan results) chose a cipher suite F5 Labs considers weak—which is defined as using export-grade key lengths, anonymous authentication, or RC4, DES/3DES, RC2/RC4, MD5, or null encryption. For example, the best cipher suite one website offered to clients was SSL 3 using RC4-SHA, a combination of protocol and cipher suite considered state of the art in the mid-1990s. A larger number of sites (12.4%) made weak cipher suites available but didn’t choose them for connections.

On the subject of legacy protocols, we found SSL 3 stubbornly clinging to life in the wild. In 2019, 3% of sites in the top million still allowed this legacy protocol. Our 2021 scan revealed that 2% of sites still have SSL 3 enabled. That represents some progress, but not enough.

Failure to remove older protocols and cipher suites from a web server after the implementation of new ones is sufficient to allow a threat actor to perform downgrade attacks.

POODLE, FREAK, Logjam, and SLOTH are all vulnerabilities in which an active attacker manipulates the TLS handshake between client and server and tricks both into believing either one only supports an older (weaker) connection. While TLS 1.3 does offer downgrade protection against active attackers, those protections should not be relied upon, especially since the implementation of specifications often creates vulnerabilities. Leaving old, vulnerable protocols enabled should only be done in extreme cases when business requirements outweigh the risks.

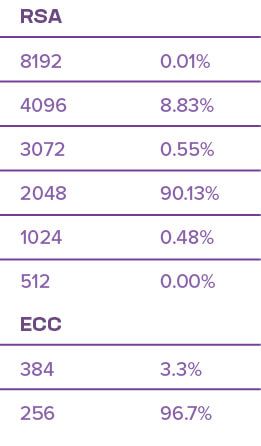

Table 2: Keys used with RSA and elliptic curve certificates

Weak Certificates

Moving on to certificates, we also found more room for improvement. Of the 1 million sites scanned, 0.3% used RSA certificates with 1024-bit keys, which haven’t been available from trustworthy CAs since 2013. (Fortunately, only one site in the top 10,000 had a certificate with a 1024-bit key.) Among the RSA certificates alone (that is, leaving aside the elliptic curve based ECDSA certificates), 1024-bit keys made up just under half a percent (Table 2).

The size of the public certificate’s public key should be chosen carefully. Websites often claim “military grade AES128” encryption, which means very little if that symmetric 128-bit key is initially protected by a certificate whose public key is only 512 bits—akin to fastening a thick metal chain with a plastic cable tie. It’s important that the public key (stored in the server’s private certificate), the symmetric key size (as defined in the chosen cipher suite), and the message authentication scheme (also defined in the cipher suite) all provide similar levels of security or a weakness in one may undo the entire chain.

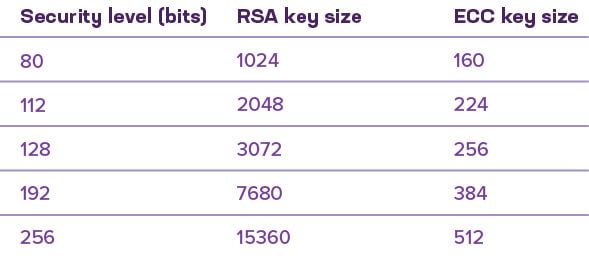

The website keylength.com21 provides a useful look at public key, symmetric key, and hashing algorithms by comparing their security in bits. It provides key length recommendations from various groups around the world, including NIST, NSA, BSI, and ECrypt. Table 3 provides a simplified view of the relative strength of RSA and elliptic curve public-key cryptography.

Table 3: A security comparison of RSA and ECC key sizes

A 256-bit ECC key provides a slightly higher level of security than 2048-bit RSA keys. As shown in Table 1, the majority of connections on the web use AES-256 with a 384-bit hash, but the majority of those are initially protected by a 2048-bit RSA certificate. If a comparable level of security is required across the public key, symmetric, and hashing algorithms, then a 3072-bit or larger RSA key (or a 256- to 384-bit ECC key) should be used for the certificates.

Table 3 also shows how quickly RSA key lengths can get out of hand. Doubling the security from 128 bits to 256 bits requires an RSA key five times larger. This has a huge impact on web server performance, since even a modest doubling of RSA key lengths more than halves performance.

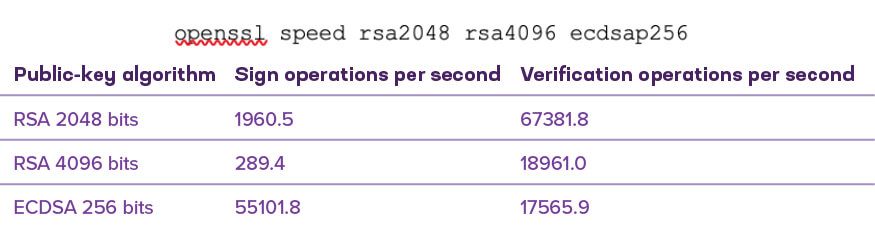

Table 4 lists the results of running the OpenSSL command shown to compare public key operations. Specifically, doubling the RSA key length from 2048 to 4096 bits results in a 72% performance drop for RSA verifications and an 85% drop for RSA signatures. The move to elliptic curve crypto is useful today but will become essential for increasing security in the near future.

Table 4: Speed comparison of public key operations as performed on a modest Windows 10 desktop

While 2048-bit certificates are still strong by today’s standards, organizations need to keep in mind the expected cover time provided by key lengths. 2048-bit RSA keys are a long way from being factored with any degree of efficiency, but they probably will be easily broken within 10 to 20 years. Businesses should consider whether encrypted data captured today by a passive snooper could cause problems if decrypted in 10 years’ time. For shopping transactions on e-commerce stores, that’s unlikely. But if the TLS connection is protecting intellectual property or top-secret government communications, cover time should absolutely be a consideration. As previously mentioned, keylength.com is useful for evaluating whether your certificate key length will be adequate in years to come as well as today.

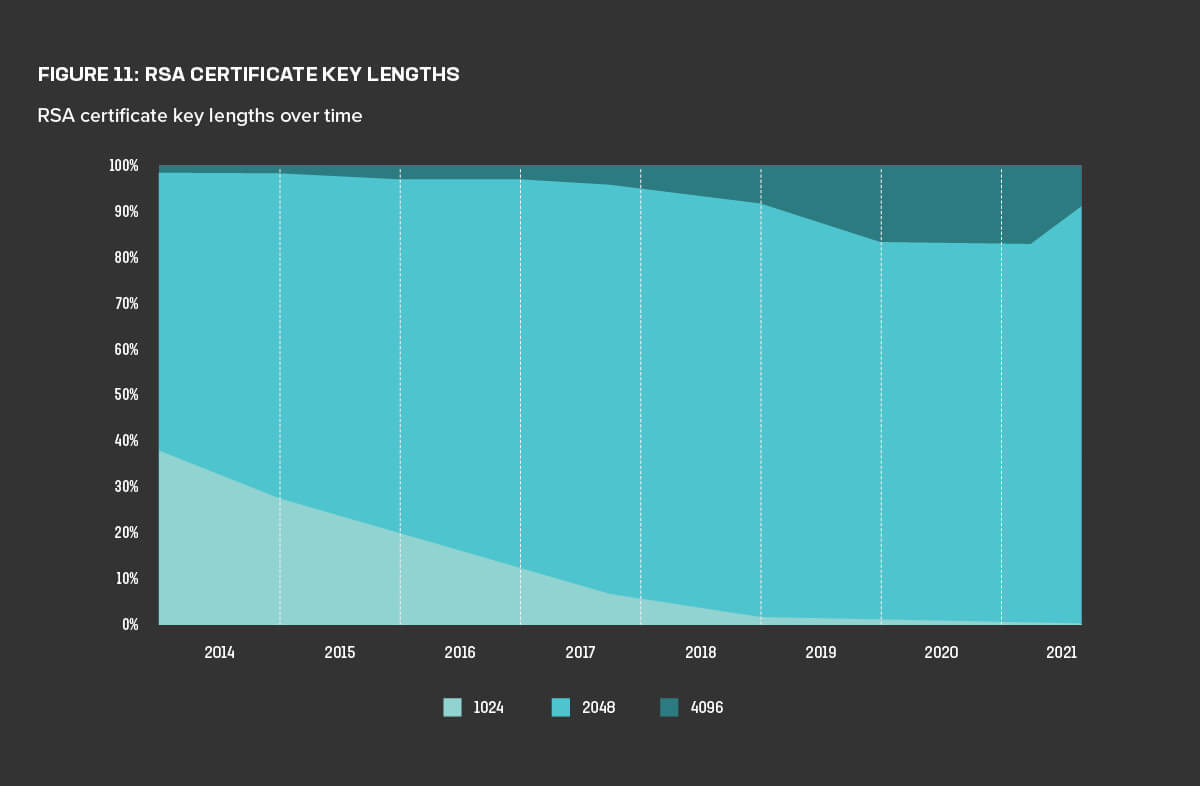

Figure 11 shows that while the number of 1024-bit certificates is declining, the number of 4096-bit certificates also declined in mid-2021 in favor of 2048-bit certificates. This is likely due to many websites moving from RSA to ECDSA certificates, as noted earlier in this report. Of the sites using 1024-bit certificates, nearly 70% are running Apache, with 24% using NGINX. Apache version 2.0, which accounts for 32% of the Apache servers, is the most prominent offender. Since Apache 2.0 was released in 2002 and last patched in 2013, this data strongly suggests that many web servers are configured once and only touched again to renew the certificate.

Thankfully, we found only seven sites in the top million using 512-bit certificates. However, we’re talking about the most popular, heavily trafficked sites on the Internet—so that’s seven too many!

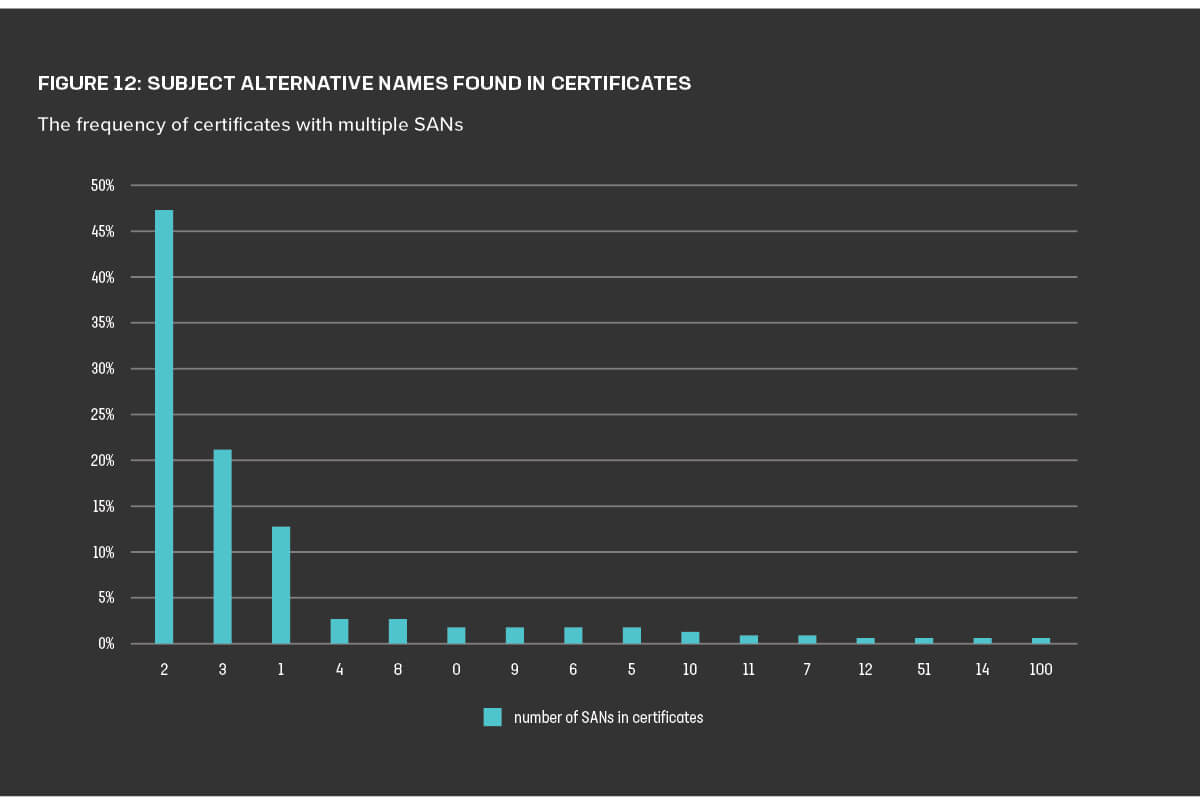

Our scans also revealed the number of subject alternative names (SANs) in the certificates. This information provides a sense of how many discrete entities on the web—whether domains, IP addresses, or common names—fall under the purview of the certificate in question. Nearly half of the certificates included two SANs, and certificates with one, two, or three SANs made up about 80% of the scanned certificates (Figure 12). Fully 90% of the certificates contained between one and nine SANs. However, this distribution had a very long tail indeed: 15 certificates contained 991 SANs, and five certificates included 1,000 SANs.

Certificates with a large number of SANs are increasingly common with CDNs, who provide services to hundreds of websites via a single IP address. But while they might offer some operational or business advantages, certificates with a plenitude of alternative names can slow the TLS handshake and are more disruptive when they are revoked than certificates with a smaller number of domains.

Expired Certificates

Digital certificates expire for the same reason passports, driving licenses, and other physical certificates expire: Claims must be verified regularly to be trustworthy. In the digital world, certificates must expire first and foremost to help with the problems of certificate revocation. Distrusting a stolen or incorrectly issued certificate is unreliable, so currently the best way to protect against those threats is to have the certificate expire as soon as possible.

The X.509 v3 digital certificate specification, used by TLS, defines the use of two timestamps: the notBefore and notAfter dates. Together they specify the earliest a certificate may be used and at what point in the future it should no longer be trusted. An expired certificate prevents users from establishing a connection to a website, mobile app, or API secured with TLS. With fully automated certificate services such as Let’s Encrypt, it’s surprising to still see services frequently knocked offline due to certificates apparently forgotten about and not renewed in time. Over the past 12 months alone, Google Voice, the Microsoft Exchange portal, Spotify, Github, and SpamCop all suffered outages due to expired certificates. Google Play users also found their American Express credit cards removed from their accounts due to certificate expiration.

Overall, we found that 2.5% (or about 1 in 40) of the certificates in the full set of sites were expired at the time of scan.

Fortunately, less than 1% of sites in the top 1,000 (0.7%) were found to have expired certificates, compared with 3.3% of sites in the top million’s bottom 1,000. These rates indicate that better certificate management does somewhat correlate with site popularity. But no one’s immune.

Invalid Certificate Chains

A lapsed notAfter date is not the only reason a certificate may no longer be trusted. Web browsers expect to receive not only the leaf (server) certificate for a website, but all certificates responsible for digitally signing that certificate, up to (but not including) the root certificate. The order of the chain is important too, although many web browsers are forgiving and still accept certificate chains in incorrect order. However, certificate chains that entirely miss intermediary certificates will generally not be trusted and result in a failed connection to that HTTPS site.

In our 2021 scan, 2% of sites in the top million sent certificates back in an invalid order—down from 2.5% two years ago.

Other Errors

Our research also found a handful of miscellaneous issues that don’t fit the categories above but certainly qualify as bad news:

- 2.8% of sites were vulnerable to denial-of-service attacks via client certificate renegotiation.

- 1% of scanned sites support compression, which can make them vulnerable to the CRIME exploit.29

- 0.2% of scanned sites do not support secure renegotiation.

Overall, there’s a long list of cryptographic oversights or conscious-but-unwise practices that are contributing to failures that risk data breaches—or could in the future, even if they’re secure now. Certificate issues of one sort or another make up the bulk of the list. That’s an argument for directing sufficient IT resources toward CA selection and ongoing certificate management.

Abuse and Misuse

In addition to the bad news covered above, our study also explored malicious activity or encryption circumstances that might engender it. For instance, our research showed how encryption helps threat actors make social engineering schemes more believable or harvest credentials from cryptocurrency owners with encryption downgrading attacks. Finally, governments also circumvent encryption for surveillance and espionage.

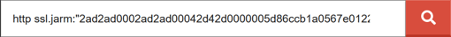

Threat Hunting with TLS Fingerprinting

Website operators have been using device fingerprinting for many years to help distinguish malicious bots from genuine customers. Since many threat actors intentionally modify their client device’s browser headers and other properties, it can be useful to measure a client’s hidden signals in an attempt to find its true identity. However, fingerprinting servers, specifically Transport Layer Security (TLS) fingerprinting, is rarely performed. By incorporating the Salesforce JARM TLS fingerprinting technique directly into Cryptonice, we were able to capture server TLS fingerprints for the top one million sites.22 The results not only revealed a perhaps unsurprising lack of variance, but they also indicated that malicious command-and-control (C&C) servers may be lurking among the world’s most popular sites. Before we dive into attacker behavior, however, let’s cover what a TLS fingerprint is and what it can tell us.

For each Client Hello message in the TLS handshake, a web server may respond with a unique Server Hello that will differ based on the operating system, TLS library, the preferred order of cipher suites, and other configuration options. The TLS fingerprinting technique sends specially crafted client parameters to a web server and carefully measures its response to create a unique fingerprint. This can then be compared with other web servers to determine if they are configured the same way. This could be useful for auditing purposes, for example, ensuring that all servers for any given organization are configured the same way. Fingerprinting is also a useful way to identify servers that may deliberately hide HTTP response headers. One caveat: despite its potential advantages, fingerprinting is far from foolproof. Recently, we’ve seen examples of attackers selling client fingerprints on dark web markets (/content/f5-labs-v2/en/labs/articles/threat-intelligence/genesis-marketplace--a-digital-fingerprint-darknet-store.html) with the specific intention of avoiding fingerprint-based security controls. So, while fingerprinting is not a perfect method for identifying a server, it does highlight areas worthy of future research.

One in a Million …Or Not, As It Turns Out

Across the one million sites on the Internet, we found only 8,851 different TLS fingerprints, and of those only 4,035 were completely unique. Why so few? Why does every server not have a completely unique fingerprint? One possible explanation is that many servers are configured exactly the same way, that is, using defaults. A standard install of Ubuntu 20.04 with NGINX 1.21.3 using default TLS configurations will, in all likelihood, result in the same fingerprint. However, the real reason for such low variations in fingerprints is the sheer number of content delivery network (CDN), DDoS-mitigation, and cloud proxies being used.

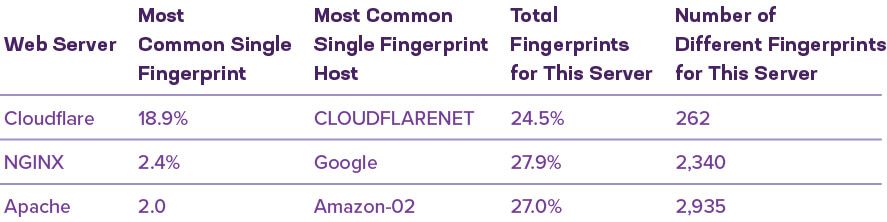

One single TLS fingerprint, belonging to Cloudflare, accounted for almost 20 percent of the top one million sites. In aggregate, Cloudflare is responsible for 262 unique fingerprints, which constitute 25 percent of the fingerprints found.

The second most common fingerprint, at 2.4 percent, is found with NGINX. This is significantly less than Cloudflare in the top spot. However, if we combine all fingerprints associated with NGINX, then it rises to the top, claiming almost 28 percent of all fingerprints in the top one million.

The most common unique Apache fingerprint was just behind NGINX, at 2 percent, and combined Apache fingerprints account for exactly 27 percent of the total, placing it in third place for unique fingerprints and second place for aggregate fingerprints. See Table 5 for a summary of fingerprints by the top three website server platforms.

In all, 80 percent of the top one million sites produced just 203 unique fingerprints, provided by just 17 different web servers.

Table 5: The most commonly found TLS fingerprints across the top 1 million sites

The results of F5 Lab’s fingerprinting of the top one million sites leads to the question: is lack of variance in TLS configurations a concern from a security perspective? If TLS fingerprinting is a reliable way to identify vulnerable servers, then clearly yes. The JARM fingerprinting method has been included in services such as Shodan.23 This means that not only can website owners perform lookups for their own matching fingerprints but so too can threat actors, as shown in Figure 13.

Figure 13: Example Shodan query to search for TLS fingerprints

On the whole, however, cloud providers often benefit from having teams of dedicated engineers who understand how to correctly secure HTTPS deployments. They can continuously maintain TLS configurations and ensure that all customers benefit from the ever-changing best practices.

Malicious Servers

Here is where it gets interesting from a cybercrime standpoint: since phishing sites and C&C servers will intentionally attempt to disguise their configuration, fingerprinting techniques can be a useful way to spot the true identity of web servers. Table 6 shows JARM fingerprints for known malware servers or red team tools and the number of times that fingerprint was found in our scans of the top one million sites.

As we would hope, the number of (potentially) malicious servers is very low, though it is far from zero. Trickbot and Dridex (2), in particular, show relatively high counts for their associated fingerprints. This does not necessarily mean that all servers are infected with those malware families, simply that the web servers we scanned have identical TLS fingerprints to those malware strains. These could be false positives, or they could indicate that a small percentage of the web’s most popular sites are being controlled, either knowingly or unknowingly, by attackers.

This is particularly significant in light of the growth of ransomware in 2020 and 2021. The 2021 Application Protection Report noted that Trickbot and Cobalt Strike were two of the top three most frequently observed malware variants for delivering ransomware, along with Emotet. The implication is that some of the web’s most popular sites are also delivery vehicles for some of the most devastating attack trends in the last five years.

Phishing in the Murky Depths

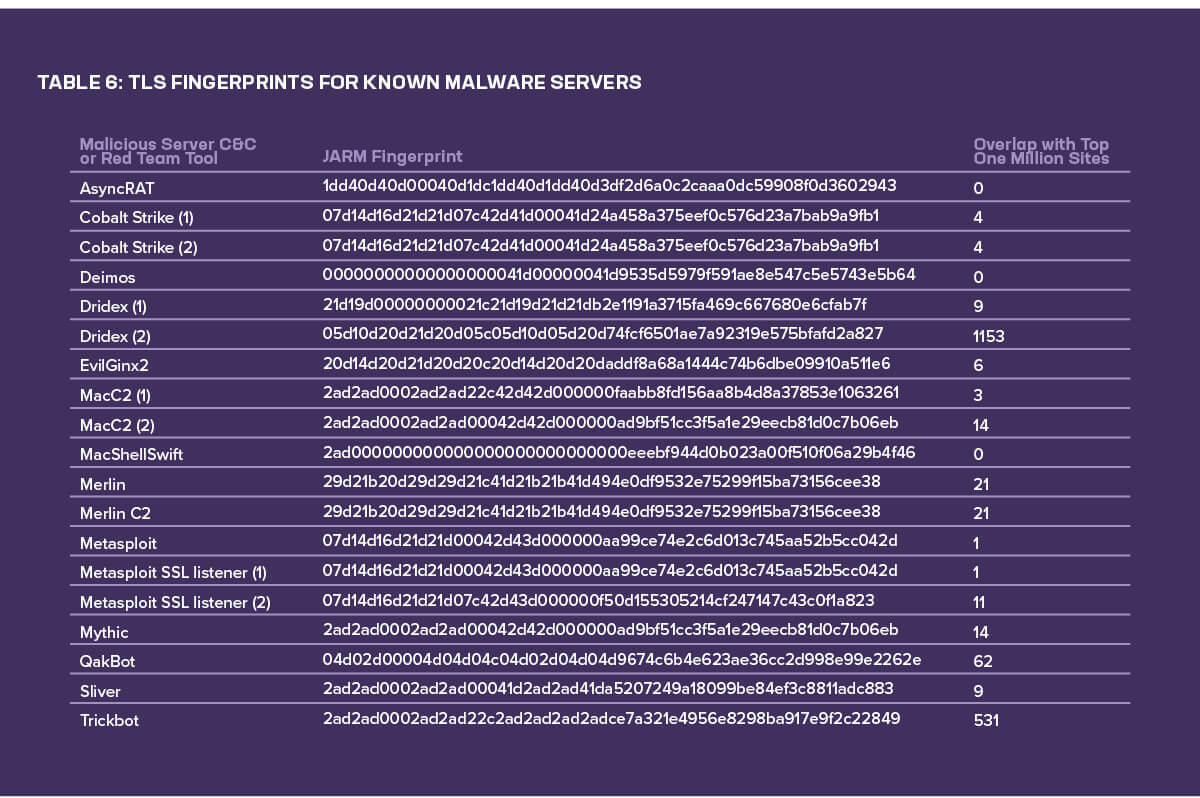

As reported in our 2020 Phishing and Fraud Report, 70% of phishing sites used HTTPS with valid certificates to appear more legitimate to victims. Data from OpenPhish indicates that this figure is now almost 83%, with only 17% of phishing sites using insecure HTTP-only connections. We also found that the majority of malicious sites—just under 80%—come from just 3.8% of the hosting providers.

In our 2019 TLS Telemetry Report, we indicated that the web hosting control panel cPanel— through its AutoSSL capability arising from its integration with Sectigo—was the preferred method of obtaining and installing free digital certificates on phishers’ websites. Today, Let’s Encrypt has taken the lead, providing 28% of certificates for phishing sites. Phishers are either finding alternative ways to deploy their sites or perhaps using the optional Let’s Encrypt plug-in for cPanel.

For service providers, phishers tended to prefer Fastly, though several other providers— namely Unified Layer, Cloudflare, and Namecheap—hosted similar proportions of phishing networks (Figure 14).

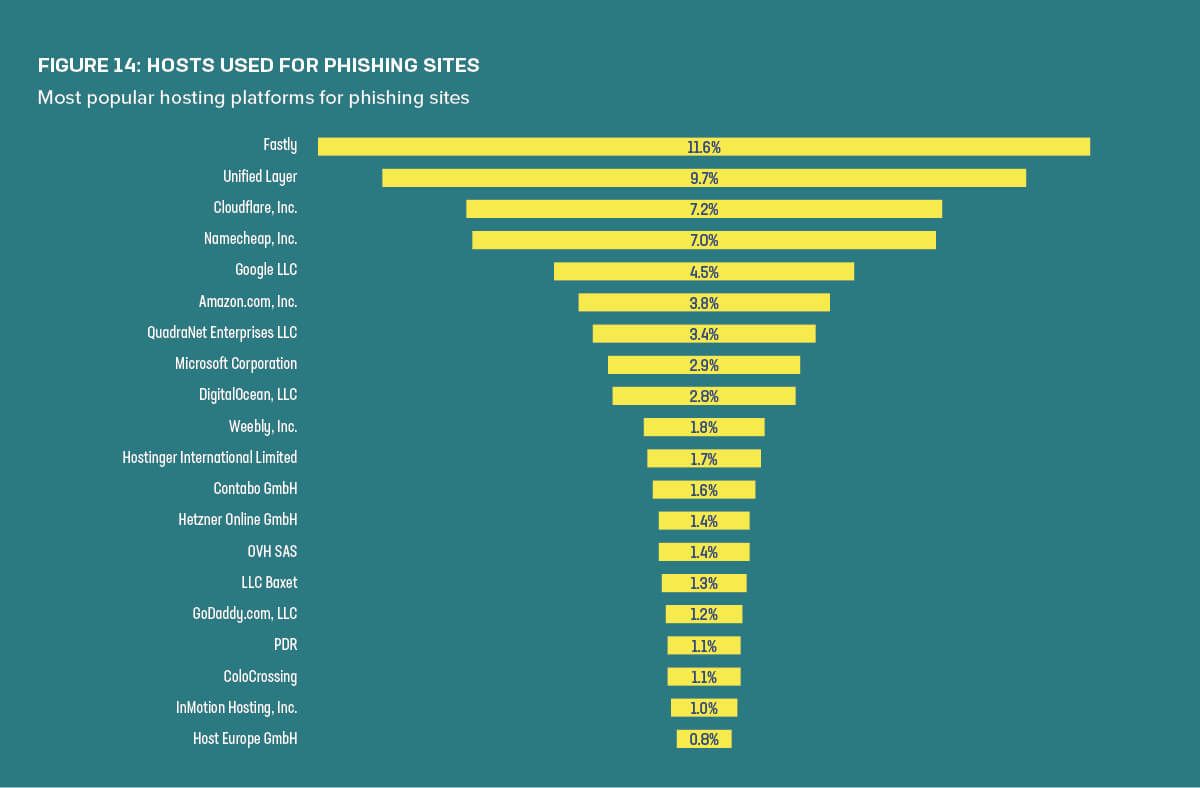

When it comes to phishing bait (after the use of generic or highly targeted spear phishing attacks), the brands most commonly targeted in phishing attacks were Facebook and Microsoft Outlook/Office 365. This reflects the value of stolen credentials from these sites, in part because so many other accounts rely on these as identity providers (IdPs) or for password resets.

Figure 15 also contains another finding hiding in plain sight: If we combine the various webmail targets, such as Outlook, Outlook365, and other webmail providers, webmail accounts for 10.4% of impersonated web functions—just under Facebook’s percentage. In other words, the diversity of webmail platforms shouldn’t obfuscate the fact that phishing victims are almost equally likely to experience a phish against their webmail accounts as against their Facebook accounts.

When is Encryption Not Encryption?

The web is well on its way to becoming 100% encrypted, but we’re currently in an awkward transition phase. Although the majority of websites prefer encrypted HTTPS connections, their operators hesitate to disable HTTP altogether, and many sites simultaneously allow clients to connect over both secure and insecure protocols. Some site operators fear inadvertently blocking access to customers using old browsers, while others may not understand the risks of leaving the unencrypted HTTP protocol enabled.

Once a secure TLS session has been established, the client and server can be sure communications are private and trustworthy. If, however, an active attacker (let’s call her Mallory) can intercept the very first connection between the two, she can trick the client into thinking the website only supports HTTP connections.

Figure 16: An attacker fooling a client with SSLstrip deception

This kind of attack, dubbed SSLstrip by its creator, Moxie Marlinspike, is extremely potent and can be used to capture sign-in credentials, personal information, and payment card details from any website. However, this attack can’t be performed remotely. An SSLstrip attack requires the attacker to be on the same network as the victim, since they need to capture and modify all network traffic. An ideal place for Mallory to hang out, therefore, would be a coffee shop that offers free Wi-Fi and where multiple victims all use the same open network.

A few tactics can prevent this kind of attack:24

- Completely disable HTTP connections and force all clients to use HTTPS.

- Use HSTS to instruct the web browser to only ever attempt connections to the website over HTTPS.

- Have users configure their browsers to only use HTTPS connections for all websites. (Users can search browser settings for “https” to do so.)

More broadly, this kind of attack illustrates the degree to which certain assumptions about the Internet and its infrastructure no longer hold on the dark web. Much of the care necessarily exercised by site administrators and product owners on the web is offloaded to users on the dark web, and cryptography is no different.

Future Encryption vs. Government Interception

Governments all over the world continue to propose and roll out new laws that affect the strength and types of encryption permitted within their borders. Law enforcement agencies say that limiting encryption or providing lawful interception is essential for bringing criminals to justice. Privacy advocates (and many opposition political parties) argue that governments only wish to perform mass surveillance of their citizens, and that weakening encryption threatens everyone’s security. Despite these concerns, nations around the world are prohibiting or limiting the use of encryption.

Their motives may be mixed. In the wake of the July 2021 revelations about the scope and scale of NSO Group’s use of their Pegasus spyware, for instance, information about its application by Kazakhstan went under the radar for most of the world. However, since the F5 Labs reported in 2019 on the Kazakhstani government’s attempts to decrypt its citizens’ traffic, we also noticed the nation’s government has used Pegasus to track not only domestic dissidents but also the country’s current and former prime ministers. While this new surveillance is not TLS-related, it stands as a proof that the Kazakhstani government isn’t finished spying on its own people,25 and it’s unlikely to be the only one.

More recently, newer encryption standards such as TLS 1.3, encrypted DNS such as DNS over HTTPS (DoH) or DNS over TLS (DoT), and eSNI (encrypted server name indicator) have come into the crosshairs of restrictive governments. In August 2020, security researchers found that the Great Firewall of China was attempting to block TLS connections that used eSNI values in the TLS handshake.26 The Russian government has also proposed an amendment to an existing law to prohibit the use of any technology that enables “hid[ing] the name (identifier) of a web page or site.”27 If implemented, this law will outlaw the use of eSNI and encrypted DNS.

Major browsers such as Chrome and Firefox allow manual configuration of DoH (although in some regions, such as the United States, DoH is now enabled by default). But few users are aware of the value or even the need for such manual configurations, leaving them at the mercy not only of intrusive governments but potential attackers and negligent site operators whose cryptography practices lag behind current standards.

Is Quantum Cryptography Here Yet?

No. And that’s not even a real question, so stop asking.28

Conclusion

The desire to intercept, weaken, and circumvent encryption has never been greater. Nation-states and cybercriminals alike are attempting to work around the problems caused by strong encryption. While this rarely results in direct attacks against cryptographic algorithms or protocols, it often leads attackers to instead think of creative ways to intercept or capture information before or after it has been encrypted. With these risks ever-present, it has never been more important to focus on strong and up-to-date HTTPS configurations, particularly when digital certificates are shared across different services.

As with many areas of information security, weaknesses come not from the latest and greatest features that we struggle to adopt, but the old ones we are reluctant to disable.

Here at F5 we have a passion for all things crypto. The F5 Labs research team frequently reports on updates to our Cryptonice HTTPS scanner, in addition to providing everything from educational series on the basics of crypto to deep dives on currently recommended best practices. Subscribe to the F5 Labs newsletter (/content/f5-labs-v2/en/labs/subscribe.html) to ensure you are always kept up to date.