And scale leads to speed. And speed leads to success.

Suppose one engineer can process a change request in two hours. Suppose another engineer can process that change request in 1.5 hours. If they work together, how long will it take for them to process two hundred change requests?

Yes, the answer is obviously beer.

Everyone likely remembers (with no small amount of fear and loathing) the infamous “train” and “painting” word problems from math class, which were designed to teach us about fractional relationships. Really, that was their intent, even though what many of us got out of them was a distaste for painting and train travel. And a lot of memes. Let’s not forget the memes.

The point is that this type of formulaic measurement is becoming a requirement in the data center, particularly in the network. That’s because budgets are limited (I know, right? How crazy is that?) and you can only hire X engineers to do Y work. In an application world where fast and frequent release cycles are the name of the game, the number of “X” you can hire to achieve timely releases is limited.
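For anyone who went straight for the beer, here is the arithmetic behind the opening word problem as a small Python sketch. It assumes each engineer works at a steady rate and that requests can be split freely between them; `team_time` is a hypothetical helper written for this illustration, not part of any tool mentioned in this post.

```python
from fractions import Fraction


def team_time(hours_per_request: list[Fraction], requests: int) -> Fraction:
    """Hours for engineers working in parallel to clear `requests` change requests.

    Assumes each engineer works at a constant rate of 1 / hours_per_request
    requests per hour and that work can be divided freely, as in the classic
    "painting" word problem.
    """
    # Rates add: each engineer contributes 1/h requests per hour.
    combined_rate = sum(1 / h for h in hours_per_request)
    return requests / combined_rate


# The opening problem: one engineer at 2 hours, another at 1.5 hours per request.
hours = team_time([Fraction(2), Fraction(3, 2)], 200)
print(hours, round(float(hours), 1))
```

The combined rate is 1/2 + 2/3 = 7/6 requests per hour, so 200 requests take 1200/7 hours, roughly 171.4 hours. Hiring more engineers only adds terms to that sum; shrinking the per-request time changes every term at once.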

This is why scale is a primary driver of “devops” in the network, a.k.a. “netops”. By applying the operational principles of DevOps to the network, the thinking goes, netops can achieve the operational scale needed for “X” engineers to do “Y” work on time and within budget constraints, even when the relationship between X and Y is exponential (layman’s translation: really lopsided).

The change request problem is a real one. It was presented by a network engineer who faced a growing number of change requests (each defined as: create a load balancer endpoint for an application, add it to DNS, and create a firewall rule to allow access) that ultimately threatened to overwhelm available staff while simultaneously increasing the time to complete each one.

The answer was found in automation, of course, and in applying the principles of DevOps and offering a self-service interface that allowed other engineers to make requests that were then documented in the ticketing system and carried out via various networking and application service APIs.
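The shape of that workflow (one self-service request fanned out into a load balancer step, a DNS step, and a firewall step, each documented on a ticket) can be sketched as below. This is a minimal illustration under stated assumptions: `ChangeRequest`, `Ticket`, and the three step functions are hypothetical names, and the steps only describe what they would do instead of calling any real load balancer, DNS, firewall, or ticketing API.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """A hypothetical self-service change request for one application."""
    app: str
    vip: str        # address the load balancer endpoint will listen on
    hostname: str   # DNS name to publish for the application
    port: int = 443


@dataclass
class Ticket:
    """Stand-in for a ticketing-system record (hypothetical)."""
    request: ChangeRequest
    log: list[str] = field(default_factory=list)


# Hypothetical steps. In a real pipeline each would call the relevant
# service API; here each one just returns a description of the change.
def create_lb_endpoint(cr: ChangeRequest) -> str:
    return f"lb: endpoint {cr.vip}:{cr.port} for {cr.app}"


def add_dns_record(cr: ChangeRequest) -> str:
    return f"dns: {cr.hostname} -> {cr.vip}"


def create_firewall_rule(cr: ChangeRequest) -> str:
    return f"fw: allow tcp/{cr.port} to {cr.vip}"


STEPS = [create_lb_endpoint, add_dns_record, create_firewall_rule]


def process(cr: ChangeRequest) -> Ticket:
    """Run every step in order, documenting each result on the ticket.

    If a step raises, the exception propagates and later steps do not run.
    """
    ticket = Ticket(cr)
    for step in STEPS:
        ticket.log.append(step(cr))
    return ticket


ticket = process(ChangeRequest(app="shop", vip="203.0.113.10", hostname="shop.example.com"))
for line in ticket.log:
    print(line)
```

Keeping the steps in an ordered list is the orchestration part: the requester supplies three fields, and the same sequence runs the same way every time.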

Not only does this adoption of automation (and orchestration, because the entire process, not just its individual steps, is being automated) allow existing engineers to scale operationally, but it has also made the process faster. It no longer takes up to two hours to process a change request; now it takes as little as five minutes.

Yes, you read that right. Five minutes instead of up to two hours.

That’s a significant improvement in speed arising from the ability to scale through automation and orchestration. And while it isn’t an ambitious “data-center-wide” project, it does address a specific pain point that both engineers and application owners experienced frequently. In this case, apparently up to 200 times a week.
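To put those numbers in weekly terms, the sketch below multiplies them out. Note that it pairs the manual worst case (“up to two hours”) with the automated best case (“as little as five minutes”), so the result is an upper bound on the savings, not a measured figure; the 40-hour work week is an added assumption.

```python
from fractions import Fraction

# Figures quoted in the post; both are bounds ("up to" / "as little as").
REQUESTS_PER_WEEK = 200
MANUAL_HOURS = Fraction(2)          # up to two hours per request
AUTOMATED_HOURS = Fraction(5, 60)   # as little as five minutes per request
WORK_WEEK_HOURS = 40                # assumption: a standard 40-hour week


def weekly_hours(per_request: Fraction, requests: int = REQUESTS_PER_WEEK) -> Fraction:
    """Total engineer-hours per week if every request takes `per_request` hours."""
    return requests * per_request


manual = weekly_hours(MANUAL_HOURS)
automated = weekly_hours(AUTOMATED_HOURS)
print(f"manual:    {float(manual):.1f} h/week ({manual / WORK_WEEK_HOURS} full-time engineers)")
print(f"automated: {float(automated):.1f} h/week")
```

At the extremes, that is 400 engineer-hours a week (ten full 40-hour weeks) versus about 16.7 hours.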

This is exactly how netops should approach automation and orchestration within the production network. Finding tasks that are repeatable and that the change review board considers trivial (i.e., they are provably non-disruptive and most engineers can do them in their sleep) opens up significant opportunities to scale, and that scale delivers the speed that business and application owners alike tell us the network needs to achieve.

Digging up the nuggets that can be automated with as little risk of disruption as possible frees engineers to focus on the tasks that are not considered ripe for automation. That focus also translates into faster implementation of other services, because engineers are no longer spending an inordinate amount of their time on simpler, repeatable tasks.
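One way to go digging is to keep an inventory of recurring tasks and filter it on the criteria above: provably non-disruptive, the same steps every time, and frequent enough to matter. The sketch below assumes such an inventory exists; `Task`, its fields, the `min_per_week` cutoff, and every entry except the 200-per-week change request are hypothetical values made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical inventory entry for a recurring network task."""
    name: str
    times_per_week: int
    provably_non_disruptive: bool  # e.g. the change board treats it as routine
    same_steps_every_time: bool    # repeatable, no case-by-case judgment


def automation_candidates(tasks: list[Task], min_per_week: int = 10) -> list[Task]:
    """Return low-risk, repeatable tasks, most frequent first.

    min_per_week is an arbitrary cutoff chosen for illustration.
    """
    picks = [
        t for t in tasks
        if t.provably_non_disruptive
        and t.same_steps_every_time
        and t.times_per_week >= min_per_week
    ]
    return sorted(picks, key=lambda t: t.times_per_week, reverse=True)


# Illustrative inventory; only the first entry's frequency comes from the post.
inventory = [
    Task("LB endpoint + DNS record + firewall rule", 200, True, True),
    Task("Data center core routing redesign", 1, False, False),
    Task("Add application server to existing pool", 30, True, True),
    Task("Troubleshoot intermittent packet loss", 15, True, False),
]
for task in automation_candidates(inventory):
    print(task.times_per_week, task.name)
```

Sorting by frequency puts the biggest time sinks at the top, which is where the change request in this post landed.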

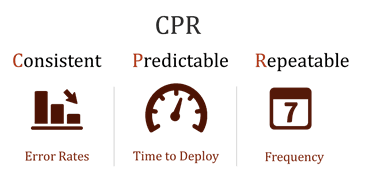

I cannot emphasize enough how critical it is to take a CPR approach to network automation: consistent, predictable, and repeatable automation enables more efficient scale and faster time to delivery, with less risk of disruption due to errors. This is the marriage of the so-called “mode 1” and “mode 2” operating models, where reliability and stability rule but agility is still desirable. By taking a CPR approach to automation and orchestration in the network, organizations can improve agility with far less risk of disturbing the reliability of a network tasked with delivering many, many (like an average of around 200 or so) other applications.
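One common way to get the “predictable” and “repeatable” parts of CPR is to write automation as idempotent, desired-state operations: running the same change twice leaves the network exactly as running it once. That is our illustration of the idea, not something the approach above prescribes. In this minimal sketch, `ensure_allow_rule` is a hypothetical function and a Python set stands in for a firewall’s rule set.

```python
def ensure_allow_rule(policy: set[tuple[str, int]], dest: str, port: int) -> bool:
    """Bring `policy` to a state where traffic to dest:port is allowed.

    `policy` is a hypothetical in-memory stand-in for a firewall rule set.
    Returns True if a change was made, False if the rule already existed.
    """
    rule = (dest, port)
    if rule in policy:
        return False  # already in the desired state, so do nothing
    policy.add(rule)
    return True


policy: set[tuple[str, int]] = set()
print(ensure_allow_rule(policy, "203.0.113.10", 443))  # True: first run makes the change
print(ensure_allow_rule(policy, "203.0.113.10", 443))  # False: rerun is a no-op
print(len(policy))                                     # 1
```

Because a rerun is harmless, an interrupted or failed run can simply be retried, which is much of what keeps this style of automation low risk.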

Data center networks are composed of legacy and emerging technologies, often held together with MacGyver-like techniques that require bubble gum and fishing line. These networks must simultaneously support applications that have been in production for going on fifty years now (mainframes still exist) and emerging apps built on barely-out-of-diapers technology (like containers). They must deliver all of these applications, and do so reliably and securely. Those standards cannot be ignored in favor of the speed and frequency required by newer, shinier applications.

But netops can achieve a balance that enables both to co-exist, if it can identify and subsequently automate the heck out of those tasks and service delivery options that are non-disruptive and considered checkbox tasks by change control and other approvers.

After all, our favorite equation for determining the time to paint a house changes dramatically when the painters involved are given automatic paint rollers instead of manually driven brushes.

Don’t worry so much about changing the network, just change the equation instead.
