Keep the hot side hot and the cold side cold.

You may recall (if you’re old enough and no, don’t worry, I won’t ask you to raise your hand) a campaign years ago by McDonald’s promoting packaging of some of its products that kept the “hot side hot and the cold side cold.”

The concept was quite simple, really: separate the hot and the cold but keep it in a single container for easy transport.

That notion of separation within the same “container” is really the basis for what makes an app proxy, well, a proxy. Keeps the client side client and the app side … app.

Okay, that might not translate as neatly as I’d like after all.

Still, the concept is valid and one that’s important in understanding an app proxy.

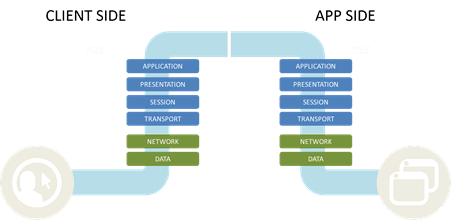

Fundamentally, a proxy is software that is logically positioned between two participants in a communication exchange. An app proxy sits between an app and a client. Now, not all proxies are full proxies. A full proxy requires internal separation of the two sides; essentially, a full proxy has two independent networking stacks contained within a single device: the client side (the hot side) and the app side (the cold side).

I know, the analogy really isn’t working well, is it? Work with me, it’s all I got for now.

The reason this is (or should be) a requirement for an app proxy is that it gives the proxy the ability to participate in the exchange between the client and the server. That’s necessary to provide functionality like minification (which improves app performance), security functions (like data scrubbing), and TCP multiplexing (an optimization), as well as a broad set of other services.

It’s in that internal “gap” between the client side and the app side that the magic happens. That’s where any number of services – from rudimentary load balancing to advanced application firewalling to application access control – do their thing. Requests are effectively terminated on the client side of an app proxy. After that, a process much like service chaining occurs, only it all happens internally, at bus and processor speeds (which are almost always faster than network speeds). Inspection ensues. Policies are applied. Transformations occur. Decisions are made. A separate connection between the proxy and the app is made, and the request is sent on its way.

When that request returns to the proxy, the reverse happens. Inspection ensues. Data is scrubbed. Policies are applied. Then it’s back on the client side where it can be transferred back to the client.

And it all happens in sub-second timing because it’s all internal to the proxy.
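The request and response path described above can be sketched in a few lines. This is a minimal in-memory model, not how any particular product implements it: the `FullProxy` class, the `tag_request` and `scrub_card_numbers` services, the `X-Forwarded-By` header, and `demo_app` are all hypothetical names invented for illustration, and a plain function call stands in for the separate app-side connection.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str


def tag_request(req: Request) -> Request:
    # Hypothetical request-side transformation applied inside the proxy.
    req.headers["X-Forwarded-By"] = "app-proxy"
    return req


def scrub_card_numbers(resp: Response) -> Response:
    # Hypothetical response-side data scrubbing: mask any 16-digit run.
    resp.body = re.sub(r"\b\d{16}\b", "****", resp.body)
    return resp


class FullProxy:
    """Toy model: the client-side request ends here; a separate app-side call is made."""

    def __init__(
        self,
        app: Callable[[Request], Response],
        request_services: List[Callable[[Request], Request]],
        response_services: List[Callable[[Response], Response]],
    ) -> None:
        self.app = app
        self.request_services = request_services
        self.response_services = response_services

    def handle(self, req: Request) -> Response:
        # Client side: the request is terminated at the proxy, then each
        # service runs in order (the internal "service chain").
        for service in self.request_services:
            req = service(req)
        # App side: a separate exchange with the app (a function call here,
        # standing in for a second connection).
        resp = self.app(req)
        # On the way back, the reverse happens before the client sees anything.
        for service in self.response_services:
            resp = service(resp)
        return resp


def demo_app(req: Request) -> Response:
    # Assumed stand-in for a real app; leaks a made-up 16-digit number.
    fake_card = "4" + "1" * 15
    via = req.headers.get("X-Forwarded-By")
    return Response(200, f"card={fake_card} via={via}")


proxy = FullProxy(demo_app, [tag_request], [scrub_card_numbers])
result = proxy.handle(Request("/account"))
print(result.body)  # prints "card=**** via=app-proxy"
```

The point of the sketch is the shape, not the services: because the proxy owns both sides of the exchange, anything can be slotted into either list without the client or the app knowing.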

Because the purpose of an app proxy is to deliver a wide range of app services – availability, security, mobility, identity and access, and performance – it really should be a full proxy. Only a full proxy is designed to participate in each and every request and response. Simple proxies (which are really stateless proxies if you want to get into the weeds) only participate in the initial conversation, when the connection between the client and app is being created. Their purpose is to pick an app instance and then “stitch” the connection together between the two. After that, the proxy doesn’t participate. It sees a “flow” (a layer 4 TCP construct you may have heard about while discussing SDN, which is yet another discussion for another time) and simply forwards packets back and forth, mixing the hot and the cold indiscriminately. (See? I knew the analogy would work eventually.)
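To make the difference concrete, here is a rough sketch of the two behaviors, again as an in-memory model with invented names (`SimpleProxy`, `PerRequestProxy`, `make_instance`, and the round-robin choice are all assumptions for illustration). The simple proxy decides once, at connection time; the full proxy sees, and can act on, every request.

```python
from itertools import cycle
from typing import Callable, List

AppInstance = Callable[[str], str]


def make_instance(name: str) -> AppInstance:
    # Hypothetical app instance that echoes which instance served the payload.
    return lambda payload: f"{name}:{payload}"


class SimpleProxy:
    """Picks an instance when the connection is set up, then only forwards."""

    def __init__(self, instances: List[AppInstance]) -> None:
        self._picker = cycle(instances)

    def connect(self) -> Callable[[str], str]:
        instance = next(self._picker)  # the only decision it ever makes
        return lambda payload: instance(payload)  # "stitched" flow, no inspection


class PerRequestProxy:
    """Full-proxy behavior: inspects and routes each request independently."""

    def __init__(self, instances: List[AppInstance], inspect: Callable[[str], str]) -> None:
        self._picker = cycle(instances)
        self._inspect = inspect

    def connect(self) -> Callable[[str], str]:
        def send(payload: str) -> str:
            payload = self._inspect(payload)  # participates in every request
            instance = next(self._picker)     # can choose again each time
            return instance(payload)
        return send


instances = [make_instance("a"), make_instance("b")]

simple_flow = SimpleProxy(instances).connect()
simple_results = [simple_flow("x"), simple_flow("y")]

full_flow = PerRequestProxy(instances, inspect=str.upper).connect()
full_results = [full_flow("x"), full_flow("y")]

print(simple_results)  # ['a:x', 'a:y']
print(full_results)    # ['a:X', 'b:Y']
```

Both requests on the simple proxy's connection land on the same instance untouched, while the full proxy transforms and routes each one separately.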

Now, all that said, a modern (and scalable) app proxy should be a full proxy and have three key characteristics: programmability, performance, and protocols.

Programmability is critical in modern data centers and the cloud to support automation, orchestration, and standardization. It’s also key in the data path to enabling security and services that provide unique value to business and operations, enabling support for custom protocols and augmentation of existing ones.

Performance sounds like it should be simple, but it’s not. Because an app proxy interacts with every single request, it has to be not just fast but blazingly fast. It’s got to do what it’s got to do quickly, without adding app-experience-killing latency to the exchange. That’s hardly as easy as it sounds, especially when there’s such a push to use general-purpose compute as a basis for deployment.

Lastly, protocols are important. The first thing we think of when we say “app” is probably HTTP. That’s no surprise; HTTP is the new TCP and the lingua franca of the Internet. But it’s not the only protocol in use and especially not in an age of Internet-enabled communications. There’s SIP and UDP, too. Not to mention SMTP (you do still send e-mail, right?) and LDAP. And how about SSL and TLS? With an increasing focus (and urgency) to get SSL Everywhere, well, everywhere, it’s even more imperative that an app proxy speak SSL/TLS – and speak it uber fluently. Because otherwise that performance requirement might not get met.

An app proxy can provide the platform modern data centers need to address security and performance challenges, to automate and orchestrate their way into lower operating costs, and to ensure the optimal app experience for consumer and corporate customers alike. But it has to be a full app proxy with programmability, performance, and protocol support to ensure that no app is left behind.
