Let’s talk turkey. Or crème eggs. Or boxes of candy. What do they have in common? They’re all associated with holidays, of course. And, it turns out, those holidays are the number one generator of both profits and poor performance by websites.

Consider recent research from the UK, “which involved more than 100 ecommerce decision makers.” It “revealed that more than half (58%) admitted to having faced website speed issues during last year's peak period.”

Now, we all know that performance is important, that even microseconds of delay result in millions of dollars in losses. Nuff said.

The question is, what can you do about it?

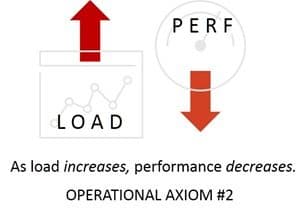

The answer lies in remembering Operational Axiom #2: As Load Increases Performance Decreases.

It doesn’t matter whether the app server is running in the cloud or in the data center, in a virtual machine or a container. This axiom is an axiom because it is always true. No matter what. The more load you put on a system, the slower it runs. Period.
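One way to picture the axiom is with a textbook single-server queue (M/M/1). That model is an assumption brought in purely for illustration, not something the research above measured, and the 100 requests per second capacity is a made-up number. In that model, mean response time is 1 / (service rate - arrival rate), so it climbs slowly at first and then very steeply as load approaches capacity.

```python
def mean_response_time(service_rate: float, arrival_rate: float) -> float:
    """Mean time in system for an M/M/1 queue, in seconds.

    service_rate: requests per second the server can complete (hypothetical).
    arrival_rate: requests per second arriving (the load).
    """
    if arrival_rate >= service_rate:
        raise ValueError("load meets or exceeds capacity; the queue grows without bound")
    return 1.0 / (service_rate - arrival_rate)


SERVICE_RATE = 100.0  # hypothetical capacity, requests per second

for load in (50.0, 90.0, 99.0):
    seconds = mean_response_time(SERVICE_RATE, load)
    print(f"load {load:.0f} req/s -> {seconds * 1000:.0f} ms")
```

With these illustrative numbers, the same server answers in about 20 ms at half load, 100 ms at 90 percent, and a full second at 99 percent.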

The key to better performance is striking a balance between keeping costs down by maximizing load and optimizing for performance. In most cases, that means using whatever tools you can to restore that balance, especially during peak periods (which place a lot of stress on systems, no matter where they may be).

1. Balance the load

This is why good enough (rudimentary) app services aren’t. Because while they often effortlessly scale, they don’t necessarily actually balance the load across available resources. They don’t necessarily provide for the intelligence necessary to select resources based on performance or existing load. Their ‘best effort’ isn’t much better than blind chance.

Balancing load requires an understanding of existing load so new requests are directed to the resource most likely to be able to respond as quickly as possible. Basic load balancing can’t achieve this because its focus is purely on algorithm-based decisions, which rarely factor in anything other than static weighting of available resources. Real-time decisions require real-time information about the load that exists right now. Otherwise you aren’t load balancing, you’re load distributing.
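The difference between distributing and balancing can be sketched in a few lines. This is a minimal illustration, not any particular product's algorithm: the `Backend` class, the backend names, and the `active_requests` counter are all hypothetical stand-ins for whatever real-time load signal a service actually exposes.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Backend:
    name: str
    active_requests: int = 0  # hypothetical real-time load signal


def pick_round_robin(rotation):
    """Load distribution: take the next backend in a fixed rotation, ignoring load."""
    return next(rotation)


def pick_least_loaded(backends):
    """Load balancing: take the backend with the fewest in-flight requests right now."""
    return min(backends, key=lambda b: b.active_requests)


backends = [Backend("app-1", 40), Backend("app-2", 3), Backend("app-3", 17)]
rotation = cycle(backends)

blind_choice = pick_round_robin(rotation)
informed_choice = pick_least_loaded(backends)
print(blind_choice.name, informed_choice.name)
```

Here the rotation hands the next request to `app-1`, the busiest backend, simply because it is first in line, while the load-aware pick goes to `app-2`.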

2. Reduce the load

It’s more than just selection of resources that aids in boosting performance while balancing the load. Being able to employ a variety of protocol-enhancing functions that reduce load without impairing availability is also key. Multiplexing and reusing TCP connections, offloading encryption and security, and reassigning compression duties to upstream services relieve burdens on app and web services, which frees up resources and has a real impact on performance.
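To see why connection reuse matters, here is a toy model that only counts how many server-side connections get opened. It assumes requests arrive one after another (no concurrency), and the `CountingPool` class and its `max_idle` parameter are hypothetical names made up for this sketch, not a real library API.

```python
class CountingPool:
    """Toy connection pool that tracks how many server-side connections are opened."""

    def __init__(self, max_idle: int):
        self.max_idle = max_idle  # idle connections kept open for reuse
        self.idle = 0
        self.opened = 0

    def acquire(self) -> None:
        if self.idle > 0:
            self.idle -= 1       # reuse an existing connection
        else:
            self.opened += 1     # pay for a new TCP (and possibly TLS) setup

    def release(self) -> None:
        if self.idle < self.max_idle:
            self.idle += 1       # keep the connection for the next request
        # otherwise the connection is closed and its setup cost is lost


def connections_opened(requests: int, max_idle: int) -> int:
    pool = CountingPool(max_idle)
    for _ in range(requests):
        pool.acquire()
        pool.release()
    return pool.opened


without_reuse = connections_opened(1000, max_idle=0)
with_reuse = connections_opened(1000, max_idle=4)
print(without_reuse, with_reuse)
```

In this simplified sequential case, a thousand requests cost the server a thousand connection setups without reuse and exactly one with it. Real traffic is concurrent, so the real number sits somewhere in between, but the direction is the same.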

Servers should serve, whether they’re in the cloud or in the data center, running in a container or a VM. Cryptography and compression are still compute-heavy functions that can be performed by upstream services designed for the task.

3. Eliminate the load

Eliminating extra hops in the request-response path also improves performance. Yes, you can scale horizontally across load balancing services, but doing so shoves another layer of decision making (routing) into the equation that takes time both in execution (which one of these should service this request?) and in transfer time (send it over the network to that one). That means less time for the web or app server to do its job, which is really all we wanted in the first place. Under typical load, the differences between one system managing a million connections and ten systems each managing a portion of the same may be negligible. Until demand drives load higher and the operational axioms kick in there, too. Because it’s not just about the load on the web or app server that contributes to poor performance, it’s the entire app delivery chain.

The more capacity (connections) your load balancing service can concurrently handle, the fewer instances you need. That reduces the overhead of managing yet another layer of resources that require just as careful attention to operational axiom #2 as any other service.
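The arithmetic behind that is simple ceiling division. The million-connection peak echoes the example above, while the per-instance capacities are hypothetical numbers chosen only to show the relationship.

```python
def instances_needed(peak_connections: int, capacity_per_instance: int) -> int:
    """Smallest number of load balancing instances that covers the peak."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    return (peak_connections + capacity_per_instance - 1) // capacity_per_instance


PEAK = 1_000_000  # concurrent connections at peak

small = instances_needed(PEAK, 100_000)    # hypothetical lower-capacity service
large = instances_needed(PEAK, 1_000_000)  # hypothetical higher-capacity service
print(small, large)
```

Ten instances versus one means ten more things to route across, monitor, and keep out of trouble when load climbs.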

Performance continues to be a significant issue for retailers, and with the rapidly expanding digital economy it will become (if it isn’t already) an issue for everyone with a digital presence. In the rush that always happens before holidays, folks become even less tolerant of poor performance. What was good enough the day before, isn’t. More often than not performance issues are not the fault of the application, but rather the architecture and the services used to deliver and secure it. By using the right services with the right set of capabilities, organizations are more likely to be able to keep from running afoul of performance issues under heavy load.

Good enough is good enough, until it isn’t. Then it’s too late to cajole frustrated customers to come back. They’ve already found another provider of whatever it was you were trying to sell them.
