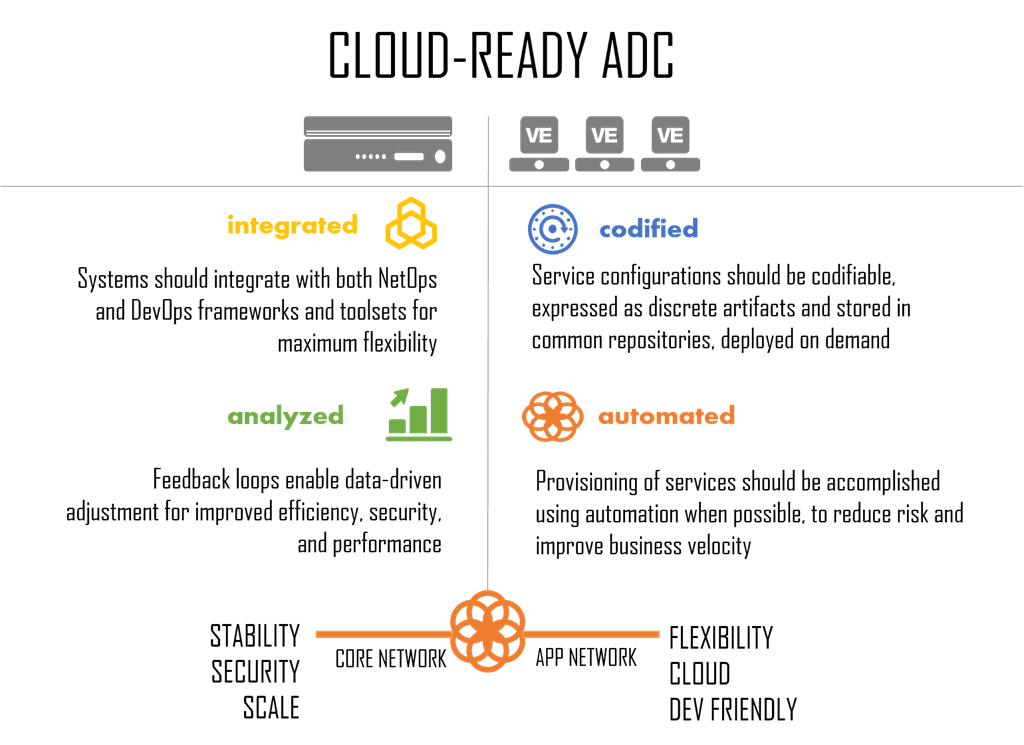

A cloud-ready application delivery controller (ADC) is not your traditional ADC. Available for deployment on custom or COTS hardware, it’s a scalable software solution that supports both the delivery and the deployment of applications, with the speed, security, and availability they need. A cloud-ready ADC enables a modern, two-tiered approach to data center architecture, combining traditional stability, security, and scale with modern, flexible, cloud- and DevOps-friendly programmatic features.

That’s a nice description, but what does it mean?

I’ve written before about what it takes to be considered a modern app proxy, and that’s certainly part of what a cloud-ready ADC requires. But today’s demanding environments need more than technical capabilities. They need operational and ecosystem integration and the ability to support modern and emerging application architectures.

And honestly, plenty of ADCs out there claim cloud-readiness and support for both traditional and emerging application architectures, among other things. So today I’d like to dig into what makes a cloud-ready ADC different from a traditional ADC, before we look further at why that difference matters so much in today’s application world.

Five Degrees of Differentiation

There are basically five (six if you want to be really pedantic) ways in which a cloud-ready ADC differentiates itself from a traditional ADC. And since F5 pretty much defined what an ADC should be way back before I was here, we kind of understand the ADC better than anyone else: both what it needed to be and what it must evolve into to keep delivering apps with the speed, security, and stability the business needs.

Performance

This is a no-brainer, right? After all, if you’re going to sit in front of apps and proxy for them, you’d better be as fast as (or even better, faster than) the apps you’re delivering. Traditional ADCs are delivered in appliance form factors: chassis, hardware, appliances. Even so, traditional ADCs have been limited by the CPUs in the custom hardware they have to work with. ADCs are platforms, so they’ve tended to focus on general speed, the way general-purpose CPUs focus on general processing. They almost always include a variety of hardware acceleration options, but those options have to be chosen before deployment. Now we might be crazy, but we think a cloud-ready ADC should be able to go beyond that limitation. It should recognize that one app might need one service more than another, while a different app might need some other service more. Repurposing compute on demand underpins the entire concept of cloud computing, and organizations that invest in hardware of any kind should be able to repurpose it, too.

That matters for services layered atop the network because offloading common tasks to specialized hardware (FPGAs) frees the CPU to do other things, like request/response inspection, modification, and scrubbing. That makes the entire “stop” at the proxy faster, which translates to lower latency and happier users.

That’s why a cloud-ready ADC can break that barrier. It takes advantage of software-defined performance so organizations can programmatically increase the ADC’s performance for certain types of processing, like security. If the needs of the organization change, the performance profile of that hardware can change along with them, on demand. That’s agility in hardware. I know, paradoxical, right? But that’s part of being a cloud-ready ADC: the ability to repurpose specialized hardware so that investments are protected and expensive forklift upgrades can be eliminated.

Programmability

A long-standing frustration I’ve personally heard over and over from customers has been the discord between NetOps and DevOps when it comes to scripting capabilities. The problem is that the traditional ADC offered only the most basic scripting, and in languages more familiar to NetOps than to DevOps. Now, DevOps teams were willing to learn scripting in the data path because it enabled more agile request/response routing, scaling strategies, and even security services. But with the traditional ADC ensconced upstream in the core network, NetOps were not about to let DevOps go deploying scripts that might muck with the flow of traffic.

The availability of virtual editions meant DevOps now had access to their own, personal, private ADC in the form of a virtual machine, but they were still forced to learn a networky scripting language. That’s not a good thing. A cloud-ready ADC should support both NetOps in the core network and DevOps in the app network (like, say, in the cloud). And DevOps, and developers in particular, prefer more dev-friendly languages, like JavaScript on Node.js. That’s not just because they know the language. A rich repository of libraries (like npm) and services already exists to enable faster integration with dev-friendly infrastructure. That’s why a cloud-ready ADC should support both the network-oriented scripting NetOps relies on and the dev-friendly languages DevOps prefers.
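To make data-path scripting concrete, here is a minimal sketch of the kind of request-routing logic DevOps teams tend to want. It is written in TypeScript, in keeping with the Node.js ecosystem mentioned above. It is not any ADC’s actual scripting API. The `HttpRequest` shape, the `selectPool` function, the `x-canary` header, and the pool names are all hypothetical, chosen only for illustration.

```typescript
// Hypothetical request shape; a real ADC scripting runtime exposes its own objects.
interface HttpRequest {
  method: string;
  path: string;
  headers: Record<string, string>; // header names assumed to be lowercased
}

// Hypothetical pool names; on a real ADC these would be configured objects.
type Pool = "api-pool" | "canary-pool" | "static-pool" | "web-pool";

// Case-insensitive match on a few common static-asset extensions (illustrative list).
const STATIC_ASSET = /\.(css|js|png|jpg|svg)$/i;

// Choose a backend pool. Rules run in order and the first match wins.
function selectPool(req: HttpRequest): Pool {
  // Users who opted into the canary release go there regardless of path.
  if (req.headers["x-canary"] === "true") {
    return "canary-pool";
  }
  // API traffic gets its own, independently scaled pool.
  if (req.path.startsWith("/api/")) {
    return "api-pool";
  }
  // Static assets go to a cache-friendly pool.
  if (STATIC_ASSET.test(req.path)) {
    return "static-pool";
  }
  // Everything else is ordinary web traffic.
  return "web-pool";
}
```

With these rules, a `GET /api/orders` request lands in `api-pool`. The same request carrying `x-canary: true` goes to `canary-pool`, because rules are evaluated in order and the first match wins.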

App Security

Traditional ADCs have long provided for basic application security. Sitting in front of an app, particularly web apps, means they were (and still are) usually the first thing in the data center to be able to recognize an attack and do something about it. Most of that security revolved, understandably, around protocol-level security. SYN flood protection, DDoS detection, and other TCP and basic HTTP related security options have been part and parcel of traditional ADCs for some time now.

But a cloud-ready ADC should go further. It’s likely to be one of the few “devices” included in the app architecture itself, to ensure the inevitable scale needed by today’s applications, so extending its focus to full-stack security just makes sense. At least we think so, especially since doing so promotes performance. If you’re going to have to stop for one kind of processing, it just makes sense to do as much as possible at the same time. It’s more efficient and eliminates the latency incurred by traveling from box to box to box. If you’ve traveled long distances with kids, you know what I’m talking about. There’s a restroom at the gas station. You don’t want to stop again at a rest stop five minutes later, right? Same thing with security in the network. Do as much as you can at one time to eliminate the overhead that is often a source of frustration for customers and the business alike. Performance is critical, and a cloud-ready ADC should do everything it can to ensure faster app experiences.
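The “do it all in one stop” idea can be sketched as a single inspection pass. Several checks run against a request at one stop instead of handing it from box to box. This is a minimal illustration under stated assumptions. The `InboundRequest` type, the check functions, and the 1 MiB limit are hypothetical. The script-tag pattern is a toy stand-in for real web application firewall logic.

```typescript
// Hypothetical request shape for illustration only.
interface InboundRequest {
  path: string;
  headers: Record<string, string>; // header names assumed to be lowercased
  body: string;
}

// Each check returns null to pass, or a reason string to block.
type Check = (req: InboundRequest) => string | null;

interface Verdict {
  allowed: boolean;
  reason?: string;
}

// Protocol-level check: reject requests without a Host header.
const requireHost: Check = (req) =>
  req.headers["host"] ? null : "missing Host header";

// Size limit measured in string length (the 1 MiB threshold is an arbitrary assumption).
const maxBody: Check = (req) =>
  req.body.length > 1024 * 1024 ? "body too large" : null;

// Toy application-level check; a real WAF is far more sophisticated.
const noScriptTags: Check = (req) =>
  /<script\b/i.test(req.path + req.body) ? "script tag detected" : null;

// Run every check during the same stop and report the first failure, if any.
function inspectOnce(req: InboundRequest, checks: Check[]): Verdict {
  for (const check of checks) {
    const reason = check(req);
    if (reason !== null) {
      return { allowed: false, reason };
    }
  }
  return { allowed: true };
}
```

New checks slot into the same array. Adding protection then means adding work to an existing stop, not adding another hop.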

That may mean offering new models for managing the increasing demand (and in some cases, requirement) for secure communications between mobile devices and apps. That means support for Forward Secrecy (FS) and the cryptography that enables it. It also means enabling new models for offloading the computationally expensive work of encryption and decryption to hardware designed to handle it, without breaking the architectures needed to support microservices and emerging applications. A cloud-ready ADC should support both forward secrecy and hardware offload, enabling fast and secure communications while slipping seamlessly into today’s architectures and cloud environments.
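One concrete piece of supporting forward secrecy is restricting TLS to cipher suites with an ephemeral key exchange. That way, a stolen server key can’t decrypt previously recorded sessions. The sketch below classifies suites on that basis, assuming OpenSSL-style suite names. The function names are hypothetical. A production configuration would also set protocol versions, curves, and key sizes.

```typescript
// TLS 1.3 suites (IANA names, as OpenSSL reports them). TLS 1.3 key exchange is
// ephemeral (EC)DHE except in PSK-only resumption, which this sketch ignores.
const TLS13_SUITES: ReadonlySet<string> = new Set([
  "TLS_AES_128_GCM_SHA256",
  "TLS_AES_256_GCM_SHA384",
  "TLS_CHACHA20_POLY1305_SHA256",
  "TLS_AES_128_CCM_SHA256",
  "TLS_AES_128_CCM_8_SHA256",
]);

// Returns true if an OpenSSL-style suite name uses an ephemeral key exchange.
function isForwardSecret(suite: string): boolean {
  const name = suite.toUpperCase();
  if (TLS13_SUITES.has(name)) {
    return true;
  }
  // TLS 1.2 and earlier: ECDHE-* and DHE-* are ephemeral. Static "ECDH-" suites
  // and plain RSA key exchange (e.g. "AES128-GCM-SHA256") are not.
  return name.startsWith("ECDHE-") || name.startsWith("DHE-");
}

// Filter a candidate list down to forward-secret suites, preserving order.
function forwardSecretOnly(suites: readonly string[]): string[] {
  return suites.filter(isForwardSecret);
}
```

Consider `forwardSecretOnly(["ECDHE-RSA-AES128-GCM-SHA256", "AES128-GCM-SHA256", "TLS_AES_256_GCM_SHA384"])`. It keeps the first and last suites and drops the plain-RSA key exchange in the middle. Note that OpenSSL configures TLS 1.3 suites separately from its TLS 1.2 cipher list.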

Partner Ecosystem and Automation

The Other API economy is critical to the success of private cloud and to the competitive advantage that automation and orchestration give the business. But no matter how nice it’d be otherwise, there are always going to be a variety of services in the data center from different vendors, all with different integration and object models. That often means painful API-driven automation that requires expertise across every necessary component. The reality is that two levels of integration are required, one for automation and one for orchestration (no, they aren’t the same thing). There are the inherent automation capabilities of the platform, via APIs and templates, and then there’s native integration into the partner ecosystem, where orchestration reigns supreme. A cloud-ready ADC needs both. The former enables automation via proprietary products or custom scripts and frameworks like Puppet and Chef. The latter enables orchestration via increasingly important controllers like OpenStack, VMware, and Cisco.
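Much of API-driven automation comes down to one step: reconciling a declared desired state (from a template, script, or orchestrator) with what the device currently reports. Here is a minimal sketch of that reconciliation step, assuming a hypothetical, simplified `VirtualServer` object model. It does not reflect any vendor’s actual API, and pushing the resulting plan to a device is left out.

```typescript
// Hypothetical, simplified object model for illustration; real ADC APIs differ.
interface VirtualServer {
  name: string;
  address: string;
  port: number;
  pool: string;
}

interface Plan {
  create: VirtualServer[];
  update: VirtualServer[];
  remove: string[]; // names of objects to delete
}

// Compare desired state (e.g. from a template) with current state (e.g. read
// from the device's API) and compute the changes needed to converge.
function planChanges(desired: VirtualServer[], current: VirtualServer[]): Plan {
  const currentByName = new Map(current.map((vs) => [vs.name, vs] as const));
  const desiredNames = new Set(desired.map((vs) => vs.name));
  const plan: Plan = { create: [], update: [], remove: [] };

  for (const vs of desired) {
    const existing = currentByName.get(vs.name);
    if (!existing) {
      plan.create.push(vs);
    } else if (
      existing.address !== vs.address ||
      existing.port !== vs.port ||
      existing.pool !== vs.pool
    ) {
      plan.update.push(vs);
    }
  }
  // Anything on the device that is no longer declared gets removed.
  for (const vs of current) {
    if (!desiredNames.has(vs.name)) {
      plan.remove.push(vs.name);
    }
  }
  return plan;
}
```

The plan is computed, not scripted step by step. Running it against a device that already matches the desired state yields an empty plan, which makes this style of automation safe to repeat.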

A cloud-ready ADC should integrate natively with, and be orchestrated through, the frameworks and systems organizations already use to build a comprehensive orchestration solution.

It shouldn’t need to be said more than once that a cloud-ready ADC should support cloud templates.

So that’s all I’m going to say about that.

App Model

Traditional ADCs were born from a need to offer a robust set of services for traditional applications: monoliths, if you will. Today’s applications are increasingly distributed, diverse, and differenter than their traditional predecessors, leveraging a variety of architectures, platforms, and deployment models. Whether they run in containers or virtual machines, in cloud or cloud-like environments, applications still need some of those services. And where there’s a need for more than one service at a time (like, say, load balancing and app security), you’ll find the need for an ADC. While a traditional ADC may not fit the bill for microservices, a cloud-ready ADC will. That includes a broader set of services that may benefit from load balancing and security, services that fall squarely in the demesne of DevOps, not NetOps, like memcached.
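Load balancing a service like memcached differs from balancing stateless web servers. Each key should consistently land on the same cache node. Adding or removing a node should remap as few keys as possible. Consistent hashing is a common way to achieve this. The sketch below shows it under stated assumptions: FNV-1a as the hash, 100 virtual points per node, and hypothetical node names. It is a generic illustration, not how any particular ADC distributes memcached traffic.

```typescript
// 32-bit FNV-1a over UTF-16 code units (equal to bytes for ASCII keys).
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

interface RingPoint {
  hash: number;
  node: string;
}

// Consistent-hash ring with virtual points per node (default count is an assumption).
class HashRing {
  private points: RingPoint[] = [];
  private readonly replicas: number;

  constructor(nodes: string[], replicas = 100) {
    this.replicas = replicas;
    for (const node of nodes) {
      this.add(node);
    }
  }

  // Place `replicas` points for the node around the ring, kept sorted by hash.
  add(node: string): void {
    for (let r = 0; r < this.replicas; r++) {
      this.points.push({ hash: fnv1a(`${node}#${r}`), node });
    }
    this.points.sort((a, b) => a.hash - b.hash);
  }

  // Drop all of a node's points; keys mapped to other nodes are unaffected.
  remove(node: string): void {
    this.points = this.points.filter((p) => p.node !== node);
  }

  // Map a key to the first point at or after its hash, wrapping around the ring.
  lookup(key: string): string {
    if (this.points.length === 0) {
      throw new Error("hash ring is empty");
    }
    const h = fnv1a(key);
    let lo = 0;
    let hi = this.points.length;
    while (lo < hi) {
      const mid = (lo + hi) >>> 1;
      if (this.points[mid].hash < h) {
        lo = mid + 1;
      } else {
        hi = mid;
      }
    }
    return this.points[lo % this.points.length].node;
  }
}
```

When a node is removed, only the keys that mapped to it move. Every other key keeps its node, so the rest of the cache stays warm.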

Still a platform, a cloud-ready ADC nonetheless supports the programmability, integration, and form-factor requirements necessary to deliver the services both traditional and emerging apps need, in the environment they need it.

There’s a lot of similarity between a traditional ADC and a cloud-ready ADC. Both are still platforms, capable of enabling multiple services with a common operational model. Both are programmable, integrate with other data center and cloud systems, and provide for flexibility in performance capabilities. But a cloud-ready ADC goes beyond the traditional. It supports traditional apps while enabling the future of business: applications with an increasingly diverse need to integrate and interoperate with a wide variety of infrastructure and environments.
