[Editor – This post is an extract from our comprehensive eBook, Managing Kubernetes Traffic with F5 NGINX: A Practical Guide. Download it for free today.]

Along with HTTP traffic, NGINX Ingress Controller load balances TCP and UDP traffic, so you can use it to manage traffic for a wide range of apps and utilities based on those protocols, including:

- MySQL, LDAP, and MQTT – TCP‑based apps used by many popular applications

- DNS, syslog, and RADIUS – UDP‑based utilities used by edge devices and non‑transactional applications

TCP and UDP load balancing with NGINX Ingress Controller is also an effective solution for distributing network traffic to Kubernetes applications in the following circumstances:

- You are using end-to-end encryption (E2EE), with the application rather than NGINX Ingress Controller handling encryption and decryption

- You need high‑performance load balancing for applications that are based on TCP or UDP

- You want to minimize the amount of change when migrating an existing network (TCP/UDP) load balancer to a Kubernetes environment

NGINX Ingress Controller comes with two NGINX Ingress resources that support TCP/UDP load balancing:

- GlobalConfiguration resources are typically used by cluster administrators to specify the TCP/UDP ports (listeners) that are available for use by DevOps teams. Note that each NGINX Ingress Controller deployment can have only one GlobalConfiguration resource.

- TransportServer resources are typically used by DevOps teams to configure TCP/UDP load balancing for their applications. NGINX Ingress Controller listens only on ports that the administrator has instantiated in the GlobalConfiguration resource. This prevents port conflicts and adds a layer of security by ensuring that DevOps teams expose as public, external services only the ports that the administrator has predetermined are safe.

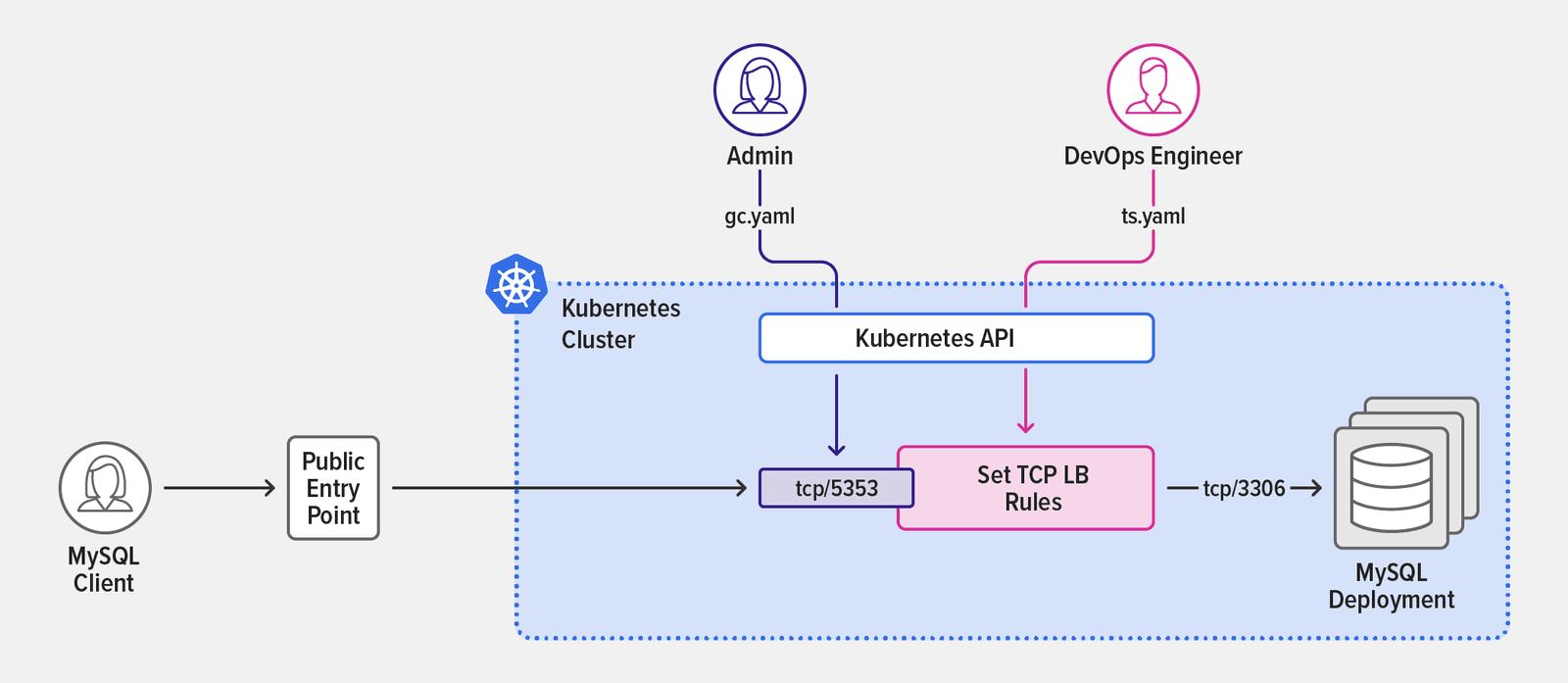

The following diagram depicts a sample use case for the GlobalConfiguration and TransportServer resources. In gc.yaml, the cluster administrator defines TCP and UDP listeners in a GlobalConfiguration resource. In ts.yaml, a DevOps engineer references the TCP listener in a TransportServer resource that routes traffic to a MySQL deployment.

The GlobalConfiguration resource in gc.yaml defines two listeners: a UDP listener on port 514 for connection to a syslog service and a TCP listener on port 5353 for connection to a MySQL service.
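The original gc.yaml is not reproduced in this extract. Here is a minimal sketch of what it might look like, assuming the `k8s.nginx.org/v1alpha1` API version used by NGINX Ingress Controller releases of that era (newer releases may serve a different version, so check the documentation for yours). The listener name `mysql-tcp` comes from the text; the resource name `nginx-configuration`, the namespace `nginx-ingress`, and the listener name `syslog-udp` are hypothetical.

```yaml
# gc.yaml (sketch): cluster-wide TCP/UDP listeners defined by the administrator.
# Assumptions: k8s.nginx.org/v1alpha1 API version; the names nginx-configuration,
# nginx-ingress, and syslog-udp are hypothetical placeholders.
apiVersion: k8s.nginx.org/v1alpha1
kind: GlobalConfiguration
metadata:
  name: nginx-configuration
  namespace: nginx-ingress
spec:
  listeners:
  - name: syslog-udp    # hypothetical name; UDP listener for the syslog service
    port: 514
    protocol: UDP
  - name: mysql-tcp     # the listener that ts.yaml references by name
    port: 5353
    protocol: TCP
```

The controller picks up the GlobalConfiguration named by its `-global-configuration` command-line argument, in `<namespace>/<name>` form (here, hypothetically, `nginx-ingress/nginx-configuration`). External clients can reach these ports only if the controller's pods and Service also expose them.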


Lines 6–8 of the TransportServer resource in ts.yaml reference the TCP listener defined in gc.yaml by name (mysql-tcp) and lines 9–14 define the routing rule that sends TCP traffic to the mysql-db upstream.
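The ts.yaml gist is not reproduced in this extract either. The sketch below is laid out so that lines 6–8 and 9–14 correspond to the description. The listener name `mysql-tcp` and upstream name `mysql-db` come from the text; the resource name, the Service name `mysql`, port 3306 (MySQL's default), and the `v1alpha1` API version are assumptions.

```yaml
apiVersion: k8s.nginx.org/v1alpha1   # assumed API version; check your release
kind: TransportServer
metadata:
  name: mysql-tcp                    # hypothetical resource name
spec:
  listener:                          # lines 6-8: bind to the mysql-tcp listener from gc.yaml
    name: mysql-tcp
    protocol: TCP
  upstreams:                         # lines 9-14: route TCP traffic to the mysql-db upstream
  - name: mysql-db
    service: mysql                   # assumption: the MySQL Service is named "mysql"
    port: 3306                       # assumption: MySQL's default port
  action:
    pass: mysql-db
```

With both resources applied, a client connects to port 5353 on the controller's external address, and NGINX forwards the TCP stream to the pods behind the `mysql` Service.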


In this example, a DevOps engineer verifies the configuration with the MySQL client: connecting through the TCP listener returns the list of tables in the rawdata_content_schema database inside the MySQL deployment.

TransportServer resources for UDP traffic are configured similarly; for a complete example, see Basic TCP/UDP Load Balancing in the NGINX Ingress Controller repo on GitHub. Advanced NGINX users can extend the TransportServer resource with native NGINX configuration using the stream-snippets ConfigMap key, as shown in the Support for TCP/UDP Load Balancing example in the repo.
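For comparison, here is a sketch of a UDP TransportServer for the syslog listener on port 514 that gc.yaml defines. The listener is assumed here to be named `syslog-udp`; that name, the upstream name `syslog-app`, the Service name `syslog`, and its port are all hypothetical. Apart from the names, the main difference from the TCP version is the protocol.

```yaml
# Sketch of a UDP TransportServer for syslog (all names hypothetical).
apiVersion: k8s.nginx.org/v1alpha1    # assumed API version; check your release
kind: TransportServer
metadata:
  name: syslog-udp
spec:
  listener:
    name: syslog-udp                  # hypothetical name of the UDP/514 listener in gc.yaml
    protocol: UDP                     # should match the listener's protocol
  upstreams:
  - name: syslog-app
    service: syslog                   # hypothetical syslog Service
    port: 514
  action:
    pass: syslog-app
```

The TransportServer spec also has an `upstreamParameters` field for UDP-specific tuning, such as how many response datagrams NGINX should expect; see the documentation for the values your release supports.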

For more information about features you can configure in TransportServer resources, see the NGINX Ingress Controller documentation.
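As for the stream-snippets ConfigMap key mentioned earlier, here is a minimal sketch, assuming the controller is started with `-nginx-configmaps` pointing at a ConfigMap named `nginx-config` in the `nginx-ingress` namespace (both names hypothetical). The snippet is raw NGINX stream configuration. The upstream name, backend hostname, and port 1514 are placeholders. The port must not collide with a GlobalConfiguration listener, and the controller's pods and Service must expose it.

```yaml
# Sketch: raw NGINX stream configuration injected via the stream-snippets key.
# Names, hostnames, and ports below are hypothetical placeholders.
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-config            # hypothetical; must match the controller's -nginx-configmaps value
  namespace: nginx-ingress
data:
  stream-snippets: |
    # Inserted into the stream {} context of the generated NGINX configuration
    upstream syslog-extra {
        server syslog.default.svc.cluster.local:514;   # hypothetical backend, resolved at load time
    }
    server {
        listen 1514 udp;        # hypothetical port, distinct from the GlobalConfiguration listeners
        proxy_pass syslog-extra;
        proxy_responses 0;      # syslog sends no replies, so expect none
    }
```

Because NGINX resolves the hostname when it loads the configuration, an unresolvable name causes the reload to fail. In that case, a stable ClusterIP address is an alternative.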

Try the NGINX Ingress Controller based on NGINX Plus for yourself in a 30-day free trial today or contact us to discuss your use cases.