Developers and engineers who have recently arrived in the world of computing and applications often view the world through the lens of the latest, newest, and shiniest technology on the block. More often than not, though, the truth is that those concepts and analogous implementations, like the childhood friends of us grizzled tech industry veterans, have always been around. They simply advance or recede over time as business requirements and economics converge and diverge relative to the underlying implementation constraints and infrastructures. In fact, it is the constantly evolving business environment that drives needs and requirements, which in turn cause specific technology strategies to be rediscovered or pushed aside.

In that vein, for the next set of articles I'll be talking about some of the application-related technologies whose tide will be coming in over the next few years, especially as we evolve toward a more fully dispersed application delivery fabric and the emergent role of the edge. But first, it's useful to examine why and how we got to where we are today.

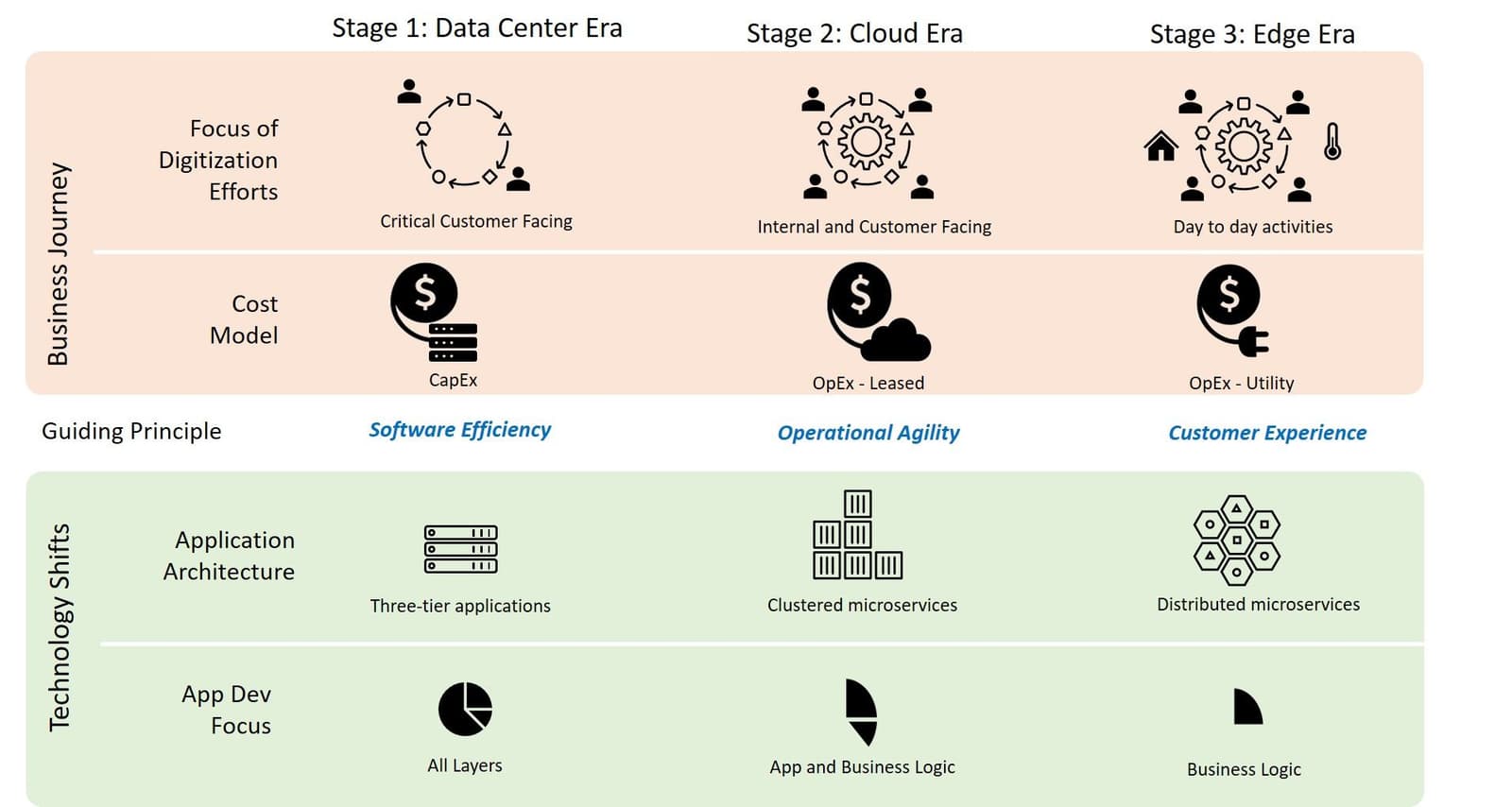

Stage 1: The Data Center Era

Let's start our journey about 20 years back, at a time when the digital delivery of business services (the term "applications" wasn't in the vernacular at the time) came from privately owned and operated data centers. This technical strategy was sufficient and appropriate for that era, largely because only the most critical business operations were "digitized." Some examples of digital business operations in that era were:

- An online retailer would only "digitize" the customer shopping experience and perhaps inventory management.

- An airline would only "digitize" the reservation and payment systems.

- The government would "digitize" high value transactional workflows, such as tax payments.

Only a small number of business workflows, the most important ones, were digitally delivered, and the typical business organization of that period was vertically integrated, with a geographically co-located workforce and centralized control of the organization and its IT infrastructure. It was only natural for this to be reflected in a centrally owned, IT-managed data center running monolithic "applications" that were mostly or entirely developed in-house. Infrastructure, security, and applications (née "business services") formed a single vertically integrated stack. Thus, in a very real sense, the centralized and monolithic technology stack mimicked the organizational and business structures.

Stage 2: The Cloud Era

The next step in the evolution of applications and their technology stack was driven by the expansion of "digitalization" into secondary business workflows. This next step widened the set of digital workflows to include not just customer-facing workflows—which additionally became commoditized, as evidenced by the proliferation of apps in app stores—but also expanded the scope to include operational workflows internal to the organization, often referred to as part of the enterprise digital transformation trend.

Consequently, enterprises were forced to rethink their business organizational strategies. One specific implication was a greater emphasis on cost efficiency and agility, given the business problem of rapidly changing business scale. This biased the payment model towards utility pricing: paying for what is actually used, rather than pre-paying and provisioning for a higher anticipated load that might never materialize. In financial terms, the model for funding application infrastructure increasingly migrated from up-front CapEx to pay-as-you-go OpEx. A concurrent trend in the same timeframe, also related to cost efficiency and agility, was the movement toward a more geographically distributed workforce, which drove a need for more ubiquitous and reliable 24/7 connectivity than had been needed in the past.

The repercussions of these requirements—the digitalization of a much greater number of business workflows, the desire for the flexibility of the OpEx cost model, and the requirement for global 24/7 connectivity—naturally led to an environment that was ripe for the creation of a global network of very large, highly available virtual data centers, the use of which was priced as a utility. And so, the public cloud was born.

Additionally, once the seeds of the public cloud were present, a self-reinforcing positive feedback loop was created. As the public cloud matured as an application platform, it started to subsume much of the lower-level network infrastructure that had previously been managed by traditional enterprise IT. Consequently, the scope of Network Operations teams diminished within many enterprises, with enterprises instead turning their focus toward Application Deployment and Delivery (a.k.a., "DevOps") and Application Security (a.k.a., "SecOps"). Of course, this was not universal; service providers and large enterprises had the need, and capability, to do in-house NetOps for their most critical or sensitive workflows.

This story represented the first phase of the Cloud Era, in which the public cloud could be viewed as a form of an outsourced, time- and resource-shared data center—in other words, the public cloud abstraction was one of Infrastructure-as-a-Service (IaaS).

The next phase of the Cloud Era was driven by two different emergent business insights, both of which required the Phase 1/IaaS paradigm as a prerequisite. The first of these business realizations was enabled by the ability to isolate delivery of the business value from the implementation that delivered the digital workflow. More specifically, businesses could now start to imagine an execution strategy where the lower levels of the technology infrastructure—which were now managed as a service provided by the public cloud—could be decoupled from the business leaders' primary concerns, which were around the enterprise’s value proposition and competitive differentiators.

The second business observation was that, as more of the traditional workflows went digital and were automated, the higher-level business processes could be adjusted and optimized over much shorter time scales. A separate article discusses this effect in more detail, elaborating on how digital workflows evolve from simple task automation through digital optimization (a.k.a., "digital expansion") and eventually ending up in AI-Assisted Business Augmentations. As examples of this trend, workflows as diverse as pricing adjustment, employee time scheduling, and inventory management all benefitted from rapid adaptability, and the term "business agility" was coined.

These two insights resulted in a business epiphany, as enterprises realized it was often more cost-efficient to outsource the areas that were not the business' core competencies. This in turn led to a win-win business arrangement between the enterprise and their cloud provider partners where both sides were motivated to further up-level the services offered by the public cloud, thereby offloading additional technology overhead from the enterprise. This concept was then extended by the public cloud to make available higher-level platform capabilities, such as databases, filesystems, API gateways, and serverless computing platforms, again as services in the public cloud. In addition, public cloud providers also offered to integrate over-the-top services, most commonly in the areas of performance management and security.

The result was that as the cloud era matured past the IaaS model of Phase 1, it ushered in the Cloud Phase 2 paradigms of Platform-as-a-Service (PaaS) and Software-as-a-Service (SaaS). With Cloud Phase 2, most, if not all, of the infrastructure needed to support an application could be outsourced from the enterprise to cloud providers that could optimize the infrastructure at scale and bring a larger team of specialists to focus on any broadly required application service. Although this did free the enterprise to focus its technology budget on core business logic, it often caused an undesirable side effect (from the enterprise's perspective) of "lock-in" to a particular cloud vendor. To mitigate this effect, enterprises strove to define and codify the expression of their core business value using vendor-agnostic technologies, especially in the key areas of APIs and compute models. In practice, this meant REST and gRPC for APIs, backed by a virtualized and containerized compute model, typified by Kubernetes.

Stage 3: Digitalization of Physical Things & Processes

The third, and currently emerging, stage in the progression of "applications" is being driven by the digitalization of the everyday activities we hardly think of as workflows. In contrast to Stages 1 and 2, where it was primarily business processes and a small set of transactional consumer workflows that went online, this stage is about creating digital experiences that are ubiquitous and seamlessly integrated into our everyday "human life" actions. The full set of use cases is still unfolding, but we already see glimpses of the rich and multi-faceted road ahead in emerging use cases that leverage technologies such as augmented reality, automated home surveillance systems, and grid-level power management. These solutions notably interact with the real, physical world, often using smart devices: autonomous vehicles, digital assistants, cameras, and all varieties of smart home appliances.

From a business perspective, this next transition will place a much greater emphasis on the user experience of the digital consumer. This user experience focus, coupled with the observation that devices, not humans, will comprise the majority of direct customers of digital processes, means that the digital consumer's user experience requirements will be much more diverse than they were in the prior stages of the digital evolution. This next transition, from Stage 2 to Stage 3, will be different from the prior one (Stage 1 to Stage 2). Specifically, this next step will not be a simple extrapolated progression of the usual digital experience metrics (i.e., "make it faster and lower latency"), but will instead be about giving the "application" a much broader set of choices on how to make application delivery tradeoffs, allowing the experience to be tailored to the requirements of the digital consumer in the context of the use case being addressed.

From a technologist's perspective, the implication of these business requirements is that the increased diversity of consumption experience requirements will result in the need to construct a correspondingly flexible and adaptable means to specify, trade off, and optimize common application delivery metrics: latency, bandwidth, reliability, and availability. For example, an augmented reality system may require very low latency and high bandwidth but be more tolerant of dropping a small fraction of network traffic. Conversely, a home camera used for an alarm system may require high bandwidth, but tolerate (relatively) longer latency, on the order of seconds. A smart meter may be tolerant of both long latency and low bandwidth, but may require high levels of eventual, if not timely, reliability, so that all energy use is recorded.
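As a rough illustration of what "specifying" these tradeoffs could look like, the three examples above can be written down as per-use-case delivery profiles and checked against a measured delivery path. This is only a sketch: the class names, field names, and every number below are hypothetical values chosen to mirror the examples, not figures from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveryProfile:
    """What one kind of digital consumer needs from the delivery path."""
    max_latency_ms: float      # longest tolerable delay
    min_bandwidth_mbps: float  # lowest acceptable sustained throughput
    max_loss_fraction: float   # share of traffic that may be lost for good


@dataclass(frozen=True)
class PathMeasurement:
    """Observed behavior of one candidate delivery path."""
    latency_ms: float
    bandwidth_mbps: float
    loss_fraction: float
    retransmits_until_delivered: bool  # path stores and resends lost data


# Hypothetical numbers that only mirror the three examples in the text.
PROFILES = {
    "augmented_reality": DeliveryProfile(20, 50, 0.01),    # fast, some loss ok
    "alarm_camera": DeliveryProfile(5000, 10, 0.001),      # seconds of delay ok
    "smart_meter": DeliveryProfile(3_600_000, 0.01, 0.0),  # slow, but lossless
}


def path_satisfies(profile: DeliveryProfile, path: PathMeasurement) -> bool:
    """Return True if the measured path meets every bound in the profile."""
    # A path that retransmits until delivery trades latency for reliability,
    # so nothing is permanently lost on it.
    effective_loss = 0.0 if path.retransmits_until_delivered else path.loss_fraction
    return (
        path.latency_ms <= profile.max_latency_ms
        and path.bandwidth_mbps >= profile.min_bandwidth_mbps
        and effective_loss <= profile.max_loss_fraction
    )


# Two made-up paths: a nearby low-latency link and a slow store-and-forward link.
edge_path = PathMeasurement(10, 100, 0.005, False)
cellular_path = PathMeasurement(800, 0.05, 0.02, True)

for name, profile in PROFILES.items():
    print(name, path_satisfies(profile, edge_path), path_satisfies(profile, cellular_path))
```

With these made-up values, the same fast path that suits augmented reality fails the smart meter, because it drops data it never resends, while the slow store-and-forward path suits only the smart meter. No single "faster is better" metric ranks the two paths for every consumer.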

The design and architecture of a system that is flexible and adaptive enough to meet the needs of this next stage of "digitalization" will require a mechanism by which the many components that comprise the delivery of a digital experience can be easily dispersed, and migrated when needed, across a variety of locations in the application delivery path. The distribution of these application components will need to be tailored to the delivery needs (latency, bandwidth, reliability) driven by the user experience requirements, and systems will need to continuously adapt as the environment and load change. Last, but not least, the security concerns of the application (identity management, protection against malware, breach detection) will need to seamlessly follow the application components as they shift around in the application delivery path.
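To make the idea of dispersing and migrating components slightly more concrete, here is a minimal sketch of one possible placement rule: run a component at the cheapest location that still meets its latency bound, and re-evaluate whenever measurements change. The location names, latencies, costs, and the `place` function are all hypothetical; a real system would also weigh bandwidth, reliability, and security posture.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Location:
    """One candidate spot in the application delivery path (illustrative)."""
    name: str
    latency_ms: float     # measured latency from the consumer to this location
    cost_per_hour: float  # hypothetical relative cost of running a component here


def place(max_latency_ms: float, locations: Sequence[Location]) -> Optional[Location]:
    """Return the cheapest location that meets the latency bound, or None."""
    eligible = [loc for loc in locations if loc.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda loc: loc.cost_per_hour, default=None)


# Made-up measurements for three locations along the delivery path.
current = [
    Location("edge", 8, 3.0),
    Location("cloud", 40, 1.0),
    Location("data_center", 90, 0.5),
]

strict = place(20, current)    # only the edge is close enough
relaxed = place(100, current)  # everything qualifies, so the cheapest wins

# Later, load pushes edge latency up; the same rule yields a new placement.
degraded = [
    Location("edge", 30, 3.0),
    Location("cloud", 40, 1.0),
    Location("data_center", 90, 0.5),
]
after_change = place(50, degraded)

print(strict.name, relaxed.name, after_change.name)
```

Running the same rule against fresh measurements is what turns a one-time deployment decision into continuous adaptation: the component with a 50 ms bound lands in the cloud once the edge no longer offers an advantage worth its cost.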

Leaning in towards the Road Ahead

So, more concretely, what does this mean, relative to where we are today? What it means is this:

- First, because the majority of digital experience consumers will be other applications and digital services, APIs and API management will move even further toward center stage.

- Second, the set of application delivery locations we have today (the data center, cloud, and cloud-delivered services) will not be sufficient to meet the needs of the future. More specifically, in order to deliver richer customer experiences, we will need to be able to deliver aspects of the digital application experience closer to the end user, using technologies such as the intelligent edge, content and compute services in the delivery path, and SD-WAN. We may even need to extend the application delivery path to use client-side sandbox technologies.

- Third, the edge will increasingly be the point of "ingress" into the digital experience delivery, and therefore the edge will take on more importance as the key delivery orchestration point.

- Finally, when it comes to security, not only must it be innate and pervasive, it must also be agnostic to how the application components are dispersed along the application delivery path.

These last three topics will be the focus for the next articles in this series, where we talk about the "Edge" and where it is headed, and, thereafter, what a truly location-agnostic view of security looks like.
