While the standard Kubernetes Ingress resource is great for provisioning and configuring basic Ingress load balancing, it doesn’t include the kind of customization features required to make Kubernetes production‑grade. Instead, non‑NGINX users are left to use annotations, ConfigMaps, and custom templates, which are error‑prone, difficult to use, insecure, and lacking in fine‑grained scoping. NGINX Ingress resources are our answer to this problem.

NGINX Ingress resources are available for both the NGINX Open Source and NGINX Plus-based versions of NGINX Ingress Controller. They provide a native, type‑safe, and indented configuration style which simplifies implementation of Ingress load balancing. In this blog post, we focus on two features introduced in NGINX Ingress Controller 1.11.0 that make it easier to configure WAF and load balancing policies:

- TransportServer resource – The TransportServer resource defines configuration for TCP, UDP, and TLS Passthrough load balancing. We have added health checks, status reporting, and config snippets to enhance TCP/UDP load balancing.

- NGINX Ingress WAF policy – When you deploy NGINX App Protect 3.0 with NGINX Ingress Controller, you can leverage NGINX Ingress resources to apply WAF policies to specific Kubernetes services.

Enhancements to the TransportServer Resource

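NGINX Ingress Controller 1.11.0 extends the TransportServer (TS) resource in the following areas:

- TransportServer snippets

- Health checks

- Support for multiple TS resources with ingressClassName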

Note: The additions to the TransportServer resource in release 1.11.0 are a technology preview under active development. They will be graduated to a stable, production‑ready quality standard in a future release.

TransportServer Snippets

In an earlier release of NGINX Ingress Controller, we introduced config snippets for the VirtualServer and VirtualServerRoute (VS and VSR) resources, which enable you to natively extend NGINX Ingress configurations for HTTP‑based clients. Release 1.11.0 introduces snippets for TS resources, so you can easily leverage the full range of NGINX and NGINX Plus capabilities to deliver TCP/UDP‑based services. For example, you can use snippets to add deny and allow directives that use IP addresses and ranges to define which clients can access a service.
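As a rough sketch of that idea, a TS resource with an access‑control snippet might look like the following. The resource, listener, and Service names and the address ranges are hypothetical. We also assume that snippets are enabled on the Ingress Controller (they are disabled by default) and that the listener is defined in a GlobalConfiguration resource.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: TransportServer
metadata:
  name: dns-tcp              # hypothetical resource name
spec:
  listener:
    name: dns-tcp            # assumed to match a listener in the GlobalConfiguration resource
    protocol: TCP
  # Inserted into the generated server block for this TS resource.
  # NGINX evaluates allow/deny rules in order and stops at the first match.
  serverSnippets: |
    deny  192.168.1.1;
    allow 192.168.1.0/24;
    deny  all;
  upstreams:
  - name: dns-app
    service: dns-service     # hypothetical Kubernetes Service
    port: 5353
  action:
    pass: dns-app
```

With these rules, clients in 192.168.1.0/24 are admitted except for 192.168.1.1, and everyone else is refused.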

Health Checks

To monitor the health of a Kubernetes cluster, NGINX Ingress Controller not only considers Kubernetes probes, which are local to application pods, but also keeps tabs on the status of the network between TCP/UDP‑based upstream services. It does so with passive health checks, which assess the health of transactions in flight, and active health checks (exclusive to NGINX Plus), which probe endpoints periodically with synthetic connection requests.

Health checks can be very useful for circuit breaking and handling application errors. You can customize the health check using parameters in the healthCheck field of the TS resource that set the interval between probes, the probe timeout, delay times between probes, and more.

Additionally, you can set the upstream service and port destination of health probes from NGINX Ingress Controller. This is useful in situations where the health of the upstream application is exposed on a different listener by another process or subsystem which monitors multiple downstream components of the application.
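The health check settings described above could be sketched as follows. The names and values are illustrative only, and the field names reflect the TransportServer upstream schema as we understand it, so check them against the reference documentation for your release. The active healthCheck block takes effect only with NGINX Plus.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: TransportServer
metadata:
  name: dns-tcp              # hypothetical resource name
spec:
  listener:
    name: dns-tcp            # assumed to exist in the GlobalConfiguration resource
    protocol: TCP
  upstreams:
  - name: dns-app
    service: dns-service     # hypothetical Kubernetes Service
    port: 5353
    # Passive health checks: mark an endpoint unavailable after
    # 3 failed connection attempts within 10 seconds.
    maxFails: 3
    failTimeout: 10s
    # Active health checks (NGINX Plus only).
    healthCheck:
      enable: true
      interval: 20s          # time between consecutive probes
      timeout: 30s           # how long to wait for a probe to complete
      jitter: 3s             # random delay added to each interval
      fails: 5               # consecutive failures before marking unhealthy
      passes: 5              # consecutive successes before marking healthy
      port: 8080             # probe a different port than the traffic port
  action:
    pass: dns-app
```

Pointing the probe at port 8080 covers the case where health is reported on a separate listener rather than on the port that carries application traffic.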

Supporting Multiple TransportServer Resources with ingressClassName

When you update and apply a TS resource, it’s useful to verify that the configuration is valid and was applied successfully to the corresponding Ingress Controller deployment. Release 1.11.0 introduces the ingressClassName field and status reporting for the TS resource. The ingressClassName field ensures the TS resource is processed by a particular Ingress Controller deployment in environments where you have multiple deployments.
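A minimal sketch of a TS resource pinned to one deployment is shown here. The class name nginx-internal and the other names are hypothetical; the value must match the Ingress class that the target Ingress Controller deployment is configured to handle.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: TransportServer
metadata:
  name: dns-tcp                      # hypothetical resource name
spec:
  ingressClassName: nginx-internal   # hypothetical class; other deployments ignore this resource
  listener:
    name: dns-tcp
    protocol: TCP
  upstreams:
  - name: dns-app
    service: dns-service             # hypothetical Kubernetes Service
    port: 5353
  action:
    pass: dns-app
```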

To display the status of one or all TS resources, run the kubectl get transportserver command; the output includes state (Valid or Invalid), the reason for the most recent update, and (for a single TS) a custom message.

If multiple TS resources contend for the same host/listener, NGINX Ingress Controller selects the TS resource with the oldest timestamp, which makes the outcome deterministic.

Defining a WAF Policy with Native NGINX App Protect Support

NGINX Ingress resources not only make configuration easier and more flexible, they also enable you to delegate traffic control to different teams and impose stricter privilege restrictions on users that own application subroutes, as defined in VirtualServerRoute (VSR) resources. By giving the right teams access to the right Kubernetes resources, NGINX Ingress resources give you fine‑grained control over networking resources and reduce potential damage to applications if users are compromised or hacked.

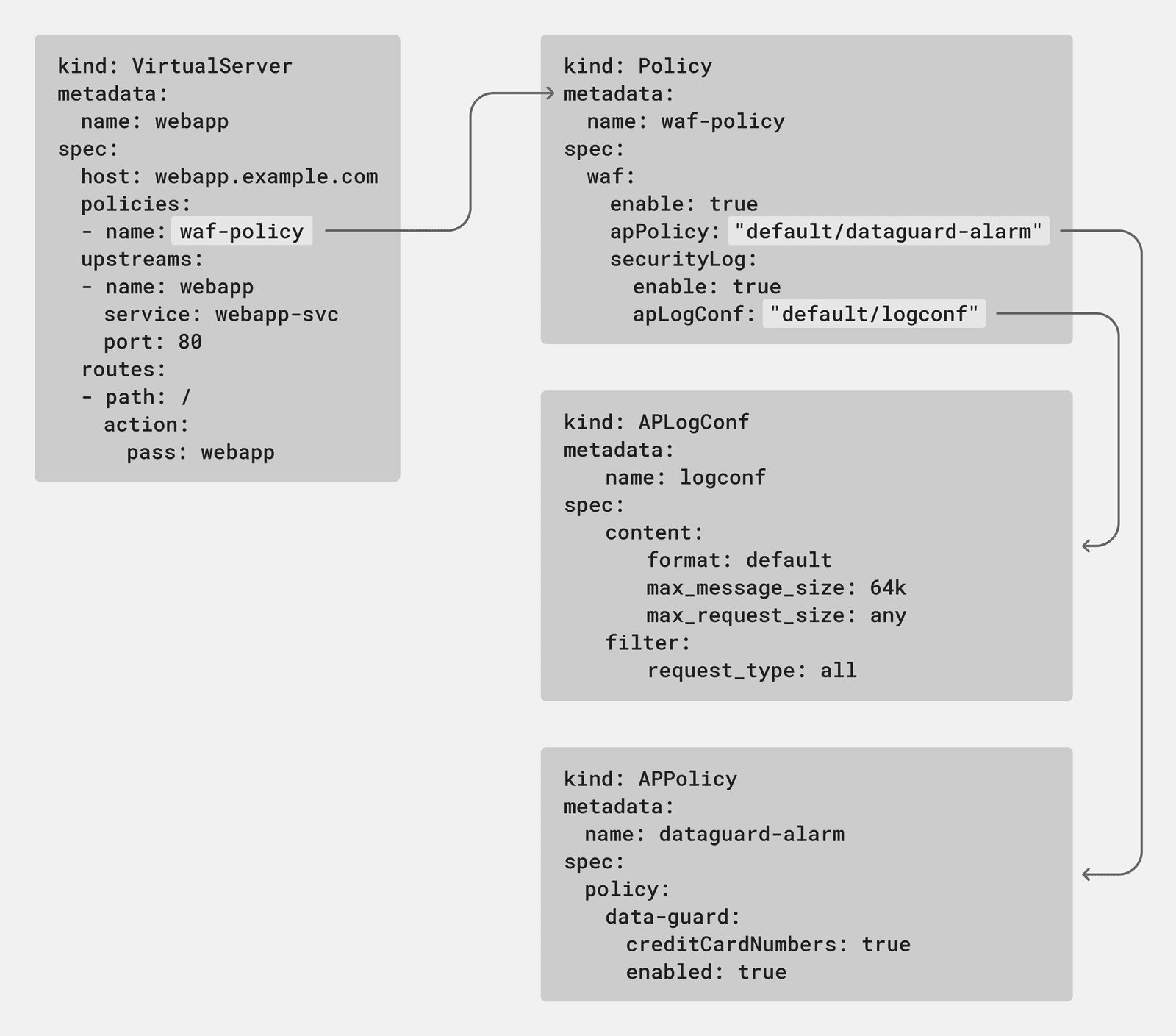

Release 1.11.0 introduces a native web application firewall (WAF) Policy object to extend these benefits to configuration of NGINX App Protect in your Kubernetes deployments. The policy leverages the APLogConf and APPolicy objects introduced in release 1.8.0 and can be attached to both VirtualServer (VS) and VSR resources. This means that security administrators can have ownership over the full scope of the Ingress configuration with VS resources, while delegating security responsibilities to other teams by referencing VSR resources.

In the following example, the waf-prod policy is applied to users being routed to the webapp-prod upstream. To delegate security responsibilities for the /v2 route across namespaces owned by different teams, the route field for that path references a VSR resource.
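A sketch of such a configuration follows. The names waf-prod and webapp-prod come from the description above; the host, Service name, APPolicy and APLogConf references, log destination, and the VSR name test/test are assumptions made for illustration. We also assume the Policy resource is served at apiVersion k8s.nginx.org/v1 in this release; adjust if your cluster differs.

```yaml
apiVersion: k8s.nginx.org/v1
kind: Policy
metadata:
  name: waf-prod
spec:
  waf:
    enable: true
    apPolicy: "default/dataguard-alarm"   # hypothetical APPolicy, as namespace/name
    securityLog:
      enable: true
      apLogConf: "default/logconf"        # hypothetical APLogConf, as namespace/name
      logDest: "syslog:server=syslog-svc.default:514"   # hypothetical syslog target
---
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: webapp
spec:
  host: webapp.example.com                # hypothetical host
  policies:
  - name: waf-prod                        # applies to routes handled by this VS
  upstreams:
  - name: webapp-prod
    service: webapp-svc                   # hypothetical Kubernetes Service
    port: 80
  routes:
  - path: /v1
    action:
      pass: webapp-prod
  - path: /v2
    route: test/test                      # delegate /v2 to a VSR named "test" in namespace "test"
```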

The teams that manage the test namespace can set their own parameters and WAF policies using VSR resources in that namespace.
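One way the delegated VSR resource might look is sketched below. The webapp-test-svc Service and the test namespace are taken from the surrounding text; the VSR name, host, and the waf-test policy are hypothetical and would be defined by the team that owns the namespace.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServerRoute
metadata:
  name: test
  namespace: test
spec:
  host: webapp.example.com      # must match the host of the referencing VS
  upstreams:
  - name: webapp
    service: webapp-test-svc
    port: 80
  subroutes:
  - path: /v2
    policies:
    - name: waf-test            # hypothetical WAF policy owned by the test team
    action:
      pass: webapp
```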

This example separates tenants by namespace and applies a different WAF policy for the webapp-test-svc service in the test namespace. It illustrates how delegating resources to different teams and encapsulating them with objects simplifies testing new functionalities without disrupting applications in production.

What Else is New in Release 1.11.0?

With NGINX Ingress Controller 1.11.0 we continue our commitment to providing a production‑grade Ingress controller that is flexible, powerful, and easy to use. In addition to WAF and TS improvements, release 1.11.0 includes the following enhancements:

- Validation of more annotations – Avert more configuration errors

- Policy status information – Display the status of Policy objects before they are applied to the Ingress configuration

- Istio compatibility – Leverage the advanced capabilities of the NGINX Ingress Controller in an Istio environment

- Cluster‑IP addresses for upstream endpoints – Populate upstream endpoints in the NGINX Ingress Controller configuration with service/cluster‑IP addresses

Validation of More Annotations

Building on the improvements to annotation validation introduced in release 1.10.0, we are now validating the following additional annotations:

| Annotation | Validation |
| --- | --- |
| nginx.org/client-max-body-size | Must be a valid offset |
| nginx.org/fail-timeout | Must be a valid time |
| nginx.org/max-conns | Must be a valid non‑negative integer |
| nginx.org/max-fails | Must be a valid non‑negative integer |
| nginx.org/proxy-buffer-size | Must be a valid size |
| nginx.org/proxy-buffers | Must be a valid proxy buffer spec |
| nginx.org/proxy-connect-timeout | Must be a valid time |
| nginx.org/proxy-max-temp-file-size | Must be a valid size |
| nginx.org/proxy-read-timeout | Must be a valid time |
| nginx.org/proxy-send-timeout | Must be a valid time |
| nginx.org/upstream-zone-size | Must be a valid size |

If the value of the annotation is not valid when the Ingress resource is applied, the Ingress Controller rejects the resource and removes the corresponding configuration from NGINX.
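For illustration, the metadata fragment of an Ingress resource below uses values we would expect to pass these checks. The resource name and the specific values are hypothetical; they follow the size, time, and buffer formats that the corresponding NGINX directives accept.

```yaml
# Fragment of an Ingress resource; only the metadata section is shown.
metadata:
  name: cafe-ingress                          # hypothetical resource name
  annotations:
    nginx.org/client-max-body-size: "4m"      # offset
    nginx.org/proxy-connect-timeout: "30s"    # time
    nginx.org/proxy-read-timeout: "60s"       # time
    nginx.org/proxy-buffers: "8 4k"           # proxy buffer spec: number and size
    nginx.org/proxy-buffer-size: "8k"         # size
    nginx.org/max-fails: "3"                  # non-negative integer
    nginx.org/fail-timeout: "10s"             # time
    nginx.org/upstream-zone-size: "512k"      # size
```

A value such as "-1" for nginx.org/max-fails, by contrast, is not a non‑negative integer and would cause the resource to be rejected.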

Status Information about Policies

The kubectl get policy command now reports the policy’s state (Valid or Invalid), the reason for the most recent update, and (for a single policy) a custom message.

Compatibility with Istio

NGINX Ingress Controller can now be used as the Ingress controller for apps running inside an Istio service mesh. This allows users to continue using the advanced capabilities that NGINX Ingress Controller provides in Istio‑based environments without resorting to workarounds. This integration involves two requirements:

- The injection of an Istio sidecar into the NGINX Ingress Controller deployment

- The sending of only one HTTP Host header to the backend

To satisfy the first requirement, include the following items in the annotations field of your NGINX Ingress Deployment file.
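A sketch of those annotations is shown here, placed on the pod template of the Deployment. The annotation keys are standard Istio sidecar annotations; the outbound IP ranges are placeholders and need to be replaced with the CIDR ranges used in your own cluster.

```yaml
# Fragment of the NGINX Ingress Controller Deployment manifest.
spec:
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "true"                         # inject the Istio sidecar
        traffic.sidecar.istio.io/includeInboundPorts: ""        # no inbound ports go through the sidecar
        traffic.sidecar.istio.io/excludeInboundPorts: "80,443"  # NGINX receives client traffic directly
        # Placeholder ranges; substitute the ranges for your cluster.
        traffic.sidecar.istio.io/excludeOutboundIPRanges: "10.90.0.0/16,10.45.0.0/16"
```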

The second requirement is achieved by a change to the behavior of the requestHeaders field. In previous releases, with the following configuration two Host headers were sent to the backend: $host and the specified value, bar.example.com.
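The configuration in question would look roughly like this route fragment from a VS resource. The path and upstream name are hypothetical; the Host value is the one mentioned above.

```yaml
# Fragment of a VirtualServer spec.
routes:
- path: /coffee                  # hypothetical path
  action:
    proxy:
      upstream: coffee           # hypothetical upstream defined elsewhere in the VS
      requestHeaders:
        set:
        - name: Host
          value: bar.example.com
```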

In release 1.11.0 and later, only the specified value is sent. To send $host, omit the requestHeaders field entirely.

Cluster-IP Addresses for Upstream Endpoints

The upstream endpoints in the NGINX Ingress Controller configuration can now be populated with service/cluster‑IP addresses, instead of the individual IP addresses of pod endpoints. To enable NGINX Ingress Controller to route traffic to Cluster‑IP services, include the use-cluster-ip: true field in the upstreams section of your VS or VSR configuration:
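A minimal sketch of such an upstreams section, with hypothetical upstream and Service names:

```yaml
# Fragment of a VirtualServer or VirtualServerRoute spec.
upstreams:
- name: tea
  service: tea-svc         # hypothetical Kubernetes Service
  port: 80
  use-cluster-ip: true     # route to the Service's cluster IP instead of pod IPs
- name: coffee
  service: coffee-svc      # hypothetical; still populated with pod endpoint IPs
  port: 80
```

Because the setting is per upstream, you can mix cluster‑IP routing and direct pod routing within the same resource.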

Resources

For the complete changelog for release 1.11.0, see the Release Notes.

To try NGINX Ingress Controller for Kubernetes with NGINX Plus and NGINX App Protect, start your free 30-day trial today or contact us to discuss your use cases.

To try NGINX Ingress Controller with NGINX Open Source, you can obtain the release source code, or download a prebuilt container from DockerHub.

For a discussion of the differences between Ingress controllers, check out Wait, Which NGINX Ingress Controller for Kubernetes Am I Using? on our blog.
