There is an old proverb that is very relevant to AI: “a journey of a thousand miles begins with a single step.”

AI applications and ecosystems are taking the world by storm, requiring every organization to assess its readiness. At F5, we see two types of organizations on an AI journey: those developing differentiated solutions through strategic investments, such as self-driving vehicles or large language models (LLMs), and enterprises deploying and optimizing AI for efficiency and organizational innovation. Business leaders are eager to deploy generative AI functionality and apps to increase productivity. Meanwhile, security and risk management leaders are scrambling to establish governance for the safe adoption of emerging AI apps and a burgeoning ecosystem of infrastructure, tools, models, frameworks, and the services needed to deliver, optimize, and secure them. AI factories are emerging as a blueprint for implementing AI-differentiated products and services.

So, is AI hype? A frenzy? A revolution? Or an apocalypse? The future remains to be seen.

If you take a step back and look at the big picture, one thing becomes clear: APIs are the connective tissue of AI apps. In fact, a common theme we discuss with our global customers is “A world of AI is a world of APIs.” APIs are the interfaces we use to train and use AI models. They are also the interfaces that bad actors use to steal data from models, attempt to jailbreak and abuse AI models, and even invert and steal the models themselves. Defenders overlooked the importance of API visibility and security during the move to the cloud and to modern, microservices-based apps. The big “light bulb” moment now is the realization that we cannot secure AI models without securing the interfaces that serve them.

Given the central role of APIs in building and delivering AI-powered applications, it’s critical to design API visibility, security, and governance into the AI development and training process at an early stage. It’s also critical to define normal usage patterns and to build in controls such as rate limits and data sanitization during the design phase. With this approach, safe AI adoption becomes less daunting. API security is imperative to protect AI applications, because generative AI apps are especially dependent on APIs. That is where the AI journey begins. Here are four key assertions for why API security is required for AI security and the safe adoption of AI factories.
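Data sanitization built in at design time can be made concrete with request validation for a hypothetical inference endpoint. The Python sketch below is minimal and rests on assumptions. The field names (`model`, `prompt`, `max_tokens`), the size limits, and the `sanitize_inference_request` and `RequestRejected` names are illustrative only. They are not an F5 or vendor API. The point is that the API contract does the following before anything reaches the model:

- rejects unexpected fields and types;
- strips control characters;
- bounds request size.

```python
import unicodedata

# Hypothetical contract for an inference endpoint; names and limits are illustrative.
ALLOWED_FIELDS = {"model": str, "prompt": str, "max_tokens": int}
MAX_PROMPT_CHARS = 8_000
MAX_OUTPUT_TOKENS = 1_024


class RequestRejected(ValueError):
    """Raised when a request body violates the (hypothetical) API contract."""


def sanitize_inference_request(body: dict) -> dict:
    # Reject fields the contract does not define instead of silently passing them on.
    unknown = set(body) - set(ALLOWED_FIELDS)
    if unknown:
        raise RequestRejected(f"unexpected fields: {sorted(unknown)}")

    clean = {}
    for name, expected in ALLOWED_FIELDS.items():
        if name not in body:
            raise RequestRejected(f"missing field: {name}")
        value = body[name]
        # bool is a subclass of int, so reject it explicitly for integer fields.
        if not isinstance(value, expected) or isinstance(value, bool):
            raise RequestRejected(f"field {name} must be {expected.__name__}")
        clean[name] = value

    # Strip control characters (Unicode category Cc) except newline and tab.
    prompt = "".join(
        ch for ch in clean["prompt"] if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )
    if not prompt.strip() or len(prompt) > MAX_PROMPT_CHARS:
        raise RequestRejected("prompt is empty or too long")
    if not 1 <= clean["max_tokens"] <= MAX_OUTPUT_TOKENS:
        raise RequestRejected("max_tokens out of range")

    clean["prompt"] = prompt
    return clean
```

The sketch rejects unknown fields rather than ignoring them. This keeps the contract explicit, which also makes deviations from normal usage patterns easier to spot.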

Assertion #1: AI apps are the most modern of modern apps

Earlier in our AI factory series, we defined an AI factory as a massive storage, networking, and computing investment serving high-volume, high-performance training and inference requirements. Like traditional manufacturing plants, AI factories leverage pretrained AI models to transform raw data into intelligence. AI apps are simply the most modern of modern apps, heavily connected via APIs and highly distributed.

We start with the premise that an AI app is a modern app, with the same familiar challenges for delivery, security, and operation, and that AI models will radically increase the number of APIs in need of delivery and security.

So, mission accomplished! We got this, right? We know how to securely deliver modern apps. We have well-established application security best practices and understand the intricacies of protecting APIs. We have solutions that provide comprehensive protection and consistent security across distributed environments.

Not so fast! Hang on to your GPTs as we briefly dive into the details.

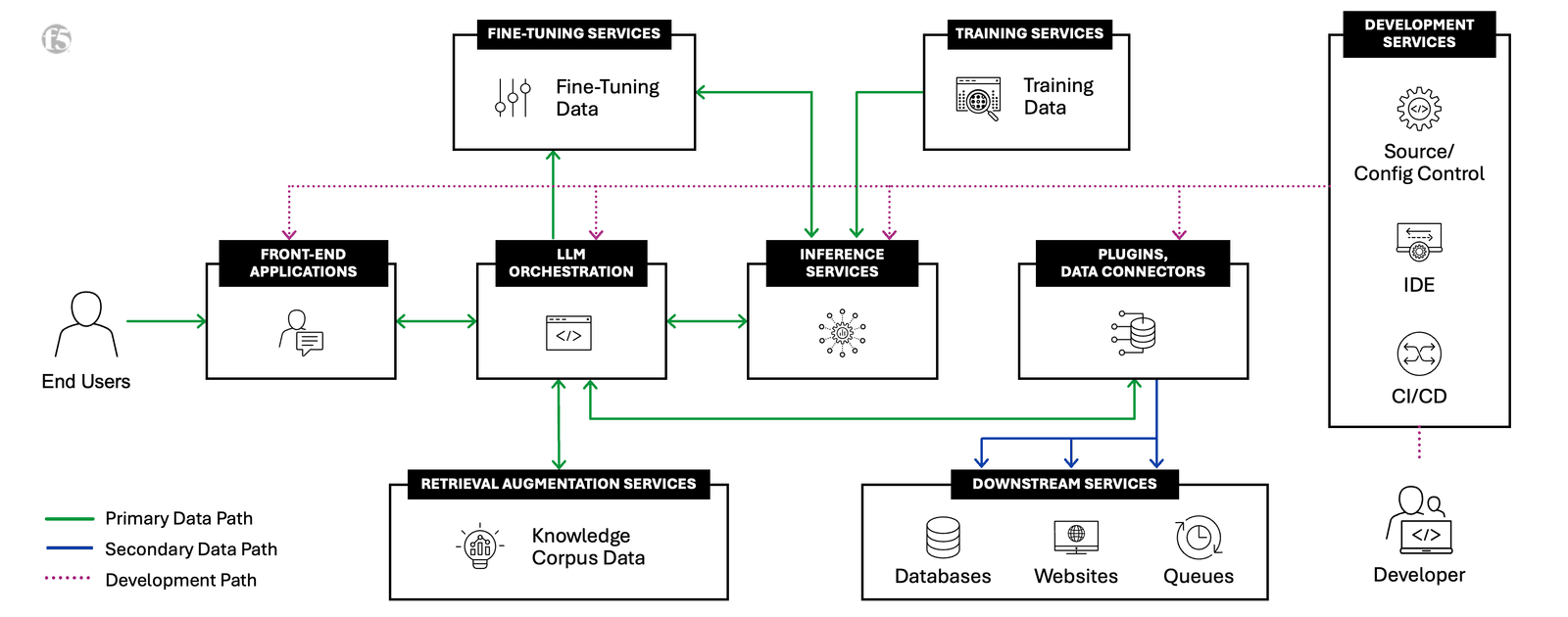

While AI apps share the same risks and challenges as those for modern apps (both of which are essentially API-based systems), AI apps have specific implications—training, fine-tuning, inference, retrieval augmented generation (RAG), and insertion points into AI factories.

So yes, AI apps, like modern apps, have a highly distributed nature, create tool sprawl, and complicate efforts to maintain consistent visibility, uniform security policy enforcement, and universal remediation of threats across hybrid and multicloud architectures. In addition to grappling with this “ball of fire” of risk and complexity, defenders must contend with the random nature of human requests and the highly variable nature of AI-procured content that can lead to unpredictable AI responses, as well as considerations for data normalization, tokenization, embedding, and populating vector databases.

Bringing together data to AI factories creates a holistic and connected enterprise ecosystem, and the integration and interfaces between of all these moving parts requires a robust API security posture.

Today’s enterprises are managing an exploding number of apps and APIs across an increasingly complex hybrid, multicloud environment. At F5, we call the growing risk and complexity the “ball of fire.”

Assertion #2: AI apps are interconnected by APIs

The rise of API-based systems from rapid modernization efforts has led to a proliferation of architectures across data centers, public clouds, and the edge. F5’s annual research has chronicled this trend, including our 2024 State of Application Strategy Report: API Security | The Secret Life of APIs.

AI apps intensify the complexity of hybrid and multicloud architectures. Organizations are rapidly assessing and diligently employing governance strategies for a litany of AI apps and underlying AI models. They ultimately decide on an AI-SaaS, cloud-hosted, self-hosted, or edge-hosted approach, sometimes per use case or per app. For example, some use cases involve training models in the data center and then performing inference at the edge in production.

Connectivity to these models and associated services, such as connections to popular inference services, is largely facilitated through APIs. AI apps bring an exponential rise in API usage, as a single API may ultimately have thousands of endpoints. API calls can also be buried deep inside business logic, outside the purview of security teams. This leads to untenable complexity and serious implications for risk management.

“38% of organizations now have apps deployed across six environments.” (2024 State of Application Strategy Report)

It turns out cloud did not eat the world, but APIs did. And AI is going to eat everything. Our blog post Six Reasons Why Hybrid, Multicloud Is the New Normal for Enterprises describes how a highly distributed environment provides flexibility in deploying AI apps and associated services. That flexibility helps ensure performance, security, efficiency, and accuracy. This flexibility is especially important given data gravity concerns. It’s no surprise that 80% of organizations run AI apps in the public cloud and 54% run them on-premises. Regardless of the approach, every facet of the architecture must be secured, and protecting APIs is the first place to start.

“The top security service planned for protecting AI models is API security.” (Three Things You Should Know about AI Applications)

Assertion #3: AI apps are subject to both known and novel risks

Protecting the APIs that are gateways into AI application functionalities and data exchanges from security risks is crucial. While the same vulnerability exploits, business logic attacks, abuse from bots, and denial-of-service (DoS) risks apply, there are additional considerations for new and modified insertion points within AI factories and risks to LLMs through natural language processing (NLP) interfaces, plugins, data connectors, and downstream services—during training, fine-tuning, inference, and RAG. Plus, known attacks like DoS have additional considerations; mitigations for volumetric DDoS and L7 application DoS do not completely offset the risks of model DoS. AI apps also have an explainability paradox, underscoring the importance of traceability to help offset hallucinations, bias, and disinformation.
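Request-count limits fall short against model DoS partly because requests differ widely in cost. A single request with a long prompt and a large output allowance can consume far more inference capacity than many small ones. The sketch below is a minimal, hypothetical illustration. Instead of counting requests, it meters estimated tokens per client over a sliding window. The `TokenBudget` class, its limits, and its cost formula are assumptions. Counting whitespace-separated words is only a crude stand-in for a real tokenizer.

```python
from collections import deque


class TokenBudget:
    """Hypothetical sliding-window budget on estimated model tokens per client."""

    def __init__(self, max_tokens: int, window_s: float) -> None:
        self.max_tokens = max_tokens
        self.window_s = window_s
        # client_id -> deque of (timestamp, charged_tokens), oldest first
        self.history: dict[str, deque] = {}

    @staticmethod
    def estimate_cost(prompt: str, max_output_tokens: int) -> int:
        # Crude proxy: whitespace-separated words stand in for tokenizer output,
        # plus the full output allowance the client is requesting.
        return len(prompt.split()) + max_output_tokens

    def admit(self, client_id: str, prompt: str, max_output_tokens: int, now: float) -> bool:
        events = self.history.setdefault(client_id, deque())
        # Drop charges that have aged out of the window.
        while events and now - events[0][0] >= self.window_s:
            events.popleft()
        cost = self.estimate_cost(prompt, max_output_tokens)
        used = sum(charged for _, charged in events)
        if used + cost > self.max_tokens:
            return False  # over budget: reject without charging
        events.append((now, cost))
        return True
```

A single oversized request can be rejected even though the client has sent only one request. A pure requests-per-second limit would not catch it.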

And attackers are not sitting idle. Generative AI democratizes attacks and increases the sophistication of cybercriminal and nation state campaigns. LLM agents can now autonomously hack web apps and APIs—for example, performing tasks as complex as blind database schema extraction and SQL injections without needing to know the vulnerability beforehand.

Novel AI risks are generating a ton of buzz, but the importance of API security for AI factories cannot be overstated. It is urgent. The increased API surface for AI apps is staggering. Every CISO needs to incorporate API security into AI governance programs. A core mantra of any CISO survival guide should be “you can’t protect what you cannot see.” If you do not have an inventory of the interfaces behind your AI apps, you have an incomplete threat model and gaps in your security posture.
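“You can’t protect what you cannot see” can be put into practice by comparing the endpoints observed in traffic against the documented inventory. This minimal sketch makes two assumptions:

- The inventory is a set of `(method, path-template)` pairs, for example extracted from OpenAPI paths such as `/v1/models/{model_id}`.
- The observed paths come from gateway logs with query strings already removed.

Anything that matches no documented template is flagged as a potential shadow endpoint. The function names and sample endpoints are hypothetical.

```python
import re


def template_to_regex(template: str) -> re.Pattern:
    """Compile '/v1/models/{model_id}' so each {param} matches exactly one path segment."""
    pieces = re.split(r"(\{[^/{}]+\})", template)
    body = "".join(
        "[^/]+" if piece.startswith("{") and piece.endswith("}") else re.escape(piece)
        for piece in pieces
    )
    return re.compile(body)


def find_shadow_endpoints(
    documented: set[tuple[str, str]],
    observed: list[tuple[str, str]],
) -> set[tuple[str, str]]:
    """Return observed (METHOD, path) pairs that match no documented endpoint."""
    compiled = [(method.upper(), template_to_regex(path)) for method, path in documented]
    shadow: set[tuple[str, str]] = set()
    for method, path in observed:
        method = method.upper()
        if not any(method == doc_method and rx.fullmatch(path) for doc_method, rx in compiled):
            shadow.add((method, path))
    return shadow
```

The method is part of the match on purpose. An undocumented `DELETE` on an otherwise documented path is still a gap in the inventory.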

Assertion #4: Defenders must adapt

AI applications are dynamic, variable, and unpredictable. Safe adoption of AI apps requires greater emphasis on efficacy, robust policy creation, and automated operations. Inspection technologies will play a critical and evolving role in security.

API security within AI apps and ecosystems raises specific needs. Organizations will need to offset the increased likelihood of unsafe usage of third-party APIs. They can do so by architecting security for outbound API calls and related use cases, in addition to the inbound security controls that are largely in place. Bot management solutions cannot rely on “human” or “machine” identification alone, given the rise of inter-service and East-West traffic, such as traffic from RAG workflows. The supply chain of LLM applications can also be vulnerable. Training data, models, and deployment platforms are often sourced from third parties and can be manipulated through tampering or poisoning attacks, which threatens their integrity. Security controls therefore need to be implemented everywhere: in the data center, across clouds, at the edge, and from code through testing into production.
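Security for outbound API calls can start with an egress allowlist that is checked before an AI app calls a third-party service. The sketch below is a minimal illustration. It assumes HTTPS-only egress and exact, lowercase hostname matching. `EgressDenied`, `guarded_call`, and the injected `send` callable are hypothetical names. A production control would typically also be enforced at the network or gateway layer, not only in application code.

```python
from typing import Callable, TypeVar
from urllib.parse import urlsplit

T = TypeVar("T")


class EgressDenied(PermissionError):
    """Raised when an outbound call targets a host that is not allowlisted."""


def is_egress_allowed(url: str, allowed_hosts: frozenset[str]) -> bool:
    parts = urlsplit(url)
    # Only encrypted egress is permitted in this sketch.
    if parts.scheme != "https":
        return False
    # .hostname drops userinfo and port and lowercases the host, so
    # "https://trusted.example@evil.example/" is judged by "evil.example".
    # Allowlist entries are therefore expected to be lowercase.
    host = parts.hostname or ""
    return host in allowed_hosts


def guarded_call(url: str, allowed_hosts: frozenset[str], send: Callable[[str], T]) -> T:
    """Check the destination first, then delegate to a hypothetical HTTP sender."""
    if not is_egress_allowed(url, allowed_hosts):
        raise EgressDenied(f"outbound call blocked: {url}")
    return send(url)
```

Injecting `send` keeps the check independent of any particular HTTP client, so the same policy can wrap whichever client the app already uses.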

AI Gateway technology will play a key role in helping security and risk teams mitigate risks to LLMs, such as prompt injection and sensitive information disclosure. But the AI security journey begins with securing the APIs that connect AI apps and AI ecosystems, making security intrinsic throughout the AI app lifecycle.

API security within an AI reference architecture

Safe adoption of AI apps and AI factories is contingent on API security. That includes protecting interactions between a front-end application and an inference service API. It includes inspecting user interactions with AI-SaaS apps. And it includes protecting connectivity across plugins, data connectors, and downstream services.

The F5 platform provides continuous API defense and a consistent security posture, and it allows business leaders to innovate with confidence by giving security and risk teams the capabilities they need to safely build AI factories.

The F5 AI Reference Architecture

F5’s focus on AI doesn’t stop here—explore how F5 secures and delivers AI apps everywhere.

Interested in learning more about AI factories? Explore other posts in our AI factory blog series:

- What is an AI Factory?
- Retrieval-Augmented Generation (RAG) for AI Factories
- Optimize Traffic Management for AI Factory Data Ingest
- Optimally Connecting Edge Data Sources to AI Factories
- Multicloud Scalability and Flexibility Support AI Factories
- The Power and Meaning of the NVIDIA BlueField DPU for AI Factories
- The Importance of Network Segmentation for AI Factories
- AI Factories Produce the Most Modern of Modern Apps: AI Apps
