As organizations embrace modern application delivery at scale, many face overwhelming configuration demands and operational complexities across their deployments. In December 2024, we announced a new AI assistant for F5 Distributed Cloud Services to reduce the complexity and effort involved in delivering, securing, and operating applications. To further our mission of making at-scale app security and delivery ridiculously easy, F5 is introducing the AI assistant for F5 NGINX One. Powered by a large language model trained specifically for NGINX, the AI assistant offers real-time value and contextually aware guidance for DevOps, SecOps, NetOps, and Platform Ops teams alike.

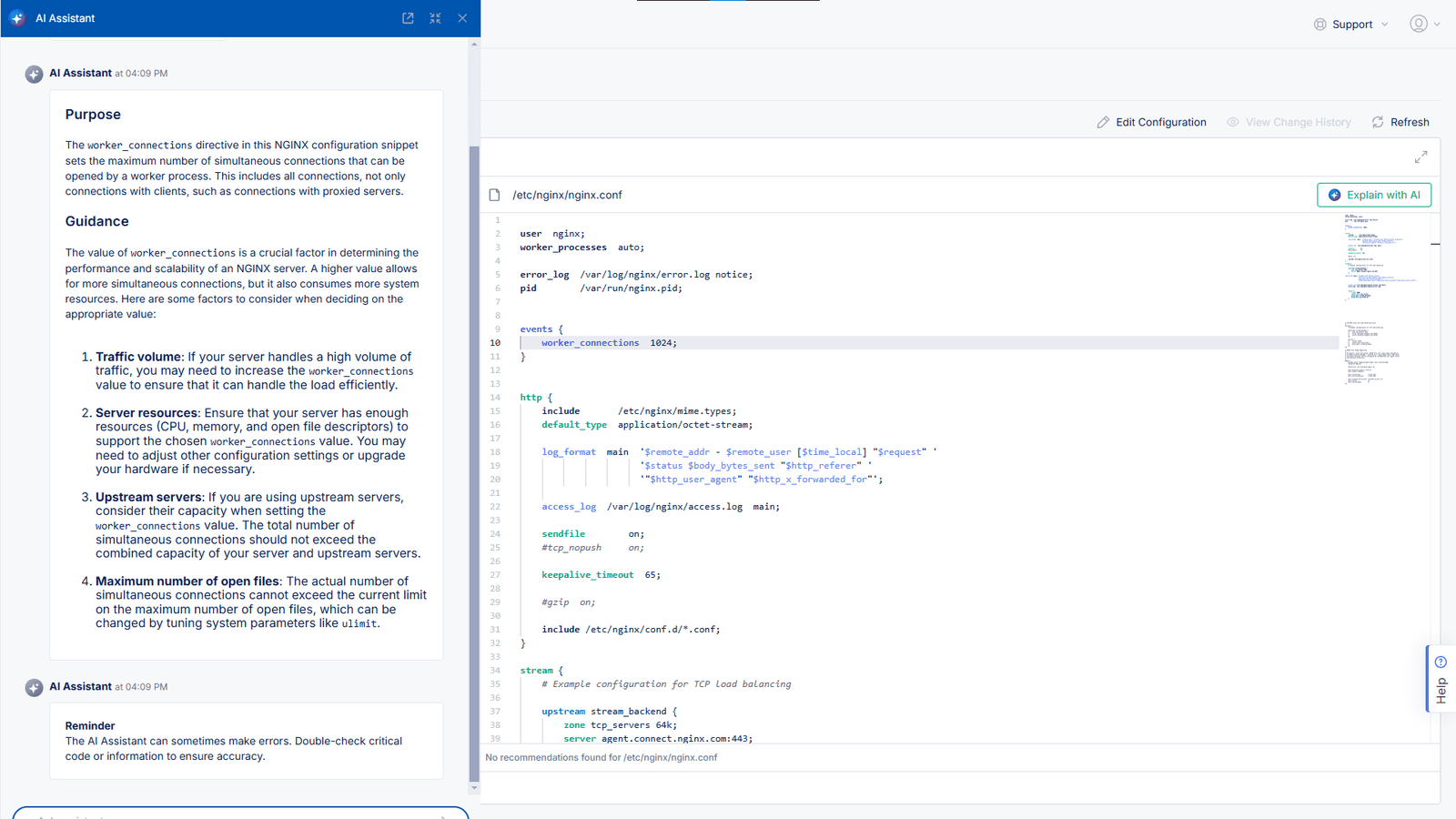

The AI assistant provides intelligent insight into the worker_connections directive in an NGINX configuration.

F5 NGINX offers highly flexible configuration options, but with that flexibility comes a risk of error and a burden of manual research. The AI assistant eliminates friction by providing answers from the NGINX documentation and context-aware recommendations for daily workflows. Instead of sifting through scattered resources, teams can rely on precise, step-by-step guidance that trims hours from troubleshooting and routine maintenance.

Driving both security and efficiency

Organizations require resilient security and consistent insight. The AI assistant reduces operational complexity and boosts ROI by helping teams address threats preemptively and reach value faster. Networking teams can use its guidance to configure and optimize application delivery and to identify anomalies before they affect production. Ultimately, the AI assistant helps unify previously siloed efforts, streamlining tasks and reducing time-to-resolution across the entire environment.

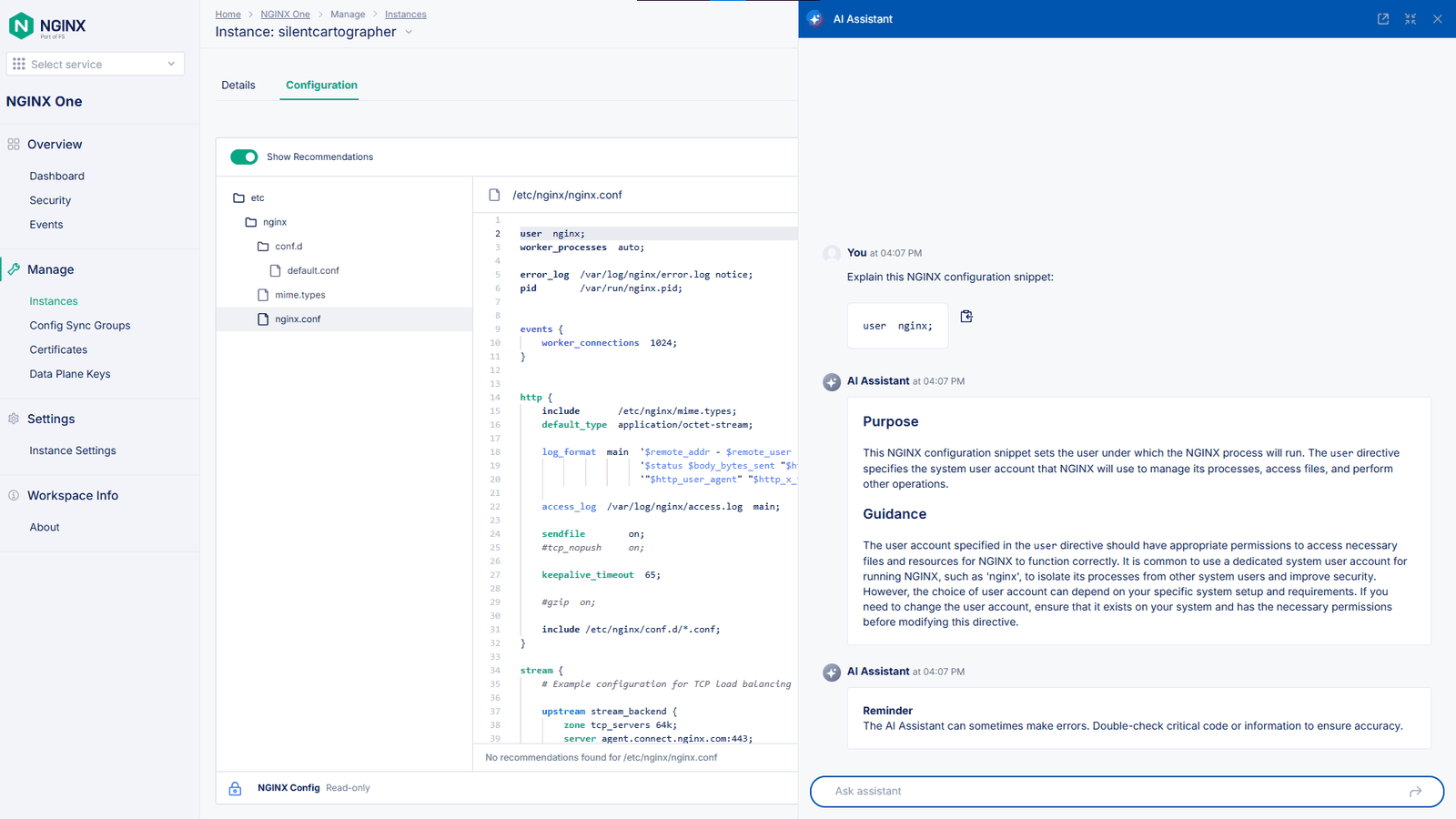

A user asks the AI assistant in the NGINX One Console to explain an NGINX configuration snippet.

Unleashing proactive operations

Security professionals value how quickly they can validate configurations or verify that security best practices are met. Instead of digging into multiple tools or combing through documentation, they get immediate insight into potential misconfigurations and can fix them on the spot. Meanwhile, DevOps teams can focus more on building and deploying innovative applications by offloading routine configuration tasks to the AI assistant. This intuitive support accelerates development cycles without compromising stability or security.

Reducing complexity across the board

One of the biggest pitfalls of any large-scale NGINX deployment is the fragmentation of information: guidance on directives and advanced features can be scattered across archives, outdated guides, and community posts. The AI assistant centralizes this data and surfaces it as actionable intelligence. Drawing on its NGINX-trained large language model, the assistant tailors best-practice suggestions, speeding up repetitive operations and improving overall reliability.

The AI assistant in the NGINX One Console explains an NGINX configuration snippet, providing background and guidance in a natural language interface.

Begin using the AI assistant today

Current F5 NGINX One customers can access the AI assistant directly from the NGINX One Console. Those new to NGINX One can explore the power and added workflow efficiency of the AI assistant with an NGINX One trial and see how NGINX One empowers enterprises to optimize, scale, and secure apps in any environment.

Empowering your teams

Whether you’re optimizing high-traffic applications or tightening security posture, the AI assistant within the NGINX One Console reduces the time required for manual processes. By replacing guesswork with context-rich insights, it cuts overhead and helps ensure consistent performance. From technology leaders looking for strategic tools for their teams to networking, security, developer, and platform operations teams seeking operational excellence, the AI assistant provides a unifying solution.

F5’s focus on AI doesn’t stop here—explore how F5 secures and delivers AI apps everywhere.
