Digital transformation is a business journey accompanied by a technology evolution. In the course of that journey, digital business is enabled by an operational move from manual methods to automated execution.

While security-related technologies may be at the forefront of this automation due to the constant evolution of attackers, operations are not all that far behind. Consider, for example, our reliance on auto-scaling to expand digital capabilities on demand. At one time there was contentious debate over whether external systems should manage capacity for digital assets.

Seriously.

Today, this isn’t even a question. We not only accept but expect auto-scaling capabilities as part of our infrastructure stack.

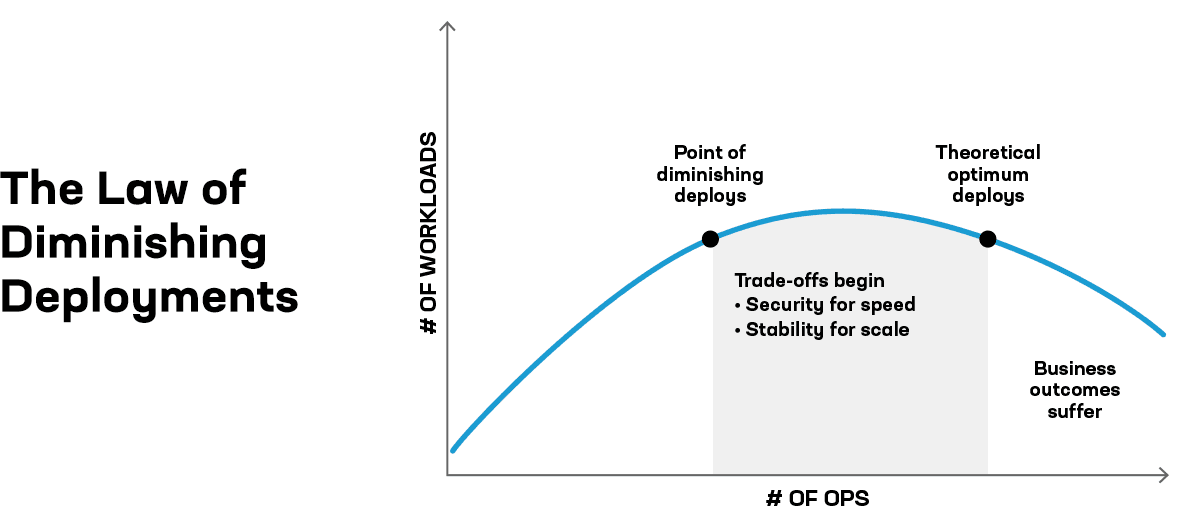

So it seems quite logical to assume that greater automation will eventually be embraced as table stakes. At some point, manually managing the resources that deliver and secure digital experiences will hit diminishing returns, and that will demand a shift to trusting technology.

We know there is value in an infrastructure as code (IaC) approach. Our research showed significant benefits in terms of deployment frequency based on the adoption of IaC. More than half (52%) of organizations treat infrastructure as code, and those that do are more than twice as likely to deploy more frequently. Even more valuable, they are four times as likely to have fully automated application deployment pipelines.

That’s an important relationship to note, as it becomes a critical capability for organizations that want to reap the business benefits of adaptive applications.

Event-Driven Infrastructure as Code

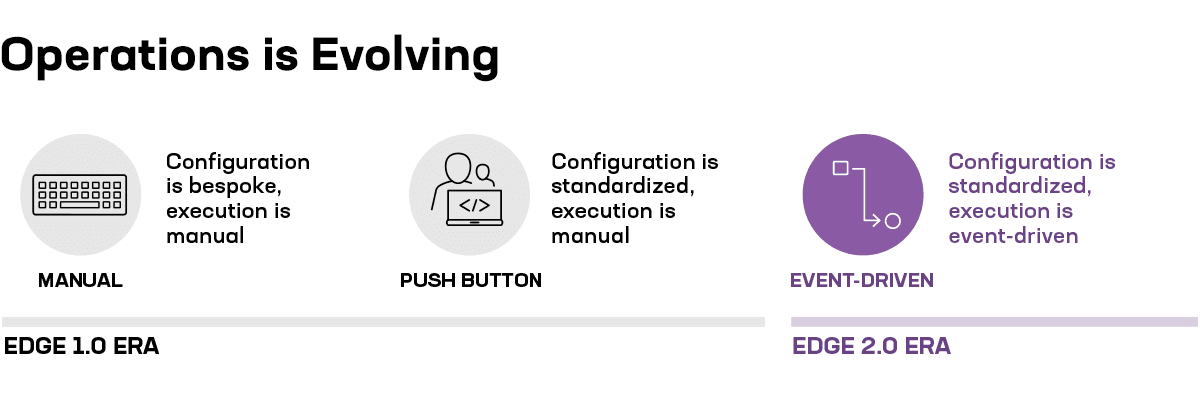

The difference between infrastructure as code and event-driven infrastructure as code is essentially what triggers a deployment.

Most organizations move from manual methods to automation with infrastructure as code but still retain control over deployments. That is, an operator is still required to trigger a deployment. It’s a push-button deployment.

With an event-driven approach, the trigger is automated based on an event. Consider auto-scale, again, as an example. The actual deployment of the configuration changes and additional workloads is triggered by an event, often when the number of concurrent connections has surpassed some pre-determined threshold. That event—going over a defined limit—triggers an automated workflow.
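To make the auto-scale example concrete, here is a minimal sketch of a threshold trigger, assuming a metric that reports concurrent connections. The names `ThresholdTrigger` and `scale_out` and the limit of 1000 are illustrative assumptions, not part of any particular product; a real workflow would call a deployment pipeline rather than append to a list.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ThresholdTrigger:
    """Fires a workflow once each time a metric rises above a limit."""

    limit: int
    workflow: Callable[[int], None]
    armed: bool = True  # re-armed only after the metric drops back to the limit or below

    def observe(self, concurrent_connections: int) -> bool:
        """Record one measurement; return True if the workflow was triggered."""
        if concurrent_connections > self.limit and self.armed:
            self.armed = False
            self.workflow(concurrent_connections)
            return True
        if concurrent_connections <= self.limit:
            self.armed = True
        return False


deployments: List[str] = []


def scale_out(observed: int) -> None:
    # Hypothetical stand-in for the automated deployment workflow.
    deployments.append(f"scale-out at {observed} connections")


trigger = ThresholdTrigger(limit=1000, workflow=scale_out)
fired = [trigger.observe(n) for n in (800, 1001, 1200, 900, 1500)]
```

The `armed` flag is a design choice in this sketch: it keeps the workflow from firing on every measurement while connections stay above the limit, so one crossing produces one deployment.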

Imagine now, if you will, that this process is expanded to include an entire application. That means all the workloads and associated technology services that deliver and secure it. The event is now performance falling below what defines an acceptable digital experience. That event signifies a need to deploy, automatically, an "application" on the other side of the globe and triggers an automated workflow that does that—in a completely remote location.

This is not (computer) science fiction. Entire applications, including their workloads, infrastructure, and supporting services, are often deployed this way to a public cloud via orchestration tools like Terraform. Configuration artifacts are pulled from a repository, containers from a library, and secrets (certificates and keys) from a secure vault. Automatically. This is the essence of infrastructure as code: configurations, policies, and secrets are treated like code artifacts to enable the automation of the deployment pipeline.
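As a rough illustration of treating configurations, containers, and secrets as artifacts, the sketch below assembles a deployment plan from three sources. Everything here is assumed for the example: the dictionaries are in-memory stand-ins for a repository, a container library, and a vault, and `DeploymentPlan`, `build_plan`, the application name, and the region are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical in-memory stand-ins for the three sources named above.
CONFIG_REPO: Dict[str, Dict[str, object]] = {
    "storefront": {"replicas": 3, "waf_policy": "strict"},
}
CONTAINER_LIBRARY: Dict[str, List[str]] = {
    "storefront": ["storefront-web:1.4", "storefront-api:2.1"],
}
SECRET_VAULT: Dict[str, Dict[str, str]] = {
    # Only references are held here; the secret values stay in the vault.
    "storefront": {"tls_cert": "vault-ref/storefront/tls-cert"},
}


@dataclass(frozen=True)
class DeploymentPlan:
    app: str
    region: str
    config: Dict[str, object]
    images: List[str]
    secret_refs: Dict[str, str]


def build_plan(app: str, region: str) -> DeploymentPlan:
    """Gather every artifact for an application, or fail if any is missing."""
    sources = {
        "config": CONFIG_REPO,
        "images": CONTAINER_LIBRARY,
        "secrets": SECRET_VAULT,
    }
    missing = [name for name, store in sources.items() if app not in store]
    if missing:
        raise KeyError(f"{app}: missing artifacts: {', '.join(missing)}")
    return DeploymentPlan(
        app=app,
        region=region,
        config=dict(CONFIG_REPO[app]),
        images=list(CONTAINER_LIBRARY[app]),
        secret_refs=dict(SECRET_VAULT[app]),
    )


plan = build_plan("storefront", "ap-southeast")
```

Because the plan is built entirely from stored artifacts, the same call can target any region without an operator retyping configuration, which is what makes an automated trigger practical.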

What isn’t automated today is the trigger. The event now is "operator pushed the button/typed the command." The event in the future will become the time of day, the demand in a particular location, the performance for a geographic region.
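One way to picture that shift is to treat the operator's command as just another event alongside time of day, regional demand, and regional performance. The sketch below is an assumption-laden illustration: the event kinds, the thresholds (business hours, 5000 requests, 250 ms), and the region names are all invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Event:
    kind: str
    region: str
    value: float = 0.0


# Hypothetical rules: each maps an event kind to a "should we deploy?" test.
RULES: Dict[str, Callable[[Event], bool]] = {
    "operator_command": lambda e: True,               # today's trigger
    "time_of_day": lambda e: 8 <= e.value < 18,       # hour of day, local time
    "regional_demand": lambda e: e.value > 5000,      # requests per second
    "regional_latency_ms": lambda e: e.value > 250,   # observed latency
}


def should_deploy(event: Event) -> bool:
    """Unknown event kinds never trigger a deployment."""
    rule = RULES.get(event.kind)
    return rule is not None and rule(event)


def regions_to_deploy(events: List[Event]) -> List[str]:
    """Return each region that needs a deployment, in first-seen order."""
    regions: List[str] = []
    for event in events:
        if should_deploy(event) and event.region not in regions:
            regions.append(event.region)
    return regions


events = [
    Event("operator_command", "us-east"),
    Event("time_of_day", "eu-west", 3),
    Event("regional_demand", "ap-south", 7200),
    Event("regional_latency_ms", "sa-east", 310),
    Event("regional_latency_ms", "us-east", 120),
    Event("unknown_signal", "af-south", 1),
]
selected = regions_to_deploy(events)
```

In this framing, moving from push-button to event-driven deployment means adding rules to the table rather than changing the deployment workflow itself.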

This is a significant part of what will make applications adaptive in the future: the ability to react automatically to events and adapt location, security, and capacity to meet service-level objectives. Edge 2.0, with its unified control plane, will be the way that businesses can use resources across multiple clouds, edge, and the data center to achieve that goal.

Event-driven infrastructure as code will be a critical capability for bringing the benefits of adaptive applications to the business.
