For enterprises delivering Internet and extranet applications, TCP/IP inefficiencies, coupled with the effects of WAN latency and packet loss, conspire to adversely affect app performance. The result? Inflated response times for applications, and significantly reduced bandwidth utilization efficiency (the ability to "fill the pipe").
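The "fill the pipe" problem can be made concrete with a little arithmetic. The sketch below is not taken from F5 material; it assumes the standard bound that a single TCP connection can have at most one window of data in flight per round trip, and the function names and sample numbers are purely illustrative.

```python
def window_limited_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput when one window is in flight per round trip."""
    return window_bytes * 8 / rtt_seconds


def bandwidth_delay_product_bytes(link_bps: float, rtt_seconds: float) -> float:
    """Bytes that must be in flight to keep the link continuously busy."""
    return link_bps * rtt_seconds / 8


def pipe_utilization(window_bytes: int, rtt_seconds: float, link_bps: float) -> float:
    """Fraction of the link one connection can fill, capped at 1.0."""
    ceiling = window_limited_throughput_bps(window_bytes, rtt_seconds)
    return min(1.0, ceiling / link_bps)


if __name__ == "__main__":
    # Illustrative values only (assumptions, not F5 test data):
    # a 65,535-byte window (the largest possible without window scaling),
    # a 100 ms round-trip time, and a 100 Mbps link.
    window, rtt, link = 65_535, 0.100, 100e6
    print(f"throughput ceiling: {window_limited_throughput_bps(window, rtt) / 1e6:.2f} Mbps")
    print(f"window needed to fill the link: {bandwidth_delay_product_bytes(link, rtt):,.0f} bytes")
    print(f"utilization: {pipe_utilization(window, rtt, link):.1%}")
```

Under these assumed numbers the connection tops out at roughly 5 percent of the link, which is why latency, window size, and loss recovery matter as much as raw bandwidth.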

F5's BIG-IP Local Traffic Manager provides a state-of-the-art TCP/IP stack that delivers dramatic WAN and LAN application performance improvements on real-world networks, rather than in packet-blasting test harnesses that don't accurately model actual client and Internet conditions.

This highly optimized TCP/IP stack, called TCP Express, combines several cutting-edge TCP/IP techniques with improvements from the latest RFCs. Numerous improvements and extensions developed by F5 minimize the effects of congestion, packet loss, and loss recovery. Independent testing tools and customer experiences show that TCP Express delivers up to a 2x performance gain for end users and a 4x improvement in bandwidth efficiency, with no change to servers, applications, or client desktops.

F5's TCP Express is a standards-based, state-of-the-art TCP/IP stack that leverages optimizations natively supported in various client and server operating systems, as well as optimizations that are not operating-system specific. F5's TCP/IP stack contains hundreds of improvements that affect both WAN and LAN efficiencies, including:

At the heart of the BIG-IP Local Traffic Manager is the TMOS architecture that provides F5's optimized TCP/IP stack to all BIG-IP platforms and software add-on modules. These unique optimizations, which extend to clients and servers in both LAN and WAN communications, place F5’s solution ahead of packet-by-packet systems that can’t provide comparable functionality—nor can they approach these levels of optimization, packet loss recovery, or intermediation between suboptimal clients and servers.

The combination of F5's TMOS full proxy architecture and TCP Express dramatically improves performance for all TCP-based applications. Using these technologies, BIG-IP has been shown to:

The following sections describe the TMOS enabling architecture, as well as a subset of the standard TCP RFCs and optimizations that TCP Express uses to optimize traffic flows. Because there is no one-size-fits-all solution, this paper also describes how to customize TCP profiles and handle communications with legacy systems.

Most organizations don't update server operating systems often, and some applications continue to run on very old systems. This legacy infrastructure can be the source of significant delays for applications as they are delivered over the WAN. The BIG-IP Local Traffic Manager with TCP Express can shield and transparently optimize older or non-compliant TCP stacks that may be running on servers within a corporate data center. This is achieved by maintaining compatibility with those devices, while independently leveraging F5's TCP/IP stack optimizations on the client side of a connection—providing fully independent and optimized TCP behavior to every connected device and network condition.

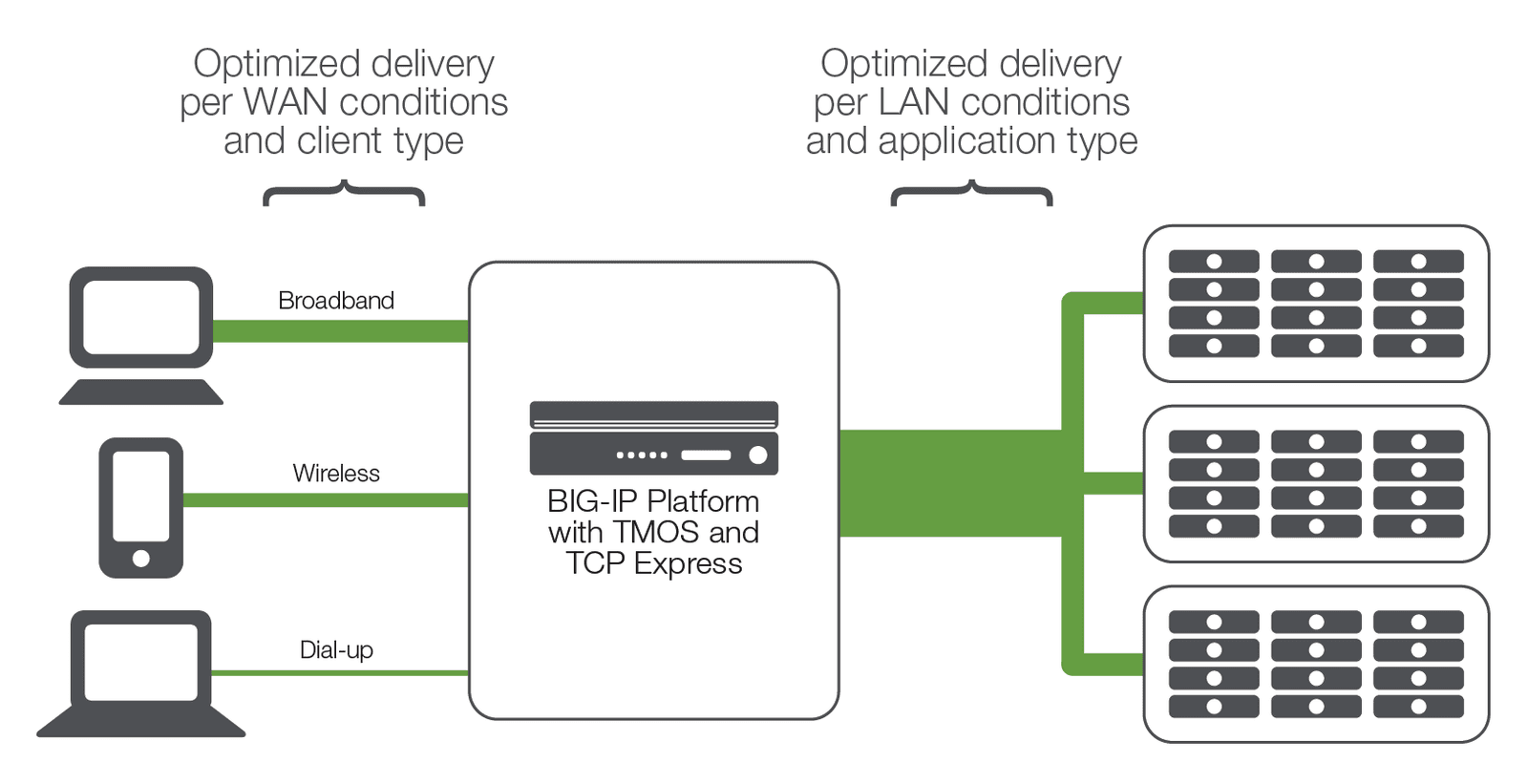

As a full proxy that bridges various TCP/IP stacks, TMOS is a key enabler to many of the WAN optimizations included in F5's unique TCP Express feature set. Client and server connections are isolated, controlled, and independently optimized to provide the best performance for every connecting device.

The BIG-IP Local Traffic Manager eliminates the need for clients and servers to negotiate the lowest common denominator for communications. It intermediates on behalf of the client (called Stack Brokering) and uses TCP Express to optimize client-side delivery while maintaining server-optimized connections on the inside of the network as shown in the following figure.
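The "lowest common denominator" effect can be illustrated with a toy model. The sketch below is an assumption-laden simplification, not F5's implementation: it treats each stack as a set of optional TCP features (the option names are illustrative) and assumes a feature is used on a connection only when both endpoints of that connection support it.

```python
# Hypothetical feature set for a fully capable proxy stack (illustrative names).
PROXY_FEATURES = frozenset({"sack", "window_scaling", "timestamps"})


def negotiate(side_a, side_b):
    """Features usable on one TCP connection: only those both ends support."""
    return frozenset(side_a) & frozenset(side_b)


def end_to_end(client, server):
    """Without a proxy, client and server share a single connection."""
    return negotiate(client, server)


def brokered(client, server, proxy=PROXY_FEATURES):
    """With a full proxy, each side negotiates separately with the proxy."""
    return negotiate(client, proxy), negotiate(proxy, server)


if __name__ == "__main__":
    modern_client = {"sack", "window_scaling", "timestamps"}
    legacy_server = set()  # assumed: an old stack with no optional features

    print("direct:", sorted(end_to_end(modern_client, legacy_server)))
    client_side, server_side = brokered(modern_client, legacy_server)
    print("client side via proxy:", sorted(client_side))
    print("server side via proxy:", sorted(server_side))
```

In this model the direct connection ends up with no optional features, while the brokered client side keeps everything the client supports and the server side stays compatible with the legacy stack.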

Often, organizations don't have the resources, or the need, to remove or replace their legacy servers and applications. To accommodate these systems, the BIG-IP Local Traffic Manager provides mediation to translate between non-optimized or even incompatible devices, including:

In addition to improving WAN communications, the BIG-IP Local Traffic Manager translates these capabilities across the entire infrastructure by acting as a bridge or translation device between all clients and back-end servers. The net result is that the BIG-IP Local Traffic Manager improves performance while masking inefficiencies in the network. This reduces cost and complexity by eliminating the need to update and tune every client and every server.

Some of the most important F5 TCP/IP improvements include:

These improvements were made to industry-standard RFCs. The following sections highlight some of the key RFCs in TCP Express.

Because TCP Express implements hundreds of real-world TCP interoperability improvements, and fixes or works around issues in commercially available product stacks (Windows 7 and up, IBM AIX, Sun Solaris, and more), no single optimization technique accounts for the majority of the performance improvements. These optimizations depend on the specific client and server types and on traffic characteristics. For example:

On faster connections, the BIG-IP system still reduces packet round trips and accelerates retransmits, just as it does for dial-up. The BIG-IP Local Traffic Manager and TCP Express also optimize congestion control and window scaling to improve peak bandwidth. Although improvements for dial-up users may be the most noticeable, improvements for broadband users are the most statistically obvious because of how dramatically some enhancements improve top-end performance on faster links.

As a general rule, the more data that is exchanged, the more bandwidth optimizations apply. The less data that is exchanged, the more round-trip time (RTT) optimizations apply. Therefore, for traffic profiles that don't exchange a lot of data, dial-up would see more optimization than broadband. For traffic profiles that do exchange a lot of data, broadband would see the most optimization. In both cases, significant gains can be realized using TCP Express.
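A rough way to see this rule is to split transfer time into a latency part and a bandwidth part. The sketch below is a deliberately simplified model, not an F5 formula: it assumes a fixed number of round trips per transfer and ignores slow start, loss, and queuing. All names and sample values are illustrative.

```python
def transfer_time_components(payload_bytes, bandwidth_bps, rtt_seconds, round_trips):
    """Return (latency_seconds, serialization_seconds) for one transfer.

    latency: time spent waiting on round trips (handshakes, requests, ACKs)
    serialization: time needed to push the payload bits onto the link
    """
    latency_s = round_trips * rtt_seconds
    serialization_s = payload_bytes * 8 / bandwidth_bps
    return latency_s, serialization_s


def dominant_cost(payload_bytes, bandwidth_bps, rtt_seconds, round_trips):
    """Name the component that accounts for most of the transfer time."""
    latency_s, serialization_s = transfer_time_components(
        payload_bytes, bandwidth_bps, rtt_seconds, round_trips
    )
    return "round trips" if latency_s > serialization_s else "bandwidth"


if __name__ == "__main__":
    # Assumed scenario: 10 Mbps link, 100 ms RTT, 4 round trips per transfer.
    for size in (10_000, 10_000_000):
        latency_s, serialization_s = transfer_time_components(size, 10e6, 0.1, 4)
        print(f"{size:>10,} bytes: latency {latency_s:.3f} s, "
              f"serialization {serialization_s:.3f} s, "
              f"dominated by {dominant_cost(size, 10e6, 0.1, 4)}")
```

With these assumed values, the small transfer is dominated by round trips, so reducing round trips helps most, while the large transfer is dominated by the time spent moving bits, so bandwidth optimizations help most.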

While TCP Express is automatic and requires no modifications, the BIG-IP Local Traffic Manager gives users advanced control of the TCP stack to tune TCP communications according to specific business needs. This includes the ability to select optimizations and settings at the virtual server level, per application being fronted on the device. Administrators can use a TCP profile to tune each of the following TCP variables:

Administrators can also use these controls to tune TCP communication for specialized network conditions or application requirements. Customers in the mobile and service provider industries find that this flexibility gives them a way to further enhance their performance, reliability, and bandwidth utilization by tailoring communication for known devices (like mobile handsets) and network conditions.

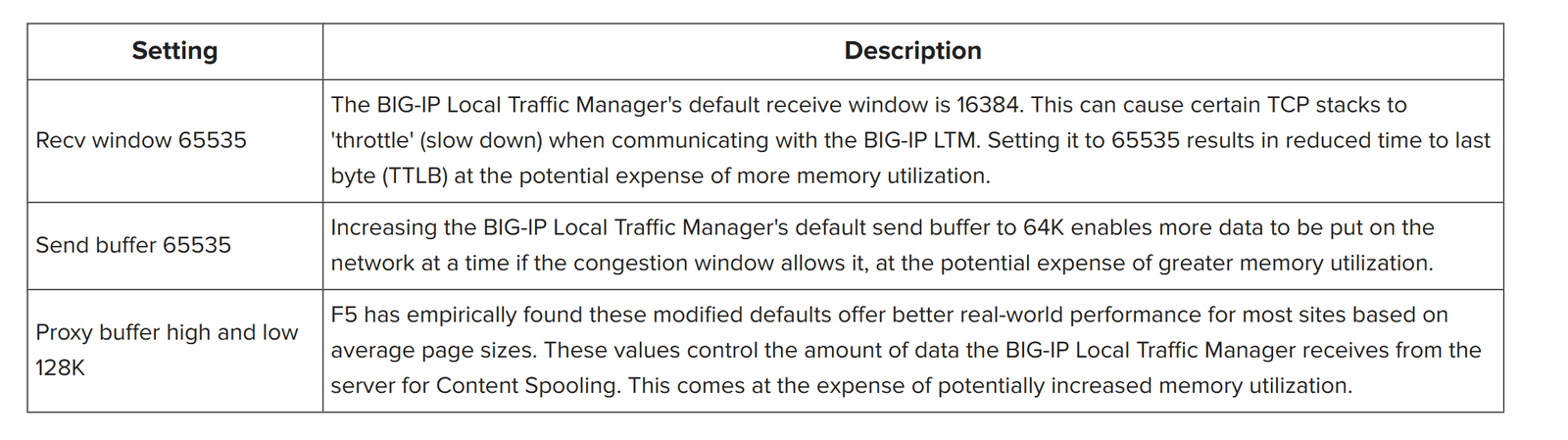

TCP Express provides flexible stack settings to optimize custom services—for example, you can adjust these settings to optimize an ASP application delivered to mobile users. The following table describes the BIG-IP Local Traffic Manager modifiable stack settings.

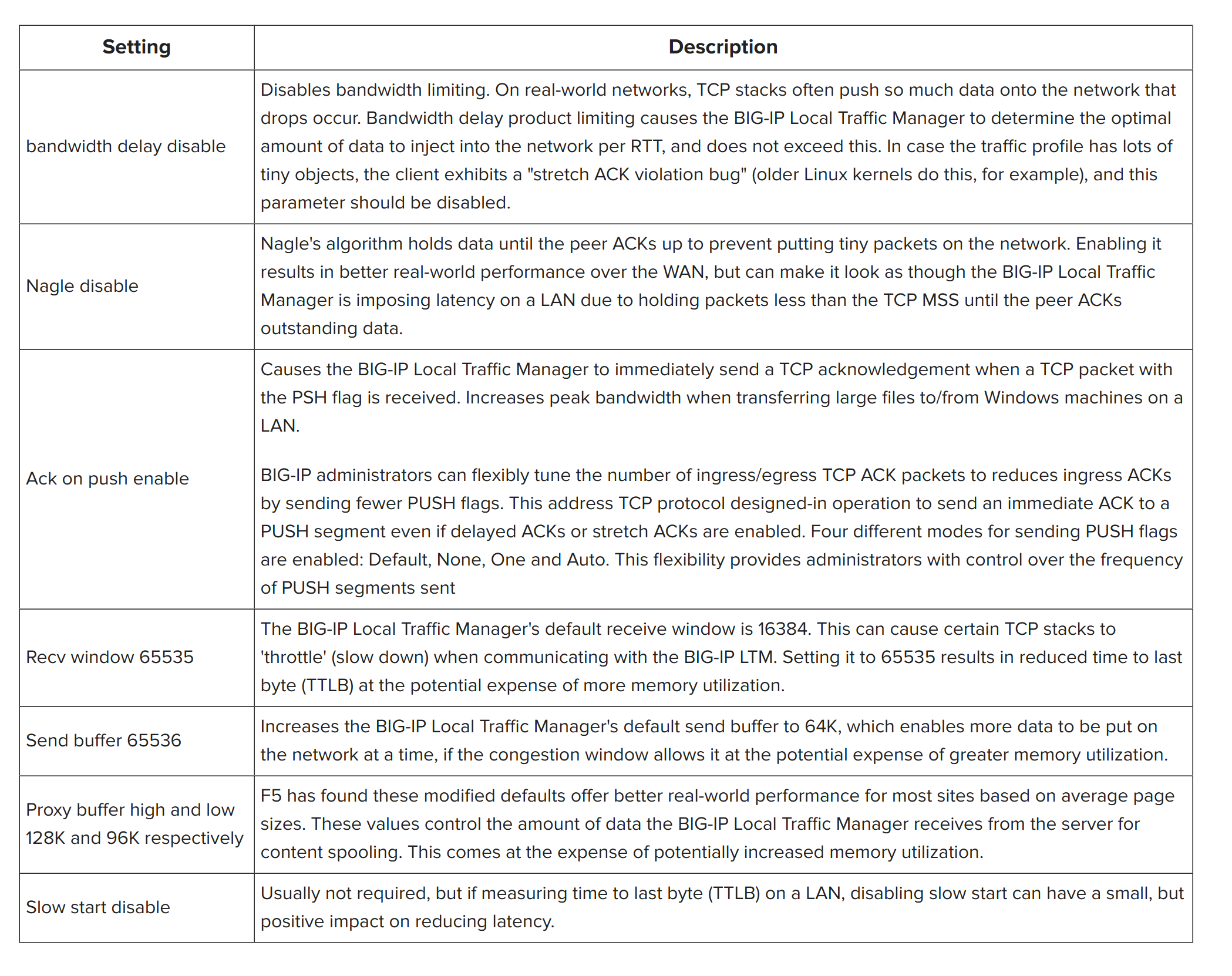

If the traffic on a LAN is highly interactive, F5 recommends a different set of TCP settings for peak performance. F5 has found that Nagle's algorithm works very well for packet reduction and general compression/RAM caching over a WAN. In addition, tweaks to various buffer sizes can positively impact highly interactive communications on low-latency LANs, with the only possible cost being increased memory utilization on the BIG-IP Local Traffic Manager.
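The same trade-off exists on any host TCP stack. As a minimal sketch, the Python snippet below uses the standard `socket` options `TCP_NODELAY`, `SO_SNDBUF`, and `SO_RCVBUF` on an ordinary operating system; it is not BIG-IP configuration. The helper name, the buffer sizes, and the assumption that interactive LAN traffic is best served by disabling Nagle's algorithm are illustrative choices, not F5 recommendations.

```python
import socket


def make_tcp_socket(interactive, send_buf=None, recv_buf=None):
    """Create an unconnected TCP socket tuned for one traffic style.

    interactive=True  -> disable Nagle's algorithm (TCP_NODELAY on) so small
                         writes are sent immediately, suiting low-latency LANs.
    interactive=False -> leave Nagle enabled so small writes are coalesced,
                         reducing packet count on a WAN.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1 if interactive else 0)
    # Optional buffer hints; the operating system may round or cap these values.
    if send_buf is not None:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, send_buf)
    if recv_buf is not None:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, recv_buf)
    return sock


if __name__ == "__main__":
    lan = make_tcp_socket(interactive=True, send_buf=131072, recv_buf=131072)
    wan = make_tcp_socket(interactive=False)
    for name, s in (("interactive LAN", lan), ("bulk WAN", wan)):
        nodelay = s.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY)
        print(f"{name}: Nagle {'disabled' if nodelay else 'enabled'}")
        s.close()
```

Larger buffers consume more memory per connection, which mirrors the cost noted above for the BIG-IP Local Traffic Manager.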

The following table describes modifiable TCP profile settings.

TCP Express is complemented by other F5 acceleration features and products that work to further reduce user download times and optimize infrastructure resources.

Other acceleration features that are integrated with the BIG-IP Local Traffic Manager include:

For organizations looking to improve the capacity and performance of their infrastructure, the BIG-IP Local Traffic Manager provides a unique solution that transparently makes every connecting client and server work more efficiently. F5's unique TCP Express delivers unmatched, real-world network and application performance improvements, and offers organizations an unprecedented level of control to optimize TCP communications for mission-critical applications.

PUBLISHED OCTOBER 27, 2017