Big data. Petabytes generated on an hourly basis for millions of sensors and monitors embedded in things across the business value chain. From manufacturing to delivery, from POS to consumers, data is being generated in unprecedented amounts.

That data, on its own, is meaningless. Data itself is a collection of 1s and 0s in specific formations that when interpreted by an app become information. Information only becomes useful when it is analyzed, and only becomes value when it is acted upon.

People cannot effectively analyze the data being generated today, let alone what will be generated in the next hour or day or week. And yet business decisions are increasingly required to be made in near real-time. To achieve this requires compute and systems trained to recognize the signals amidst the heaping piles of noise collected every day.

This is not merely conjecture. In “Machine Learning: The New Proving Ground for Competitive Advantage” – a survey conducted by MIT Technology Review Custom and Google Cloud – over 60% of respondents “have already implemented ML [Machine Learning] strategies, and nearly one-third considered themselves to be at a mature stage with their initiatives.” The reason behind their non-trivial investment in this nascent technology is competitive advantage. “According to respondents, a key benefit of ML is the ability to gain a competitive edge, and 26 percent of current ML implementers felt they had already achieved that goal.”

The benefits are predicted to be staggering.

“For a typical Fortune 1000 company, just a 10% increase in data accessibility will result in more than $65 million additional net income.”

“Retailers who leverage the full power of big data could increase their operating margins by as much as 60%.”

All good so far. We’re using data to drive decisions that enable business to take the lead and grow.

The danger lies in not recognizing that with any dependency comes risk. If I’m dependent on a car to get to the grocery store (because no public transportation exists where I live) then there is risk in having something happen to that car. A lot of risk. If my business is dependent on big data to make decisions (potentially for me, if pundits predictions are to be taken at face value), then there is risk in something happening to that data.

Now I’m not talking about the obvious loss of the data or even corruption of that data. I’m talking about a more insidious threat that comes from the trust we place in the veracity of that data.

In order to make decisions on any data – whether in our personal or business lives – we must first trust the accuracy of that data.

Big Dirty Data

Dirty data is nothing new. I’ll confess to being guilty of fudging my personal information from time to time when it’s requested to access an article or resource on the Internet. But the new streams of data are not necessarily at risk from this kind of innocuous corruption. They’re at threat from purposeful corruption by bad actors determined to throw your business off course.

Because we make decisions based on data and only tend to question it when obvious outliers present themselves, we are nearly blind to the threat of gradual corruption. Like the now cliché trope of skimming pennies from bank transactions, the subtle shift in data can go unnoticed. Gradual increases in demand for product X in one market might be seen as success of marketing or promotional efforts. Macro-economics can often explain a sudden drop in demand for product Y in others. My ability to impact your business is significant if I have the patience and determination to dirty up the data upon which you make decisions in manufacturing or distribution.

How significant? Poor data quality results in a loss of around 30% of revenue according to Ovum Research. Analytics Week compiled a fascinating list of big data facts with similar consequences of bad data, including:

“Poor data can cost businesses 20%–35% of their operating revenue.”

“Bad data or poor data quality costs US businesses $600 billion annually.”

Seem unlikely? Web scraping to gather intelligence as part of corporate espionage efforts are a real thing, and there are teams dedicated to stopping it. The use of APIs makes these efforts even easier and worse – sometimes in real time. So to think that the possibility of someone intentionally introducing bad data into your stream is not going to happen is akin to willfully ignoring the reality that bad actors are often (usually) two steps ahead of us.

Our security practices – particularly in the cloud, where much big data is expected to reside – amplifies this threat. A white paper from TDWI sponsored by Information Builders has many more examples of the cost of dirty data. While most relate to typical dirty data issues arising from integrating data due to acquisitions or the typical customer-generated fudged information, the costs models are invaluable in understanding the threat to business based on trusting data that may be corrupted – and what you can do about it.

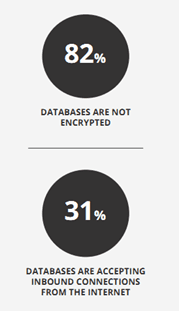

Relying on default configurations has been the cause of multiple breaches this year alone. Remember the MongoDB debacle from January? The one in which default configurations left the databases wide open to anyone on the Internet who might be interested in them? Or how about the RedLock CSI study that found 285 instances of Kubernetes’ administrative consoles completely open. No credentials required. The same report found that 31% of the unencrypted databases in the cloud were accepting inbound connections from the Internet. Directly. Like, nothing between me and your data.

When more than 27000 databases are left exposed and accessible via the Internet due to a failure to take any effort whatsoever to secure them, one can easily imagine that data streams could be dirtied up with ease. When we have organizations deliberately removing default security controls on cloud storage services to leave them wide open to discovery and corruption, this notion of bad actors inserting dirty data rises from the possible to the probable. It’s incumbent on organizations that rely on data – which is pretty much everyone today – to take care with not only how they secure that data but how they verify its accuracy.

Security in the future of data-driven business means more than just protecting against exfiltration, it must also consider the very real threat of infiltration – and how to combat it.

About the Author

Related Blog Posts

Why sub-optimal application delivery architecture costs more than you think

Discover the hidden performance, security, and operational costs of sub‑optimal application delivery—and how modern architectures address them.

Keyfactor + F5: Integrating digital trust in the F5 platform

By integrating digital trust solutions into F5 ADSP, Keyfactor and F5 redefine how organizations protect and deliver digital services at enterprise scale.

Architecting for AI: Secure, scalable, multicloud

Operationalize AI-era multicloud with F5 and Equinix. Explore scalable solutions for secure data flows, uniform policies, and governance across dynamic cloud environments.

Nutanix and F5 expand successful partnership to Kubernetes

Nutanix and F5 have a shared vision of simplifying IT management. The two are joining forces for a Kubernetes service that is backed by F5 NGINX Plus.

AppViewX + F5: Automating and orchestrating app delivery

As an F5 ADSP Select partner, AppViewX works with F5 to deliver a centralized orchestration solution to manage app services across distributed environments.

F5 NGINX Gateway Fabric is a certified solution for Red Hat OpenShift

F5 collaborates with Red Hat to deliver a solution that combines the high-performance app delivery of F5 NGINX with Red Hat OpenShift’s enterprise Kubernetes capabilities.