The world of the application developer and the network architect could not be more different except, perhaps, in the way in which they each view the other’s domain.

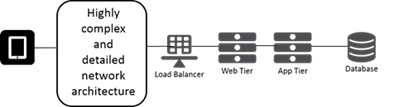

A typical network perspective of an application deployment often looks like this:

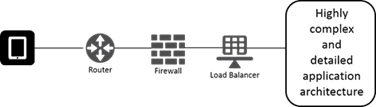

While on the other side of the “wall” the developer’s perspective of the same application deployment often looks like this:

Both are no doubt accurate for their own domains, but each is largely indifferent to the actual architecture of the other. This illustrates why so many problems arise when applications move into production. And arise they do. Research notes that in 2013 “90% of developers said that they were spending weekends, holidays and vacations to fix production phase app emergencies”[1]. While some of these are certainly related to software defects and errors, others are undoubtedly caused by differences between the environments. Production is not development, and vice versa.

Today’s applications take advantage of a variety of services that exist in production environments, but rarely in development or test environments: load balancers for scalability, caches to improve performance, and web app firewalls for security. These services not only touch each and every request and response as they traverse the network between the user and the app (or the app and the API, if you prefer), but in some cases they also modify the requests and/or responses. A good example of this is the user’s IP address. An application normally reads this value from the source address of the incoming connection, but when a request passes through a load balancing proxy, the address the app sees becomes that of the proxy, not the client.

For the unsuspecting developer, this can cause applications to “break” and result in hours of troubleshooting in addition to the time required to modify the app. These types of issues arising from differences in environment are no doubt the reason 28% of developers responding to a survey[2] in mid-2015 said “over 50% of production problems could have been found and fixed with the right test environment.” And more than half (52%) said “between 25% and 50% of production problems would have been fixed.”

Other issues arising in production are directly attributable to changes in the application itself, particularly for applications developed using agile methodologies. Agile methods can increase the likelihood of these kinds of conflicts arising in production because of the frequency with which code changes.

Increasingly, many (though by no means all) of these challenges are resolved by using a programmable proxy, because doing so eliminates the need to change code already in production and avoids further delaying delivery of the application to market. The aforementioned “IP address” issue is commonly resolved by instructing the proxy to insert a new HTTP header that contains the actual client IP address, so developers still have access to that information. Layer 7 load balancing proxies are also adept at routing application and API requests based on a variety of data, including versioning and API signatures.
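To make the “IP address” fix concrete, here is a minimal sketch of how an application might recover the client address once the proxy inserts such a header. It assumes the proxy uses the widely adopted `X-Forwarded-For` header (the post itself does not name one), and the `TRUSTED_PROXIES` range and `client_ip` helper are hypothetical names chosen for illustration.

```python
from ipaddress import ip_address, ip_network

# Assumption: the address range the load balancing proxies live in.
TRUSTED_PROXIES = [ip_network("10.0.0.0/24")]


def is_trusted(addr: str) -> bool:
    """Return True if addr parses as an IP inside a trusted proxy range."""
    try:
        ip = ip_address(addr)
    except ValueError:
        return False
    return any(ip in net for net in TRUSTED_PROXIES)


def client_ip(remote_addr: str, headers: dict) -> str:
    """Best guess at the real client address for one request.

    remote_addr is the source address of the connection the app received;
    headers is a plain dict of HTTP request headers.
    """
    # Only believe the header when the connection came from a known proxy;
    # otherwise any client could claim to be someone else.
    if not is_trusted(remote_addr):
        return remote_addr

    # HTTP header names are case-insensitive, so normalize before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    hops = [h.strip() for h in lowered.get("x-forwarded-for", "").split(",")]

    # Walk from the right: the right-most entries were appended by our own
    # proxies, so the first untrusted address is the client.
    for hop in reversed([h for h in hops if h]):
        try:
            ip_address(hop)
        except ValueError:
            break  # malformed entry: stop trusting the header
        if not is_trusted(hop):
            return hop
    return remote_addr
```

For example, `client_ip("10.0.0.5", {"X-Forwarded-For": "198.51.100.23"})` returns the client address rather than the proxy's, while a request arriving directly from an untrusted address has its header ignored.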

It is interesting to note that the one component that is often called out in both software and network engineering diagrams is the load balancer. Even though this proxy is traditionally deployed and managed by the network team, it’s critical enough to application architectures that it is almost always included as part of the application. Similarly, developers recognize the importance of load balancing today for scaling applications and generally include it in their diagrams.

It also reflects the increasing number of cases in which developers and operations have assumed responsibility for scalability and thus have taken control of load balancing for their applications. That’s a good thing, because it means dev and ops can include (and hopefully are including) load balancing, and all its layer 7 goodness, in the CI/CD pipeline, particularly during testing, to ensure any potential issues can be found fast and resolved before moving into production. Shifting load balancing left into the CI/CD pipeline also enables a more holistic approach to continuous delivery in which the entire application package (architecture) is managed as code and can be updated simultaneously. This allows network infrastructure (because that’s where load balancing traditionally falls when it’s described) to support an immutable architecture in which applications (or microservices, if you’re going that route) are completely redeployed with new configurations rather than simply updated, avoiding the natural software entropy that can introduce challenges and technical debt.
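As a rough illustration of what including the proxy in the test stage can look like, the sketch below models the proxy's effect on a request and checks that the application code still identifies the client. Everything here is an assumption made for illustration: the `PROXY_ADDR` value, the `through_proxy` and `resolve_client` helpers, and the use of the `X-Forwarded-For` header. A real pipeline would more likely run the tests against an actual proxy instance.

```python
import unittest

PROXY_ADDR = "10.0.0.5"  # hypothetical address of the load balancing proxy


def through_proxy(request: dict) -> dict:
    """Model what the proxy does to a request on its way to the app."""
    headers = dict(request["headers"])
    # Record the address the proxy saw, appending to any existing value.
    seen = request["remote_addr"]
    prior = headers.get("X-Forwarded-For")
    headers["X-Forwarded-For"] = f"{prior}, {seen}" if prior else seen
    # From the app's point of view the connection now comes from the proxy.
    return {"remote_addr": PROXY_ADDR, "headers": headers}


def resolve_client(request: dict) -> str:
    """Hypothetical app code under test: work out who the client is."""
    if request["remote_addr"] != PROXY_ADDR:
        return request["remote_addr"]
    forwarded = request["headers"].get("X-Forwarded-For", "")
    # The last entry is the one our own proxy appended.
    last_hop = forwarded.split(",")[-1].strip()
    return last_hop or request["remote_addr"]


class ClientAddressTest(unittest.TestCase):
    def test_direct_request(self):
        request = {"remote_addr": "198.51.100.23", "headers": {}}
        self.assertEqual(resolve_client(request), "198.51.100.23")

    def test_request_through_proxy(self):
        request = {"remote_addr": "198.51.100.23", "headers": {}}
        proxied = through_proxy(request)
        self.assertEqual(resolve_client(proxied), "198.51.100.23")
```

Run with `python -m unittest` as a pipeline step. A test like `test_request_through_proxy` fails in the test stage if the app only looks at the connection's source address, which is the kind of issue the paragraph above argues should surface before production.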

The proxy is in many ways the bridge that connects the “network” to the “application” over the figurative gap (more like a wall, if we’re honest) that separates software and network engineers and architects. That is one of the gaps DevOps needs to bridge if organizations are going to be able to scale to meet the challenges of modern architectures.
