Early in the history of cloud, which was not that long ago, it was common to see growth and adoption reports touting the unparalleled market penetration of “cloud” with no distinction between its primary models (that’s IaaS, PaaS, and SaaS in case you’ve forgotten). That made it seem as though all three models were experiencing incredible growth and that cloud would, as some predicted, eradicate the data center as we knew it.

Fast forward a few years and we now have better market breakdowns, which indicate that the awesome rise of cloud was mostly on the SaaS side of the world. Line of business (LOB) stakeholders saw benefits in moving from an IT-implements-packaged-software-for-us model to a SaaS-gives-us-what-we-want-today model. Inarguably, the greatest growth in “cloud” has been, to date, the movement of business operations from on-premises to applications “in the cloud.”

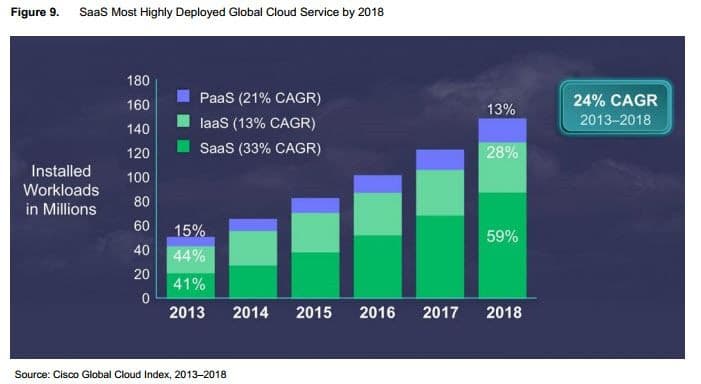

Consider that “by 2018, 59% of the total cloud workloads will be Software-as-a-Service (SaaS) workloads, up from 41% in 2013. Cisco is predicting that by 2018, 28% of the total cloud workloads will be Infrastructure-as-a-Service (IaaS) workloads down from 44% in 2013. 13% of the total cloud workloads will be Platform-as-a-Service (PaaS) workloads in 2018, down from 15% in 2013. The following graphic provides a comparative analysis of IaaS, PaaS and SaaS forecasts from 2013 to 2018.” (http://softwarestrategiesblog.com/tag/idc-saas-forecasts/)

So when you ask an organization, in general terms, whether it is adopting “cloud”, you’re likely to get a yes. That tells you nothing about what kind of cloud it is adopting and, therefore, makes it nearly impossible to predict or comment on the challenges it might be facing as a result. Each cloud model brings its own challenges, after all. Some are shared (identity management can be an issue across all three models), while others are not (web application security is mostly a challenge for IaaS-deployed apps, not SaaS).

So basically, the term “cloud” is fairly meaningless without some kind of qualifier.

This is also true today of SDN, where a variety of models are in play, each with its own unique architectural requirements and, therefore, challenges.

Consider this diatribe from my colleague, Nathan Pearce, on the inclusion of overlay protocols (VXLAN and NVGRE) in the definition of SDN: “Which SDN Protocol is Right for You.” Nathan rightly suggests that if we’re going to include overlay protocols in the definition of SDN, then we also need to name other encapsulation protocols as SDN.

The problem is, of course, that like cloud, SDN suffers from its broad inclusion of multiple models. There’s the traditional (or classic, if you prefer) definition, based on OpenFlow and inclusive only of the stateless network (layers 2-4). There’s the architectural model that focuses on operationalizing the network, approaching the programmability aspect of SDN from the perspective of automating provisioning and management. This is often referred to broadly as “Network management and orchestration” (MANO).

Then there’s the model that’s kind of a mix, which relies on programmability to build an automated network across the full stack. It’s inclusive of all seven OSI layers but focuses on the ability of the network to adjust its routing and enforcement of policies based on real-time traffic. This is more properly referred to as “automated networks”.

Each of these three models brings its own challenges (and benefits, too). And there’s nothing stopping an organization from combining these models to achieve its goals, much in the way that 82% of organizations are planning a multi-cloud strategy (RightScale, State of the Cloud 2015). But the fact remains that if you ask someone whether they’re adopting or implementing SDN and they say “yes,” you really have no idea what they’re actually doing. Is it OpenFlow? OpenStack? OpenDaylight? Open-we-wrote-some-scripts-to-automate-the-network?

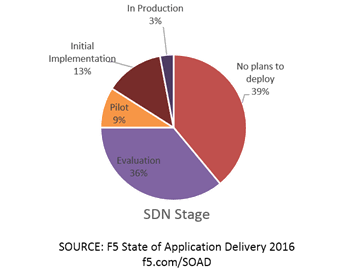

Current statistics on SDN adoption are not very inspiring and certainly nowhere near where cloud was at the same stage of market maturity.

But given the disparity of definitions, it’s quite plausible (and probable) that what is actually going on is not a lack of adoption or interest but rather a lack of common definition.

I’m going to back that up with some data suggesting that organizations may not say they’re doing SDN (or DevOps, for that matter, which also suffers from definition fatigue), but they likely are.

In the results compiled for our most recent State of Application Delivery report (the 2016 edition, which will be available in, well, 2016), the number of actual “doing SDN” responses was pretty dismal given how many years SDN has been “the thing to do.” But digging into responses regarding the use of automation and scripting, well, that tells a completely different story: 67% of respondents are using at least one automation tool or framework, and over half are using two or more.

And by “tool” or “framework” I’m including Python, Juju, Chef, Puppet, OpenStack, VMware, and Cisco.

The use of such frameworks and tools suggests more interest in the latter two definitions of SDN (the ones focusing on automation and orchestration) than in the classic OpenFlow-based definition.

But we (as in those of us who put together the survey) didn’t ask about each of the SDN models; we asked about “SDN” in general. That leaves the definition open to interpretation, just as the broad umbrella term “cloud” left the results of early adoption surveys open to interpretation.

We need to be more judicious regarding how we use the term SDN and what that means. Does it include overlay protocols? Does it not include overlay protocols? Are we talking about network automation or automating networks? Or are we focusing on classic, OpenFlow-driven networking models?

The answer to that question will ultimately give everyone a better understanding of how SDN is (or isn’t) being embraced today and will (hopefully) stop my poor colleague’s head from exploding when someone suggests that overlay protocols should be included as “SDN”.
