Amazon S3 changed the shape of modern infrastructure. By separating object storage from compute, S3 gave application teams durable, affordable capacity with a simple API, and just as importantly, a neutral point for data created in one place and consumed in another. That model has become a backbone for AI pipelines: training sets land in buckets, fine-tuning datasets roll through versioned prefixes, and knowledge corpora for retrieval-augmented generation (RAG) are pulled by indexers all day long.

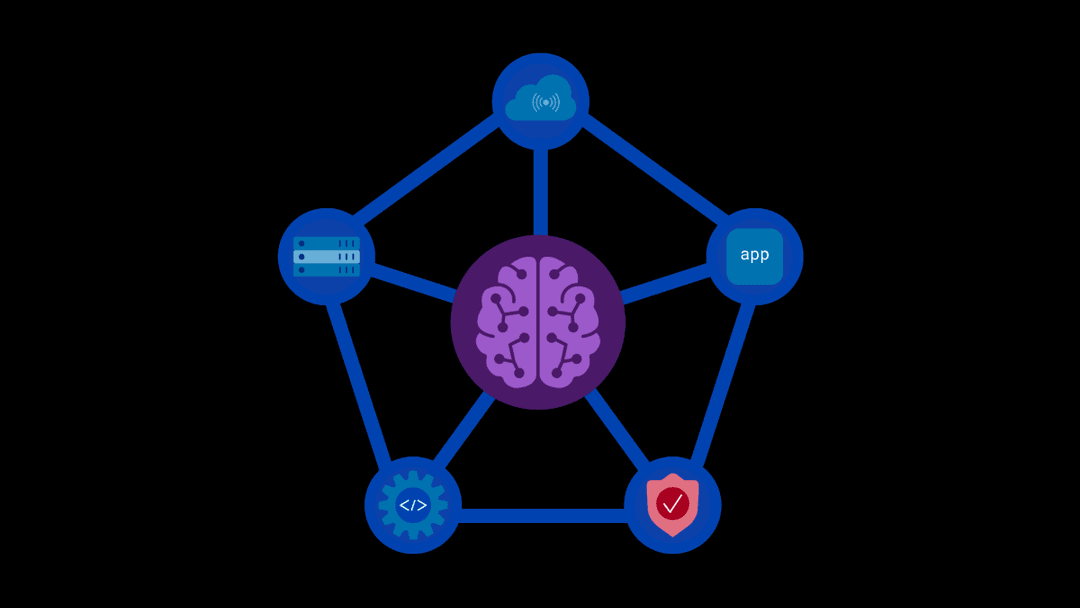

As AI estates scale, pointing your app at S3-compatible object stores stops being enough; enterprises need a delivery layer that makes data movement predictable, fast, and secure. This is exactly the problem that AI data delivery addresses: ensuring the right data reaches object stores securely and is reliably delivered to AI model foundry workflows, with the right underlying policies and resilience across sites. Doing so keeps training, fine-tuning, and RAG workflows on schedule. An application delivery controller (ADC) in front of storage is a business and technical necessity: it is a control point that protects object stores from surges, smooths throughput to ensure cluster reliability, and centralizes governance across hybrid multicloud S3 deployments.

Why should we place F5 BIG-IP in front of data stores?

Today’s AI storage looks like yesterday’s web traffic on steroids. Ingest is bursty (e.g., parallel writers, multi-part uploads), reads are random, and maintenance is constant (e.g., node rebuilds, compactions, tiering). These unpredictable patterns demand an architecture that decouples applications from the underlying storage, shielding workloads from disruptions and endpoint volatility. Without this dedicated delivery tier, enterprises face eight common issues:

- Local resiliency challenges: A single slow or rebuilding node can disrupt job throughput. F5 BIG-IP mitigates this with targeted health monitors (such as S3 HEAD/GET checks on canary keys), policy-driven load balancing, and connection draining to ensure uninterrupted, long-running transfers during maintenance.

- Manual global resiliency: F5 BIG-IP DNS automatically routes clients to the best site based on health, proximity, and business policies. It seamlessly handles cluster or regional failovers without requiring changes to application endpoints.

- Object- and metadata-level quality of service and rate-limiting: BIG-IP inspects and classifies traffic by bucket, object type, size, and tags. Low-priority requests are rate-limited in favor of high-priority requests, avoiding timeouts and retries in congested or oversubscribed networks.

- Risky upgrades: Storage software upgrades can disrupt workflows. BIG-IP enables safe blue/green or rolling upgrades by draining traffic from affected pools and temporarily pinning traffic to stable system versions until health checks pass.

- Congested replication flows: Cross-region synchronization and cluster replication can face bottlenecks during high-demand periods. BIG-IP prioritizes and shapes traffic to ensure replication processes don’t hinder performance for AI model workloads.

- Inconsistent TLS posture: BIG-IP centralizes TLS management by handling offload, re-encryption, or passthrough. It enforces consistent cipher policies, Perfect Forward Secrecy (PFS), and mTLS where needed, simplifying storage configurations and enabling transparent scaling with SSL acceleration on F5 rSeries and F5 VELOS.

- Post-quantum cryptography (PQC) defense: With BIG-IP, organizations can comply with recommendations from the U.S. National Institute of Standards and Technology (NIST) and the EU Digital Operational Resilience Act (DORA) by supporting PQC in transit. This protects data against “harvest now, decrypt later” attacks, in which traffic captured today is decrypted later by future quantum computers.

- S3 protocol defense: BIG-IP detects and blocks S3 protocol attacks such as injections, privilege escalation, bucket takeover, credential or pre-signed URL reuse, and account takeover before they reach the backend.
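To make the health-monitoring and connection-draining ideas in the list above more concrete, here is a minimal Python sketch of the decision logic. It is not BIG-IP code or configuration: `CanaryMonitor`, the `probe` callback, and the `fall`/`rise` thresholds are hypothetical names chosen for illustration, and the probe stands in for an S3 HEAD request against a canary key.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class NodeState:
    healthy: bool = True
    draining: bool = False
    fails: int = 0   # consecutive failed probes
    passes: int = 0  # consecutive successful probes


class CanaryMonitor:
    """Tracks node health from repeated canary probes (illustrative only)."""

    def __init__(self, nodes: Iterable[str], probe: Callable[[str], bool],
                 fall: int = 3, rise: int = 2) -> None:
        # probe(node) is a hypothetical callback; in practice it would issue
        # something like a HEAD request for a known canary object.
        self.probe = probe
        self.fall = fall  # failures in a row before a node is marked down
        self.rise = rise  # successes in a row before a node is marked up
        self.state: Dict[str, NodeState] = {n: NodeState() for n in nodes}

    def check_all(self) -> None:
        """Run one probe round and update each node's health."""
        for node, st in self.state.items():
            if self.probe(node):
                st.passes, st.fails = st.passes + 1, 0
                if st.passes >= self.rise:
                    st.healthy = True
            else:
                st.fails, st.passes = st.fails + 1, 0
                if st.fails >= self.fall:
                    st.healthy = False

    def drain(self, node: str) -> None:
        """Stop sending NEW requests to a node; existing transfers are untouched."""
        self.state[node].draining = True

    def eligible(self) -> List[str]:
        """Nodes that may receive new requests."""
        return [n for n, st in self.state.items()
                if st.healthy and not st.draining]
```

Requiring several consecutive failures before marking a node down, and several successes before bringing it back, is a common way to avoid flapping when a rebuilding node answers intermittently. Draining is modeled only as "no new requests," which is the property that lets long-running transfers finish during maintenance.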

S3 data delivery profiles come to BIG-IP v21.0

BIG-IP version 21.0 introduces S3 TCP/TLS profiles designed to easily turn a virtual server into a production-ready S3 front door. These profiles provide TCP settings for high-bandwidth paths, refine HTTP/S3 behavior to support multi-part uploads and retries, and implement security guardrails like strict method allowlists and optional mTLS. This streamlines the transition from cluster setup to fully operating AI pipelines, reducing setup time from days to minutes.

AI data delivery in action

The following demos illustrate how BIG-IP extends beyond faster setup to deliver lasting operational value. They highlight how S3 data delivery can be abstracted from infrastructure changes, dynamically shaped through programmable policies, and safeguarded against cluster stress. Together, these examples show how a dedicated delivery layer ensures AI pipelines remain predictable, scalable, and secure.

Demo 1: Lifecycle abstraction

This demo highlights how applications can stay connected and uninterrupted even as storage environments evolve. By abstracting endpoints, workloads remain resilient through cluster lifecycle changes, vendor changes, version upgrades, or cluster growth without requiring application reconfiguration, with observability that surfaces performance and compliance insights.

Demo 2: Data plane programmability

Here we show how policies and routing can be dynamically adjusted at the data plane level. This flexibility allows enterprises to enforce fine-grained controls, adapt traffic patterns to business needs, and support risk-managed transitions between environments.
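As a rough illustration of what a programmable, metadata-aware policy might decide, the following Python sketch classifies requests by bucket and tag and rate-limits only the low-priority class with a token bucket. This is a conceptual model, not an iRule or any BIG-IP API: the bucket name `training-data`, the `priority` tag, and the `PriorityShaper` class are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TokenBucket:
    rate: float   # tokens added per second
    burst: float  # maximum tokens the bucket can hold
    tokens: float = field(init=False)
    last: float = 0.0  # timestamp of the previous call

    def __post_init__(self) -> None:
        self.tokens = self.burst  # start full

    def allow(self, now: float) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def classify(bucket: str, tags: Dict[str, str]) -> str:
    """Hypothetical policy: an explicit tag wins, otherwise decide by bucket."""
    if tags.get("priority") == "low":
        return "low"
    if bucket == "training-data":
        return "high"
    return "low"


class PriorityShaper:
    """Admits every request in an unlimited class and rate-limits the rest."""

    def __init__(self, limits: Dict[str, TokenBucket]) -> None:
        self.limits = limits  # classes absent from this map are never limited

    def admit(self, bucket: str, tags: Dict[str, str], now: float) -> bool:
        limiter = self.limits.get(classify(bucket, tags))
        return True if limiter is None else limiter.allow(now)


if __name__ == "__main__":
    shaper = PriorityShaper({"low": TokenBucket(rate=1.0, burst=2.0)})
    for t in (0.0, 0.0, 0.0, 1.0):
        print(t, shaper.admit("scratch", {}, now=t))
```

The timestamp is passed in explicitly so the behavior is deterministic and easy to test; a real data plane would use its own clock and would typically queue or delay low-priority requests rather than simply refusing them.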

Demo 3: Hot spot prevention

The final demo shows how advanced monitoring and intelligent load balancing reduce hot spots and protect clusters under stress. By detecting failures and avoiding overloaded nodes before they disrupt workflows, enterprises can maintain consistent throughput and safeguard critical AI pipelines.
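A toy simulation can show why load-aware balancing helps with hot spots. The Python sketch below is not taken from the demo: it assumes one request arrives per tick, that every third request is a long transfer, and it compares the peak number of concurrent transfers on any single node under round-robin and least-connections selection. The function names and the workload shape are illustrative assumptions.

```python
from typing import Callable, List


def simulate(durations: List[int], nodes: int,
             pick: Callable[[int, List[int]], int]) -> int:
    """Return the peak number of concurrent transfers seen on any one node."""
    ends: List[List[int]] = [[] for _ in range(nodes)]  # end ticks per node
    peak = 0
    for tick, duration in enumerate(durations):
        # Retire transfers that have finished by this tick.
        ends = [[e for e in node if e > tick] for node in ends]
        active = [len(node) for node in ends]
        target = pick(tick, active)
        ends[target].append(tick + duration)
        peak = max(peak, len(ends[target]))
    return peak


def round_robin(tick: int, active: List[int]) -> int:
    """Ignore load entirely and rotate through nodes."""
    return tick % len(active)


def least_connections(tick: int, active: List[int]) -> int:
    """Choose the node with the fewest active transfers (lowest index on ties)."""
    return active.index(min(active))


# One long transfer followed by two short ones, repeated four times.
workload = [9, 1, 1] * 4

if __name__ == "__main__":
    print("round robin peak:", simulate(workload, 3, round_robin))
    print("least connections peak:", simulate(workload, 3, least_connections))
```

With this particular workload, round-robin keeps landing the long transfers on the same node, while least-connections steers new requests away from whichever node is busiest. Real traffic is far less regular, so the size of the difference will vary.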

Design notes for practitioners

S3-compatible storage clusters power scalable AI, machine learning, and data-driven applications, but they come with inherent challenges such as hot spot formation, node maintenance, shifting traffic patterns, and the need for resiliency during high-demand workloads. BIG-IP offers flexible and programmable traffic management to address these challenges efficiently. Below are seven essential best practices to optimize BIG-IP for S3 environments and achieve scalability, performance, and maintenance isolation:

- Leverage intelligent load balancing algorithms: Avoid round-robin load balancing, which distributes requests uniformly regardless of node load and can therefore create hot spots in S3 clusters. Use adaptive algorithms such as dynamic ratio or least connections to intelligently balance traffic based on node health and workload capacity, ensuring predictable performance.

- Deploy flexible HA architectures: Active-active deployments allow multiple BIG-IP devices to handle traffic simultaneously, ensuring continuous availability and increasing throughput for heavy workloads. This configuration also isolates maintenance activities from client workloads, preventing downtime while maximizing fault tolerance.

- Use configuration automation: Automation ensures faster deployment, simplifies scaling, and reduces the complexity of maintaining consistent configurations across environments. Use the BIG-IP Application Services 3 Extension (BIG-IP AS3) and BIG-IP Declarative Onboarding to automate BIG-IP provisioning and configuration.

- Utilize advanced health monitors for S3 endpoints: Enable custom health monitors that check the availability and performance of individual S3 endpoints using signals such as response times, error rates, and node capacity utilization. BIG-IP can automatically reroute traffic away from degraded or offline nodes, ensuring resiliency and uninterrupted workloads even during maintenance.

- Enhance data throughput with TCP/TLS optimizations: Optimize TCP configurations, such as window scaling and congestion control, to improve throughput for large AI/ML data transfers. BIG-IP’s S3-tuned TCP profile minimizes latency, ensuring encrypted data delivery is both secure and fast.

- Leverage data plane programmability for traffic customization: Custom programmability enables granular traffic control, ensuring performance and flexibility for unique S3 workload requirements. Use BIG-IP’s event-driven iRules to dynamically route traffic based on headers, metadata, or maintenance status of individual nodes.

- Enable locality-aware request routing: Use locality-aware routing policies to prioritize traffic to storage nodes based on geographic proximity or latency. This reduces network overhead and improves response times for globally distributed workloads reliant on S3 clusters.
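Several of these practices (automation, adaptive load balancing, and custom health monitors) can be combined in a single declarative configuration. The Python sketch below builds an AS3-style declaration as a plain dictionary. Treat it as a starting point under stated assumptions: the tenant, application, pool, and monitor names, the addresses, the host header, and the canary path are all hypothetical; the property names reflect the AS3 schema as commonly documented and should be validated against the schema reference for the AS3 version in use. TLS termination and the v21.0 S3 profiles are deliberately left out.

```python
import json
from typing import Any, Dict, List


def build_s3_declaration(vip: str, nodes: List[str], port: int,
                         canary_path: str) -> Dict[str, Any]:
    """Build an AS3-style declaration for an S3 front door (illustrative)."""
    monitor = {
        "class": "Monitor",
        "monitorType": "http",
        # Assumes the canary object is readable without request signing.
        "send": (f"HEAD {canary_path} HTTP/1.1\r\n"
                 "Host: s3.example.internal\r\nConnection: close\r\n\r\n"),
        "receive": "HTTP/1.1 200",
        "interval": 5,
        "timeout": 16,
    }
    pool = {
        "class": "Pool",
        # Adaptive algorithm instead of round-robin.
        "loadBalancingMode": "least-connections-member",
        "monitors": [{"use": "s3_canary_monitor"}],
        "members": [{"servicePort": port, "serverAddresses": nodes}],
    }
    service = {
        "class": "Service_HTTP",  # plain HTTP keeps the sketch short
        "virtualAddresses": [vip],
        "virtualPort": 80,
        "pool": "s3_pool",
    }
    return {
        "class": "AS3",
        "action": "deploy",
        "declaration": {
            "class": "ADC",
            "schemaVersion": "3.0.0",
            "ai_data": {  # hypothetical tenant name
                "class": "Tenant",
                "s3_app": {  # hypothetical application name
                    "class": "Application",
                    "template": "generic",
                    "s3_service": service,
                    "s3_pool": pool,
                    "s3_canary_monitor": monitor,
                },
            },
        },
    }


if __name__ == "__main__":
    decl = build_s3_declaration("192.0.2.10", ["10.0.0.11", "10.0.0.12"],
                                9000, "/health-bucket/canary.txt")
    print(json.dumps(decl, indent=2))
```

Generating the declaration from code keeps node lists and monitor settings consistent across environments. The resulting JSON is the kind of payload that would be sent to the AS3 declare endpoint (`/mgmt/shared/appsvcs/declare`) on a BIG-IP with AS3 installed.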

A dedicated delivery tier for AI data pipelines

S3 brings universal, durable storage; F5 BIG-IP version 21.0 gives storage a first-class delivery tier tailored to AI. The result is high-performance data pipelines that keep training, fine-tuning, and RAG workflows running without relying on fragile client logic or custom scripts. BIG-IP v21.0 is now available. For more information, read the BIG-IP version 21.0 announcement blog. Also, be sure to check out today’s press release.

F5’s focus on AI doesn’t stop here—explore how F5 secures and delivers AI apps everywhere.

About the Authors

Mark Menger is a Solutions Architect at F5, specializing in AI and security technology partnerships. He leads the development of F5’s AI Reference Architecture, advancing secure, scalable AI solutions. With experience as a Global Solutions Architect and Solutions Engineer, Mark contributed to F5’s Secure Cloud Architecture and co-developed its Distributed Four-Tiered Architecture. Co-author of Solving IT Complexity, he brings expertise in addressing IT challenges. Previously, he held roles as an application developer and enterprise architect, focusing on modern applications, automation, and accelerating value from AI investments.
