Scaling containers is more than just slapping a proxy in front of a service and walking away. There’s more to scale than just distribution, and in the fast-paced world of containers there are five distinct capabilities required to ensure scale: retries, circuit breakers, discovery, distribution, and monitoring.

In this post on the art of scaling containers, we’ll dig into monitoring.

Monitoring

Monitoring. In an era where everything seems to be listening and/or watching everything from how fast we drive to what’s in our refrigerator, the word leaves a bad taste in a lot of mouths. We can – and often do – use the word ‘visibility’ instead, but that semantic sophistry doesn’t change what we’re doing – we’re watching, closely.

Everything about scale relies on monitoring; on knowing the state of the resources across which you are distributing requests. Sending a request to a ‘dead zone’ because the resource has crashed or was recently shut down is akin to turning onto a dead-end street. It’s a waste of time.

Monitoring comes in many flavors. There’s the “can I reach you” monitoring of a ping at the network layer. There’s the “are you home” monitoring of a TCP connection. And there’s the “are you answering the door” of an HTTP request. Then there’s the “have you had your coffee yet” monitoring that determines whether the service is answering correctly or not.
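To make those flavors concrete, here is a minimal sketch of three of them using only the Python standard library. The function names are hypothetical and chosen for this example, and the network-layer ping is left out because ICMP typically needs raw-socket privileges. Treat it as an illustration of the layers, not as how any particular proxy implements its checks.

```python
import http.client
import socket
import urllib.error
import urllib.request

# Assumption: health checks should go direct, so ignore any proxy
# settings found in the environment.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
_ERRORS = (urllib.error.URLError, http.client.HTTPException, OSError, ValueError)


def tcp_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """'Are you home?' Can a TCP connection be opened at all?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def http_check(url: str, timeout: float = 1.0) -> bool:
    """'Are you answering the door?' Does an HTTP GET return a 2xx?"""
    try:
        with _opener.open(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except _ERRORS:
        return False


def content_check(url: str, expected: str, timeout: float = 1.0) -> bool:
    """'Have you had your coffee yet?' Is the answer actually correct?"""
    try:
        with _opener.open(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return 200 <= resp.status < 300 and expected in body
    except _ERRORS:
        return False
```

Each check is stricter, and more expensive for the service to answer, than the one before it, which matters once many proxies are asking the same questions.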

Along with checking in on the health and execution of a service comes performance monitoring. How fast the service answers is critical if you’re distributing requests based on response times. Sudden changes in performance can indicate problems, which means response times need to be tracked over time and not just sampled in the moment.
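One way to track response times over time is an exponentially weighted moving average per resource. The sketch below is an assumption about how that might look, not a description of any specific product: the class name, the smoothing factor `alpha`, and the `spike_factor` threshold are all illustrative.

```python
class LatencyTracker:
    """Keeps a smoothed response time per resource and flags sudden slowdowns."""

    def __init__(self, alpha: float = 0.2, spike_factor: float = 3.0):
        self.alpha = alpha                # weight given to the newest sample
        self.spike_factor = spike_factor  # "sudden change" threshold (assumed)
        self.ewma = {}                    # resource name -> smoothed seconds

    def record(self, resource: str, seconds: float) -> bool:
        """Record one sample; return True if it looks like a sudden slowdown."""
        previous = self.ewma.get(resource)
        if previous is None:
            self.ewma[resource] = seconds
            return False
        spike = seconds > self.spike_factor * previous
        self.ewma[resource] = self.alpha * seconds + (1 - self.alpha) * previous
        return spike

    def fastest(self):
        """Resource with the lowest smoothed response time, or None if empty."""
        if not self.ewma:
            return None
        return min(self.ewma, key=self.ewma.get)
```

A proxy distributing on response time would call `fastest()` when picking a target, and could treat a `True` from `record()` as a signal worth investigating.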

There’s active monitoring (let me send you a real request!), synthetic monitoring (let me send you a pretend request), and passive monitoring (I’m just going to sit here and watch what happens to a real request). Each has pros and cons, and all are valid methods of monitoring. The key is that the proxy is able to determine status – is it up? is it down? has it left the building along with Elvis?
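Passive monitoring is the easiest of the three to sketch, because it sends nothing of its own. The example below assumes a simple rule, that a resource is considered down after a number of consecutive failed real requests; the class name and the `max_failures` default are hypothetical.

```python
class PassiveMonitor:
    """Infers up/down status from the outcomes of real requests."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = {}  # resource name -> consecutive failure count

    def observe(self, resource: str, ok: bool) -> None:
        """Call once per real request with whether it succeeded."""
        if ok:
            self.failures[resource] = 0
        else:
            self.failures[resource] = self.failures.get(resource, 0) + 1

    def is_up(self, resource: str) -> bool:
        """A resource is up until it hits max_failures failures in a row."""
        return self.failures.get(resource, 0) < self.max_failures
```

The trade-off is visible in the code: it costs the resource nothing extra, but a failure is only discovered by a real request that has already failed.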

Reachability, availability, and performance are all aspects of monitoring and necessary to ensure scalability. Which means it’s not just about monitoring, it’s about making sure the load balancing proxies have up-to-date information regarding the status of each resource to which they might direct a request.
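“Up-to-date” can be made explicit by storing when each status was last confirmed and refusing to trust anything older than some limit. This is a sketch under that assumption; `StatusTable` and `max_age` are names invented for the example, and timestamps are passed in so that any clock can be used.

```python
from dataclasses import dataclass


@dataclass
class Status:
    healthy: bool
    checked_at: float  # seconds, from whatever clock the caller uses


class StatusTable:
    """Per-proxy view of resource health that ignores stale entries."""

    def __init__(self, max_age: float = 10.0):
        self.max_age = max_age  # assumed freshness limit, in seconds
        self.entries = {}       # resource name -> Status

    def update(self, resource: str, healthy: bool, now: float) -> None:
        self.entries[resource] = Status(healthy, now)

    def eligible(self, now: float) -> list:
        """Resources that are healthy AND whose status is still fresh."""
        return sorted(
            name
            for name, status in self.entries.items()
            if status.healthy and now - status.checked_at <= self.max_age
        )
```

A resource last seen healthy a long time ago is treated the same as one never checked: the proxy does not send it requests until its status is confirmed again.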

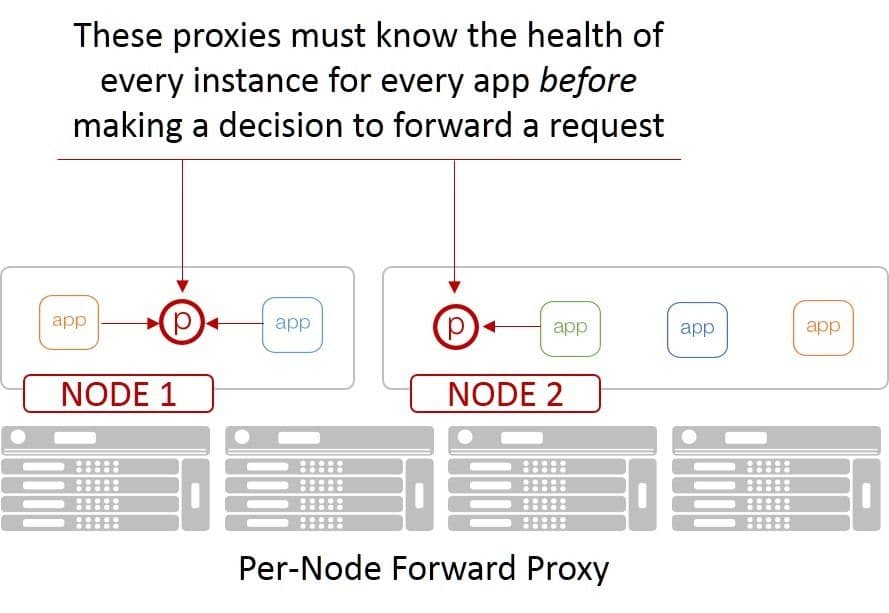

If you think about the nature of containers and the propensity to pair them with a microservice-based architecture, you can see that monitoring quickly becomes a nightmarish proposition. That’s because the most popular model of load balancing inside container environments is the forward (and sidecar) proxy. Both require that every node know about the health and well-being of every resource to which it might need to send a request. That means monitoring just about every resource.

You can imagine it’s not really efficient for a given resource to expend its own limited resources answering status checks from fifteen or twenty forward proxies. Monitoring in such a model has a significantly negative effect on both performance and capacity, which makes scale even harder.
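A quick back-of-the-envelope calculation shows how this adds up. The twenty proxies come from the paragraph above; the five-second check interval and the count of 200 resources are assumptions picked only to make the arithmetic concrete.

```python
def probes_per_resource(proxies: int, interval_seconds: float) -> float:
    """Health checks per second that ONE resource must answer."""
    return proxies / interval_seconds


def probes_cluster_wide(proxies: int, resources: int, interval_seconds: float) -> float:
    """Health checks per second across the whole environment."""
    return proxies * resources / interval_seconds


# 20 proxies, each checking every resource every 5 seconds (assumed interval):
per_resource = probes_per_resource(20, 5)       # 4.0 checks/second per resource
cluster = probes_cluster_wide(20, 200, 5)       # 800.0 checks/second overall
print(per_resource, cluster)
```

The load grows with the product of proxies and resources, so adding either one makes the monitoring overhead worse for everything already running.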

Monitoring has never quite had such a significant impact on scale as we’re seeing with containers.

And yet it’s critical – as noted above – because we don’t want to waste time with ‘dead end’ resources if we can avoid it.

The challenge of necessary monitoring is one of the reasons the service mesh continues to gain favor (and traction) as the future model of scale within container environments.

Because monitoring is not optional, but it shouldn’t be a burden, either.
