Throughout F5’s AI factory series, we have introduced and defined AI factories, explored their elements, and examined how application delivery and security choices affect performance. In this article, the fifth in our series, we explore the critical role multicloud networking plays in delivering inference and supporting data movement for an AI factory, which F5 defines as a massive storage, networking, and computing investment serving high-volume, high-performance training and inference requirements.

F5’s AI Reference Architecture Diagram

Navigating distributed architecture: location impacts workload performance

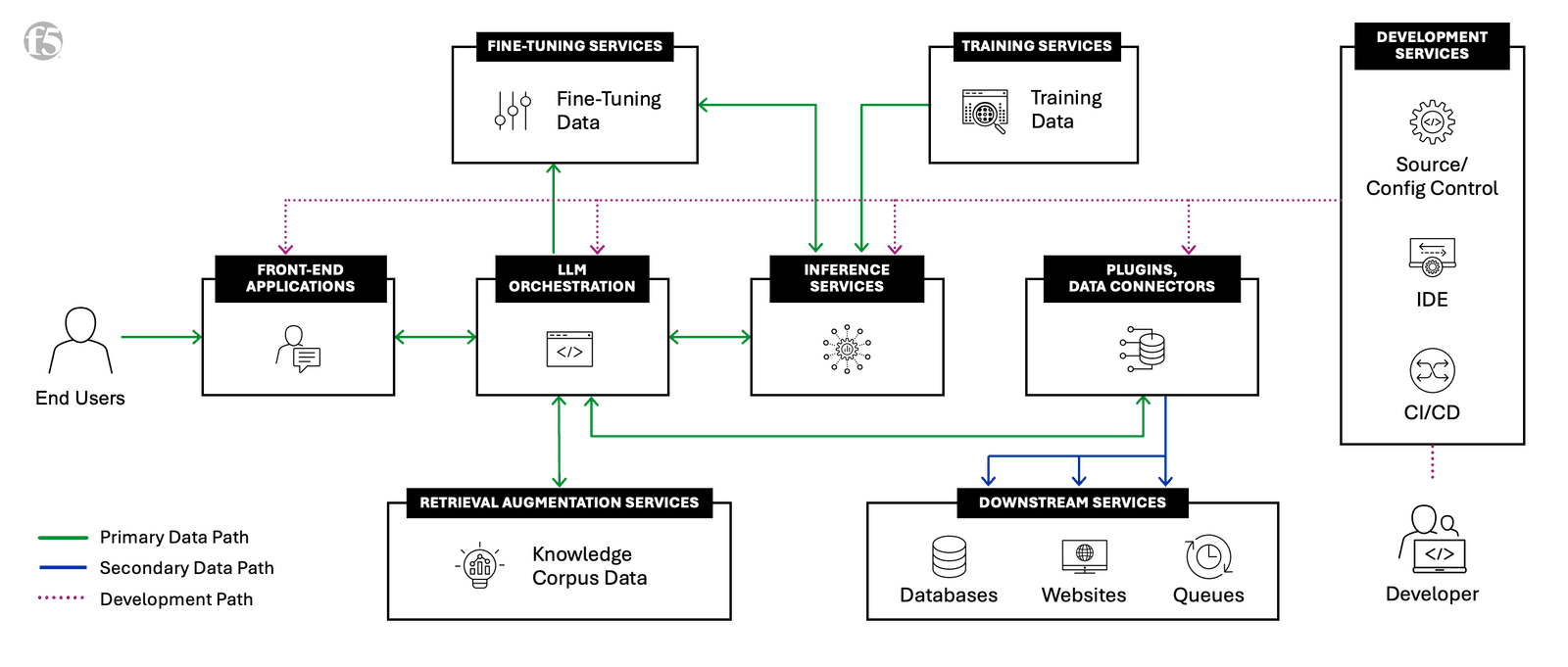

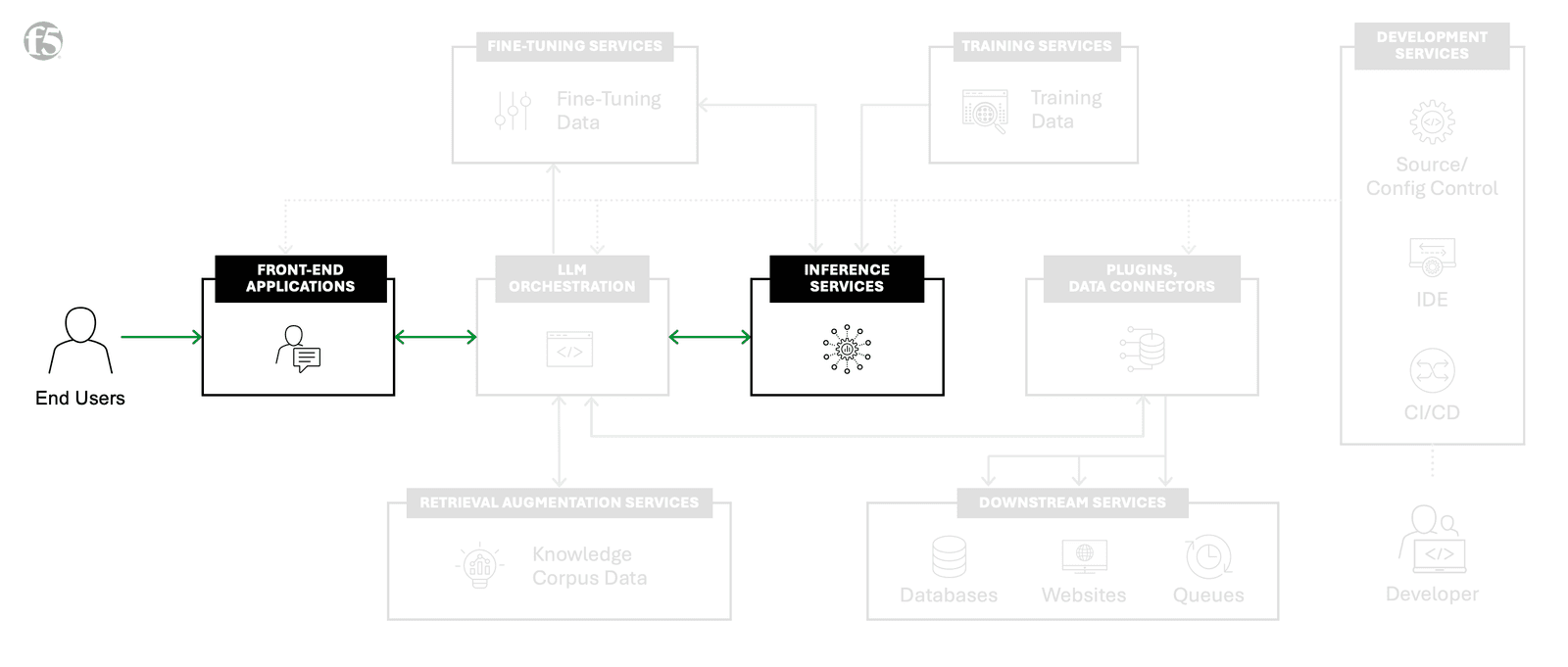

Distributed interconnection points are at the core of AI factories and drive the operation of their storage, networking, and computing infrastructure. As enterprises design their AI factories, many services will likely be physically located within the same data center or close by. Other services, however, may be distributed geographically. Front-end applications, inference services, and the models they require perform best when they are deployed near where they are consumed. Retrieval-Augmented Generation (RAG) knowledge corpus data may also be involved if the design calls for RAG, but for now we will focus on front-end applications, inference services, and model deployment.

Inference services and front-end applications

After reviewing Google's list of 185 real-world generative AI use cases from the world’s leading organizations, we observed that many of these companies rely on strategically chosen locations to serve AI workloads to global customers. The distributed architecture of an AI factory must therefore be deliberately planned around the end-user experience. F5’s 2024 State of Application Strategy Report found that 88% of organizations operate a hybrid cloud model and that 94% of respondents experience multicloud challenges.

Multi-layered security: learnings from software-defined networks (SDNs)

So, what is the best way to enable secure, optimized communication between AI factories and the instances where inference is consumed? The answer revolves around networking. To understand why, let’s revisit an industry buzzword that was largely overlooked outside the service provider world: software-defined networks (SDNs), which have been central to the security of 3GPP architectures such as 4G and 5G. The security success of 3GPP architectures can be attributed to their adherence to strict application isolation.

SDNs offer a powerful solution by implementing Layer 2 and Layer 3 zero-trust principles: traffic cannot route to an application unless it is verified and processed through a software-defined, deterministic routing infrastructure. This allows each component of an application to be scaled independently, both locally and globally, for optimal performance and security. SDNs also route application traffic by name rather than by IP address, which mitigates issues such as IP address overlap and lets applications and security solutions scale seamlessly across regions and environments. Finally, the explicit routing and tunneling within SDNs provide a robust defense against attacks, because unauthorized access would require owning a resource within the SDN as well as complying with its strict communication protocols.
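To make name-based routing and deny-by-default access concrete, here is a minimal Python sketch. It is not how F5 or any particular SDN implements these ideas; the service names, sites, and addresses are hypothetical. Two sites deliberately reuse the private address 10.0.0.10, which is harmless because lookups are keyed by service name, and no traffic is routed unless the (source, destination) pair is explicitly allowed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    site: str
    ip: str
    port: int


# Hypothetical registry keyed by service name. Two sites reuse 10.0.0.10;
# the overlap is harmless because callers never address endpoints by IP.
REGISTRY: dict[str, Endpoint] = {
    "inference.us-east": Endpoint("us-east-dc", "10.0.0.10", 8000),
    "inference.eu-west": Endpoint("eu-west-cloud", "10.0.0.10", 8000),
    "model-store.core": Endpoint("core-factory", "10.0.1.20", 9000),
}

# Hypothetical allow list of (source, destination) service pairs.
# Any pair not listed here is denied.
ALLOWED: set[tuple[str, str]] = {
    ("frontend.us-east", "inference.us-east"),
    ("frontend.eu-west", "inference.eu-west"),
    ("inference.us-east", "model-store.core"),
    ("inference.eu-west", "model-store.core"),
}


def route(src: str, dst: str) -> Endpoint:
    """Resolve `dst` for `src` only if policy allows the pair (deny by default)."""
    if (src, dst) not in ALLOWED:
        raise PermissionError(f"{src} -> {dst} is not permitted")
    try:
        return REGISTRY[dst]
    except KeyError:
        raise LookupError(f"no endpoint registered for {dst}") from None
```

In this sketch the policy check runs before the name lookup, so an unauthorized caller learns nothing about where a service lives.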

Large Tier 1 telecommunications providers have demonstrated the effectiveness of these principles at scale, so it makes sense to apply them to even larger workloads in multicloud networking (MCN) for AI factories.

How to secure connectivity at AI-scale

This sounds easy, right? The smartphone you may be reading this on likely has a GPU, 5G connectivity, and a passcode. Surely that is enough for private, secure connectivity? Well, not quite. For now, we’ll look at three considerations: speed, model theft, and model denial of service. These are only a starting point; more will surface as you design your AI factory and model its threat landscape.

First, let’s talk about speed. When generative AI made its initial splash with ChatGPT in late 2022, the focus was on text data. In 2024, however, we increasingly see use cases that mix other modalities, such as images, video, and other data, into the flow alongside text, as well as application-layer models built around specializations. In a distributed AI factory architecture, it might not be desirable or feasible to deploy every model everywhere; the decision may come down to factors such as data gravity, power gravity, or compute requirements. This is where a high-speed network interconnect can bridge the gaps and mitigate the performance issues that arise when dependent services are placed far from each other.
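The placement trade-off can be sketched as a simple selection rule: send each request to the lowest-latency site that actually hosts the requested model. The inventory, site names, and round-trip times here are hypothetical, and a real deployment would use live measurements rather than a static table.

```python
from collections.abc import Mapping

# Hypothetical inventory: not every model is deployed at every site.
SITE_MODELS: dict[str, set[str]] = {
    "core-factory": {"llm-70b", "vision-large", "embed-small"},
    "cloud-frankfurt": {"llm-70b", "embed-small"},
    "edge-tokyo": {"embed-small"},
}


def pick_site(model: str, rtt_ms: Mapping[str, float]) -> str:
    """Return the lowest-latency reachable site that hosts `model`.

    `rtt_ms` maps site name to measured round-trip time (ms) from the client.
    """
    candidates = [
        site
        for site, models in SITE_MODELS.items()
        if model in models and site in rtt_ms
    ]
    if not candidates:
        raise LookupError(f"no reachable site hosts {model}")
    return min(candidates, key=lambda site: rtt_ms[site])
```

With hypothetical round-trip times from a Tokyo client of `{"edge-tokyo": 3.0, "core-factory": 150.0, "cloud-frankfurt": 230.0}`, `embed-small` is served from `edge-tokyo`, while `llm-70b` goes to `core-factory` at 150 ms because no nearby site hosts it. That second case is exactly where the speed of the interconnect back to the core shapes the user experience.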

Next, consider model theft, one of the OWASP Top 10 risks for large language models (LLMs) and generative AI apps. Any business looking to use generative AI for competitive advantage will incorporate its intellectual property into the system, whether by training its own model on corporate data or by fine-tuning an existing model. In these scenarios, just as with your other business systems, your AI factory creates value through a model you must protect. To prevent model theft in a distributed architecture, ensure that the model, updates to the model, and the data sources the application needs to access are encrypted and protected by access controls.
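Encryption in transit and at rest is normally provided by the network tunnel and the storage layer rather than by application code, so this Python sketch covers two complementary controls instead: a deny-by-default access check on who may pull a model, and an HMAC-SHA256 integrity check so that a tampered model update is rejected. The model name, service identities, and key handling are hypothetical assumptions.

```python
import hashlib
import hmac

# Hypothetical policy: which service identities may pull each model artifact.
MODEL_ACL: dict[str, set[str]] = {
    "acme-finetuned-llm": {"inference.us-east", "inference.eu-west"},
}


def authorize(identity: str, model: str) -> None:
    """Deny by default: raise unless `identity` is explicitly allowed to fetch `model`."""
    if identity not in MODEL_ACL.get(model, set()):
        raise PermissionError(f"{identity} may not fetch {model}")


def sign_update(key: bytes, artifact: bytes) -> str:
    """Publisher side: HMAC-SHA256 tag over the model artifact bytes."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_update(key: bytes, artifact: bytes, tag: str) -> bool:
    """Receiver side: recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign_update(key, artifact), tag)
```

In practice the key would come from a secrets manager rather than source code, and a site would call `authorize` before serving an artifact and `verify_update` before loading one. A shared HMAC key keeps the example to the standard library; when updates fan out to many sites, asymmetric signatures may be preferable so that receivers cannot forge updates themselves.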

Finally, let’s consider model denial of service, also on the OWASP Top 10 for LLMs and generative AI apps. As AI applications gain trust, they are increasingly used in critical systems, whether a significant revenue-driving system for your business or a life-sustaining system in a healthcare setting. Access to the front end and to inference must be designed so that every entry point is resilient, controlled, and secured. This applies to end-user access as well as to connections from inference services back to the core AI factory.
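One common building block for resilient, controlled access is per-client rate limiting. This Python sketch uses a token bucket, a standard technique that is not specific to F5 or to the OWASP guidance; the rate and capacity values are hypothetical. The `cost` parameter lets a caller charge more for expensive requests, for example in proportion to an estimated token count, since model denial of service often comes from resource-heavy queries rather than request volume alone.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TokenBucket:
    """Allows `rate` units per second on average, with bursts up to `capacity`."""

    rate: float
    capacity: float
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        # Start full so a new client can burst immediately.
        self.tokens = self.capacity
        self.last = self.clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should reject, e.g. with HTTP 429


# Hypothetical limits: 5 requests/s sustained, bursts of 10, per client.
_buckets: dict[str, TokenBucket] = {}


def admit(client_id: str, cost: float = 1.0) -> bool:
    """Returns True if this client's request may be forwarded to inference."""
    bucket = _buckets.get(client_id)
    if bucket is None:
        bucket = _buckets[client_id] = TokenBucket(rate=5.0, capacity=10.0)
    return bucket.allow(cost)
```

Keeping one bucket per client means a single noisy caller exhausts only its own budget, leaving capacity for everyone else.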

Built-in visibility, orchestration, and encryption

Secure multicloud networking solutions provide the connectivity, security, and visibility needed to design the distributed aspects of an AI factory architecture. F5 Distributed Cloud Network Connect addresses all of the above and more. Distributed Cloud Network Connect also offers unique customer edge solutions that provide universal connectivity, whether you want to run inference in the public cloud, in your own or co-located data centers, or at the edge on hardware of your choice. Deployment is simple and fast, and once deployed, the customer edge establishes connectivity on its own.

- Connect at speed: F5 Distributed Cloud Services is built on a global, high-speed interconnected network that is among the most highly peered networks in the world. Enterprises can be confident that their AI factories have the fastest connectivity possible to their distributed services. Distributed Cloud Network Connect enables quick provisioning of sites, allowing enterprises to deliver AI services immediately.

- Connect with security: Distributed Cloud Network Connect is secure by default. Connectivity is encrypted, and you have full control over what can and cannot connect to an AI factory and its distributed services. Additionally, customer edges can enable services beyond those discussed so far, including Web Application and API Protection (WAAP).

- Connect with visibility: Distributed Cloud Services also offers a SaaS-based console with detailed dashboards that provide insight into the health of application connectivity at any point in the network. This allows teams to proactively identify where trouble may be arising and address it before the issue compounds.

Designing your AI factory is no small feat, and enterprises want to reap the benefits of AI as quickly as possible while ensuring maximum security. Being able to reliably deploy multiple secure functions of your AI factory will allow faster innovation, while freeing up time to focus on the aspects of AI factories that bring true business differentiation and a competitive advantage. If you want to discover more about multicloud networking, watch our Brightboard Lesson or explore F5 Distributed Cloud Network Connect.

F5’s focus on AI doesn’t stop here—explore how F5 secures and delivers AI apps everywhere.

Interested in learning more about AI factories? Explore others within our AI factory blog series:

- What is an AI Factory?

- Retrieval-Augmented Generation (RAG) for AI Factories

- Optimize Traffic Management for AI Factory Data Ingest

- Optimally Connecting Edge Data Sources to AI Factories

- The Power and Meaning of the NVIDIA BlueField DPU for AI Factories

- API Protection for AI Factories: The First Step to AI Security

- The Importance of Network Segmentation for AI Factories

- AI Factories Produce the Most Modern of Modern Apps: AI Apps
