In early 2018, I kept having similar conversations with friends and colleagues who were experts in networking and emerging 5G standards. We all shared a vague discomfort that we were rushing toward a transition with a LOT of unknowns. We all understood that 5G was going to push a transition to containers and cloud-native architecture, but we also knew that Kubernetes and other cloud-native tools were originally created with a totally different set of use cases in mind. What we all saw (and probably many reading this post also observed) was that our industry was on a collision course with heartburn.

Since those apprehensive days, Communication Service Providers (CSPs) have begun the real work of migrating to cloud-native infrastructure. Early pioneers are indeed running into unexpected barriers: critical areas of Kubernetes networking, as originally designed, cannot meet the demands of service provider use cases. Whether a telco's goal is a 5G Standalone (SA) Core deployment or a modernization initiative built on a distributed cloud architecture, Kubernetes must be adapted to support network interoperability, carrier-grade scale, and carrier security policies across the entire cloud-native infrastructure.

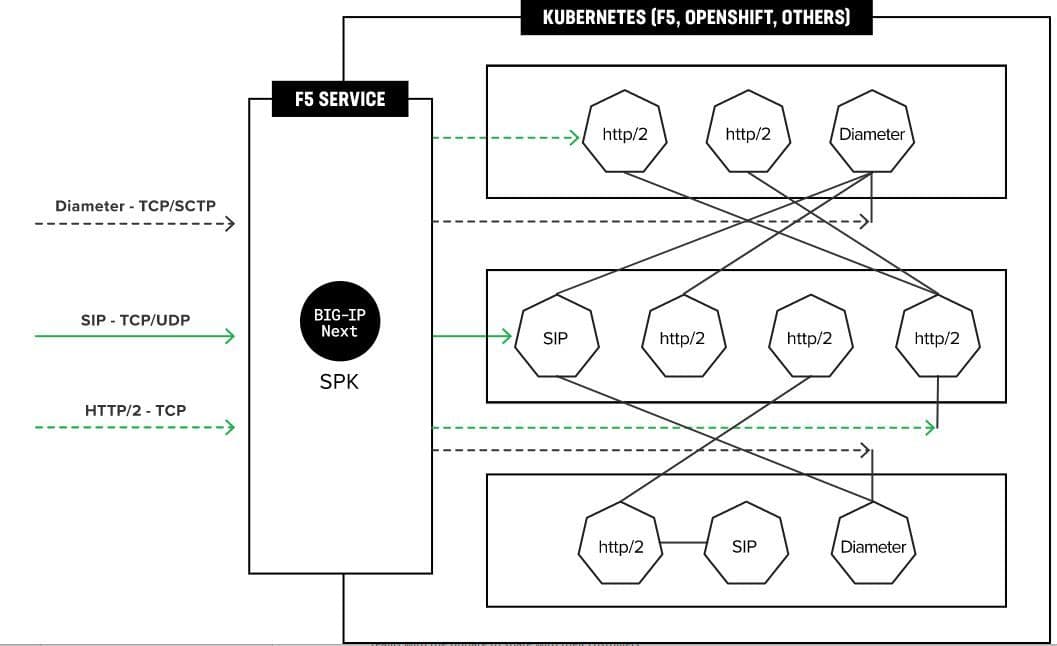

Because Kubernetes has evolved primarily around web and IT use cases running in public clouds or enterprise environments, it's understandable that the unique requirements and protocols of service provider use cases were never planned for. Fortunately, the architects of Kubernetes put in place a solid set of design patterns that make Kubernetes extensible, creating a path for fundamental telco use cases: network traffic management, visibility and control, and extended protocol support for HTTP/2, GTP, SIP, and Diameter.

Before describing what enhancements are needed to make Kubernetes fit for today’s service provider use cases, let’s first identify the unique requirements a service provider network brings.

Kubernetes must fit within a bigger ecosystem

Firstly, a Kubernetes cluster has to integrate with the wider service provider network and operations. Architectural decisions can have long-term ramifications for operational cost and complexity. Network architects must take into account multiple telco use cases, support for legacy protocols, and how dynamic changes within Kubernetes may ripple into the existing network topology and add complexity there.

Secondly, telco workloads (Network Functions) are different from IT workloads. Service provider networks and their network functions use more than just standard HTTP/HTTPS or TCP. Mobile providers will need networking support for both legacy 3G/4G protocols such as SIP, GTP, and SCTP and the HTTP/2-based interfaces of 5G. Compared with IT workloads, telco workloads also carry an additional layer of standards on top of the traditional networking layers.

Lastly, and certainly not least, security is paramount for all the new points of exposure, which require new automation, visibility, and management functions. Security must be deployed at every layer and must work together with the new clusters as new technology is introduced. Service provider SecOps teams are always looking for ways to reduce the attack surface and keep security controls consistent. Additionally, the network's broader security policies need to be kept up to date and remain adaptable over time.

Two current approaches for dealing with these functional gaps

Circumvent Kubernetes Patterns

Circumventing cloud-native patterns is a sign that architects are being forced into hacks because Kubernetes doesn't natively come with the tools to handle telco workloads. We have observed several troubling ways in which telcos are breaking the patterns:

- Circumventing Kubernetes networking – The great majority of CNFs place one or more interfaces outside the control of Kubernetes. These CNFs require direct network access (often put forward as a request for “Multus,” to allow an extra, direct-to-the-outside interface). Without a central point of networking control, this exposes internal Kubernetes complexity to the external service provider network, which leads to higher operational complexity and cost as well as a larger security attack surface. It also places a greater burden on every app/Network Function developer and network operator to manage IP addresses and to handle the dynamic nature of containers in Kubernetes, which is now exposed to the outside world.

- Separate clusters for each Network Function – Kubernetes networking does not natively provide a central point for network traffic ingress/egress. Kubernetes does provide some ingress control but almost entirely ignores egress traffic, and there is no built-in way to bind ingress and egress networking together. We have seen multiple deployments where each Network Function runs in its own cluster so that server IP addresses can be correlated with Network Function IP addresses. This adds resource overhead, which increases CapEx, and operational complexity, which increases OpEx.

Align with Kubernetes Patterns

An alternative approach is to align with Kubernetes design patterns and introduce a service proxy that will provide a “single pane of glass” for ingress/egress networking and security for the Kubernetes cluster to the outside world. The goal of a service proxy is to fill in the functional gaps Kubernetes presents when used in a service provider environment. A service proxy should:

- Use Kubernetes-native patterns to extend Kubernetes networking (Custom Resource Definitions, K8s Control Loop)

- Interface with the broader network (BGP routing, egress SNAT IP assignments, IPv4/v6 translation)

- Link ingress and egress individually for each CNF

- Provide broad L4/L7 support (TCP, UDP, SCTP, NGAP, HTTP/2, Diameter, GTP, SIP)

- Provide a security layer (TLS termination, firewall, DDoS, web application firewall)

- Present a consistent view of each CNF to the outside network, hiding highly dynamic Kubernetes events
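To make the control-loop idea in the list above more concrete, here is a minimal sketch of one level-triggered reconcile pass, written in Python for brevity (production Kubernetes controllers are typically written in Go against the Kubernetes API). It is illustrative only and is not SPK's implementation or API: `CNFBinding`, `ProxyState`, and `reconcile` are hypothetical names, the binding stands in for a custom resource, and the addresses come from documentation and common pod-CIDR ranges. It shows how a proxy could keep one stable ingress VIP and one egress SNAT address per CNF, linking ingress and egress, while the pod endpoints behind them churn.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CNFBinding:
    """Hypothetical custom-resource spec: one stable external identity per CNF."""
    name: str
    ingress_vip: str          # stable address the provider network sees (e.g. advertised via BGP)
    egress_snat_ip: str       # stable source address for traffic leaving the cluster
    pod_ips: frozenset[str]   # current pod endpoints; these change constantly


@dataclass
class ProxyState:
    """What the hypothetical proxy has programmed so far."""
    vips: dict[str, set[str]] = field(default_factory=dict)  # ingress VIP -> pod IPs
    snat: dict[str, str] = field(default_factory=dict)       # pod IP -> egress SNAT IP


def reconcile(desired: list[CNFBinding], state: ProxyState) -> list[str]:
    """One pass of a level-triggered control loop: diff desired vs. programmed
    state, apply only the differences, and return a log of the actions taken."""
    actions: list[str] = []
    want_vips = {b.ingress_vip: set(b.pod_ips) for b in desired}
    want_snat = {ip: b.egress_snat_ip for b in desired for ip in b.pod_ips}

    # Ingress side: withdraw VIPs no longer wanted, (re)program changed pools.
    for vip in sorted(set(state.vips) - set(want_vips)):
        del state.vips[vip]
        actions.append(f"withdraw {vip}")
    for vip, pods in sorted(want_vips.items()):
        if state.vips.get(vip) != pods:
            state.vips[vip] = set(pods)
            actions.append(f"program {vip} -> {sorted(pods)}")

    # Egress side: every pod of a CNF leaves the cluster from that CNF's SNAT IP.
    for pod in sorted(set(state.snat) - set(want_snat)):
        del state.snat[pod]
        actions.append(f"unmap {pod}")
    for pod, snat_ip in sorted(want_snat.items()):
        if state.snat.get(pod) != snat_ip:
            state.snat[pod] = snat_ip
            actions.append(f"snat {pod} -> {snat_ip}")
    return actions


if __name__ == "__main__":
    state = ProxyState()
    smf = CNFBinding("smf", "203.0.113.10", "203.0.113.20",
                     frozenset({"10.244.1.5", "10.244.2.7"}))
    print(reconcile([smf], state))
    # A pod is rescheduled with a new IP; the VIP and SNAT IP stay the same.
    smf = CNFBinding("smf", "203.0.113.10", "203.0.113.20",
                     frozenset({"10.244.1.5", "10.244.3.9"}))
    print(reconcile([smf], state))
```

In the second pass, only the pool behind 203.0.113.10 is reprogrammed and the new pod's egress is remapped; the addresses the outside network sees never change. Running the same input again returns an empty list, which is the idempotence a level-triggered control loop relies on.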

F5’s approach — Introducing the service proxy

F5 has chosen the second approach described above, extending Kubernetes with a service proxy that provides this missing functionality. Based on our decades of traffic management and security expertise, we believe it's a critical function for any large-scale cloud-native migration. Production-ready and available now, BIG-IP Next Service Proxy for Kubernetes (SPK) is the cloud-native infrastructure product we developed to directly address these shortcomings of Kubernetes and to let service providers create resources that “vanilla” Kubernetes lacks. SPK simplifies and secures the architecture with a framework that automates and integrates smoothly with broader network and security policies. For telcos, this approach leads to lower complexity and operational costs, plus a more resilient and secure infrastructure. We are already witnessing a slowdown in the transition to 5G SA (Telecom Operators Failing 5G SA, GlobalData Finds), and we expect the transition to falter further without a suitable service proxy. Telco customers already in production and in the midst of large-scale digital modernization are finding that SPK is proving itself to be the Kubernetes solution to a 5G network architecture problem they didn't even know they had.

F5’s SPK is now GA and is currently in production with large-scale telcos. Look for upcoming events where we will demonstrate SPK capabilities compared to other approaches, and how to certify CNFs on our platform. For more information, visit this page, and if you’d like to speak directly to an F5 team member, contact us.
