Our recent acquisition of NGINX has spurred a number of blog posts on the divide between delivery (app dev) and deployment (production). One of them briefly touched on a concept we're going to explore today: operational simplicity.

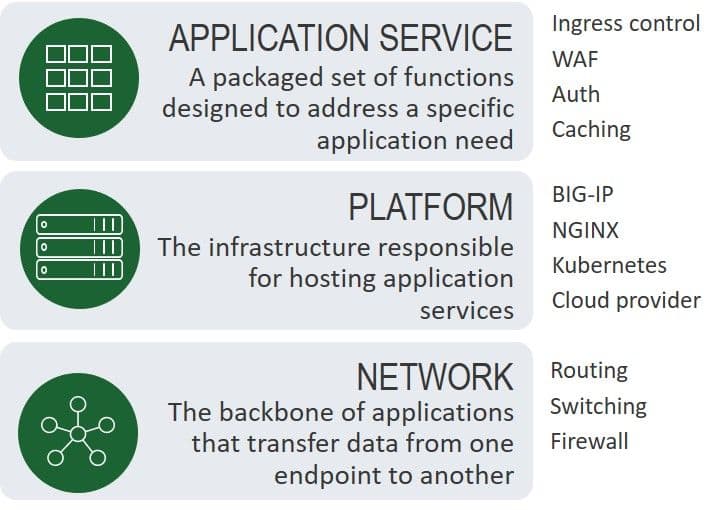

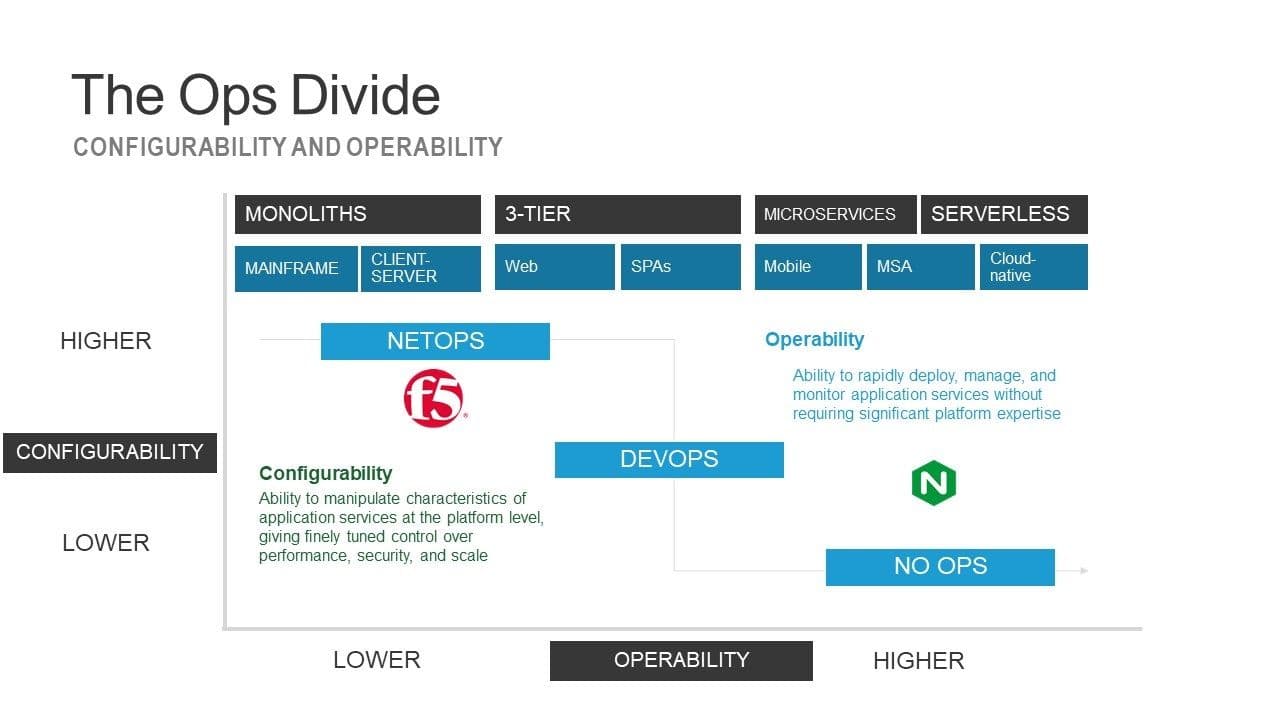

Behind the phrase "operational simplicity" exists a divide between configurability and operability. The two stand in stark contrast with one another. On the one hand is configurability. That's the ability to manipulate characteristics of application services at the network, platform, and application service layers. It's what gives you the ability to turn Nagle's algorithm on and off, and to tweak settings that impact the performance and efficiency of protocols.
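To make "turning Nagle's algorithm on and off" concrete: at the socket level it is a single option, `TCP_NODELAY`. The sketch below uses Python's standard `socket` module. The helper names `set_nagle` and `nagle_enabled` are hypothetical, and an application service would normally expose this through its own configuration rather than raw socket calls.

```python
import socket


def set_nagle(sock: socket.socket, enabled: bool) -> None:
    """Turn Nagle's algorithm on or off for a TCP socket (hypothetical helper).

    Setting TCP_NODELAY to 1 disables Nagle, so small writes are sent
    immediately instead of being coalesced while earlier data is unacknowledged.
    """
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 0 if enabled else 1)


def nagle_enabled(sock: socket.socket) -> bool:
    # A non-zero TCP_NODELAY value means Nagle's algorithm is disabled.
    return sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) == 0


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print("Nagle on by default:", nagle_enabled(s))
        set_nagle(s, enabled=False)  # favor latency for small, chatty messages
        print("Nagle on after change:", nagle_enabled(s))
```

Disabling Nagle trades some bandwidth efficiency for lower latency on small writes. Deciding when that trade is worth it requires knowing the network and the traffic mix, which is exactly the depth configurability assumes.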

On the other hand is operability. That's the ability to rapidly deploy, manage, and monitor application services. Operability assumes knowledge of the application service and little more; operators are not required to be experts in the platform or network layers. To achieve that, the knobs and buttons exposed at the application service layer may be restricted. The primary goal is to make the application service easy to deploy and operate.
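One way to picture restricting the knobs: the operator supplies only what they know about the application service (a name, a listening port, some backends), and everything else comes from defaults chosen by someone who does know the network and platform layers. This is a minimal sketch; `ServiceSpec`, `render_config`, and every default value in it are hypothetical and are not actual F5 or NGINX settings.

```python
from dataclasses import dataclass, field

# Hypothetical platform defaults, owned by whoever understands the lower layers.
# Values are illustrative placeholders, not recommendations.
PLATFORM_DEFAULTS = {
    "tcp_nodelay": True,
    "keepalive_timeout_s": 60,
    "health_check_interval_s": 5,
}


@dataclass(frozen=True)
class ServiceSpec:
    """The only inputs an operator supplies: knowledge of the app service."""
    name: str
    port: int
    backends: list[str] = field(default_factory=list)


def render_config(spec: ServiceSpec) -> dict:
    """Expand a small operator-facing spec into a full configuration."""
    if not spec.backends:
        raise ValueError(f"service {spec.name!r} needs at least one backend")
    if not 0 < spec.port < 65536:
        raise ValueError(f"invalid port {spec.port}")
    return {
        "name": spec.name,
        "listen": spec.port,
        "upstreams": list(spec.backends),
        **PLATFORM_DEFAULTS,  # lower-layer settings the operator never sees
    }


if __name__ == "__main__":
    spec = ServiceSpec(name="checkout", port=8080, backends=["10.0.0.11:80"])
    print(render_config(spec))
```

The design choice is the point: the operator-facing surface is three fields, and the lower-layer settings still exist but live behind the defaults.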

Configurability introduces complexity; operability reduces it. Configurability requires depth and breadth of knowledge across the entire stack; operability does not. Each serves a role in the secure delivery of applications.

Division of Duties

This divide matters because cloud and containers are pressuring application service infrastructure to shift toward a simpler model. Containerized environments consume a growing number of application services: load balancing, ingress control, monitoring, API gateways, and API security are all viewed as necessary components of a successful containerization strategy. In effect, architectural transformation is dividing the application services themselves. Some are better suited to deployment in the data path, others to deployment as part of the application architecture.

As a result, it is increasingly ops, specifically DevOps, that consumes application services on-premises and in the public cloud. That has a profound impact on those application services, because DevOps teams expect operability over configurability. They are not particularly interested in tuning TCP stacks; they are interested in fast, frequent deployments and maintaining application availability.

This is largely driven by a focus on time to value, which demands greater delivery and deployment velocity. No one has time to muck with infrastructure; they've got applications to get to market.

But that doesn't mean configurability isn't important. It is, especially when it comes to security and performance. A standardized network and platform stack isn't optimized for anything in particular. It can't optimize for mobile clients at the same time it's optimizing for desktop clients, and it isn't tuned for your network, whether in the cloud or on-premises.

And whether we like to admit it or not, performance is a compound measure: if your network is slow, your app is slower. Optimizing at the network and platform layers is critical to ensuring not only availability but also performance. That makes configurability something that needs to remain available to those who can take advantage of it.
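A back-of-the-envelope model shows why a slow network makes the app slower rather than merely slow: every sequential round trip the application needs pays the network's latency again. This deliberately simplified sketch (it ignores bandwidth, congestion, and parallel requests) assumes total response time is the number of round trips times the round-trip time (RTT), plus server processing time. The function name and the numbers are illustrative only.

```python
def response_time_ms(rtt_ms: float, round_trips: int, server_ms: float) -> float:
    """Simplified model: each sequential round trip pays the full RTT."""
    return round_trips * rtt_ms + server_ms


if __name__ == "__main__":
    # Illustrative numbers: 4 sequential round trips and 50 ms of server work.
    for rtt in (20, 80):
        print(f"RTT {rtt} ms -> {response_time_ms(rtt, 4, 50):.0f} ms total")
```

In this toy model, a 60 ms increase in RTT adds 240 ms to the response (130 ms becomes 370 ms), because the added latency is paid once per round trip. That multiplier is why tuning at the network and platform layers shows up in application performance.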

Not a Binary Choice

Configurability remains as important as operability. It isn't really a binary choice because the consumption of application services is not binary. Both NetOps and DevOps today consume application services; the divide lies in their expectations regarding deployment and management of those application services. NetOps needs configurability. DevOps requires operability.
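A minimal sketch of what "not a binary choice" can look like in practice: the same application service accepts no input at all from a DevOps-style operator (operability) and, optionally, expert overrides from NetOps for lower-layer settings (configurability). The setting names, default values, and allow-list below are hypothetical; this is not how F5 or NGINX products are actually configured.

```python
from typing import Mapping, Optional

# Hypothetical defaults that an operator focused on deployment never has to touch.
DEFAULTS = {"tcp_nodelay": True, "rcvbuf_bytes": 65536, "idle_timeout_s": 60}

# Hypothetical allow-list of tunables that NetOps may override.
TUNABLE = frozenset(DEFAULTS)


def effective_settings(overrides: Optional[Mapping[str, object]] = None) -> dict:
    """Merge optional expert overrides onto operator-friendly defaults."""
    overrides = overrides or {}
    unknown = set(overrides) - TUNABLE
    if unknown:
        # Reject typos and unsupported knobs instead of silently ignoring them.
        raise KeyError(f"not tunable: {sorted(unknown)}")
    return {**DEFAULTS, **overrides}


if __name__ == "__main__":
    print(effective_settings())                        # DevOps: defaults only
    print(effective_settings({"tcp_nodelay": False}))  # NetOps: one expert override
```

Both audiences use one code path; the difference is only in how much of the surface each chooses to touch.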

The business needs both, because speed of delivery to the market won't help if your app isn't fast and reliable, too.

This divide exists because technology is in a transitional state between a world where configurability was the rule and a future state where operability is expected. Today, you need a way to bridge the divide between the two, with application services that meet the operational expectations of their operators. That's why the combination of F5 and NGINX is so exciting today.

But I see a future where you can have configurability and operability at the same time. That's why the combination of F5 and NGINX is so promising for tomorrow. And if you're specifically interested in how F5 and NGINX will deliver modern API management, register for the upcoming webinar.
