Generative AI is reshaping the business landscape, accelerating innovation across industries and unlocking new competitive advantages. Yet this rapid evolution brings complex challenges in security, scalability, cost efficiency, and integration. Enterprises must build AI applications that are not only cutting-edge but also secure, scalable, and resilient by design. Recognizing these challenges, F5 has developed the F5 AI Reference Architecture to help enterprises design, deploy, and manage enterprise AI applications.

Addressing AI application challenges

When it comes to developing modern AI applications, it’s easy to focus solely on the unique risks posed by AI-specific threats like prompt injection, model poisoning, or adversarial inputs. While these are certainly critical challenges that enterprises must address, the reality is that most of the security and performance risks aren’t exclusive to AI. They stem from familiar app delivery and security challenges that IT teams are already accustomed to managing.

F5 is a leader in application security, API protection, and performance optimization—the foundational infrastructure that keeps enterprise systems running reliably. These capabilities are just as critical for AI applications, ensuring core architectures can scale, stay resilient under pressure, and deliver seamless, secure access to the massive influx of AI-driven data.

Addressing these elements is step one, but now enterprises must also tackle the unique challenges of deploying AI apps at scale, including:

- Safeguarding AI models from poisoning

- Validating generative data inputs to prevent manipulation

- Securing complex workflows involving LLMs and AI agents

- Keeping performance consistent

- Addressing data security concerns

- Controlling high infrastructure costs

F5’s AI Reference Architecture provides a clear, actionable framework for organizations trying to address these issues. We offer standardized terminology so NetOps, SecOps, and DevOps teams can navigate the complexity of deploying AI applications at scale together. This comprehensive guide lays out the challenges of managing AI workloads across hybrid multicloud environments by breaking workflows into core blocks focused on security, traffic management, and platform optimization.

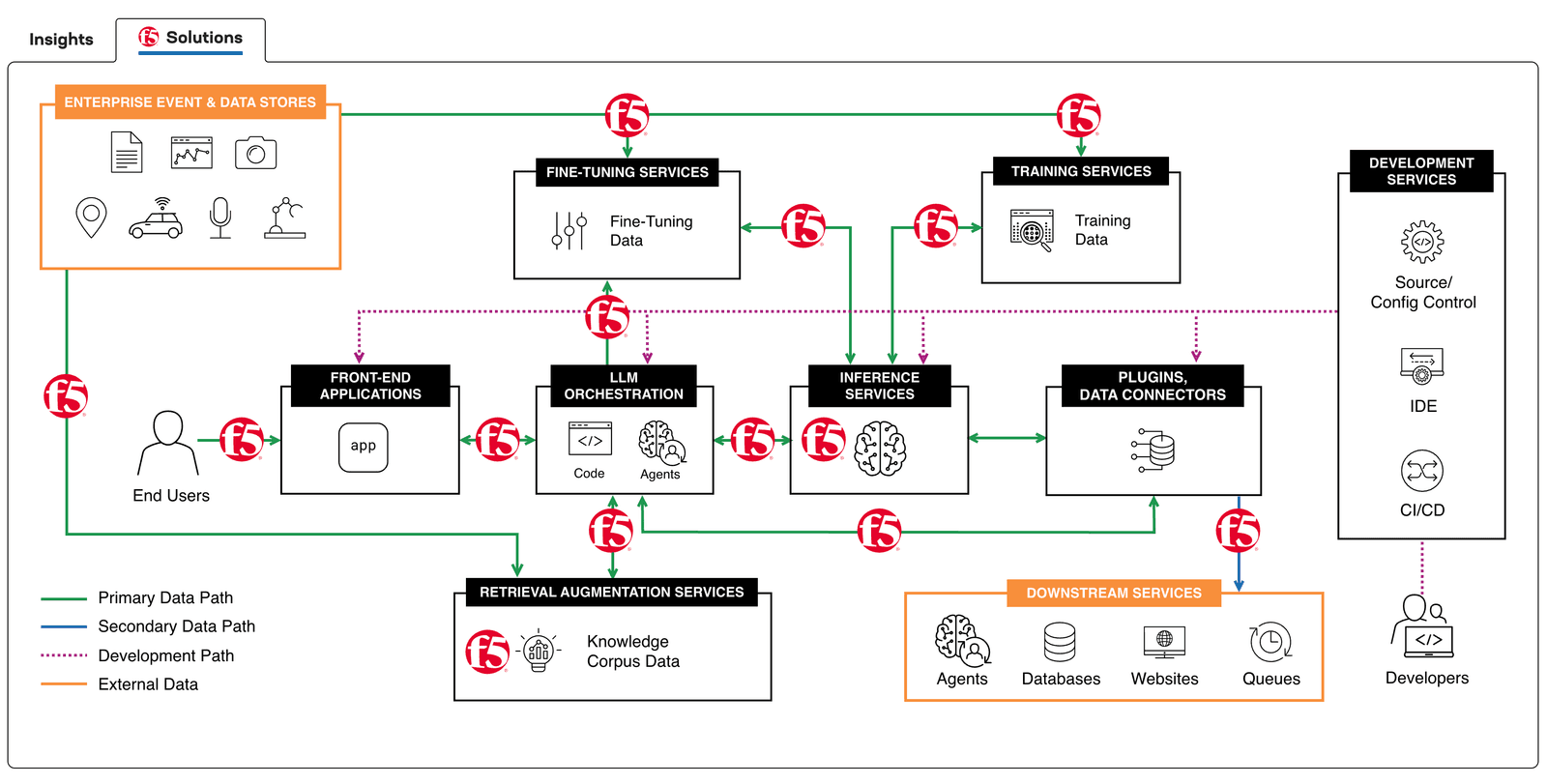

Today, we are releasing the latest iteration of the F5 AI Reference Architecture interactive experience as part of Accelerate AI, our week-long focus on how F5 helps enterprises accelerate their AI deployments. This next generation of the reference architecture shows where F5 solutions and our partners fit into a full-scale AI architecture, from data ingest to model inference, whether you are deploying an application such as a chatbot or interfacing with downstream services such as other AI agents. Given our widespread footprint in the largest global enterprises, customers asked to see where F5 fits and how the F5 Application Delivery and Security Platform supports and secures AI workloads at scale.

AI component architecture

The F5 AI Reference Architecture addresses the critical performance, security, and operational challenges essential for delivering cutting-edge AI applications. Tour the architecture.

At a high level, here are the myriad ways in which F5 is helping customers bring this full-scale architecture to life:

- Optimize traffic management end-to-end

  Streamline data flow across the AI pipeline, from the web and API front door to data ingest and GPU cluster ingress, ensuring ultra-reliable and low-latency performance.

- Secure AI workloads at every stage

  Protect AI applications, APIs, and models with advanced security measures such as zero trust architecture, API protection, and data leakage prevention to mitigate emerging threats.

- Maximize infrastructure efficiency

  Enable intelligent load balancing and efficient resource allocation, ensuring GPUs deliver full performance for AI training, inference, and fine-tuning while reducing operational costs.

- Simplify multicloud AI connectivity

  Seamlessly connect distributed data sources and AI inference workflows across hybrid and multicloud environments, ensuring scalability and compliance without sacrificing performance.

- Enable observability and control

  Deliver real-time insights into AI workflows, traffic behavior, and application interactions, empowering organizations to monitor and optimize operations proactively.

- Promote ecosystems innovation

  Recognizing the need for collaboration to address emerging risks, F5 has technology partnerships with leading security vendors and hyperscale providers. This approach enables enterprises to stay ahead in the rapidly evolving AI landscape while addressing gaps in broader architectures.

Driving innovation with F5

The F5 AI Reference Architecture is a tool for enterprises to securely scale AI initiatives by addressing foundational application risks and emerging AI-specific threats. Built for adaptability, scalability, and security, it walks through everything from AI supercomputing infrastructure to autonomous agents. With decades of app delivery and security expertise, F5 provides the technical foundation and trusted partnership needed to harness the full business potential of AI.

I encourage you to take a tour of the F5 AI Reference Architecture here. Also, be sure to check out all of F5’s latest innovations, research, and insights as part of Accelerate AI.

F5’s focus on AI doesn’t stop here—explore all the ways F5 delivers and secures AI applications.
