Everyone wants AI. Most organizations claim to be working on it. But wanting it and being ready for it are two very different things. Our latest research cuts through the noise and takes a hard look at where organizations actually stand. Spoiler: most aren’t as ready as they think.

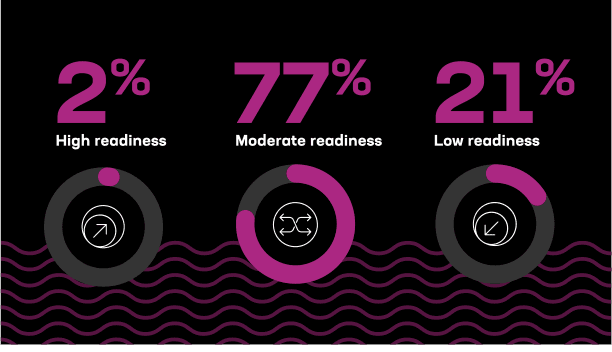

We built a composite AI Readiness Index by normalizing six signals, ranging from deployment stage to model diversity, and then grouping respondents into tiers. Only 2% landed in the “highly ready” zone. Twenty-one percent were barely out of the gate. The vast majority? Stuck in the middle.

That’s not surprising to me, nor should it be to you. Half of the index is based on adoption of specific AI capabilities: generative AI, AI agents, and agentic AI. Only 2% are engaged with all three, which makes sense given that agentic AI is still evolving as we speak.

Ope! There’s something else new.

It really is moving that fast.

Learning that most are moderately ready is a great finding, because it means they’re moving at the right pace. They’re maturing their practices, infrastructure, and application of AI to business use cases in a measured, meaningful way.
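To make the idea of a composite index concrete, here is a minimal sketch of how six signals could be normalized, averaged, and bucketed into tiers. It is an illustration only, not the report’s actual methodology: the signal names, their ranges, the equal weighting, and the tier cut-offs are all assumptions chosen for the example.

```python
# Hypothetical signals and their (min, max) ranges. The report does not
# publish these; three capability flags stand in for "half of the index".
SIGNAL_RANGES = {
    "deployment_stage": (0, 4),   # assumed scale: 0 = none, 4 = in production
    "genai_adopted": (0, 1),      # capability flag
    "agents_adopted": (0, 1),     # capability flag
    "agentic_adopted": (0, 1),    # capability flag
    "use_case_count": (0, 10),    # assumed cap of 10 use cases
    "model_diversity": (0, 5),    # assumed cap of 5 distinct models
}

# Assumed tier cut-offs on the 0..1 index; purely illustrative.
LOW_MAX = 0.33
HIGH_MIN = 0.80


def normalize(value, lo, hi):
    """Min-max scale a raw value into [0, 1], clamping out-of-range input."""
    if hi == lo:
        return 0.0
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def readiness_index(signals):
    """Equal-weight average of the normalized signals (an assumption)."""
    scores = [
        normalize(signals[name], lo, hi)
        for name, (lo, hi) in SIGNAL_RANGES.items()
    ]
    return sum(scores) / len(scores)


def tier(index):
    """Map an index value onto one of the three tiers."""
    if index >= HIGH_MIN:
        return "highly ready"
    if index > LOW_MAX:
        return "moderately ready"
    return "low readiness"


# A made-up respondent: GenAI and agents live, no agentic AI yet,
# five use cases, two models.
respondent = {
    "deployment_stage": 2,
    "genai_adopted": 1,
    "agents_adopted": 1,
    "agentic_adopted": 0,
    "use_case_count": 5,
    "model_diversity": 2,
}

print(tier(readiness_index(respondent)))
```

Because the three capability flags make up half of the average in this sketch, a respondent missing even one of them has a hard time reaching the top tier, which mirrors why so few organizations land there.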

Moderately ready: The comfortable middle

Seventy-seven percent of organizations fall into this tier. They’re past the hype, knee-deep in experimentation, and trying to make it stick. They’ve started laying tracks, but the engine isn’t running at speed yet.

Most have a GenAI project live. Some have agents in place. A few are exploring what it even means to “do” agentic AI. And while they’ve dipped a toe into multiple application types, the average AI footprint is still shallow. Think: five-ish use cases, a third of apps touched, and two models in the mix, usually one paid, one open source. That’s not bad. It’s just not enough.

Why does it matter? Because limited diversity in model types means less flexibility. A single model can't serve every workload. You need options. The same goes for applications: AI confined to a chatbot or internal assistant isn’t going to transform your org. You need depth and distribution.

Security? Still playing catch-up. Integration between AI and security teams is spotty. Firewalls are coming, but they’re mostly still on the roadmap. Data protection? A little inline enforcement here, some tokenization there, but not enough infrastructure discipline to call it systemic.

And if you think you can fake it until you make it with duct tape and wishful thinking, just wait. The price of weak security is more than just a bad press cycle. It’s regulatory blowback, loss of trust, and increasingly, existential risk.

And then there’s the data itself. Only 21% of these orgs have formal, repeatable data labeling practices. That’s like building a Formula One car and filling the tank with pond water. You can do it, but you’re not getting very far.

It may seem strange for traditionally network- and application-focused folks to care about data, but AI is only as good as the data it’s trained on, and there’s a lot more data to deal with. The axiom “garbage in, garbage out” still applies, and that goes for augmenting anything that already exists (apps, infrastructure, security) with AI. Miss this step, and you’re not building intelligence; you’re reinforcing bad guesses and automating sketchy decisions.

This tier is what we’d call “momentum without mastery.” There’s energy, there’s direction, but there’s still a lot of friction. Not from lack of will, but from the realities of scale, coordination, and architectural debt.

Low readiness: Still stuck in the lobby

Now let’s talk about the 21%. These are the organizations still trying to figure out how to get started or, worse, still pretending they don’t need to. Maybe they’ve got a chatbot prototype. Maybe. For these teams, the issue isn’t vision, it’s inertia.

Many are in regulated sectors or legacy-bound environments where risk tolerance is low and architecture is brittle. Very few of these orgs have deployed AI-specific security measures like firewalls or inline enforcement. Most rely on general IT perimeter controls that don’t scale to AI use cases. But even here, there’s movement: two-thirds say they’re piloting or planning AI projects. That’s something. Just don’t mistake ambition for execution.

The risk here isn’t missing the trend. No one’s going to miss this one. The risk is being overwhelmed by it when it hits. Without foundational work on data, infrastructure, and people, even the best model will fail to deliver.

What “highly ready” really means

You want to level up? Then build like the 2%:

- Stack more than one model into your environment, both paid and open. Flexibility matters. Not every model fits every task.

- Don’t just “have” agents; use them to handle real operational tasks. That’s how you scale.

- Go beyond isolated apps and start embedding GenAI across workflows. Integration isn’t optional; it’s the difference between novelty and utility.

- Lock down data security at the architecture level, not just with policy. Compliance doesn’t stop breaches; design does.

- And above all, design for scale from the start. Because retrofitting resilience is never fun.

Here’s the truth: readiness isn’t a product you buy. It’s not a service you subscribe to. It’s a posture, a structure, and a mindset. And if you’re reading this thinking, “We’re moderately ready,” that’s not a bad thing. That’s a milestone. But the next steps won’t happen by accident. You’ll need to be deliberate, aligned, and just a little uncomfortable.

Because AI doesn’t reward the curious. It rewards the prepared.

To dig deeper into the data and learn more about the model, you can grab our “2025 State of AI Application Strategy Report: AI Readiness Index” right here.
