New use cases sometimes require new load‑balancing algorithms, and in NGINX Plus R16 and NGINX Open Source 1.15.1 we added a new method that is particularly suitable for distributed load balancers: an implementation of the “power of two choices” algorithm.

Why Do We Need a New Load‑Balancing Algorithm?

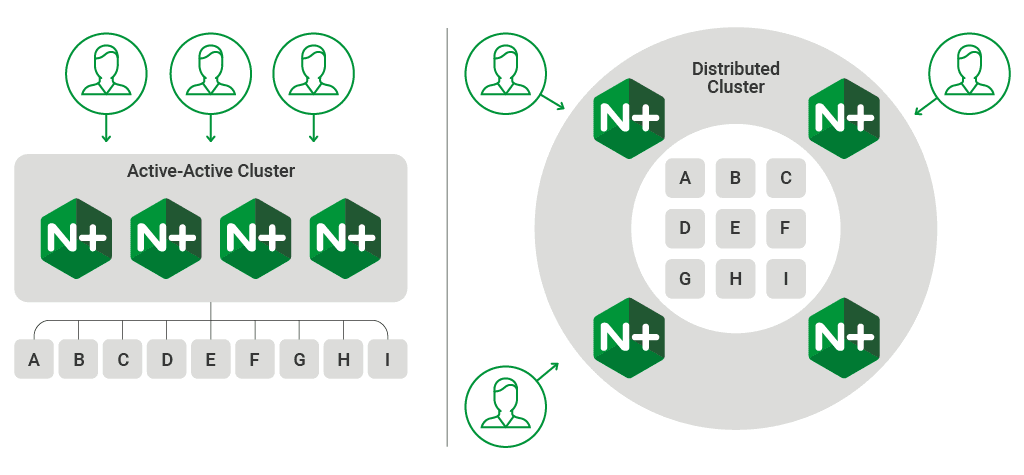

Classic load‑balancing methods such as Least Connections work very well when you operate a single active load balancer which maintains a complete view of the state of the load‑balanced nodes. The “power of two choices” approach is not as effective on a single load balancer, but it deftly avoids the bad‑case “herd behavior” that can occur when you scale out to a number of independent load balancers.

This scenario is not just observed when you scale out in high‑performance environments; it’s also observed in containerized environments where multiple proxies each load balance traffic to the same set of service instances.

A common instance of this scenario occurs when you use NGINX Ingress Controller for Kubernetes, with one load‑balancing instance per Kubernetes node.

The algorithm is referred to in the literature as “power of two choices”, because it was first described in Michael Mitzenmacher’s 1996 dissertation, The Power of Two Choices in Randomized Load Balancing. In NGINX and NGINX Plus, it’s implemented as a variation of the Random load‑balancing algorithm, so we also refer to it as Random with Two Choices.

How Does “Power of Two Choices” Work?

Let’s begin with what might be a familiar situation. You’ve just landed after a long international flight, and along with 400 other travelers, have walked into a busy arrivals hall.

Many airports employ guides in the arrivals hall. Their job is to direct each traveler to join one of the several queues for each immigration desk. If we think of the guides as load balancers:

- You and your fellow travelers are requests, each hoping to be processed as quickly as possible.

- The immigration desks are (backend) servers, each processing a backlog of requests.

- The guides maximize efficency by selecting the best server for each request.

- The methods available to the guides for selecting the best server correspond to load‑balancing algorithms.

Let’s consider how well some of the possible algorithms work in a distributed load‑balancing scenario like the arrivals hall.

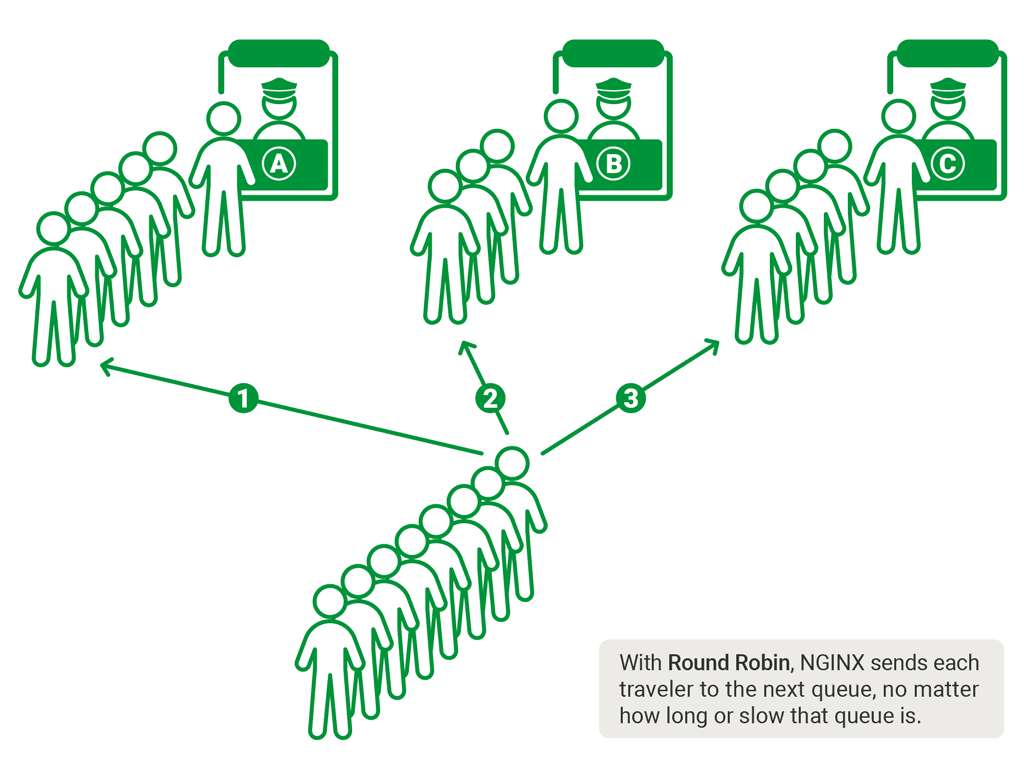

Round-Robin Load Balancing

Round robin is a naive approach to load balancing. In this approach, the guide selects each queue in rotation – the first traveler is directed to queue A, next traveler to queue B, and so on. Once a traveler is directed to the last queue, the process repeats from queue A. Round Robin is the default load‑balancing algorithm used by NGINX:

This approach works adequately, until there’s a delay in one of the queues. Perhaps one traveler has misplaced his or her documentation, or arouses suspicion in the immigration officer:

The queue stops moving, yet the guide continues to assign travelers to that queue. The backlog gets longer and longer – that’s not going to make the impatient travelers any happier!

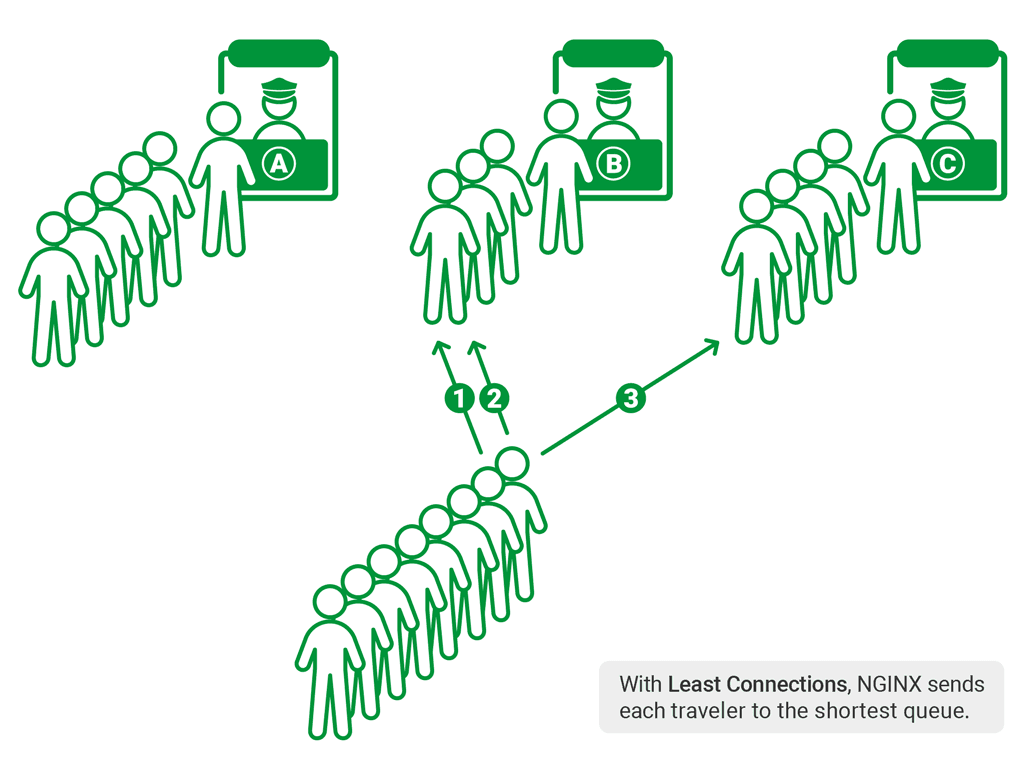

Least Connections Load Balancing

There’s a much better approach. The guide watches each queue, and each time a traveler arrives, he sends that traveler to the shortest queue. This method is analogous to the Least Connections load‑balancing method in NGINX, which assigns each new request to the server with the fewest outstanding (queued) requests:

Least Connections load balancing deals quite effectively with travelers who take different amounts of time to process. It seeks to balance the lengths of the queues, and avoids adding more requests to a queue that has stalled.

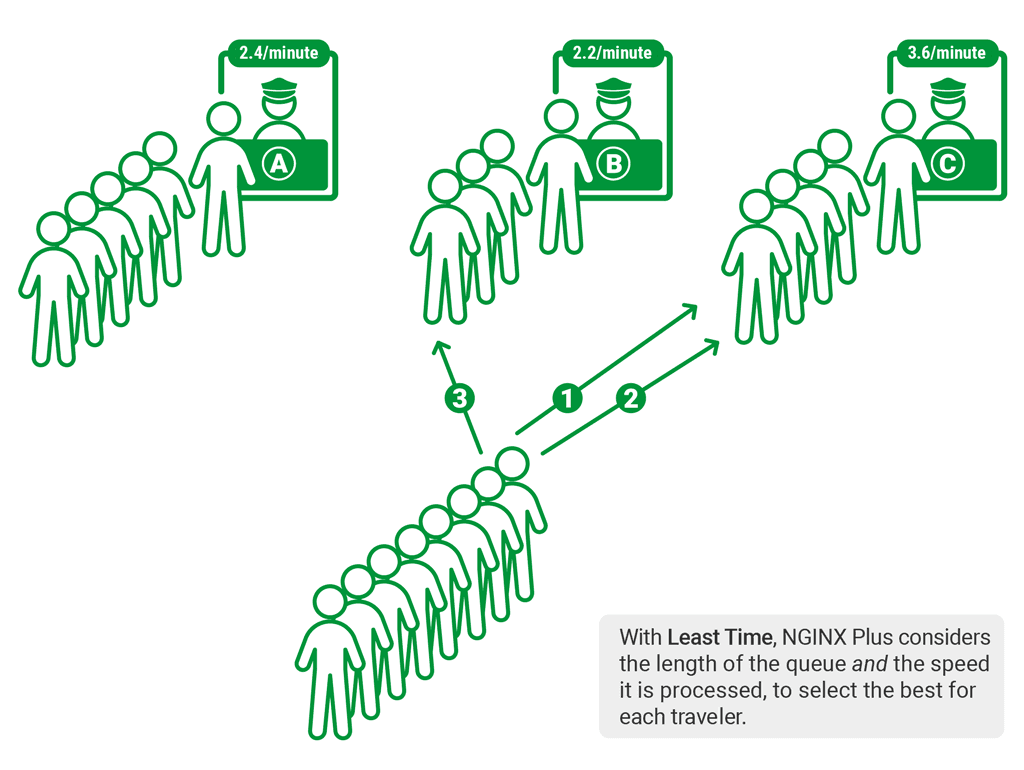

Least Time Load Balancing

We’ve seen that different passengers take different times to process; in addition, some queues are processed faster or slower than others. For example, one immigration officer might have computer problems which means he processes travelers more slowly; another officer might be a stickler for detail, quizzing travelers very closely. Other officers might be very experienced and able to process travelers more quickly.

What if each immigration booth has a counter above it, indicating how many travelers have been processed in, for example, the last 10 minutes? Then the guide can direct travelers to a queue based on its length and how quickly it is being processed. That’s a more effective way to distribute load, and it’s what the Least Time load‑balancing algorithm in NGINX Plus does:

This algorithm is specific to NGINX Plus because it relies on additional data collected with NGINX Plus’s Extended Status metrics. It’s particularly effective in cloud or virtual environments where the latency to each server can vary unpredictably, so queue length alone is not sufficient to estimate the delay.

It All Falls Apart with Multiple Guides

So far, we’ve had one guide (that is, load balancer) with a complete view of the queues and response time in the arrivals hall. That guide tries to make the best choice for each traveler based on the information he knows.

Now consider what happens if we have several guides, each directing travelers independently. The guides have independent views of the queue lengths and queue wait times – they only consider the travelers that they send to each queue.

This scenario is prone to an undesirable behavior, where all the guides notice that one queue is momentarily shorter and faster, and all send travelers to that queue. Simulations show that this “herd behavior” distributes travelers in a way that is unbalanced and unfair. In the same way, several independent load balancers can overload some upstream servers, no matter which “best choice” algorithm you use.

The “Power of Two Choices” Load-Balancing Algorithm

The solution lies in the “power of two choices” load‑balancing algorithm. Instead of making the absolute best choice using incomplete data, with “power of two choices” you pick two queues at random and chose the better option of the two, avoiding the worse choice.

“Power of two choices” is efficient to implement. You don’t have to compare all queues to choose the best option each time; instead, you only need to compare two. And, perhaps unintuitively, it works better at scale than the best‑choice algorithms. It avoids the undesired herd behavior by the simple approach of avoiding the worst queue and distributing traffic with a degree of randomness.

Using “Power of Two Choices” with NGINX and NGINX Plus

In NGINX and NGINX Plus, the “power of two choices” load‑balancing method is implemented as a variant of the Random algorithm, so we call it Random with Two Choices.

In NGINX Open Source, Random with Two Choices chooses between two randomly selected servers based on which currently has fewer active connections. This is the same selection criterion as used for the Least Connections algorithm. (This is also the default algorithm in NGINX Plus, and can be explicitly configured by adding the least_conn parameter.)

NGINX Plus also supports the least_time parameter, which uses the same selection criterion as the Least Time algorithm. As with that algorithm, you further choose between considering either:

- The time to receive the response header (

least_time=header) - The time to receive the full response (

least_time=last_byte), as in the following snippet. The Least Time criterion is ideal for situations where the latency to each upstream server can vary.

Conclusion

NGINX and NGINX Plus support a range of load‑balancing methods; in this article, we did not consider the deterministic Hash and IP Hash methods.

The Least Connections (and for NGINX Plus, Least Time) methods are very effective at balancing loads when the load balancer has a complete view of the workload assigned to each node and its past performance. They are less effective when several load balancers are assigning requests, and each has an incomplete view of the workload and performance.

“Power of two choices” uses a biased random algorithm, and has been demonstrated to be effective at balancing loads when each load balancer has an incomplete or delayed view. It avoids the “herd behavior” exhibited by other algorithms that seek to make a best decision on every request.

Consider Random with Two Choices, NGINX’s implementation of “power of two choices”, for very high‑performance environments and for distributed load‑balancing scenarios. A good use case arises when using multiple Ingress controllers on Kubernetes.

About the Author

Related Blog Posts

Automating Certificate Management in a Kubernetes Environment

Simplify cert management by providing unique, automatically renewed and updated certificates to your endpoints.

Secure Your API Gateway with NGINX App Protect WAF

As monoliths move to microservices, applications are developed faster than ever. Speed is necessary to stay competitive and APIs sit at the front of these rapid modernization efforts. But the popularity of APIs for application modernization has significant implications for app security.

How Do I Choose? API Gateway vs. Ingress Controller vs. Service Mesh

When you need an API gateway in Kubernetes, how do you choose among API gateway vs. Ingress controller vs. service mesh? We guide you through the decision, with sample scenarios for north-south and east-west API traffic, plus use cases where an API gateway is the right tool.

Deploying NGINX as an API Gateway, Part 2: Protecting Backend Services

In the second post in our API gateway series, Liam shows you how to batten down the hatches on your API services. You can use rate limiting, access restrictions, request size limits, and request body validation to frustrate illegitimate or overly burdensome requests.

New Joomla Exploit CVE-2015-8562

Read about the new zero day exploit in Joomla and see the NGINX configuration for how to apply a fix in NGINX or NGINX Plus.

Why Do I See “Welcome to nginx!” on My Favorite Website?

The ‘Welcome to NGINX!’ page is presented when NGINX web server software is installed on a computer but has not finished configuring