This tutorial is one of four that put into practice concepts from Microservices March 2022: Kubernetes Networking:

- Reduce Kubernetes Latency with Autoscaling

- Protect Kubernetes APIs with Rate Limiting (this post)

- Protect Kubernetes Apps from SQL Injection

- Improve Uptime and Resilience with a Canary Deployment

Want detailed guidance on using NGINX for even more Kubernetes networking use cases? Download our free eBook, Managing Kubernetes Traffic with NGINX: A Practical Guide.

Your organization just launched its first app and API in Kubernetes. You’ve been told to expect high traffic volumes (and have already implemented autoscaling to ensure NGINX Ingress Controller can quickly route the traffic), but there are concerns that the API may be targeted by a malicious attack. If the API receives a high volume of HTTP requests – a possibility with brute‑force password guessing or DDoS attacks – then both the API and app might be overwhelmed or even crash.

But you’re in luck! The traffic‑control technique called rate limiting is an API gateway use case that limits the incoming request rate to a value typical for real users. You configure NGINX Ingress Controller to implement a rate‑limiting policy, which prevents the app and API from getting overwhelmed by too many requests. Nice work!

Lab and Tutorial Overview

This blog accompanies the lab for Unit 2 of Microservices March 2022 – Exposing APIs in Kubernetes, demonstrating how to combine multiple NGINX Ingress Controllers with rate limiting to prevent apps and APIs from getting overwhelmed.

To run the tutorial, you need a machine with:

- 2 CPUs or more

- 2 GB of free memory

- 20 GB of free disk space

- Internet connection

- Container or virtual machine manager, such as Docker, Hyperkit, Hyper-V, KVM, Parallels, Podman, VirtualBox, or VMware Fusion/Workstation

- minikube installed

- Helm installed

- A configuration that allows you to launch a browser window. If that isn’t possible, you need to figure out how to get to the relevant services via a browser.

To get the most out of the lab and tutorial, we recommend that before beginning you:

- Watch the recording of the livestreamed conceptual overview

- Review the background blogs, webinar, and video

- Watch the 18‑minute video summary of the lab.

This tutorial uses these technologies: NGINX Ingress Controller, Helm, Locust, minikube, and Podinfo.

The instructions for each challenge include the complete text of the YAML files used to configure the apps. You can also copy the text from our GitHub repo. A link to GitHub is provided along with the text of each YAML file.

This tutorial includes three challenges:

- Deploy a Cluster, App, API, and Ingress Controller

- Overwhelm Your App and API

- Save Your App and API with Dual Ingress Controllers and Rate Limiting

Challenge 1: Deploy a Cluster, App, API, and Ingress Controller

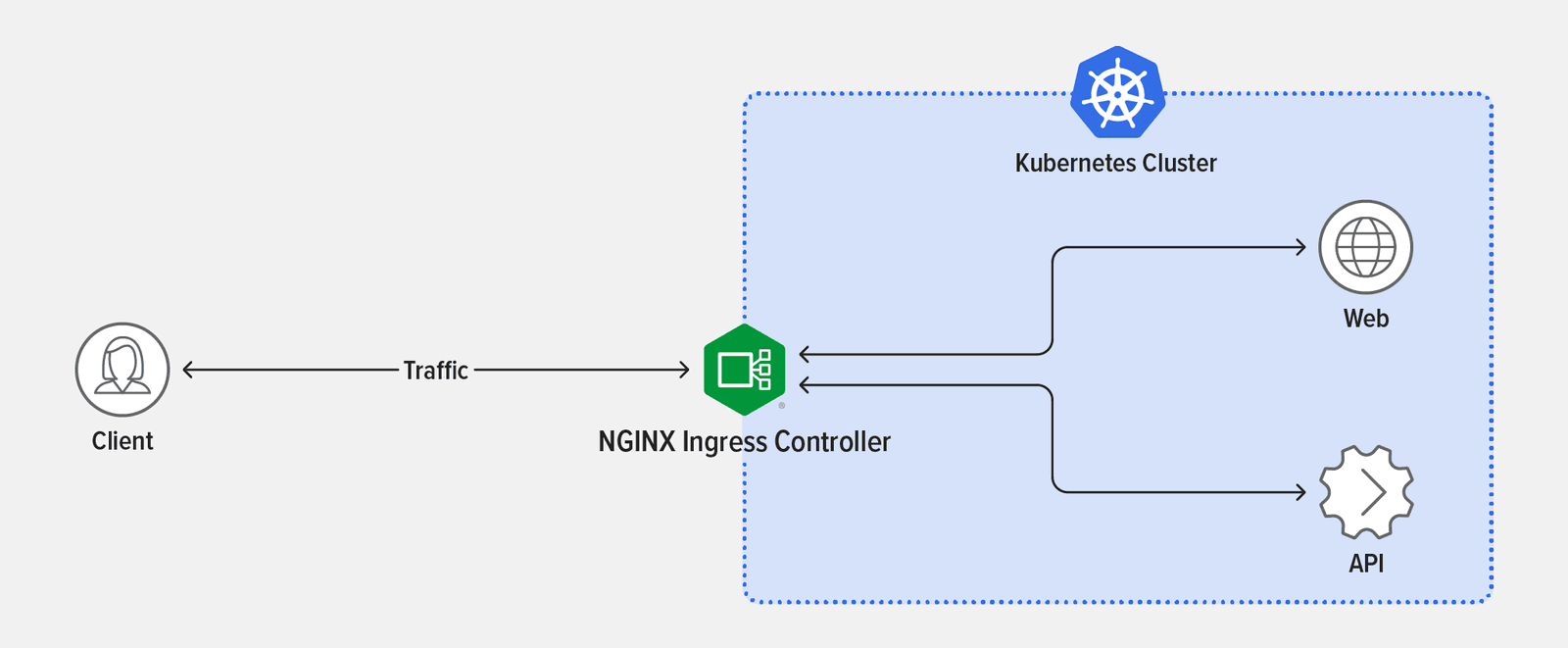

In this challenge, you deploy a minikube cluster and install Podinfo as a sample app and API. You then deploy NGINX Ingress Controller, configure traffic routing, and test the Ingress configuration.

Create a Minikube Cluster

Create a minikube cluster. After a few seconds, a message confirms the deployment was successful.

Install the Podinfo App and Podinfo API

Podinfo is a “web application made with Go that showcases best practices of running microservices in Kubernetes”. We’re using it as a sample app and API because of its small footprint.

- Using the text editor of your choice, create a YAML file called 1-apps.yaml with the following contents (or copy from GitHub). It defines a Deployment that includes:

  - A web app (we’ll call it Podinfo Frontend) that renders an HTML page

  - An API (Podinfo API) that returns a JSON payload

- Deploy the app and API:

- Confirm that the pods for Podinfo API and Podinfo Frontend deployed successfully, as indicated by the value Running in the STATUS column.

Deploy NGINX Ingress Controller

The fastest way to install NGINX Ingress Controller is with Helm.

Install NGINX Ingress Controller in a separate namespace (nginx) using Helm.

- Create the namespace:

- Add the NGINX repository to Helm:

- Download and install NGINX Ingress Controller in your cluster:

- Confirm that the NGINX Ingress Controller pod deployed, as indicated by the value Running in the STATUS column (for legibility, the output is spread across two lines).

Route Traffic to Your App
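In this section, routing is host‑based: NGINX Ingress Controller chooses a backend for each request by matching the request’s Host header against the rules in the Ingress resource. The Python sketch below models only that lookup and is not how NGINX is implemented. The hostname api.example.com and the Service name api appear in this tutorial; the hostname example.com and the Service name frontend for Podinfo Frontend are assumptions for illustration.

```python
from typing import Optional, Tuple

# Hypothetical routing table: Host header -> (Service name, port).
# "api" matches the Service used in this tutorial; "example.com" and
# "frontend" are illustrative assumptions.
ROUTES = {
    "api.example.com": ("api", 80),
    "example.com": ("frontend", 80),
}


def route(host_header: str) -> Optional[Tuple[str, int]]:
    """Return the backend for a request's Host header, or None if no rule matches."""
    # A Host header may include a port ("api.example.com:80"); match on the name only.
    hostname = host_header.split(":", 1)[0].lower()
    return ROUTES.get(hostname)


if __name__ == "__main__":
    print(route("api.example.com"))  # ('api', 80)
    print(route("example.com"))      # ('frontend', 80)
    print(route("unknown.test"))     # None
```

This is why the test of Podinfo API sets the hostname api.example.com explicitly: every request goes to the same NGINX Ingress Controller, and the Host header decides which service receives it.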

- Using the text editor of your choice, create a YAML file called 2-ingress.yaml with the following contents (or copy from GitHub). It defines the Ingress manifest required to route traffic to the app and API.

- Deploy the Ingress resource:

Test the Ingress Configuration

- To ensure your Ingress configuration is performing as expected, test it using a temporary pod. Launch a disposable BusyBox pod in the cluster:

- Test Podinfo API by issuing a request to the NGINX Ingress Controller pod with the hostname api.example.com. The output shown indicates that the API is receiving traffic.

- Test Podinfo Frontend by issuing the following command in the same BusyBox pod to simulate a web browser and retrieve the web page. The output shown is the HTML code for the start of the web page.

- In another terminal, open Podinfo in a browser. The “greetings from podinfo” page indicates Podinfo is running. Congratulations! NGINX Ingress Controller is receiving requests and forwarding them to the app and API.

- In the original terminal, end the BusyBox session:

Challenge 2: Overwhelm Your App and API

In this challenge, you install Locust, an open source load‑generation tool, and use it to simulate a traffic surge that overwhelms the API and causes the app to crash.

Install Locust

- Using the text editor of your choice, create a YAML file called 3-locust.yaml with the following contents (or copy from GitHub). The ConfigMap object defines a script called locustfile.py that generates requests to be sent to the pod, complete with the correct headers. The traffic is not distributed evenly between the app and API – requests are skewed to Podinfo API, with only 1 of 5 requests going to Podinfo Frontend. The Deployment and Service objects define the Locust pod.

- Deploy Locust:

- Verify the Locust deployment. In the following sample output, the verification command was run just a few seconds after the kubectl apply command, so the installation is still in progress, as indicated by the value ContainerCreating for the Locust pod in the STATUS field. Wait until the value is Running before continuing to the next section. (The output is spread across two lines for legibility.)
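Locust chooses each simulated user’s next task at random, in proportion to the weights assigned to the tasks. The Python sketch below reproduces a 1‑in‑5 skew with the standard library’s random.choices, without Locust itself, to show roughly how the traffic splits. The task names and the 4:1 weights are assumptions chosen to match the ratio described above, not the contents of locustfile.py.

```python
import random
from collections import Counter

# Hypothetical task weights matching the 1-in-5 split described above.
WEIGHTS = {"podinfo_api": 4, "podinfo_frontend": 1}


def simulate_requests(n: int, seed: int = 42) -> Counter:
    """Pick n tasks at random in proportion to their weights."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    names = list(WEIGHTS)
    picks = rng.choices(names, weights=[WEIGHTS[k] for k in names], k=n)
    return Counter(picks)


if __name__ == "__main__":
    counts = simulate_requests(10_000)
    total = sum(counts.values())
    for name, count in counts.most_common():
        print(f"{name}: {count} ({count / total:.1%})")
```

Over many requests, roughly 80% land on Podinfo API, which is what lets the API traffic dominate the shared Ingress controller in the next step.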

Simulate a Traffic Surge

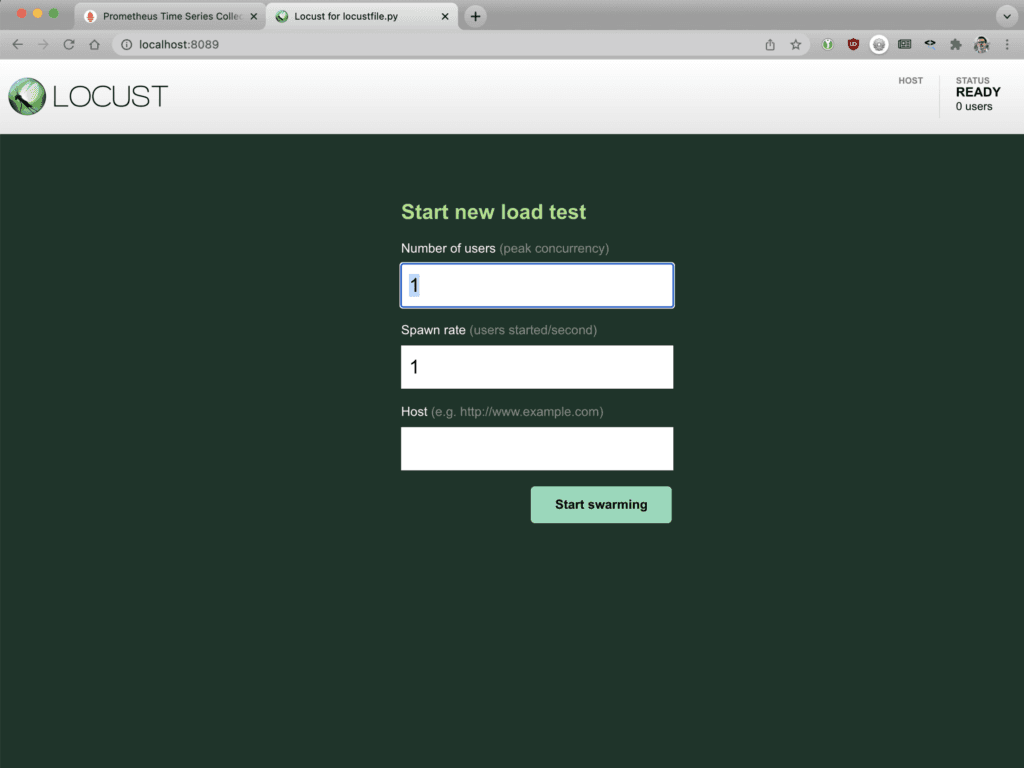

- Open Locust in a browser.

- Enter the following values in the fields:

  - Number of users – 1000

  - Spawn rate – 30

  - Host – http://main-nginx-ingress

- Click the Start swarming button to send traffic to Podinfo API and Podinfo Frontend. Observe the traffic patterns on the Locust Charts and Failures tabs:

  - Charts – As the number of API requests increases, the Podinfo API response times worsen.

  - Failures – Because Podinfo API and Podinfo Frontend share an Ingress controller, the increasing number of API requests soon causes the web app to start returning errors.

This is problematic because a single bad actor using the API can take down not only the API, but all apps served by NGINX Ingress Controller!
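The Locust settings above translate into a quick estimate of how the load builds. Locust starts spawn‑rate users per second, so 1,000 users at a spawn rate of 30 take about 33 seconds to ramp up, and the request rate keeps climbing throughout that time. The Python sketch below does the arithmetic. The one‑second wait between each user’s requests is an assumption (this tutorial doesn’t state the wait time configured in locustfile.py), and the 0.8 API share reflects the 1‑in‑5 split toward Podinfo Frontend.

```python
def ramp_up_seconds(users: int, spawn_rate: float) -> float:
    """Seconds until Locust has started every simulated user."""
    return users / spawn_rate


def offered_load(users: int, wait_seconds: float, api_share: float) -> tuple:
    """Rough steady-state request rate once all users are running.

    Assumes each user sends one request and then waits wait_seconds
    (response time is ignored). Returns (total req/s, API req/s).
    """
    total = users / wait_seconds
    return total, total * api_share


if __name__ == "__main__":
    print(f"Ramp-up: {ramp_up_seconds(1000, 30):.1f} s")  # Ramp-up: 33.3 s
    total, api = offered_load(1000, wait_seconds=1.0, api_share=0.8)
    print(f"~{total:.0f} req/s in total, ~{api:.0f} req/s to Podinfo API")
```

Even under these rough assumptions, hundreds of API requests per second pass through the same NGINX Ingress Controller that serves Podinfo Frontend.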

Challenge 3: Save Your App and API with Dual Ingress Controllers and Rate Limiting

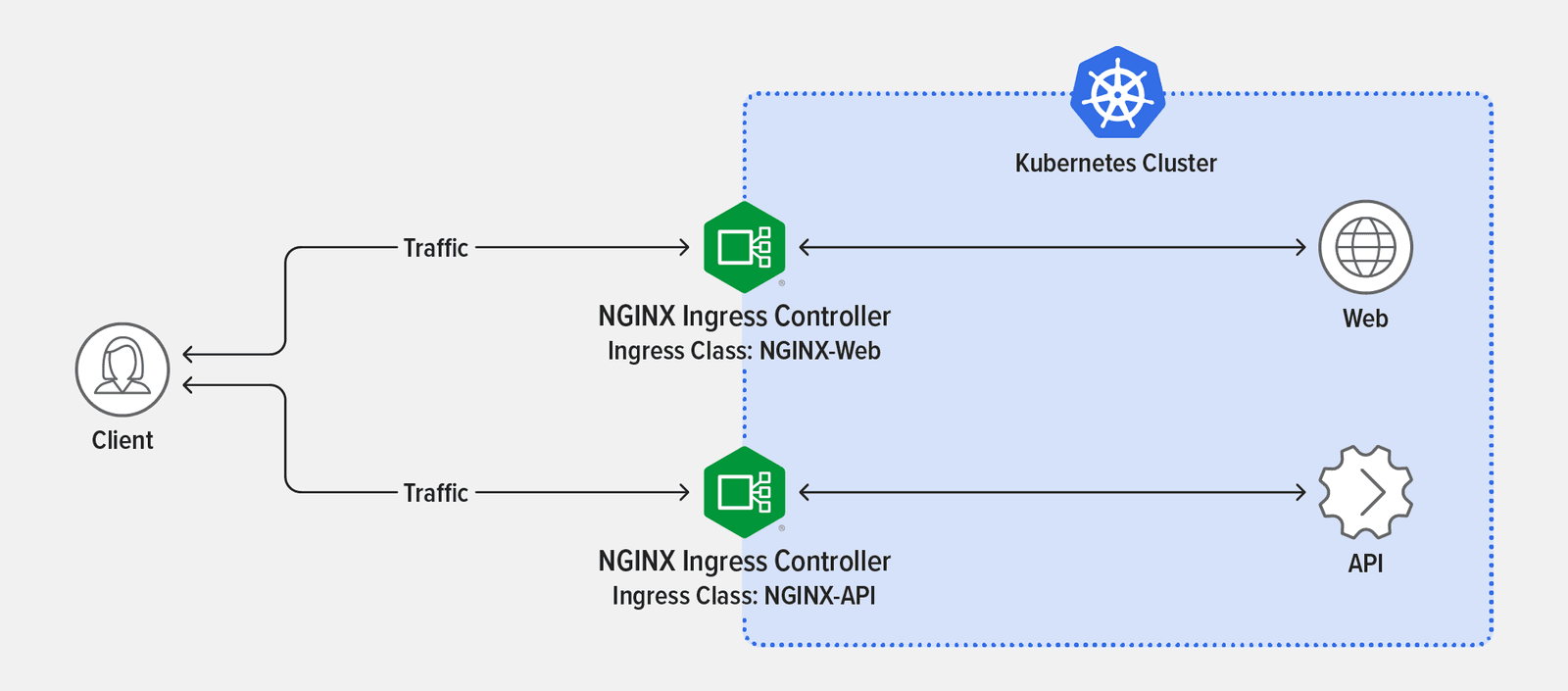

In the final challenge, you deploy two NGINX Ingress Controllers to eliminate the limitations of the previous deployment. You create a separate namespace for each one, install separate NGINX Ingress Controller instances for Podinfo Frontend and Podinfo API, reconfigure Locust to direct traffic for the app and API to their respective NGINX Ingress Controllers, and verify that rate limiting is effective.

First, let’s look at how to address the architectural problem. In the previous challenge, you overwhelmed NGINX Ingress Controller with API requests, which also impacted the app. This happened because a single Ingress controller was responsible for routing traffic to both the web app (Podinfo Frontend) and the API (Podinfo API).

Running a separate NGINX Ingress Controller pod for each of your services prevents your app from being impacted by too many API requests. This isn’t necessarily required for every use case, but in our simulation it’s easy to see the benefits of running multiple NGINX Ingress Controllers.

The second part of the solution, which prevents Podinfo API from getting overwhelmed, is to implement rate limiting by using NGINX Ingress Controller as an API gateway.

What Is Rate Limiting?

Rate limiting restricts the number of requests a user can make in a given time period. To mitigate a DDoS attack, for example, you can use rate limiting to limit the incoming request rate to a value typical for real users. When rate limiting is implemented with NGINX, requests that exceed the limit are rejected with an error response (by default, status code 503) so they never reach the API. Learn how this works in the NGINX Ingress Controller documentation.
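NGINX implements rate limiting with the leaky bucket algorithm: each client, identified by a key such as its IP address, may send requests at the configured rate, and requests that arrive faster than the bucket drains are rejected. The Python sketch below is a simplified model of that behavior for illustration, not NGINX’s code. It keeps one bucket per key and, with burst set to 0, accepts a request only if at least 1/rate seconds have passed since that client’s last accepted request. Its burst argument only loosely mirrors NGINX’s burst option: requests within the allowance are accepted immediately here, whereas NGINX delays them unless nodelay is set. The IP addresses are hypothetical.

```python
from typing import Dict, Optional


class LeakyBucket:
    """Simplified leaky-bucket limiter for a single client."""

    def __init__(self, rate_per_sec: float, burst: int = 0) -> None:
        self.rate_per_sec = rate_per_sec
        self.burst = burst
        self.excess = 0.0                    # requests still "in the bucket"
        self.last_ms: Optional[int] = None   # time of the last accepted request

    def allow(self, now_ms: int) -> bool:
        if self.last_ms is None:             # first request from this client
            self.last_ms = now_ms
            return True
        elapsed_ms = now_ms - self.last_ms
        # Drain the bucket for the elapsed time, then add this request.
        excess = max(self.excess - self.rate_per_sec * elapsed_ms / 1000 + 1, 0.0)
        if excess > self.burst:
            return False                     # rejected; state is left unchanged
        self.excess = excess
        self.last_ms = now_ms
        return True


class RateLimiter:
    """Keeps one bucket per key, e.g. per client IP address."""

    def __init__(self, rate_per_sec: float, burst: int = 0) -> None:
        self.rate_per_sec = rate_per_sec
        self.burst = burst
        self.buckets: Dict[str, LeakyBucket] = {}

    def allow(self, key: str, now_ms: int) -> bool:
        if key not in self.buckets:
            self.buckets[key] = LeakyBucket(self.rate_per_sec, self.burst)
        return self.buckets[key].allow(now_ms)


if __name__ == "__main__":
    limiter = RateLimiter(rate_per_sec=10)   # comparable to 10 requests/s, no burst
    # One hypothetical client sends a request every 50 ms for one second (20 requests).
    results = [limiter.allow("10.0.0.1", t) for t in range(0, 1000, 50)]
    print(sum(results), "accepted,", results.count(False), "rejected")  # 10 accepted, 10 rejected
    # A different client has its own bucket and is unaffected.
    print(limiter.allow("10.0.0.2", 950))    # True
```

Because each key gets its own bucket, one client sending too many requests is throttled without affecting other clients.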

What Is an API Gateway?

An API gateway routes API requests from clients to the appropriate services. A common misconception about this simple definition is that an API gateway is a unique piece of technology. It’s not. Rather, “API gateway” describes a set of use cases that can be implemented via different types of proxies – most commonly an ADC or load balancer and reverse proxy, and increasingly an Ingress controller or service mesh. Rate limiting is a common use case for deploying an API gateway. Learn more about API gateway use cases in Kubernetes in How Do I Choose? API Gateway vs. Ingress Controller vs. Service Mesh on our blog.

Prepare Your Cluster

Before you can implement the new architecture and rate limiting, you must delete the previous NGINX Ingress Controller configuration.

- Delete the NGINX Ingress Controller configuration:

- Create a namespace called nginx‑web for Podinfo Frontend:

- Create a namespace called nginx‑api for Podinfo API:

Install the NGINX Ingress Controller for Podinfo Frontend

- Install NGINX Ingress Controller:

- Create an Ingress manifest called 4-ingress-web.yaml for Podinfo Frontend (or copy from GitHub).

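The manifest for Podinfo API, which is served by the nginx‑api NGINX Ingress Controller, adds the rate limiting. The Policy object limits each client IP address (the ${binary_remote_addr} key) to 10 requests per second and tracks clients in a 10‑MB shared memory zone; the VirtualServer object routes requests for api.example.com to the api Service and applies the policy:

```yaml
apiVersion: k8s.nginx.org/v1
kind: Policy
metadata:
  name: rate-limit-policy
spec:
  rateLimit:
    rate: 10r/s
    key: ${binary_remote_addr}
    zoneSize: 10M
---
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: api-vs
spec:
  ingressClassName: nginx-api
  host: api.example.com
  policies:
  - name: rate-limit-policy
  upstreams:
  - name: api
    service: api
    port: 80
  routes:
  - path: /
    action:
      pass: api
```

- Deploy the new manifest: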

Reconfigure Locust

Now, reconfigure Locust and verify that:

- Podinfo API doesn't get overloaded.

- No matter how many requests are sent to Podinfo API, there is no impact on Podinfo Frontend.

Perform these steps:

- Change the Locust script so that:

  - All requests to Podinfo Frontend are directed to the nginx‑web NGINX Ingress Controller at http://web-nginx-ingress.nginx-web

  - All requests to Podinfo API are directed to the nginx‑api NGINX Ingress Controller at http://api-nginx-ingress.nginx-api

  Because Locust supports just a single URL in the dashboard, hardcode the values in the Python script using the YAML file 6-locust.yaml with the following contents (or copy from GitHub). Take note of the URLs in each task.

- Deploy the new Locust configuration. The output confirms that the script changed but the other elements remain unchanged.

- Delete the Locust pod to force a reload of the new ConfigMap. To identify the pod to remove, the argument to the kubectl delete pod command is expressed as piped commands that select the Locust pod from the list of all pods.

- Verify Locust has been reloaded (the value for the Locust pod in the AGE column is only a few seconds).
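The URLs hardcoded in the Locust script are Kubernetes Service DNS names of the form <service>.<namespace>, which pods in other namespaces (here, the Locust pod) can resolve. The Python sketch below spells out the fully qualified name each short form expands to, assuming the default cluster domain cluster.local; it reads the Service and namespace names straight from the two URLs in the script.

```python
from urllib.parse import urlsplit

CLUSTER_DOMAIN = "cluster.local"  # Kubernetes default; your cluster may differ


def service_fqdn(url: str, cluster_domain: str = CLUSTER_DOMAIN) -> str:
    """Expand a URL whose host is <service>.<namespace> into the
    fully qualified Service DNS name."""
    host = urlsplit(url).hostname
    service, namespace = host.split(".", 1)  # expects exactly <service>.<namespace>
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    for url in ("http://web-nginx-ingress.nginx-web", "http://api-nginx-ingress.nginx-api"):
        print(url, "->", service_fqdn(url))
```

Because each URL names a different Service in a different namespace, Podinfo Frontend and Podinfo API traffic now reach separate NGINX Ingress Controller pods.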

Verify Rate Limiting

- Return to Locust and change the parameters in these fields:

  - Number of users – 400

  - Spawn rate – 10

  - Host – http://main-nginx-ingress

- Click the Start swarming button to send traffic to Podinfo API and Podinfo Frontend. In the Locust title bar at top left, observe that as the number of users in the STATUS column climbs, so does the value in the FAILURES column. However, the errors no longer come from Podinfo Frontend but from Podinfo API, because the rate limit set for the API means excessive requests are rejected. In the trace at lower right you can see that NGINX returns the message 503 Service Temporarily Unavailable, which is part of the rate‑limiting feature and can be customized. The API is rate limited, and the web application is always available. Well done!
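The policy keys the limit on ${binary_remote_addr}, the client IP address, which helps explain why failures climb along with the user count. Assuming NGINX sees every simulated user arriving from the Locust pod’s single IP address, all of Locust’s API traffic shares one allowance of 10 requests per second, so each added user only increases the share of rejected requests. The Python sketch below does this idealized arithmetic; one request per second per user is an assumption, the 0.8 API share reflects the 1‑in‑5 split toward Podinfo Frontend, and the numbers you see in Locust will differ.

```python
def rate_limited_outcome(api_requests_per_sec: float, limit_per_sec: float = 10.0) -> tuple:
    """Idealized steady state for traffic that shares one rate-limit key.

    Returns (accepted req/s, rejected req/s, rejected fraction).
    """
    accepted = min(api_requests_per_sec, limit_per_sec)
    rejected = api_requests_per_sec - accepted
    fraction = rejected / api_requests_per_sec if api_requests_per_sec else 0.0
    return accepted, rejected, fraction


if __name__ == "__main__":
    API_SHARE = 0.8        # 1 of 5 requests goes to Podinfo Frontend
    REQS_PER_USER = 1.0    # assumed requests per second per simulated user
    for users in (10, 50, 100, 400):
        api_rate = users * REQS_PER_USER * API_SHARE
        _, _, frac = rate_limited_outcome(api_rate)
        print(f"{users:>4} users: ~{api_rate:.0f} API req/s, {frac:.0%} rejected")
```

With real users, each client IP address gets its own allowance, so a single abusive client is throttled without reducing the rate available to everyone else.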

Next Steps

In the real world, rate limiting alone isn't enough to protect your apps and APIs from bad actors. You need to implement at least one or two of the following methods for protecting Kubernetes apps, APIs, and infrastructure:

- Authentication and authorization

- Web application firewall and DDoS protection

- End-to-end encryption and Zero Trust

- Compliance with industry regulations

We cover these topics and more in Unit 3 of Microservices March 2022 – Microservices Security Pattern in Kubernetes. To try NGINX Ingress Controller for Kubernetes with NGINX Plus and NGINX App Protect, start your free 30-day trial today or contact us to discuss your use cases. To try NGINX Ingress Controller with NGINX Open Source, you can obtain the release source code, or download a prebuilt container from Docker Hub.
