A service mesh connects your microservices and helps you scale Kubernetes while minimizing management overhead.

A service mesh connects microservices, making it easier to secure and orchestrate containerized components, and simplifying the work for cloud architects.

First it was applications everywhere, for everything; then it was microservices (and the container technologies that enable them). What’s the next innovation that will help apps deliver a stellar user experience? Service mesh.

The microservice approach relies on small, single-purpose components that are used to form larger, more complex applications. You can make a microservice for just about anything, and because each one focuses on a single capability, they are relatively quick to develop. However, the more capabilities you want, the more complex it becomes to coordinate the microservice components.

Traditional applications (sometimes called monolithic applications) are slower to develop, but they have advantages. Because many separate functions are executed by a single program, coordination between the components is generally built into the system. There is only a single codebase, which makes traditional apps easier to troubleshoot and debug.

A service mesh is a transparent layer of infrastructure that facilitates communication between microservices. This gives apps built with microservices the same advantages as a traditional app—things like resiliency, observability, and security.

The shift from monolith to microservices was driven primarily by the need for application teams to be more agile and to execute more quickly. Breaking a large application into many small elements allowed individual teams to specialize. This let them iterate faster than they could when they had to be concerned about every aspect of the monolith.

However, this speed and agility come with a price: increased complexity. Microservices simplify development but introduce new challenges, especially around securing and orchestrating multiple, ephemeral components. Service mesh is a way for developers to offload complexity as microservice-enabled applications scale.

“The goal of multidimensional visibility is to eliminate data silos and make information accessible within an organization.”

In a recent podcast, Andrew Jenkins, co-founder and CTO of Aspen Mesh, put it this way: “In a service mesh environment, the biggest change is not in what app developers need to do, but all the things they’re expected to not do.” For example, the security component of a service mesh handles authentication and manages whether one application trusts another. When a request fails, it is the platform operator, not the app developer, who has the tools to define whether the request is retried or the failing microservice is retired.

In this new environment, developers can focus on handing their requests off to the service mesh layer. The platform operator, with access to tools that observe all communications between microservices, can offer the app developer a whole new range of data and metrics. “In a service mesh, you only have to fix that break in one place and then it’s fixed everywhere. That same consistency story appears again and again,” says Jenkins.
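The retry decision described above can be illustrated with a minimal Python sketch. It assumes the platform operator defines one retry policy that the mesh layer applies around every outbound call, so application code contains no retry logic of its own. `RetryPolicy`, `call_with_policy`, and the default values are hypothetical names chosen for illustration. They are not part of Aspen Mesh or any other mesh, which typically express this as declarative proxy configuration rather than code. The sketch covers only retries, not retiring a failing service.

```python
import time
from dataclasses import dataclass
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class RetryPolicy:
    """Hypothetical operator-owned retry policy (not a real mesh API)."""
    max_attempts: int = 3          # total tries, including the first one
    base_backoff_s: float = 0.1    # delay before the first retry, in seconds
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError)


def call_with_policy(request: Callable[[], T], policy: RetryPolicy,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Wrap an application call the way a mesh proxy would, so the
    application code itself contains no retry logic."""
    for attempt in range(1, policy.max_attempts + 1):
        try:
            return request()
        except policy.retry_on:
            if attempt == policy.max_attempts:
                raise  # retry budget exhausted: surface the failure
            # Exponential backoff: base, 2 * base, 4 * base, ...
            sleep(policy.base_backoff_s * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


# Usage: an upstream that fails twice, then succeeds.
attempts = []


def flaky_upstream() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("upstream reset")
    return "ok"


delays = []  # record backoff delays instead of actually sleeping
result = call_with_policy(flaky_upstream, RetryPolicy(), sleep=delays.append)
print(result, delays)  # ok [0.1, 0.2]
```

Because the policy is defined once and applied by a shared layer, changing `max_attempts` changes behavior for every caller at once. That is the “fix it in one place” consistency Jenkins describes.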

Think of a service mesh as a powerful new toolbox. There are tools for security, transaction processing, encryption, recommendation engines, distributed tracing, and the list goes on. But powerful tools won’t help you if you don’t know how to use them. To succeed, early adopters of service mesh need a strong cloud architecture practice and a platform team experienced in defining infrastructure services for developers.

Many organizations are successfully running Kubernetes and other container technologies without a service mesh. Standard Kubernetes implementations handle deployment concerns very well. At scale, however, Kubernetes applications run into runtime concerns that Kubernetes addresses less well, such as encryption, circuit breaking, and dynamic traffic routing. NGINX and other container ingress solutions fill much of this gap by providing ways to capture, shape, control, and visualize network traffic as it comes into an application. But when developers start to reach the limits of what those tools can deliver, a service mesh promises a new level of visibility into what containerized microservices are doing.
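Circuit breaking, one of the runtime concerns named above, can be sketched in a few lines of Python. This minimal version assumes a simple consecutive-failure threshold and a fixed reset timeout. Both are hypothetical parameters chosen for illustration. After too many failures, the breaker stops sending requests to the upstream and fails fast. Once the timeout passes, it lets a probe request through to test recovery. In a mesh, logic like this would run in the proxy layer under the platform operator’s configuration. `CircuitBreaker` here is not the API of NGINX, Kubernetes, or any particular mesh.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the upstream while the circuit is open."""


class CircuitBreaker:
    """Minimal closed / open / half-open breaker. Illustrative only: not
    thread-safe, and not the API of NGINX or any service mesh."""

    def __init__(self, failure_threshold: int = 5, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout_s = reset_timeout_s      # how long to fail fast before probing
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout_s:
            return "half-open"  # timeout elapsed: let a probe request through
        return "open"

    def call(self, request: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("upstream marked unhealthy; failing fast")
        try:
            result = request()
        except Exception:
            self.failures += 1
            # A failed probe, or too many consecutive failures, (re)opens the circuit.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result


# Usage with a fake clock so the example runs instantly.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout_s=10.0, clock=lambda: now[0])


def failing_upstream() -> str:
    raise ConnectionError("upstream down")


for _ in range(2):
    try:
        breaker.call(failing_upstream)
    except ConnectionError:
        pass
print(breaker.state)  # open: further calls raise CircuitOpenError immediately
now[0] = 10.0         # the reset timeout has elapsed
print(breaker.state)  # half-open
print(breaker.call(lambda: "ok"), breaker.state)  # ok closed
```

Failing fast keeps callers from piling requests onto a dependency that is already down. The half-open probe lets traffic resume automatically once the upstream recovers, without anyone editing application code.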

Right now, service mesh is still cutting edge. For organizations that are already fully committed to Kubernetes, or that plan to develop with Kubernetes, it will be easier to adopt a service mesh early than to retrofit one down the road. If you are scaling Kubernetes and want to minimize management overhead, you need a service mesh.

For organizations with complex security needs or that rely heavily on microservice-derived applications, a service mesh can accelerate deployment and maintenance, harden security, and help optimize resource consumption. Service mesh environments increase visibility, delivering micro- and macro-level data that can be used to optimize applications.

Service mesh is the next level of advancement for microservices and container technologies. With the right strategy, it can be a powerful tool.

Speed minus the typical compromises—it’s yours with the right cloud architecture.

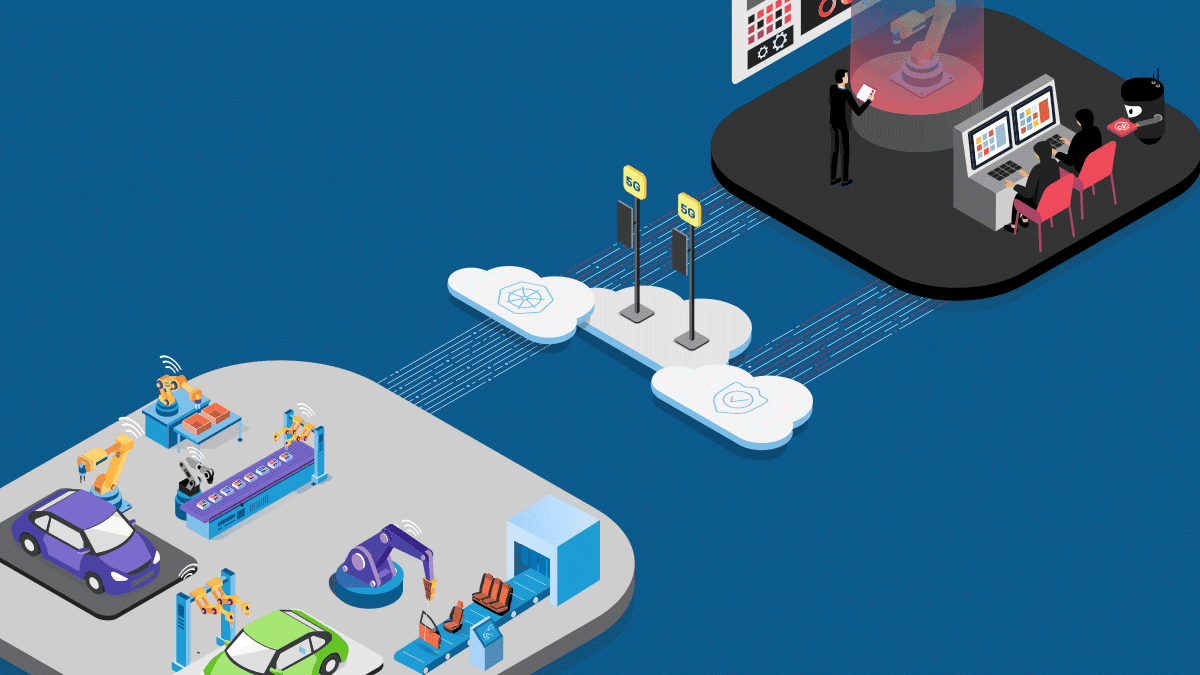

F5’s 5G infrastructure solutions provide the tools service providers need to modernize their network infrastructure.

Discover how to connect, secure, and scale microservices.

Discover service mesh and its alternatives in the What Lies Beneath podcast.