We’ve reached an inflection point in the application landscape. Two data points from the F5 2024 State of Application Strategy Report underscore the significant need for automation and app support services that can meet organizations where they are on their journey. The report tells us that:

- 41% of respondents manage more APIs than applications.

- Modern applications have outpaced traditional apps (51% vs. 49%).

New developments in modern applications and workloads bring fundamental shifts to app architectures (not to mention delivery and security requirements). Put simply, the need to align behind containerization, container orchestration, automation, and microservices networking has never been greater. The same report named microservices networking the most exciting technology trend of 2024. That’s not surprising when you consider that over 60% of enterprises have adopted Kubernetes.

However, this newer way to build and operate apps and APIs comes with significant complexity and risk. According to Red Hat’s 2024 State of Kubernetes Security report, almost 9 in 10 organizations reported a Kubernetes or container security incident in the last 12 months, which can lead to lost reputation and revenue. The main concerns (and likely culprits) are vulnerabilities and misconfigurations, which occur often in highly dynamic application deployment environments like containers.

Today’s enterprise application landscape is highly complex, with organizations deploying hundreds of apps and APIs, distributed globally, in form factors and operating environments that include:

- Monolithic or three-tier apps running in on-prem data centers and colocation facilities.

- Modern apps running in public clouds or customer edge locations.

- SaaS applications that are becoming increasingly business critical.

- Digital experiences leveraging microservices and APIs deployed across all these environments, based on business case.

Add the breakneck pace of innovation and widespread adoption of AI (with its close relationship to containers and microservices) and this complexity is on track to get out of hand quickly.

At F5, we affectionately call this situation the “Ball of Fire,” and it leads to stratification of operations, less agility, and slower time to value. Adding to these operational concerns is the growing attack surface created by this heterogeneous application environment. That expanded surface is a prime target for bad actors and increasingly sophisticated threats (such as AI-driven attacks). Together, these factors create a highly undesirable, and ultimately untenable, situation: poor visibility, little policy consistency, and gaps in app security, performance, and availability.

The answer to both the Ball of Fire and container-specific concerns lies with a new generation of application delivery controllers (ADCs). These ADCs can provide full lifecycle automation that generates insights, enforces consistent policy, and delivers and secures apps across operating environments (especially containers).

The growing demand for modern app delivery in Kubernetes environments

One of the most challenging aspects of operating apps and APIs in Kubernetes environments is a lack of consistency. Kubernetes workloads, and containers in general, are ephemeral by nature: they’re spun up and down constantly, subject to frequent changes in policy and configuration, and meant to scale as demand and business needs dictate. This dynamic environment is great for operating modern apps, microservices, and APIs, but it can prove difficult to manage and control.

These shifting requirements present significant challenges for DevOps, DevSecOps, IT, and security teams attempting to build, deploy, and operate apps and services in these environments, including configuration drift, scaling bottlenecks, and security vulnerabilities. And considering that the 30 most common app delivery and security technologies have an average deployment rate of 93%, these challenges aren’t going away.

Operating apps in this changing environment requires that app delivery and security services—such as load balancing, content caching, WAFs, ingress controllers, and API gateways—be just as elastic and dynamic.

Enter automation to streamline these operations for apps in Kubernetes.

Whether through “traditional” automation (i.e., triggers based on defined thresholds and CI/CD workflows) or the next generation of AI-enabled automation, automated app delivery and security are becoming the norm among technologists.
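To make “traditional” threshold-based automation concrete, here is a minimal Python sketch of the kind of rule such a trigger might apply. It borrows the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance, 0.1 by default). The function name `desired_replicas` and the replica bounds are illustrative assumptions, not part of any F5 product.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Hypothetical threshold trigger modeled on the Kubernetes HPA scaling formula."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")

    ratio = current_metric / target_metric

    # Close enough to the target: leave the deployment alone to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally to how far the metric is from its target.
    desired = math.ceil(current_replicas * ratio)

    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target: scale out.
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
    # 63% against a 60% target is within the 10% tolerance: no change.
    print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```

The tolerance band is the design choice worth noticing: without it, small metric fluctuations around the threshold would trigger constant scale-up and scale-down events.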

We learned through research conducted for our 2024 State of Application Strategy Report that a quarter of organizations use automated app delivery, and those same teams are almost twice as likely to automate their app and API security (43%).

As organizations continue to prioritize AI in their strategic decisions, automation will only increase. In fact, automation is now the top use for generative AI, with the goal of reducing complexity, speeding responses, and improving efficacy in app delivery and security.

Automation in action: F5 BIG-IP Ingress for Kubernetes

Effective automation depends on visibility and data inputs that identify performance bottlenecks, so the system can respond quickly by adjusting policies and ensure the correct configurations are in place. That means monitoring the environment, which is a significant challenge in Kubernetes.

F5 BIG-IP is a holistic, best-in-class solution for providing advanced application delivery and security services—largely because of the app and API data it unlocks. BIG-IP is an ideal solution for the ever-changing nature of Kubernetes environments.

Here’s what BIG-IP provides:

- Comprehensive visibility into your Kubernetes applications, enabling proactive health checks and real-time insights for early issue detection.

- Critical application data points like CPU, memory, and network usage, service performance, response times, and latency.

- Essential ability to tune and optimize app delivery and security to enhance the performance, reliability, and stability of your Kubernetes clusters.

BIG-IP for modern applications using automation in Kubernetes

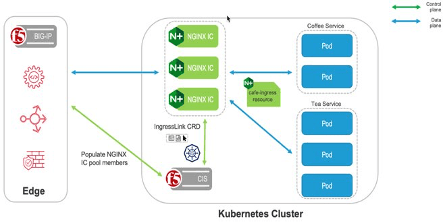

For a more “analog” automation solution (read: without AI), there is BIG-IP for modern apps in Kubernetes, enabled by BIG-IP Container Ingress Services (CIS) and F5 IngressLink. This solution manages and optimizes network traffic for apps deployed in Kubernetes.

This deployment provides innovative front-door features such as advanced traffic management, multi-cluster load balancing, SSL termination, and advanced protocol capabilities, making it ideal for the demanding ingress needs of multi-cluster Kubernetes environments. It also brings the best available security enhancements that BIG-IP offers enterprise-wide to Kubernetes environments, including DDoS protection and access control, advanced web application firewall, network firewall, and more.

CIS listens for changes in Kubernetes environments and automatically updates the front-door BIG-IP to automate tasks, enforce consistent policies, and ensure app availability and resilience. Together, BIG-IP and CIS are designed to simplify Kubernetes deployments by providing centralized control points for your entire distributed infrastructure.
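The behavior described here, watching Kubernetes and updating the front door to match, follows the familiar controller pattern: compare the desired state (what Kubernetes says is running) with the current state (what the load balancer has configured) and act on the difference. The Python sketch below illustrates that diff step for pool members. It is not CIS code: `PoolMember`, `reconcile_pool`, `apply_events`, and the event tuples are hypothetical stand-ins, and a real controller would read endpoints from the Kubernetes API and write changes to BIG-IP rather than work on in-memory sets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PoolMember:
    """Hypothetical pool member: one pod endpoint behind a virtual server."""
    address: str
    port: int


def reconcile_pool(
    desired: set[PoolMember], current: set[PoolMember]
) -> tuple[set[PoolMember], set[PoolMember]]:
    """Return (members to add, members to remove) so current matches desired."""
    return desired - current, current - desired


def apply_events(
    endpoints: set[PoolMember], events: list[tuple[str, PoolMember]]
) -> set[PoolMember]:
    """Fold hypothetical ADDED/DELETED endpoint events into the desired state."""
    updated = set(endpoints)
    for kind, member in events:
        if kind == "ADDED":
            updated.add(member)
        elif kind == "DELETED":
            updated.discard(member)
    return updated


if __name__ == "__main__":
    a = PoolMember("10.244.1.5", 8080)
    b = PoolMember("10.244.2.7", 8080)
    c = PoolMember("10.244.3.9", 8080)

    front_door_pool = {a, b}  # what the load balancer currently has configured
    desired = apply_events({a, b}, [("DELETED", b), ("ADDED", c)])

    to_add, to_remove = reconcile_pool(desired, front_door_pool)
    print(sorted(m.address for m in to_add))     # ['10.244.3.9']
    print(sorted(m.address for m in to_remove))  # ['10.244.2.7']
```

In this sketch, an unchanged cluster produces two empty sets, so nothing needs to be pushed; only actual pod churn results in configuration changes.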

BIG-IP for Kubernetes also addresses modern app delivery at scale, offering an integration between BIG-IP, F5 NGINX technologies, and community ingress controllers. This approach brings together the best of F5 in an elegant, unified method of working with multiple technologies. It gives teams access to the ideal tool for each use case and fosters better collaboration between NetOps and DevOps teams. The diagram below illustrates this solution.

Modern app support services in Kubernetes with F5 BIG-IP

Enhanced with AI

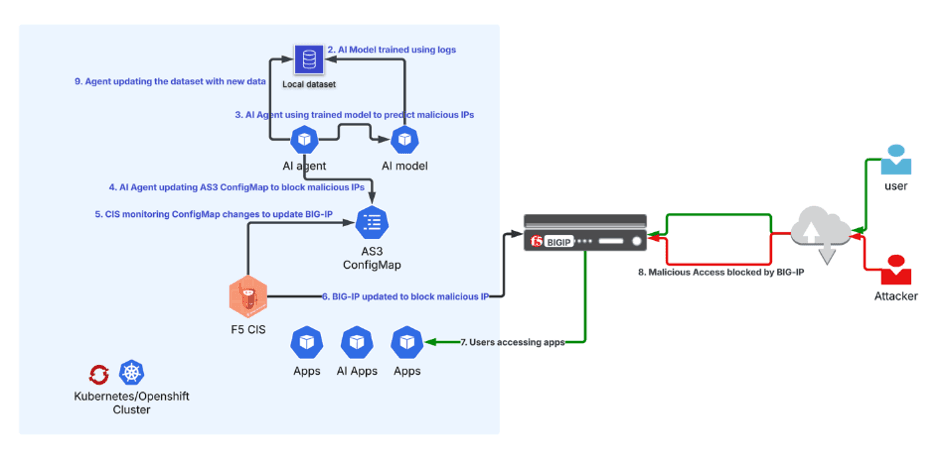

AI enables greater use of automation because it can use data to suggest an ideal course of action, optimize those recommendations, and continually improve. As a real-world example that’s deployable today, an organization can pull BIG-IP data into a model to train AI. Then, using CIS, AS3, and the F5 Automation Toolchain, the team can update BIG-IP so that it better blocks threats and anomalous behavior.
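The loop described above (collect BIG-IP telemetry, let a model flag anomalous behavior, then feed the result back into configuration) can be sketched in a few lines. In this hedged Python example, a simple robust outlier test stands in for a trained AI model: the modified z-score based on the median absolute deviation (MAD), with the commonly used 3.5 cutoff. The per-client request counts and the `flag_anomalous_clients` name are hypothetical, and turning the flagged list into an actual BIG-IP policy update is left out because its shape depends on your deployment.

```python
from __future__ import annotations

from statistics import median


def flag_anomalous_clients(
    requests_per_client: dict[str, float], threshold: float = 3.5
) -> list[str]:
    """Flag clients whose request rate is an unusually high robust outlier.

    Uses the modified z-score 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation. This is a simple stand-in for a trained model.
    """
    if not requests_per_client:
        return []

    values = list(requests_per_client.values())
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # No spread to measure against; flag nothing rather than divide by zero.
        return []

    flagged = [
        client
        for client, rate in requests_per_client.items()
        # One-sided test: only unusually high request rates are flagged.
        if 0.6745 * (rate - center) / mad > threshold
    ]
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical per-client request counts collected over one interval.
    telemetry = {
        "198.51.100.10": 100,
        "198.51.100.11": 110,
        "198.51.100.12": 95,
        "198.51.100.13": 105,
        "203.0.113.50": 5000,
    }
    print(flag_anomalous_clients(telemetry))  # ['203.0.113.50']
```

The median-based statistic is used here because a single extreme client would inflate an ordinary mean and standard deviation enough to hide itself; a production model would, of course, use far richer features than request counts.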

You can dive into this solution by exploring this video, or check out the POC reference architecture below. If you’d like to get started now, this POC is available via GitHub.

Automatically enhancing F5 BIG-IP with AI

Supporting AI workloads

Kubernetes environments are just one of several operating environments for apps and APIs, and AI workloads (like an AI factory) are a prime example of what increasingly runs in them. However, Kubernetes networking was not designed to integrate with multiple networks or to support protocols beyond HTTP/HTTPS. F5 BIG-IP Next for Kubernetes provides a central point for ingress/egress networking control and security for the Kubernetes cluster. This Kubernetes-native solution provides high-performance traffic optimization for large-scale AI infrastructures that include containerized apps.

BIG-IP Next for Kubernetes deployed on NVIDIA BlueField-3 DPUs enhances data ingestion efficiency and optimizes GPU utilization during model training. At the same time, it delivers a superior user experience during inferencing by boosting performance for retrieval-augmented generation (RAG). This solution is deployed within the cluster at the point of ingress/egress and is natively orchestrated by Kubernetes.

Whatever your use case, F5 and BIG-IP offer solutions to support these modern application and Kubernetes workloads.

Automation is the key to Kubernetes success

Due to the constantly shifting nature and requirements of applications in Kubernetes environments, it’s imperative that app delivery and security services benefit from intelligent and scalable automation. The F5 Application Delivery and Security Platform and Kubernetes-specific solutions enable IT, DevOps, and security teams to support application workloads in this operating environment, automate away the administrative hurdles, and ensure performance, resiliency, and security for their most important resource: apps and APIs.

To learn more about modern app delivery, visit our F5 BIG-IP Container Ingress Services webpage.
