Some folks seem to be conflating LLMs with AI agents. Let’s just put that to bed, shall we? While some are certainly “extending” chatbots to execute tools and calling them AI agents, that’s an immature approach if you want to harness agents for advanced automation. Which of course you do, because you recognize that it’s one of the most valuable ways to address the growing operational fatigue caused by hybrid, multicloud complexity.

An AI agent should be a bounded software system (an application) that interprets goals, maintains context, and performs actions by invoking tools. It may use a large language model (LLM) to reason about what needs to happen, but the LLM is just one piece of the machinery. The agent is the system.

In practical terms, an AI agent receives a task (explicit or inferred), evaluates it within a contextual boundary, and decides how to act. Such actions can include calling tools, querying systems, or triggering workflows.

Now, you don’t need a swarm of agents to get value out of one. A well-scoped, single agent tied to a tight toolchain can do useful work today. It can automate summaries, generate reports, classify tickets, or even shepherd alerts to the right queues. As long as it respects scope and policy, it’s already valuable.

You can use AI agents without doing agentic AI. But once agents collaborate, you are doing agentic AI, even if your architecture isn't ready for it.

Based on our most recent research, I assume you’re either already dealing with agentic behavior (9% of respondents) or headed there (79% of respondents). Agentic AI requires a framework built around controlled execution in which multiple agents (yes, I call them “minions,” because that makes them easier to distinguish from agentic AI as a whole) interact with shared tools, contextual goals, and enforcement layers like the Model Context Protocol (MCP).

What is an agent?

An agent is a software construct that operates autonomously or semi-autonomously within clearly defined constraints. It interprets tasks, manages context, invokes tools, and makes decisions on behalf of a user or a larger system. In MCP-aligned architectures, agents conform to a structured protocol that governs how tasks, state, and policy interact.

Agents can reason, delegate, and act—but only within the sandbox they’ve been given. They don't improvise system calls. They don't invent tool access. Every action should pass through a declared interface that can be secured, monitored, and revoked.
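To make "declared interface" concrete, here is a minimal sketch of a tool registry that can be monitored and revoked. Every name in it (`ToolRegistry`, `ToolNotPermitted`, `classify_ticket`) is hypothetical and chosen for illustration; it is not taken from MCP or any particular framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


class ToolNotPermitted(Exception):
    """Raised when a tool was never declared or has been revoked."""


@dataclass
class ToolRegistry:
    # Declared tools: name -> callable. Nothing outside this map can run.
    tools: Dict[str, Callable[..., object]] = field(default_factory=dict)
    # Record of every attempt, allowed or denied, so calls can be monitored.
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def declare(self, name: str, fn: Callable[..., object]) -> None:
        self.tools[name] = fn

    def revoke(self, name: str) -> None:
        # Revoking is just removing the declaration; later calls are denied.
        self.tools.pop(name, None)

    def invoke(self, name: str, **kwargs: object) -> object:
        if name not in self.tools:
            self.audit_log.append((name, "denied"))
            raise ToolNotPermitted(name)
        self.audit_log.append((name, "allowed"))
        return self.tools[name](**kwargs)


registry = ToolRegistry()
registry.declare(
    "classify_ticket",
    lambda text: "network" if "dns" in text.lower() else "general",
)
print(registry.invoke("classify_ticket", text="DNS lookup failing"))

registry.revoke("classify_ticket")
try:
    registry.invoke("classify_ticket", text="DNS lookup failing")
except ToolNotPermitted as exc:
    print(f"denied: {exc}")
print(registry.audit_log)
```

The point of the design is that the agent never holds a reference to the tool itself. It can only ask the registry, which is where you secure, log, and pull access.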

At minimum, an agent has:

- Context handler – Keeps track of state, goals, memory (short-term or persistent)

- Reasoning engine – Usually an LLM or symbolic planner that interprets tasks and plans execution

- Tool interface – Maps intent to actions; controls what operations the agent can invoke

- Security boundary – Enforces what the agent is allowed to do and under what scope

- Transport layer – Moves messages and context, typically using MCP, HTTP, or an event bus

An LLM thinks. An agent acts. The platform governs.
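The five parts listed above can be sketched as a very small skeleton. This is an illustration under loud assumptions: the reasoning engine is a hard-coded stand-in rather than a real LLM call, the transport is a plain dict rather than MCP or HTTP, and all class and function names are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set


@dataclass
class Context:
    """Context handler: the goal plus a short-term memory of results."""
    goal: str
    memory: List[str] = field(default_factory=list)


@dataclass
class Step:
    """One planned action: a tool name and its arguments."""
    tool: str
    args: Dict[str, str]


def plan(ctx: Context) -> Optional[Step]:
    """Reasoning engine stand-in: plan one summarize step, then stop."""
    if ctx.memory:
        return None
    return Step(tool="summarize", args={"text": ctx.goal})


class Agent:
    def __init__(
        self,
        tools: Dict[str, Callable[..., str]],
        allowed: Set[str],
        planner: Callable[[Context], Optional[Step]],
    ) -> None:
        self.tools = tools        # tool interface
        self.allowed = allowed    # security boundary
        self.planner = planner    # reasoning engine

    def handle(self, message: Dict[str, str]) -> Context:
        # Transport layer: in this sketch a message is just a dict.
        ctx = Context(goal=message["task"])
        while (step := self.planner(ctx)) is not None:
            # The boundary is checked on every step, not once at startup.
            if step.tool not in self.allowed or step.tool not in self.tools:
                raise PermissionError(f"tool not permitted: {step.tool}")
            ctx.memory.append(self.tools[step.tool](**step.args))
        return ctx


agent = Agent(
    tools={"summarize": lambda text: text[:20]},
    allowed={"summarize"},
    planner=plan,
)
result = agent.handle({"task": "Summarize the overnight alert backlog"})
print(result.memory)
```

Swapping `plan` for a real model call changes the thinking, not the acting: the loop, the boundary check, and the tool map stay where they are.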

Agent construction models

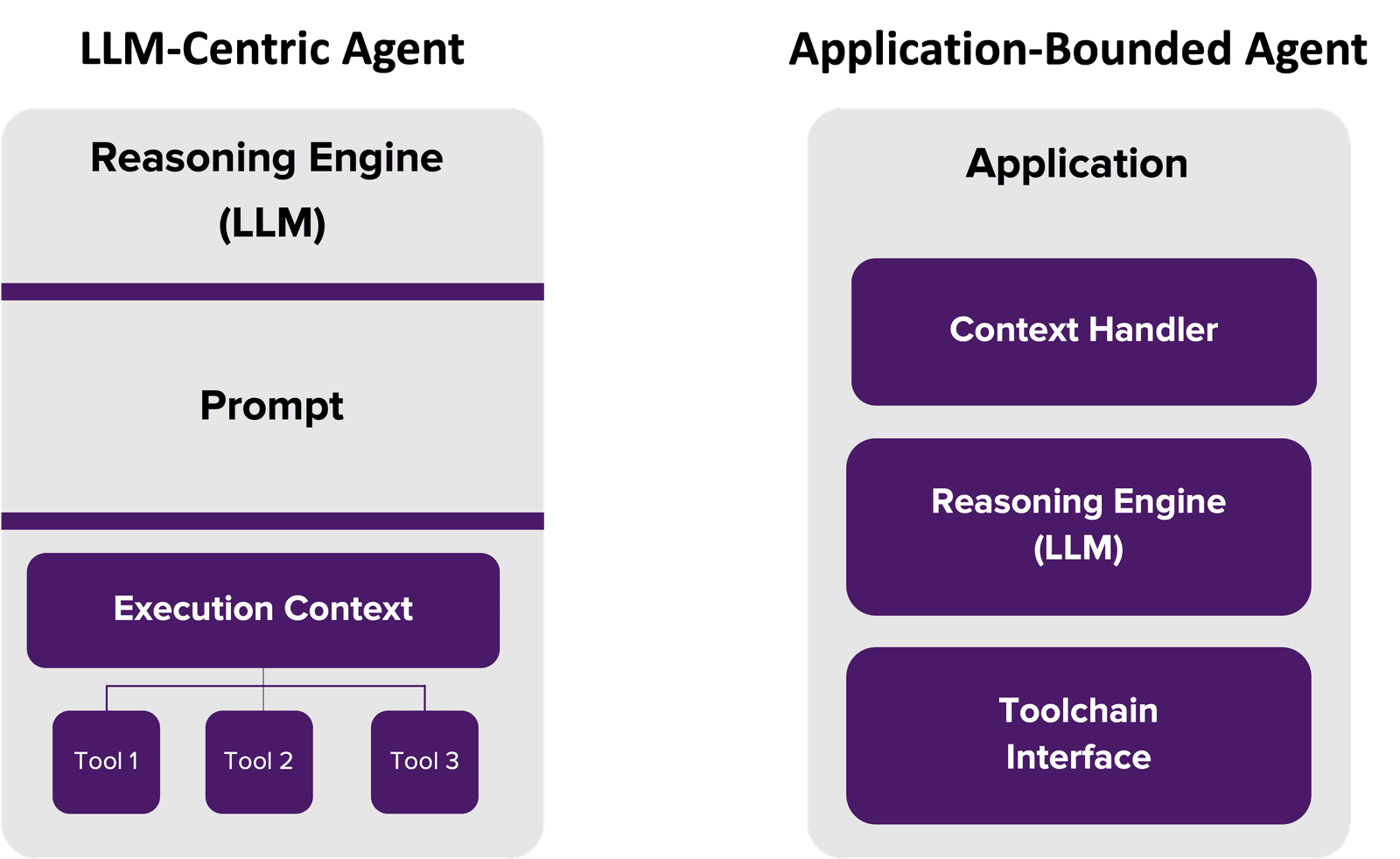

There are two prevailing models, and one of them is a trap.

1. LLM-centric agents (monolithic)

This is the default for most frameworks today: LangChain, AutoGen, CrewAI, etc. The agent is essentially a chat prompt with injected tools and optional memory—wrapped around a single LLM session.

- Quick to prototype

- Easy to demo

- Unsafe to scale

Limitations:

- No persistent architecture

- No native policy enforcement

- Easy for the model to hallucinate tool calls or forget constraints

- Zero observability outside token logs unless extended

In short: it’s a smart intern with root access and no supervision. Works great, until it doesn't.

2. Application-bound agents (modular)

This is the model you want in production.

Here, the agent is a full software service built on a framework that uses an LLM but doesn’t depend on it to handle execution.

- State is external (Redis, vector DBs, policy engines)

- Tool use is explicit and managed by a control layer (MCP server, gateway proxy)

- Security and observability are first-class, not an afterthought

- The agent is a deployed service, not a prompt

This approach gives you version control, access logs, tool-level governance, and runtime isolation. It’s how you turn agents from toys into infrastructure.
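Here is a rough sketch of what "uses an LLM but doesn't depend on it to handle execution" might look like. The model only proposes a tool call as text; the service validates the proposal against declared tools and writes the outcome to an external store. Assumptions: `fake_llm` stands in for a real model call, a plain dict stands in for something like Redis, and `route_alert` is a made-up tool.

```python
import json
from typing import Callable, Dict, MutableMapping

# Declared tools and the exact argument names each accepts (hypothetical).
TOOLS: Dict[str, dict] = {
    "route_alert": {
        "params": {"alert_id", "queue"},
        "fn": lambda alert_id, queue: f"{alert_id} routed to {queue}",
    },
}


def fake_llm(task: str) -> str:
    """Stand-in for a model call: returns a tool proposal as JSON text."""
    return json.dumps(
        {"tool": "route_alert", "args": {"alert_id": "A-17", "queue": "netops"}}
    )


def run_task(
    task_id: str,
    task: str,
    store: MutableMapping[str, dict],
    llm: Callable[[str], str] = fake_llm,
) -> dict:
    """Get a proposal, validate it, execute it, and persist the outcome."""

    def finish(record: dict) -> dict:
        store[task_id] = record  # state lives outside the model session
        return record

    try:
        proposal = json.loads(llm(task))
    except json.JSONDecodeError:
        return finish({"status": "rejected", "reason": "unparseable proposal"})
    if not isinstance(proposal, dict):
        return finish({"status": "rejected", "reason": "unparseable proposal"})

    tool = proposal.get("tool")
    spec = TOOLS.get(tool) if isinstance(tool, str) else None
    if spec is None:
        # A hallucinated tool name stops here; it never reaches execution.
        return finish({"status": "rejected", "reason": "undeclared tool"})

    args = proposal.get("args")
    if not isinstance(args, dict) or set(args) != spec["params"]:
        return finish({"status": "rejected", "reason": "bad arguments"})

    return finish({"status": "done", "result": spec["fn"](**args)})


store: Dict[str, dict] = {}
print(run_task("t1", "Route alert A-17", store))
print(run_task("t2", "Clean up", store,
               llm=lambda t: '{"tool": "drop_database", "args": {}}'))
```

Because every outcome, including rejections, lands in the store, you get an access log for free, and the service can restart without losing what the model "remembered."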

Agents are not personas

When people design agents as if they’re intelligent personas, they instinctively reach for human-centric access models: role-based access control (RBAC), login tokens, user attributes, identity scopes. These make sense when you're dealing with a consistent human identity across a session. But agents don’t behave like that. They’re not users. They're executors. And that changes everything.

Agents shift roles as they operate. A single agent might act as a data retriever, then a planner, then a trigger for automation, often within the same session and under the same task umbrella. It doesn’t log in, grab a static token, and stay in one lane.

This is where traditional access control fails. RBAC assumes static roles. Attribute-based access control (ABAC) assumes fixed attributes. Session tokens assume consistent scope. None of that holds when agents are dynamic. Identity in agentic systems is functional, not personal.

That’s why governing agents requires shifting away from identity-based policy to execution-based policy. Every tool call must be validated in real time against the agent’s current task role, context state, and permitted scope. Policies live at the tool layer, not the authentication layer. Context blocks, not login sessions, carry the necessary metadata to enforce those policies. We call this paradigm shift “Policy in Payload” because the policy literally is in the payload.
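A minimal sketch of that per-call check might look like the following. The shape of the context block, the field names, and the tool and resource names are all assumptions made for illustration; this is not an MCP message format or a product API.

```python
from dataclasses import dataclass, replace
from typing import Dict, FrozenSet, Set


@dataclass(frozen=True)
class ContextBlock:
    """Metadata that travels with each tool call (hypothetical shape)."""
    task_id: str
    task_role: str           # current functional role, e.g. "retriever"
    scope: FrozenSet[str]    # resources this task is permitted to touch


# Policy lives at the tool layer: which task roles may call each tool.
TOOL_POLICY: Dict[str, Set[str]] = {
    "query_metrics": {"retriever", "planner"},
    "restart_service": {"automation"},
}


def authorize(tool: str, resource: str, ctx: ContextBlock) -> bool:
    """Validate one call against the role and scope carried in its payload."""
    allowed_roles = TOOL_POLICY.get(tool, set())  # unknown tools allow no one
    return ctx.task_role in allowed_roles and resource in ctx.scope


# The same task shifts roles mid-session; each call is judged on its own payload.
ctx = ContextBlock("T-1", "retriever", frozenset({"payments-api"}))
print(authorize("query_metrics", "payments-api", ctx))
print(authorize("restart_service", "payments-api", ctx))

ctx = replace(ctx, task_role="automation")
print(authorize("restart_service", "payments-api", ctx))
print(authorize("restart_service", "billing-db", ctx))
```

Notice there is no user, no login, and no session token anywhere in the check. The decision depends only on what the call is, what role the task is in right now, and what it is scoped to touch.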

Treat agents like agents. Govern them like software. And never forget: the LLM thinks, the agent acts, and the platform governs. Confuse those roles, and you're building a personality with admin rights and no memory of its last mistake.

The LLM thinks. The agent acts. The platform governs.

Stick to that, and you’ll build agent infrastructure that scales, secures, and survives contact with reality.
