Editor – This post is part of a 10-part series:

- Reduce Complexity with Production-Grade Kubernetes

- How to Improve Resilience in Kubernetes with Advanced Traffic Management

- How to Improve Visibility in Kubernetes

- Six Ways to Secure Kubernetes Using Traffic Management Tools

- A Guide to Choosing an Ingress Controller, Part 1: Identify Your Requirements

- A Guide to Choosing an Ingress Controller, Part 2: Risks and Future-Proofing (this post)

- A Guide to Choosing an Ingress Controller, Part 3: Open Source vs. Default vs. Commercial

- A Guide to Choosing an Ingress Controller, Part 4: NGINX Ingress Controller Options

- How to Choose a Service Mesh

- Performance Testing NGINX Ingress Controllers in a Dynamic Kubernetes Cloud Environment

You can also download the complete set of blogs as a free eBook – Taking Kubernetes from Test to Production.

In Part 1 of our guide to choosing an Ingress controller, we explained how to identify your requirements. But it’s not yet time to test products! In this post, we explain how the wrong Ingress controller can slow your release velocity and even cost you customers. As with any tool, Ingress controllers can introduce risks and limit future scalability. Let’s look at how to eliminate choices that might cause more harm than good.

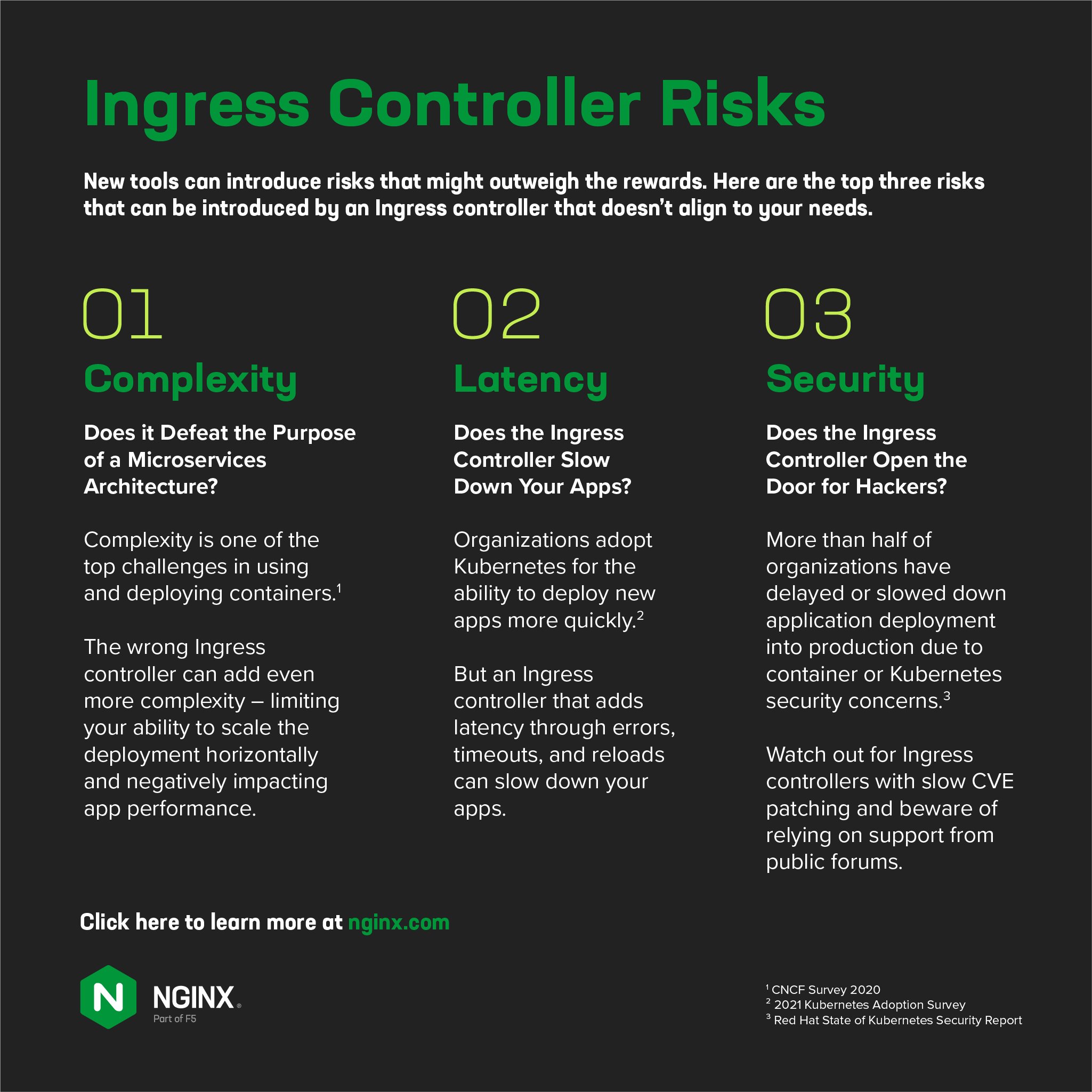

Ingress Controller Risks

There are three specific risks you should consider when introducing a traffic management tool for Kubernetes: complexity, latency, and security. These issues are often intertwined; when one is present, it’s likely you’ll see the others. Each can be introduced by an Ingress controller and it’s up to your organization to decide if the risk is tolerable. Today’s consumers are fickle, and anything that causes a poor digital experience may be unacceptable despite a compelling feature set.

Complexity – Does it Defeat the Purpose of a Microservices Architecture?

The best Kubernetes tools are those that meet the goals of microservices architecture: lightweight and simple in design. It’s possible to develop a very feature‑rich Ingress controller that sticks to these principles, but that’s not always what happens. Some designers include too many functions or use convoluted scripting to tack on capabilities that aren’t native to the underlying engine, resulting in an Ingress controller that’s needlessly complex.

And why does that matter? In Kubernetes, an overly complex tool can negatively impact app performance and limit your ability to scale your deployment horizontally. You can typically spot an overly complex Ingress controller by its size: the larger the footprint, the more complex the tool.

Latency – Does the Ingress Controller Slow Down Your Apps?

Ingress controllers can add latency due to resource usage, errors, and timeouts. Look at latency added in both static and dynamic deployments and eliminate options that introduce unacceptable latency based on your internal requirements. For more details on how reconfigurations can impact app speed, see Performance Testing NGINX Ingress Controllers in a Dynamic Kubernetes Cloud Environment on our blog.
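One way to make “unacceptable latency” concrete is to compare a high percentile of measured response times against an internal budget, once for a static deployment and once while endpoints are changing. The sketch below assumes you have already collected latency samples in milliseconds. The function names, the 99th‑percentile choice, the 100 ms budget, and the sample values are all hypothetical placeholders, not measurements of any product.

```python
import math

def percentile(samples, pct):
    """Return the pct-th percentile (nearest-rank method) of a list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based nearest rank
    return ordered[max(rank, 1) - 1]

def within_budget(static_ms, dynamic_ms, budget_ms, pct=99):
    """True only if both the static and the dynamic run stay within the budget."""
    return (percentile(static_ms, pct) <= budget_ms
            and percentile(dynamic_ms, pct) <= budget_ms)

# Hypothetical samples in milliseconds, for illustration only.
static_run = [12, 14, 13, 15, 12, 16, 14, 13, 15, 14]
dynamic_run = [13, 15, 14, 90, 13, 17, 250, 14, 16, 15]

# The dynamic run contains a 250 ms outlier, so this candidate fails a 100 ms budget.
print(within_budget(static_run, dynamic_run, budget_ms=100))
```

Checking a tail percentile rather than the average matters here because reconfiguration stalls tend to show up as a few very slow requests rather than a uniform slowdown.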

Security – Does the Ingress Controller Open the Door for Hackers?

Common Vulnerabilities and Exposures (CVEs) are rampant on today’s Internet, and the time it takes for your Ingress controller provider to furnish a CVE patch can be the difference between safety and a breach. Based on your organization’s risk tolerance, you may want to eliminate solutions that take more than a few days (or at most weeks) to provide patches.

Beyond CVEs, some Ingress controllers expose you to another potential vulnerability. Consider this scenario: you work for an online retailer and need help troubleshooting the configuration of your open source Ingress controller. Commercial support isn’t available, so you post the issue to a forum like Stack Overflow. Someone offers to help and wants to look for problems in the config and log files for the Ingress controller and other Kubernetes components. Feeling the pressure to get the problem resolved quickly, you share the files.

The “good Samaritan” helps you solve your problem, but six months later you discover a breach – credit card numbers have been stolen from your customer records. Oops. Turns out the files you shared included information that was used to infiltrate your app. This scenario illustrates one of the top reasons organizations choose to pay for support: it guarantees confidentiality.

A Note on OpenResty-Based Ingress Controllers

OpenResty is a web platform built on NGINX Open Source that incorporates LuaJIT, Lua scripts, and third‑party NGINX modules to extend the functionality in NGINX Open Source. In turn, there are several Ingress controllers built on OpenResty, which we believe could potentially add two risks compared to our Ingress Controllers based on NGINX Open Source and NGINX Plus:

- Timeouts – As noted, OpenResty uses Lua scripting to implement advanced features like those in our commercial NGINX Plus-based Ingress Controller. One such feature is dynamic reconfiguration, which eliminates an NGINX Open Source requirement that reduces availability – namely, that the NGINX configuration must be reloaded when service endpoints change. To accomplish dynamic reconfiguration with OpenResty, the Lua handler chooses which upstream service to route the request to, thereby eliminating the need to reload the NGINX configuration.

  However, Lua must continuously check for changes to the backends, which consumes resources. Incoming requests take longer to process, causing some of the requests to get stalled, which increases the likelihood of timeouts. As you scale to more users and services, the gap between the number of incoming requests per second and the number that Lua can handle widens exponentially. The consequence is latency, complexity, and higher costs.

  Read Performance Testing NGINX Ingress Controllers in a Dynamic Kubernetes Cloud Environment to see how much latency Lua can add.

- CVE patching delays – Compared to the Ingress Controllers from NGINX, patches for CVEs inevitably take longer to show up in Ingress controllers based on tools like OpenResty that are in turn based on NGINX Open Source. As we outline in detail in Mitigating Security Vulnerabilities Quickly and Easily with NGINX Plus, when a CVE in NGINX is discovered, we as the vendor are generally informed before the CVE is publicly disclosed. That enables us to release a patch for NGINX Open Source and NGINX Plus as soon as the CVE is announced.

  Technologies based on NGINX Open Source might not learn about the CVE until that point, and in our experience OpenResty patches lag behind ours by a significant amount – four months in one recent case. Patches for an Ingress controller based on OpenResty inevitably take yet more time, giving a bad actor ample opportunity to exploit the vulnerability.
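The timeout risk in the first bullet comes down to simple queueing: once requests arrive faster than they can be processed, the backlog keeps growing until waiting requests exceed their timeout. The sketch below is a generic, simplified model of that effect, not a model of OpenResty or a benchmark of any Ingress controller. The function names, the rates, and the 5‑second timeout are hypothetical.

```python
def simulate_backlog(arrivals_per_sec, capacity_per_sec, seconds):
    """Return the number of queued requests at the end of each second."""
    backlog = 0
    history = []
    for _ in range(seconds):
        backlog += arrivals_per_sec                # new requests arrive
        backlog -= min(backlog, capacity_per_sec)  # at most `capacity` are served
        history.append(backlog)
    return history

def seconds_until_timeout(arrivals_per_sec, capacity_per_sec, timeout_sec, horizon=3600):
    """First second at which the last queued request would wait longer than
    timeout_sec, or None if that never happens within the horizon."""
    history = simulate_backlog(arrivals_per_sec, capacity_per_sec, horizon)
    for second, backlog in enumerate(history, start=1):
        estimated_wait = backlog / capacity_per_sec  # time to drain the queue ahead
        if estimated_wait > timeout_sec:
            return second
    return None

# Hypothetical rates: capacity keeps up in the first case and falls short in the second.
print(seconds_until_timeout(1000, 1000, timeout_sec=5))
print(seconds_until_timeout(1200, 1000, timeout_sec=5))
```

In this model a shortfall of 200 requests per second looks small, yet the queue never drains, so timeouts become a matter of when rather than if.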

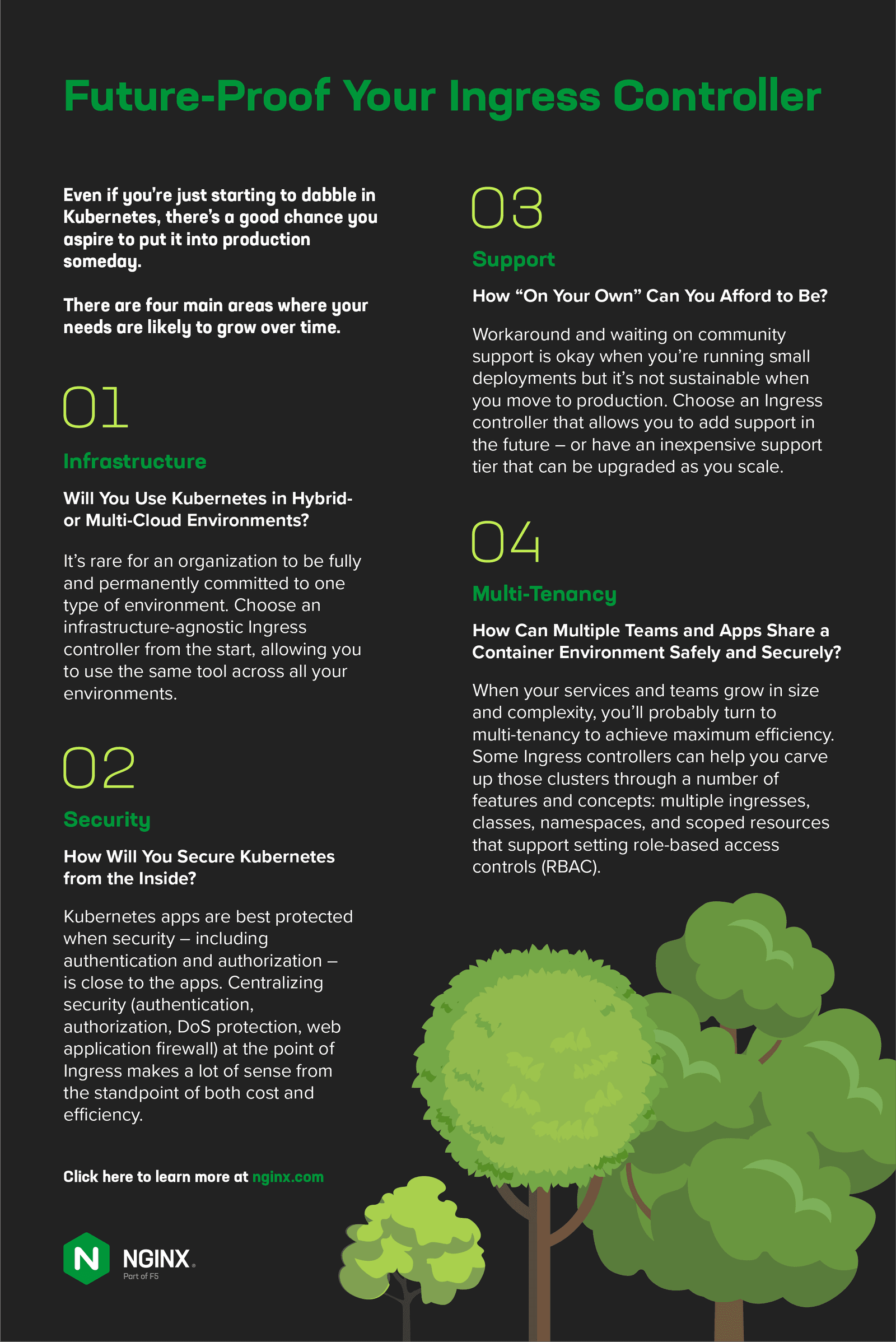

Future-Proof Your Ingress Controller

Even if you’re just starting to dabble in Kubernetes, there’s a good chance you aspire to put it into production someday. There are four main areas where your needs are likely to grow over time: infrastructure, security, support, and multi‑tenancy.

Infrastructure – Will You Use Kubernetes in Hybrid- or Multi‑Cloud Environments?

It’s rare for an organization to be fully and permanently committed to one type of environment. More commonly, organizations have a mix of on premises and cloud, which can include private, public, hybrid‑cloud, and multi‑cloud. (For a deeper dive into how these environments differ, read What Is the Difference Between Multi‑Cloud and Hybrid‑Cloud?.)

As we mentioned in Part 1 of this series, it’s tempting to choose the tools that come as the default with each environment, but there are a host of problems specific to default Ingress controllers. We’ll cover all the cons in Part 3 of the series, but the issue that’s most relevant to future‑proofing is vendor lock‑in – you can’t use a cloud‑specific Ingress controller across all your environments. Use of default cloud‑specific tooling impacts your ability to scale because you’re left with just two unappealing alternatives:

- Try to make the existing cloud work for all your needs

- Rewrite all your configurations – not just load balancing but security as well! – for the Ingress controller in each new environment

While the first alternative often isn’t viable for business reasons, the second is also tricky because it causes tool sprawl, opens new security vulnerabilities, and requires your employees to climb steep learning curves. The third, and most efficient, alternative is to choose an infrastructure‑agnostic Ingress controller from the start, allowing you to use the same tool across all your environments.

When it comes to infrastructure, there’s another element to consider: certifications. Let’s use the example of Red Hat OpenShift Container Platform (OCP). If you’re an OCP user, then you’re probably already aware that the Red Hat Marketplace offers certified operators for OCP, including the NGINX Ingress Operator. Red Hat’s certification standards mean you get peace of mind knowing that the tool works with your deployment and can even include joint support from Red Hat and the vendor. Lots of organizations have requirements to use certified tools for security and stability reasons, so even if you’re only in testing right now, it pays to keep your company’s requirements for production environments in mind.

Security – How Will You Secure Kubernetes from the Inside?

Gone are the days when perimeter security alone was enough to keep apps and customers safe. Kubernetes apps are best protected when security – including authentication and authorization – is close to the apps. And with organizations increasingly mandating end-to-end encryption and adopting a zero‑trust network model within Kubernetes, a service mesh might be in your future.

What does all this have to do with your Ingress controller? A lot! Centralizing security (authentication, authorization, DoS protection, web application firewall) at the point of Ingress makes a lot of sense from the standpoint of both cost and efficiency. And while most service meshes can be integrated with most Ingress controllers, how they integrate matters a lot. An Ingress controller that aligns with your security strategy can prevent big headaches throughout your app development journey.

Read Secure Cloud‑Native Apps Without Losing Speed for more details on the risks of cloud‑native app delivery and Deploying Application Services in Kubernetes, Part 2 to learn more about the factors that determine the best location for security tools.

Support – How “On Your Own” Can You Afford to Be?

When teams are just experimenting with Kubernetes, support – whether from the community or a company – often isn’t the highest priority. This is okay if your teams have a lot of time to come up with their own solutions and workarounds, but it’s not sustainable when you move to production. Even if you don’t need support today, it can be wise to choose an Ingress controller that allows you to add support in the future – or has an inexpensive support tier that can be upgraded as you scale.

Multi-Tenancy – How Can Multiple Teams and Apps Share a Container Environment Safely and Securely?

In the beginning, there was one team and one app…isn’t that how every story starts? The story often continues with that one team successfully developing its one Kubernetes app, leading the organization to run more services on Kubernetes. And of course, more services = more teams = more complexity.

To achieve maximum efficiency, organizations adopt multi‑tenancy and embrace a Kubernetes model that supports the access and isolation mandated by their business processes while also providing the sanity and controls their operators need. Some Ingress controllers can help you carve up those clusters through a number of features and concepts: multiple ingresses, classes, namespaces, and scoped resources that support setting role‑based access controls (RBAC).
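As a rough illustration of how namespaces, Ingress classes, and RBAC fit together, the sketch below builds three standard Kubernetes objects for one tenant: a namespaced Role that allows managing Ingress resources, a RoleBinding that grants it to a group, and an Ingress pinned to a specific Ingress class. The API versions and field names come from the standard Kubernetes APIs. The tenant, group, class, host, and service names are hypothetical, and the helper functions are illustrative rather than part of any Ingress controller.

```python
import json

def ingress_editor_role(namespace, role_name="ingress-editor"):
    """Namespaced Role that allows managing Ingress resources in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [{
            "apiGroups": ["networking.k8s.io"],
            "resources": ["ingresses"],
            "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"],
        }],
    }

def role_binding(namespace, group, role_name="ingress-editor"):
    """Bind the Role to a group, scoped to the tenant's namespace only."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{group}", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group,
                      "apiGroup": "rbac.authorization.k8s.io"}],
        "roleRef": {"kind": "Role", "name": role_name,
                    "apiGroup": "rbac.authorization.k8s.io"},
    }

def tenant_ingress(namespace, ingress_class, host, service, port):
    """Ingress in the tenant's namespace, handled by one specific Ingress class."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress", "namespace": namespace},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }

# Hypothetical tenant: all names below are placeholders.
manifests = [
    ingress_editor_role("team-a"),
    role_binding("team-a", "team-a-devs"),
    tenant_ingress("team-a", "team-a-class", "shop.example.com", "storefront", 80),
]
print(json.dumps(manifests, indent=2))
```

Each object can be written to its own JSON file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML. Because the Role and RoleBinding are namespaced, members of the hypothetical `team-a-devs` group can edit Ingress resources only in `team-a`.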

Next Step: Narrow Down Options

Now that you’ve thought about your requirements, risk tolerance, and future‑proofing, you have enough information to start narrowing down the very wide field of Ingress controllers. Breaking that field down by category can help you make quick work of this step. In Part 3 of our series, we’ll explore three different categories of Ingress controllers, including the pros and cons of each.
