We recently tested the scalability of NGINX for load balancing WebSocket connections. Even with 50,000 active WebSocket connections, NGINX required less than 1 GB of memory and less than one CPU core. When it handled very busy connections, its memory usage was stable and grew more slowly than message size. Performance in your environment will depend on the nature of your application, but these results give some indication of the resources you can expect NGINX to use.
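The headline figures put an upper bound on the average memory NGINX spent per connection. The sketch below does that back-of-the-envelope arithmetic. It assumes "1 GB" means 2^30 bytes (the post does not say which definition was used), and the function name is illustrative only.

```python
# Back-of-the-envelope upper bound on average NGINX memory per WebSocket
# connection, derived from the headline result: under 1 GB at 50,000 connections.
# Assumption: 1 GB is taken as 2**30 bytes.

GIB = 2**30


def max_bytes_per_connection(total_memory_bytes: int, connections: int) -> float:
    """Average memory per connection if total usage reached the given bound."""
    if connections <= 0:
        raise ValueError("connections must be positive")
    return total_memory_bytes / connections


if __name__ == "__main__":
    per_conn = max_bytes_per_connection(1 * GIB, 50_000)
    print(f"at most {per_conn / 1024:.1f} KiB per connection")
```

With these inputs the bound works out to roughly 21 KiB per connection. Because total usage stayed under 1 GB, the measured average is below this figure.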

Test Environment

The following machines were used for this testing:

- Load generator – thor on a 6-core Xeon E5645 @ 2.40 GHz, 48 GB RAM

- NGINX – Version 1.7.0, x86_64 with 6 workers on a 6-core Xeon E5645 @ 2.40 GHz, 48 GB RAM

- WebSocket backend – Node.js echo server on a 4-core Xeon E5-2660 0 @ 2.20 GHz, 8 GB RAM

Tests

We ran two sets of tests.

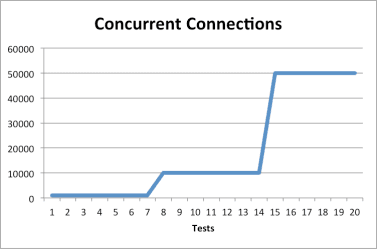

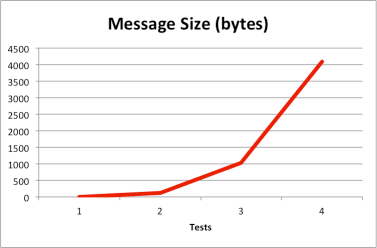

The first set of tests simulated long-lived, mostly idle connections. The number of connections varied from 1,000 to 50,000, the message size from 10 to 4096 bytes, and the interval between messages on each connection from 0.1 to 10 seconds (which we considered a low message rate).
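Each connection sends messages infrequently, but the aggregate rate NGINX has to proxy scales with the number of connections. A minimal sketch of that arithmetic follows. It treats the 0.1 to 10 second figure as the per-connection interval between messages. The post does not say which combinations of parameters were actually run, so the loop only shows the corners of the stated ranges, and the function name is hypothetical.

```python
# Aggregate message rate across all connections, given the per-connection
# interval between messages (in seconds).


def aggregate_messages_per_second(connections: int, interval_s: float) -> float:
    if connections < 0:
        raise ValueError("connections must be non-negative")
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    return connections / interval_s


if __name__ == "__main__":
    # Corners of the first test set's ranges; illustrative bounds only,
    # not necessarily combinations that were tested.
    for conns in (1_000, 50_000):
        for interval in (0.1, 10.0):
            rate = aggregate_messages_per_second(conns, interval)
            print(f"{conns:>6} connections, {interval:>4} s interval: {rate:,.0f} msg/s")
```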

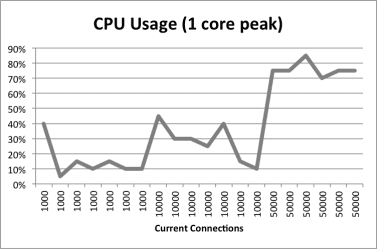

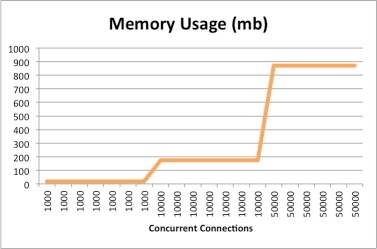

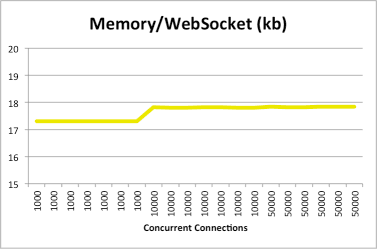

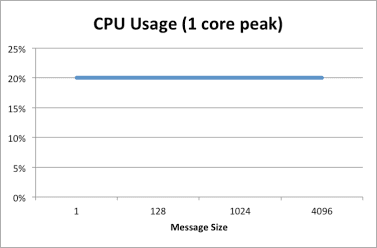

The results show that the total memory needed for WebSocket connections depends on the number of connections, and that the memory used per connection stays consistent. Memory utilization is not affected by message size or frequency. CPU utilization generally increases with the number of connections, and even at 50,000 connections NGINX did not use a full CPU core. The CPU utilization shown here is normalized to a single core by summing the utilization across all cores. The graphs above show the results for this set of tests.
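The normalization described above, summing per-core utilization into a single-core equivalent, can be sketched as follows. The per-core percentages are hypothetical inputs. The post does not say how the samples were collected, and the function names are illustrative.

```python
from typing import Sequence


def single_core_equivalent(per_core_percent: Sequence[float]) -> float:
    """Sum per-core utilization (0-100 each) into one figure where 100 = one full core."""
    for value in per_core_percent:
        if not 0.0 <= value <= 100.0:
            raise ValueError(f"per-core utilization out of range: {value}")
    return sum(per_core_percent)


def uses_less_than_one_core(per_core_percent: Sequence[float]) -> bool:
    """True if the summed utilization is below one full core."""
    return single_core_equivalent(per_core_percent) < 100.0
```

For example, six cores each at a hypothetical 12% sum to 72% of a single core, which stays under the one-core mark. Summing rather than averaging is what makes "less than one core" meaningful on a multi-core machine: an average would hide how much total CPU time NGINX's workers consumed.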

The second set of tests simulated short-lived but highly active connections. The number of concurrent connections was held constant at 500, with 50 messages per connection and no delay between messages. Message size ranged from 1 to 4096 bytes.
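To give a sense of the load, the sketch below computes the payload volume for one batch of 500 concurrent connections sending 50 messages each. It assumes each message crosses the proxy twice (once to the echo backend and once back) and ignores WebSocket framing overhead. The total number of connections per run is not stated, so the figure is per batch only, and the names are hypothetical.

```python
CONCURRENT_CONNECTIONS = 500
MESSAGES_PER_CONNECTION = 50


def payload_bytes_per_batch(message_size: int, echoed: bool = True) -> int:
    """Payload bytes proxied for one batch of 500 connections x 50 messages.

    With an echo backend each message crosses the proxy twice (client to
    backend and back), so echoed=True doubles the count. Framing overhead
    is ignored.
    """
    if message_size <= 0:
        raise ValueError("message size must be positive")
    messages = CONCURRENT_CONNECTIONS * MESSAGES_PER_CONNECTION
    directions = 2 if echoed else 1
    return messages * message_size * directions
```

One batch is 25,000 messages, so at 4096 bytes the client-to-backend direction alone carries 102,400,000 bytes of payload.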

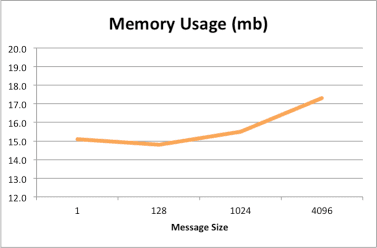

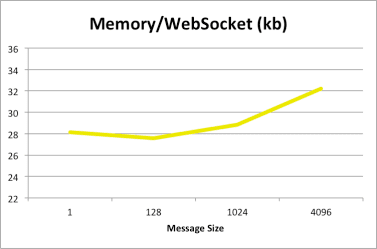

The results show that with a fixed number of concurrent connections, both total memory utilization and memory per connection depend on the message size. In both cases, however, memory grows sub-linearly and varies little, increasing by less than 15% overall.
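One way to make "sub-linear" concrete is to compare the relative growth in memory with the relative growth in message size across the tested range. This is a minimal sketch. The memory values passed in are placeholders to be read off the graphs, not measurements reported in this post.

```python
def relative_increase(first: float, last: float) -> float:
    """Fractional increase from first to last (0.15 means +15%)."""
    if first <= 0:
        raise ValueError("baseline must be positive")
    return (last - first) / first


def is_sublinear(size_first: float, size_last: float,
                 mem_first: float, mem_last: float) -> bool:
    """True if memory grew proportionally less than message size did."""
    return relative_increase(mem_first, mem_last) < relative_increase(size_first, size_last)
```

Going from 1 to 4096 bytes is a relative increase of 4095 (409,500%) in message size, so any memory increase under 15% is far below linear growth.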

Summary

During these tests, NGINX delivered predictable and scalable performance when proxying WebSocket servers. These were synthetic tests and not necessarily representative of a real-world application, so results on other systems may differ. For more information, please see:

- NGINX as a WebSocket Proxy

- WebSocket proxying (nginx.org)

- NGINX and NGINX Plus feature comparison

- NGINX Plus Technical Specifications
