Featured Blog Posts

F5 BIG-IP Next for Kubernetes joins the NVIDIA Enterprise AI Factory validated design

F5 BIG-IP Next for Kubernetes is being included in the NVIDIA Enterprise AI Factory validated design—enabling customers to seamlessly extend F5 BIG-IP as they adopt NVIDIA platforms.

F5 again recognized with six TrustRadius Buyer’s Choice Awards in 2026

F5 was once again honored with six TrustRadius Buyer’s Choice Awards—a testament to our continued commitment to delivering trusted, high-value solutions.

30 years of F5 through the eyes of F5ers

As F5 celebrates its 30th anniversary, we asked employees, including several who’ve been here for decades, what it means to thrive at F5. Read on to see their answers.

All Blog Posts (1846)

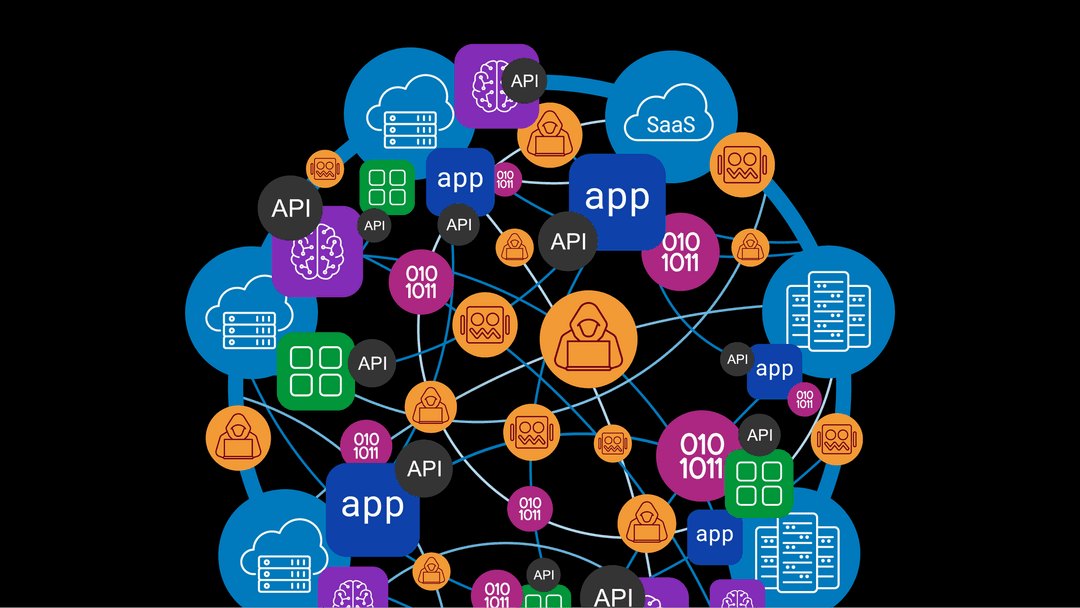

API management is even more critical in the agentic AI era

F5 explains how the mobile industry can make CAMARA-based APIs work for the growing army of enterprise AI agents.

By Ahmed Guetari

Banking on resilience in the AI era: Why 2026 is different

In 2026, resilience is a top banking priority. Discover why outages, digital sovereignty, and AI in production make always‑on infrastructure a key differentiator.

By Chad Davis

Operational sovereignty: Why digital portability drives resilience

Learn how operational sovereignty boosts resilience and why having the right tools is critical to staying ahead in an unpredictable world.

By Bart Salaets

F5 AI runtime security is a game changer for the public sector

Enhance public sector AI initiatives with advanced model security, policy enforcement, and observability to ensure compliance, mitigate risks, and protect data.

By Matt Kaneko

Securing your AI journey with F5 and AWS

See how F5 and AWS can help secure AI models, agents, and APIs for safe and accelerated AI development and deployment.

By Dave Morrissey

AI observability: Auditing and tracing AI decisions

Discover how AI observability ensures accountability for AI decisions through runtime visibility, auditing, and traceability across AI workflows and systems.

By Jessica Brennan

From packets to prompts: Inference adds a new layer to the stack

Inference is not training. It is not experimentation. It is not a data science exercise. Inference is production runtime behavior, and it behaves like an application tier.

By Lori MacVittie

Powering advanced AI data delivery with F5 and Scality

This expanded partnership brings together Scality’s enterprise-grade, S3-compatible object storage with F5’s application delivery and security capabilities.

By Yuichi Miyazaki

MazeBolt + F5: Partners for automated DDoS protection

Integrating MazeBolt DDoS testing technologies into the F5 Application Delivery and Security Platform (ADSP) delivers fully automated, AI-powered DDoS protections.

By Rona Rom Amram

Secure your AI data pipeline without slowing pipelines down

Securing the AI data pipeline is not optional. That much is clear. What is far less obvious, and far more challenging, is how to secure it well.

By Mark Menger

Why BOLA’s "authorization gap" requires a runtime strategy

Broken object-level authorization (BOLA) is not a bug but a failure of access controls. Learn how a three-tiered runtime strategy can close the gap and protect data.

By Chaim Peer, Ian Dinno

F5 again recognized with six TrustRadius Buyer’s Choice Awards in 2026

F5 was once again honored with six TrustRadius Buyer’s Choice Awards—a testament to our continued commitment to delivering trusted, high-value solutions.

By Jay Kelley

F5ers shaping AI: A Q&A with Mark Toler on securing AI with purpose

Meet an F5 Product Marketing Manager shaping AI security through storytelling, trust, and impact in this Life at F5 Q&A.

By F5 Newsroom Staff

From sprawl to strategy: Why a sound strategy for application delivery and security is key

An accumulation of disconnected tools comes at a high price. See how a unified approach to application delivery and security reduces your total cost of ownership.

By Greg Maudsley

Simplify multicloud AI success with F5 and Google Cloud

Hybrid multicloud environments are complex, but are they too complex for AI? With the right tools and solutions from F5 and Google Cloud, you can get the connectivity, security, and intelligent orches...

By Beth McElroy

Why take a platform approach?

Learn why a converged platform for application delivery and cybersecurity offers the best path to simpler operations and stronger protection.

By Greg Maudsley

30 years of F5 through the eyes of F5ers

As F5 celebrates its 30th anniversary, we asked employees, including several who’ve been here for decades, what it means to thrive at F5. Read on to see their answers.

By F5 Newsroom Staff

AI compliance and regulation: Using F5 AI Guardrails to meet legal and industry standards

See how F5 AI Guardrails and F5 AI Red Team simplify compliance, reduce legal exposure, and enable organizations to demonstrate responsible AI governance.

By Jessica Brennan

OPSWAT and F5: Taking network traffic security to the next level

Integrating OPSWAT file security technologies into the F5 platform provides critical defenses to keep complex networks and sensitive data secure.

By George Prichici

Delivering AI applications at scale: The role of ADCs

Delivering AI applications reliably requires more than fast models or elastic infrastructure. It requires a control layer to govern how AI apps are consumed.

By Buu Lam

Compression isn’t about speed anymore; it’s about the cost of thinking

In the AI era, compression reduces the cost of thinking—not just bandwidth. Learn how prompt, output, and model compression control expenses in AI inference.

By Lori MacVittie

F5 NGINX STIGs: A security blueprint for public sector and regulated environments

Learn how F5 NGINX STIGs provide a security blueprint for U.S. DoD and regulated environments, helping you achieve compliance while building robust, zero-trust infrastructure with enhanced visibility ...

By Bill Church

Why sub-optimal application delivery architecture costs more than you think

Discover the hidden performance, security, and operational costs of sub‑optimal application delivery—and how modern architectures address them.

By Brian Ehlert, Ilya Krutov

Classifier-based vs. LLM-driven guardrails: What actually works at AI runtime

Not all AI guardrails work the same way. Learn why it’s important to know the distinction between classifier-based and LLM-driven guardrails.

By Jessica Brennan

MCP: The key to AI-ready application delivery

Model Context Protocol (MCP) enables secure, low-latency AI integration in modern applications. Learn why MCP is essential for future-proof application delivery strategies.

By Griff Shelley

Keyfactor + F5: Integrating digital trust in the F5 platform

By integrating digital trust solutions into F5 ADSP, Keyfactor and F5 redefine how organizations protect and deliver digital services at enterprise scale.

By Jack Spellacy

Responsible AI: Guardrails align innovation with ethics

AI innovation moves fast. But without the right guardrails, speed can come at the cost of trust, accountability, and long-term value.

By Mark Toler

Best practices for optimizing AI infrastructure at scale

Optimizing AI infrastructure isn’t about chasing peak performance benchmarks. It’s about designing for stability, resiliency, security, and operational clarity.

By Mark Menger

The hidden cost of unmanaged AI infrastructure

AI platforms don’t lose value because of models. They lose value because of instability. See how intelligent traffic management improves token throughput while protecting expensive GPU infrastructure.

By Scott Calvet

Rein in API sprawl with F5 and Google Cloud

Find out how F5 and Google Cloud can help you secure and manage your ever-increasing API integrations.

By Beth McElroy