Bots are cool. Bots are scary. Bots are the future. Bots are getting smarter every day.

Depending on what kind of bot we’re talking about, we’re either frustrated or fascinated by them. On the one hand, chat bots are often considered a key component of business’ digital transformation strategies. On the consumer side, they provide an opportunity to present a rapid response to questions and queries. On the internal side, they can execute tasks and answer questions on the status of everything from a recently filed expense report to the current capacity of your brand-spanking-new app.

On the other (and admittedly darker) hand, some bots are bad. Very bad. There are thingbots – those IoT devices that have been compromised and joined a Death Star botnet. And there are bots whose only purpose is to scrape, steal, and stop business through subterfuge.

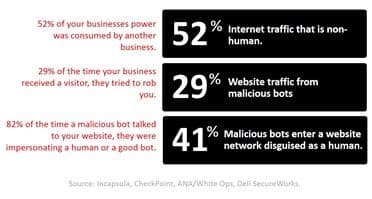

It is these latter bots we are concerned with today, as they are getting significantly smarter and sadly, they are now the majority of “users” on the Internet.

Seriously. 52% of all Internet traffic is non-human. Now some of that is business-to-business APIs and legitimate bots, like search indexers and media bots. But a good portion of it is just downright bad bots. According to Distil Networks, which tracks these digital rodents, “bad bots made up 20% of all web traffic and are everywhere, at all times.” For large websites, they accounted for 21.83% of traffic – a 36.43% increase since 2015. Other research tells a similar story. No matter who is offering the numbers, none of them are good news for business.

Distil Networks’ report notes that “in 2016, a third (32.36%) of sites had bad bot traffic spikes of 3x the mean, and averaged 16 such spikes per year.” Sudden spikes are a cause of performance problems (as load increases, performance decreases) as well as downtime.
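To make the "3x the mean" threshold concrete, here is a minimal sketch of how such spikes could be flagged in a series of daily bad-bot request counts. The function name `find_spikes`, the sample counts, and the choice to include the spike days themselves in the baseline are all assumptions for illustration; the report does not describe how it computed its figures.

```python
from statistics import mean


def find_spikes(daily_counts, multiplier=3.0):
    """Return the indices of days whose count exceeds multiplier * mean.

    Assumption: the baseline is the plain mean of the whole series,
    spike days included. A real monitor might use a rolling baseline.
    """
    if not daily_counts:
        return []
    threshold = multiplier * mean(daily_counts)
    return [i for i, count in enumerate(daily_counts) if count > threshold]


# Hypothetical week of bad-bot request counts with one obvious surge.
counts = [100, 120, 90, 110, 900, 105, 95]
spike_days = find_spikes(counts)
```

With these sample numbers the mean is roughly 217, so only the day with 900 requests clears the threshold.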

If the bots are attacking apps on-premises, they can not only cause outages but also drive up the cost associated with that app. Many apps are still deployed on platforms that require licenses. Each time a new instance is launched, so is an entry in the accounting ledger. It costs real money to scale software. Regardless of licensing costs, there are costs associated with every transaction because hardware and bandwidth still aren’t as cheap as we’d like.

In the cloud, scale is easier (usually) but you’re still going to pay for it. Neither compute nor bandwidth is free in the cloud, and like their on-premises counterparts, the cost of a real transaction is going to increase thanks to bot traffic.

The answer is elementary, of course. Stop the traffic before it gets to the app.

This sounds far easier than it is. You see, security is forced to operate as “player C” in the standard interpretation of the Turing Test. For those who don’t recall, the Turing Test forces an interrogator (player C) to determine which player (A or B) is a machine and which is human. And the interrogator can only use written responses, because otherwise, well, duh. Easy.

In much the same way today, security solutions must distinguish between human and machine using only digitally imparted information.

Web App Firewalls: Player ‘C’ in the Turing Security Test

Web application firewalls (WAFs) are designed to be able to do this. Whether as a service, on-premises, or in the public cloud, a WAF protects apps against bots by detecting them and refusing them access to the resources they desire. The problem is that many WAFs only filter bots that match known bad user-agents and IP addresses. But bots are getting smarter, and they know how to rotate through IP addresses and switch up user-agents to evade detection. Distil notes this increasing intelligence when it points out that 52.05% of “bad bots load and execute JavaScript—meaning they have a JavaScript engine installed.”
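A minimal sketch shows why matching on known bad user-agents and IP addresses falls short. The blocklist contents, the `is_blocked` helper, and the bot names are hypothetical and do not reflect any particular WAF's rule format; the IP addresses come from ranges reserved for documentation.

```python
# Hypothetical static blocklists of the kind a signature-only filter relies on.
BLOCKED_USER_AGENTS = {"BadBot/1.0", "ScraperX/2.3"}
BLOCKED_IPS = {"203.0.113.7"}


def is_blocked(ip, user_agent):
    """Block a request only if its IP or user-agent is on a static list."""
    return ip in BLOCKED_IPS or user_agent in BLOCKED_USER_AGENTS


# First attempt: the bot uses a known-bad identity and is stopped.
first_try = is_blocked("203.0.113.7", "BadBot/1.0")

# Second attempt: the same bot rotates to a fresh IP and a browser-like
# user-agent string, and the static lists no longer match.
second_try = is_blocked(
    "198.51.100.24",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
)
```

Here `first_try` is `True` and `second_try` is `False`: nothing about the bot changed except the two values the filter inspects.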

Which means you have to have a whole lot more information about the “user” if you’re going to successfully identify – and reject – bad bots. The good news is that information is out there, and it’s all digital. Just as there is a great deal that can be learned from a human’s body language, speech patterns, and vocabulary choices, so can a great deal be gleaned from the digital bits that are carried along with every transaction.

With the right combination of threat intelligence, device profiling, and behavioral analysis, a WAF can correctly distinguish bad bots from legitimate users – human or bot. Your choice of WAF determines whether a bot can outsmart your security strategy and effectively “win” the Turing Security Test.

Threat Intelligence

Threat intelligence combines geo-location with proactive classification of traffic and uses intelligence feeds from as many credible sources as possible to help identify bad bots. This is essentially “big security data” that enables an entire ecosystem of security partners to share intelligence that results in timely and thus more accurate identification of the latest bot attempts.

Device Profiling

Profiling a device includes comparing requests against known bot signatures and performing identity checks. Operating system, network, device type – everything that can be gleaned from a connection (and there’s a lot) can be used. Fingerprinting is also valuable because it turns out that the amount of information (perhaps inadvertently) shared by browsers (and bots alike) is pretty close to enough to uniquely identify them. A great read on this theory can be found on the EFF site. I’ll note that it’s been statistically determined that as of 2007, it required only 32.6 bits of information (roughly log2 of the world’s population at the time) to uniquely identify an individual. User-agent strings contain about 10.5 bits, and bots freely provide that.

Behavioral Analysis

In a digital world, however, profiles can change in an instant and location can be masked or spoofed. That’s why behavioral analysis is also part of distinguishing bad bots from legitimate traffic. This often takes the form of some sort of challenge. We see this as users in captchas and “I’m not a robot” checkboxes, but those are not the only means of challenging bots. Behavioral analysis also watches for session and transaction anomalies, as well as attempts to brute force access.
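As one illustration of watching for brute-force attempts, the sketch below counts failed logins per client inside a sliding time window. The class name `BruteForceDetector`, the thresholds, and the idea of keying on a single client identifier are assumptions made for the example, not a description of how any specific WAF implements behavioral analysis.

```python
from collections import defaultdict, deque


class BruteForceDetector:
    """Flag clients with too many failed logins inside a sliding window."""

    def __init__(self, max_failures=5, window_seconds=60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        # Maps a client identifier to the timestamps of its recent failures.
        self._failures = defaultdict(deque)

    def record_failure(self, client_id, timestamp):
        """Record one failed login; return True if the client looks abusive."""
        recent = self._failures[client_id]
        recent.append(timestamp)
        # Discard failures that have aged out of the window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_failures


detector = BruteForceDetector(max_failures=3, window_seconds=10.0)
# Four failures within a few seconds trip the detector on the fourth attempt.
rapid = [detector.record_failure("client-a", t) for t in (0.0, 1.0, 2.0, 3.0)]
# The same number of failures spread over a minute does not.
slow = [detector.record_failure("client-b", t) for t in (0.0, 20.0, 40.0, 60.0)]
```

The point of the window is that the rate of failures, not the total, is what separates a scripted attack from a person who occasionally mistypes a password.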

Using all three provides more comprehensive context and allows the WAF to correctly identify bad bots and refuse them access.
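One way to picture the three techniques working together is as independent signals feeding a single decision. The sketch below is purely illustrative: the `RequestContext` fields, the equal weighting, and the block threshold are hypothetical choices, and a production WAF would weigh far richer evidence.

```python
from dataclasses import dataclass


@dataclass
class RequestContext:
    """Hypothetical per-request signals, one from each technique."""
    ip_on_threat_feed: bool              # threat intelligence
    fingerprint_matches_known_bot: bool  # device profiling
    failed_challenge_or_anomaly: bool    # behavioral analysis


def bot_score(ctx):
    """Count how many of the three signals point to a bad bot."""
    return sum(
        [
            ctx.ip_on_threat_feed,
            ctx.fingerprint_matches_known_bot,
            ctx.failed_challenge_or_anomaly,
        ]
    )


def should_block(ctx, threshold=2):
    """Block when at least `threshold` signals agree (assumed policy)."""
    return bot_score(ctx) >= threshold


# A bot that rotated to a clean IP still trips the other two signals.
rotating_bot = RequestContext(False, True, True)
# A legitimate user who happens to share a flagged IP is let through.
shared_ip_user = RequestContext(True, False, False)
```

Requiring agreement between signals means a bot has to defeat more than one check at once, while a single false positive does not lock out a real user.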

We (that’s the Corporate We) have always referred to this unique combination of variables as ‘context’. Context is an integral component of many security solutions today – access control, identity management, and app security. Context is critical to an app-centric security strategy, and it is a key capability of any WAF able to deal with bad bots accurately and effectively. Context provides the “big picture” and allows a WAF to correctly separate bad from good and in doing so protect valuable assets and constrain the costs of doing business.

The fix is in. Bots are here to stay, and with the right WAF you can improve your ability to prevent them from doing what bad bots do – steal data and resources that have real impacts on the business’ bottom line.
