Every new technology brings both opportunities and risks. AI is no different. It brings groundbreaking advancements, such as automating complex workflows, enhancing decision-making with predictive analytics, and creating realistic synthetic media, but it also introduces vulnerabilities such as data breaches, model exploitation, and bias amplification. As AI capabilities rapidly evolve, security measures often lag behind, leaving organizations exposed to both obvious dangers and unforeseen threats. When technology advances faster than its safeguards, the responsibility for security often shifts from creators to practitioners.

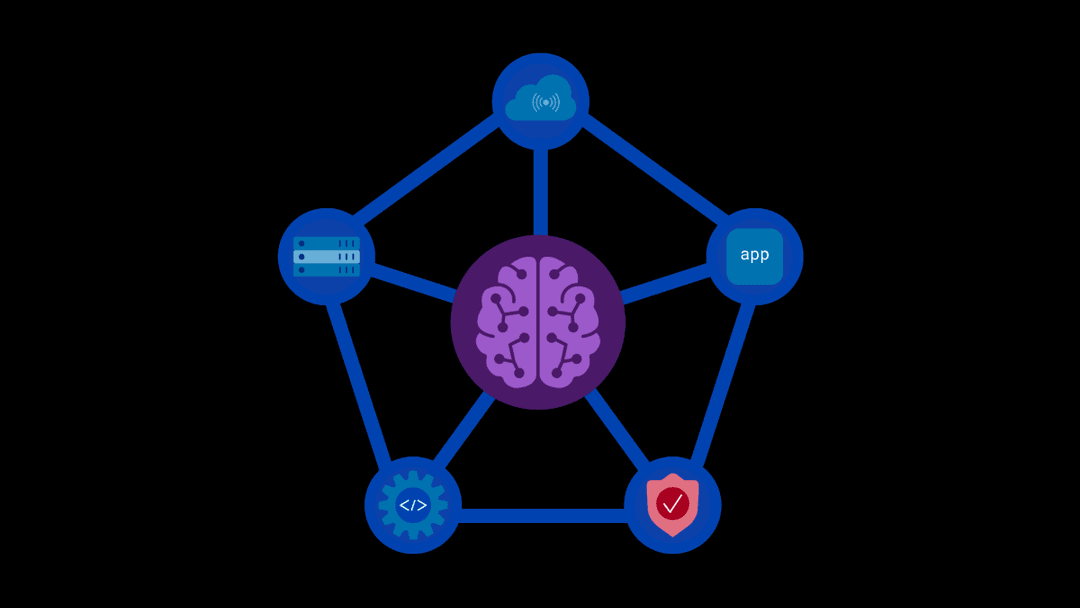

For decades, F5 has helped organizations navigate the shifting digital landscape, accelerating innovation, ensuring reliable connectivity, and protecting sensitive systems from potential harm. With AI now at the forefront of technological progress, it demands not only thoughtful adoption but also adaptive security measures that protect users, secure data, combat adversarial threats, and ensure ethical AI governance. Organizations racing to realize the benefits of AI need adaptive “guardrails” that protect every user, secure data moving at speed and scale, and defend every angle against bad actors.

Introducing F5 AI Guardrails

Today, F5 is pleased to announce that we have completed our acquisition of CalypsoAI, a pioneer at the frontier of AI security, and that we are introducing the latest addition to the safeguards on our digital roads: F5 AI Guardrails, comprehensive runtime security for AI models and agents. With this addition, F5 can now provide a model-agnostic solution that secures AI data, combats adversarial threats, and ensures responsible AI governance across all interactions. The AI landscape is constantly evolving, with millions of proprietary and open-source models, as well as their fine-tuned variants. The present and future of AI is not limited to a few monolithic LLMs; it is a cascade of proprietary and public AI innovations, each requiring consistent privacy and security independent of any single model provider. Every model carries a different portfolio of risks, so every model needs tailored Guardrails to keep AI interactions from drifting beyond acceptable boundaries.

F5 AI Red Team identifies threats obvious and obscure

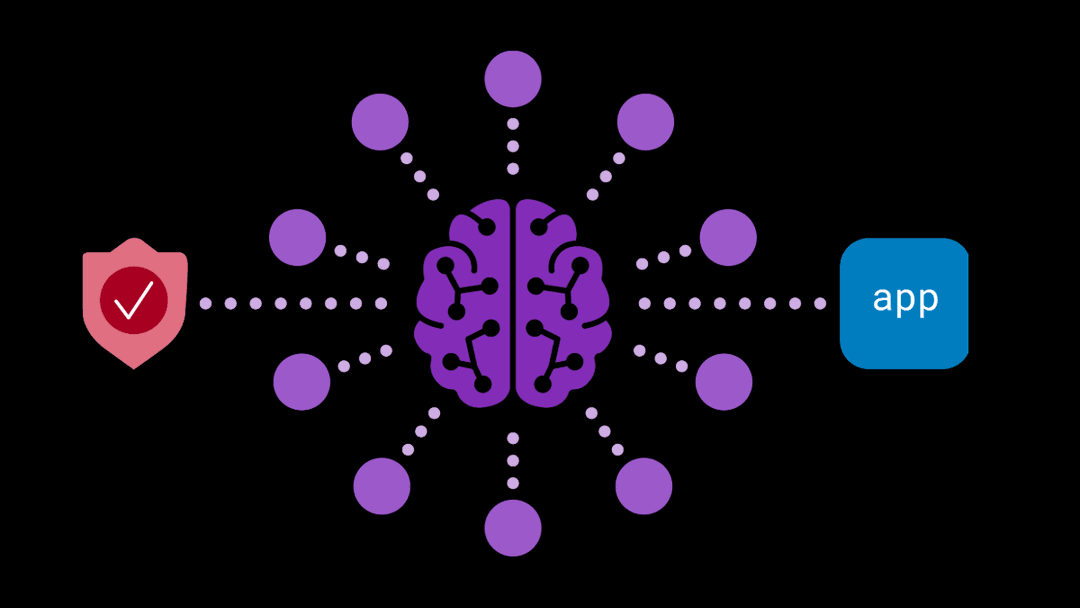

You can’t secure what you can’t see. Across all physical and digital highways, there are countless points where guardrails could be useful. While known accident locations and high-traffic intersections are naturally prioritized, a more strategic, preventative approach is needed. F5 AI Red Team acts as the eye in the sky, analyzing the landscape to simulate the real-world chaos of rush-hour traffic, the actions of worst-case road-rage drivers, and the vulnerabilities they could expose. By identifying not only where accidents in AI systems are likely but also where they would have the greatest impact, AI Red Team shows exactly where guardrails need to go and how urgently they should be placed.

We are introducing AI Red Team to deliver this capability, unleashing swarms of autonomous agents that simulate thousands of attack patterns and hunt for vulnerabilities in AI systems. These agent swarms accelerate the discovery of both common AI vulnerabilities and the most obscure, worst-case exploits that security teams lack the time to consider. What’s more, the simulated attack techniques are rooted in our preeminent AI vulnerability database, a vast library updated with more than 10,000 new attack patterns each month to reflect emerging trends. The insights are valuable on their own, but with a few clicks, teams can translate every insight into active AI Guardrails.

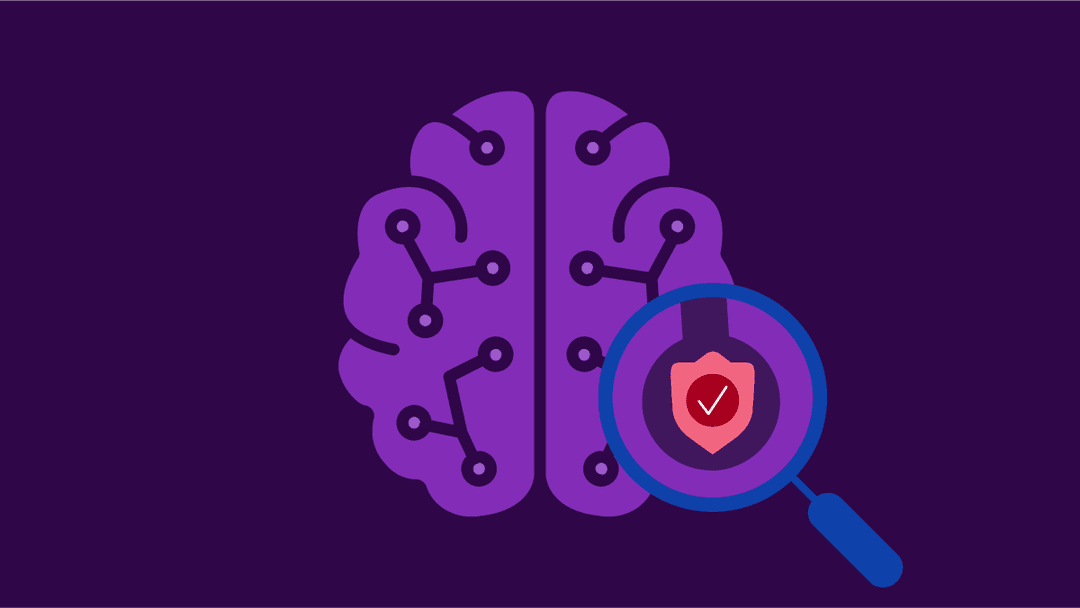

See into the AI black box

The ‘what’ and ‘where’ of AI threats are foundational to maintaining security posture, but understanding the ‘how’ and ‘why’ is essential to improving it. The opaque, ‘black box’ nature of AI systems has historically made this difficult, calling for an entirely new approach. Suppose your AI hits a Guardrail and a prompt gets blocked. With traditional methods like static keyword or regex filtering, you would know the user, the date and time, and the trigger, but you wouldn’t have the full picture of what occurred. F5 AI Guardrails and AI Red Team, by contrast, prioritize explainability, helping customers understand why an AI system made a particular decision or took a particular action.

With advanced capabilities like Agentic Fingerprints and Outcome Analysis, teams now have granular insight into every AI interaction, including detailed reasoning on why a prompt was accepted or blocked and the context leading up to that decision. This level of detail is not only critical for informing security teams; it also radically simplifies compliance with accessible audit trails and ready-made templates organized by regulatory framework.

Secure your AI present and anticipate your AI future

AI is not going away, and it is not slowing down. MCP servers, the A2A Protocol, retrieval-augmented generation (RAG), and whatever innovation comes next will exponentially fuel the global engines of AI effectiveness. However, AI effectiveness cannot advance at the expense of AI security. F5 has earned a reputation for excellence at the application layer, helping organizations move faster and smarter along our digital highways, and we see no reason to change that for the AI era. F5 AI Guardrails and AI Red Team let us keep that promise of intelligent speed while delivering comprehensive security for AI models, agents, and connected data.

We can’t predict exactly what the AI landscape will look like a year from now. At F5, we believe the best way to prepare for what’s next is to empower organizations with tools that adapt to their needs rather than dictate their path. With AI Guardrails and AI Red Team, and the combined power of the F5 Application Delivery and Security Platform (ADSP), we’re giving you the flexibility to adapt across any environment, cloud, and AI model, knowing that the best judge of your future needs isn’t us. It’s you.
