As organizations accelerate innovation and develop AI-powered, life-changing products such as self-driving cars and large language models (LLMs), efficient infrastructure is critical for scaling operations and staying competitive. Historically, data centers have relied on central processing units (CPUs) for general-purpose computing and graphics processing units (GPUs) for the intensive parallel processing central to AI and machine learning. As AI models grow in scale and complexity, the data center itself has become the new unit of computing, pushing the boundaries of traditional cloud networks. To enable this shift toward data center-scale computing, the data processing unit (DPU) has emerged as a third pillar of computing.

The rise of AI factories

Earlier in our AI factory series, F5 defined an AI factory as a massive storage, networking, and computing investment serving high-volume, high-performance training and inference requirements. Much as a traditional manufacturing plant turns raw materials into finished goods, an AI factory uses pretrained AI models to transform raw data into intelligence.

What is a data processing unit (DPU)?

A DPU is a programmable processor designed to handle vast data movement and processing via hardware acceleration at a network's line rate. In late 2024, we announced BIG-IP Next for Kubernetes deployed on NVIDIA BlueField-3 DPUs. NVIDIA BlueField is an accelerated computing platform for data center infrastructure, purpose-built to power NVIDIA AI factories. The CPU handles general-purpose computing, and the GPU excels at accelerated tasks such as graphics rendering and the large-scale vector and matrix computations behind AI. The NVIDIA BlueField DPU, by contrast, is often built into a PCIe (Peripheral Component Interconnect Express) network interface card (NIC) that provides network connectivity for a host or chassis in an AI cluster. In other words, the NIC has essentially become a powerful processor, optimized for processing data as it moves in and out of the server. When a single AI cluster spans multiple hosts or chassis, the BlueField DPU can also serve as the networking device that interconnects them.
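
To put "line rate" in perspective, the short Python sketch below does the arithmetic for a single port. It assumes a 400 Gb/s Ethernet link and standard Ethernet framing (8 bytes of preamble and start-of-frame delimiter plus a 12-byte inter-frame gap per frame). The link speed and frame sizes are illustrative choices, not figures from this post, and the calculation does not model BlueField-3 or BIG-IP Next behavior.

```python
# Back-of-the-envelope per-frame time budget at full line rate.
# Illustrative assumptions only: a 400 Gb/s Ethernet port and standard
# Ethernet framing. Each frame occupies extra bytes on the wire for the
# preamble + start-of-frame delimiter (8 bytes) and inter-frame gap (12 bytes).

WIRE_OVERHEAD_BYTES = 8 + 12  # preamble/SFD + inter-frame gap


def packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second a link can carry at full line rate."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


def ns_per_packet(link_bps: float, frame_bytes: int) -> float:
    """Time available to handle each frame, in nanoseconds."""
    return 1e9 / packets_per_second(link_bps, frame_bytes)


if __name__ == "__main__":
    link = 400e9  # assumed 400 Gb/s port (illustrative)
    # 64 bytes is the minimum Ethernet frame; 1518 bytes the standard maximum.
    for size in (64, 1518):
        pps = packets_per_second(link, size)
        print(f"{size:>5}-byte frames: {pps / 1e6:,.1f} Mpps, "
              f"{ns_per_packet(link, size):.2f} ns per frame")
```

With minimum-size 64-byte frames, the arithmetic works out to roughly 595 million frames per second, or about 1.68 ns per frame, which is only a handful of clock cycles on a single multi-gigahertz CPU core. That tight budget is one reason per-packet work at these speeds tends to be moved into dedicated hardware such as a DPU rather than handled in host software.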


Unlocked power

By handling software-defined networking, storage management, and security services, BlueField DPUs reduce the computational burden on CPUs, allowing them to focus on the tasks at which they excel. This offloading capability is crucial for AI factories, where vast amounts of data must be processed and transferred rapidly to meet the demands of complex AI models and real-time inference tasks.

BlueField DPUs also contribute to energy efficiency and scalability within AI factories. Because AI factories require massive computational resources, efficient management of power and cooling becomes paramount. With specialized acceleration engines and high-performance network interfaces, DPUs help ensure data is processed and transported with minimal latency and power consumption. This efficiency not only reduces operational costs but also enables AI factories to scale effectively. With BlueField DPUs, AI factories and other large-scale deployments can achieve balanced, high-performance, energy-efficient infrastructure that supports the continuous innovation and deployment of AI technologies.

Where are BlueField DPUs deployed in AI factories?

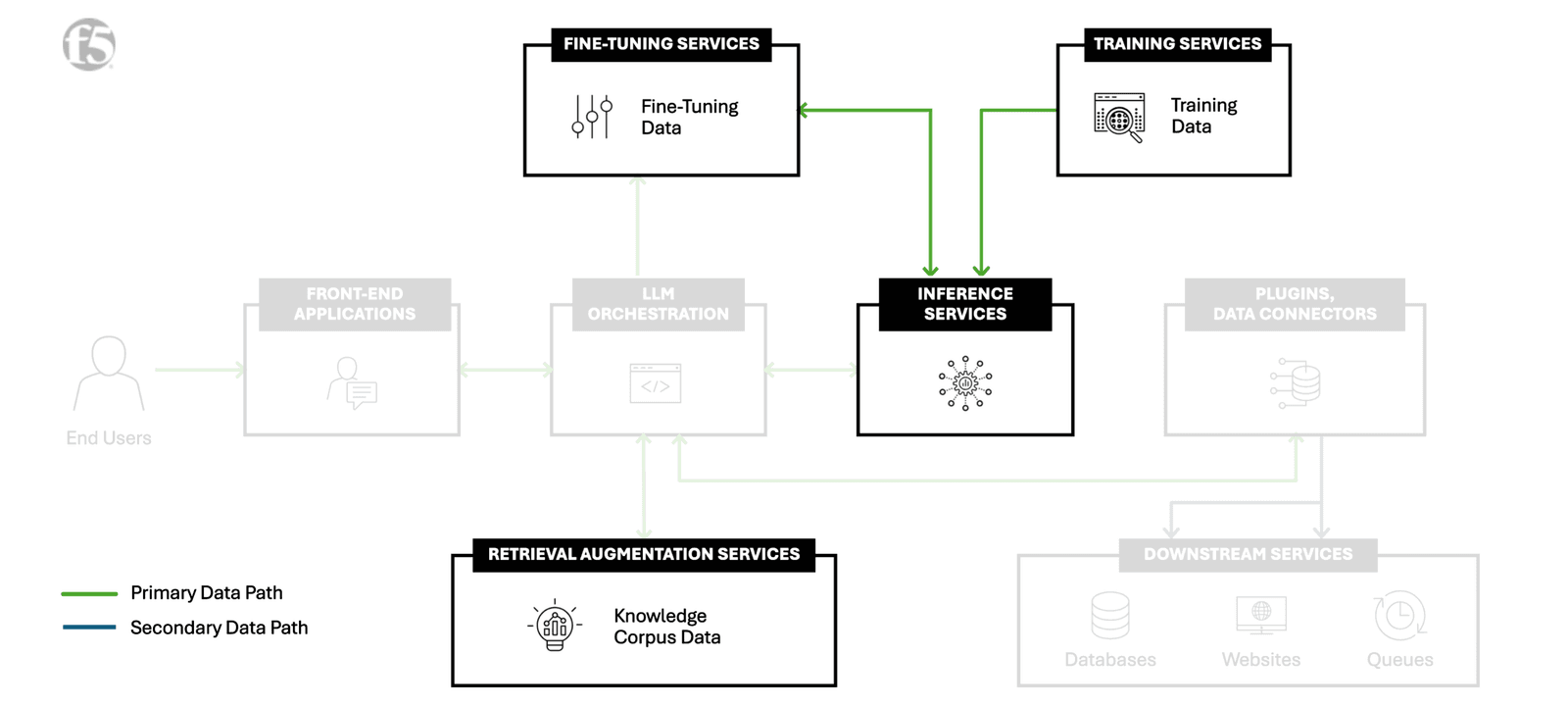

When looking at F5’s AI Reference Architecture, DPUs are commonly deployed within the functional areas of RAG corpus management, fine-tuning, training, and inference services as well as the storage clusters supporting these functions. Additionally, DPUs are found in numerous applications where high-performance data throughput and power efficiency are required, including examples like DPUs supporting 5G radio access network (RAN) deployments.

The F5 AI Reference Architecture highlighting areas where DPUs are commonly deployed.

Offloading and accelerating application delivery and security to the DPU

AI factories bring new requirements for efficient traffic management and robust security, shifting the focus to how data flows and to hardening infrastructure against threats. F5 BIG-IP Next for Kubernetes deployed on NVIDIA BlueField-3 DPUs enables low-latency, high-throughput connectivity by offloading data movement from the CPU to the DPU and accelerating it there. It also integrates comprehensive security features, such as firewalls, DDoS mitigation, WAF, API protection, and intrusion prevention, directly on the programmable NVIDIA BlueField-3 DPU. This lets you build an architecture that isolates AI models and apps from threats, helping ensure data integrity and sovereignty.

BIG-IP Next for Kubernetes supports multi-tenancy with network isolation, so multiple users and AI workloads can be hosted on a single infrastructure. It also simplifies managing large-scale AI infrastructure by providing a central point for networking, traffic management, security, and multi-tenant environments. This centralization streamlines operations and reduces operational expenditure, while detailed traffic data provides network visibility and supports performance optimization. The integration between BIG-IP Next for Kubernetes and the NVIDIA BlueField-3 DPU helps AI factories operate at their full potential while reducing tool sprawl and operational complexity.

Powered by F5

For enterprises investing in AI, ensuring their infrastructure is optimized and secure is non-negotiable. F5 BIG-IP Next for Kubernetes deployed on NVIDIA BlueField-3 DPUs is a strategic investment to deliver high performance, scalability, and security, maximizing the return on large-scale AI infrastructure. For organizations deploying GPUs and DPUs to support AI factory investments, contact F5 to learn how BIG-IP Next for Kubernetes can enhance your AI workloads.

F5's focus on AI doesn't stop here: explore how F5 secures and delivers AI apps everywhere.

Interested in learning more about AI factories? Explore other posts in our AI factory blog series:

- What is an AI Factory?
- Retrieval-Augmented Generation (RAG) for AI Factories
- Optimize Traffic Management for AI Factory Data Ingest
- Optimally Connecting Edge Data Sources to AI Factories
- Multicloud Scalability and Flexibility Support AI Factories
- API Protection for AI Factories: The First Step to AI Security
- The Importance of Network Segmentation for AI Factories
- AI Factories Produce the Most Modern of Modern Apps: AI Apps
