Good security starts with a good defense, and in that respect generative AI is no different than other new technologies of recent years. Prevention, detection, and response are as necessary for threats targeting AI applications as for any other app or attack surface. Attackers have the same goals as always: break into the environment, get access to secrets and proprietary data, disrupt operations, co-opt systems, or inflict brand damage.

What’s different is the rapid adoption of generative AI by end users and the sense within organizations that unless they catch the wave, they’ll be left behind by competitors. Security teams are caught off guard by how widespread employee use of unauthorized Large Language Models (LLMs) has become, or how quickly leadership is looking to get LLM-based applications into production. The call is coming from inside the house!

Another difference is the natural language-based interface of the most commonly used LLMs, which lowers the barrier to entry for benign and malicious users alike. When successful prompt attack techniques include simple line breaks, emojis, and poetry, defenders must learn new tricks (and possibly break out the thesaurus).

The convergence of these forces has security teams in a tight spot. The traditional defense in high-risk, high uncertainty situations is to limit exposure by closing the aperture of allowable activity. For LLMs, that means controlling which models can be used, who has access, and what prompts and responses are allowed to go through. But if the controls are too tight, productivity, employee morale, and customer experience will suffer. Imagine interacting with a medical chatbot and being blocked from describing your symptoms because they fall foul of a sexual terms policy.

What security teams need is control, power, and flexibility to protect the organization, its employees, and its customers while enabling smooth operations, successful projects, and quality experience.

Solutions for AI Security

Control

- Data Security: Organizations with sensitive or proprietary data building internal employee-facing AI applications are often better served with an on-premises solution that keeps data inside the enterprise.

- Robust Access Controls: Granular permissions for individuals and teams — and increasingly, AI agents — to limit access to models, guardrails, and operational metrics and keep costs under control.

- Regulatory Compliance: Features that help organizations stay compliant with proliferating regulatory frameworks for AI use and data privacy that span industry and geo-requirements.

Power

- Rapid Time to Value: Deployment and setup of security solutions needs to be quick and easy, especially when installing on-prem, so organizations can build, test, and release applications sooner.

- GenAI-Powered Defenses: While regex- and neural-net-based guardrails still have their strengths, they don’t come close to the adaptability and contextual awareness offered by GenAI.

- Red-Teaming: Vendor solutions need to offer tools that replicate realistic adversarial attacks so applications can be properly tested before going into production, and whenever new attacks and vulnerabilities are discovered.

Flexibility

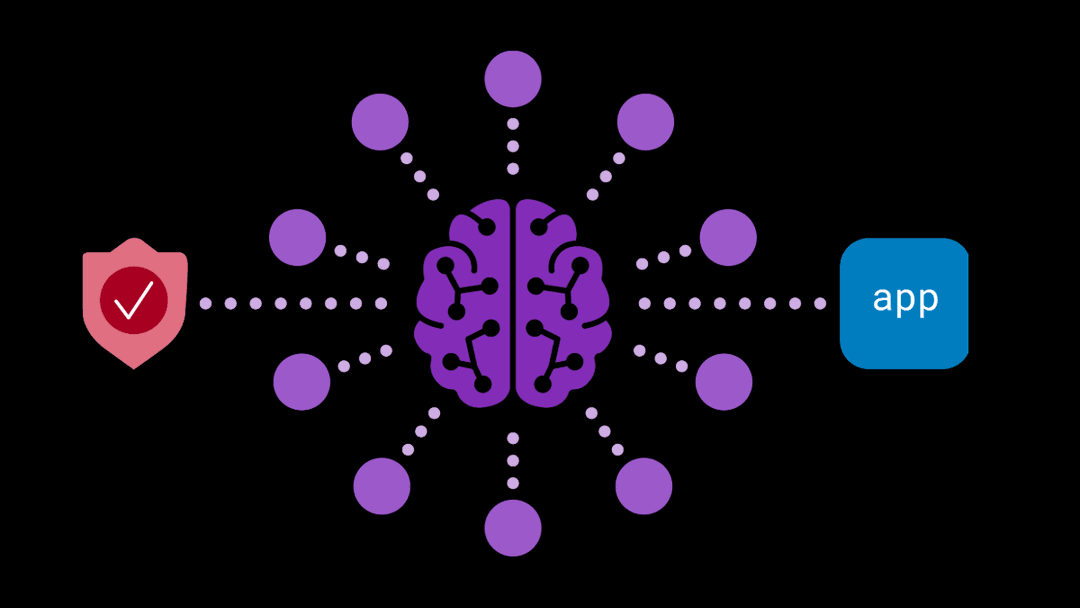

- Model Agnostic: Enterprises need to be able to select the best public models for their use cases, change models when better ones come along, and use their own internal models and RAG data.

- Customization: No security solution can anticipate every specific use case and risk profile, so organizations need the ability to adjust and fine-tune out-of-the-box controls and attacks.

- Robust API: It goes without saying that every enterprise security product needs a good API enabling data to be pulled into existing workflows.

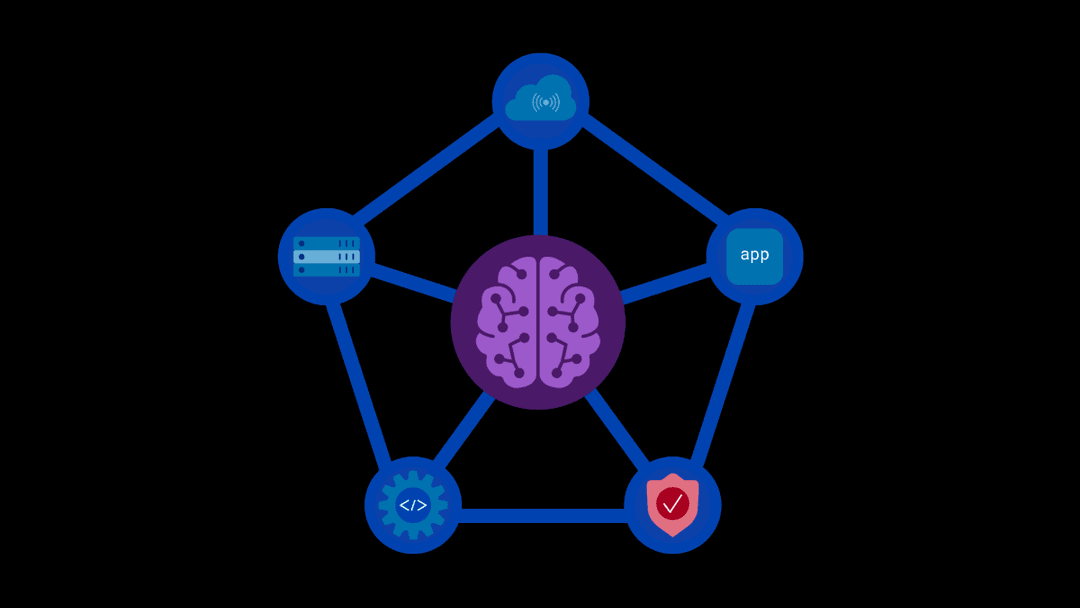

- Deployment Options: Because every application workflow is different, infrastructure teams want discretion on where AI security tools sit in the tech stack.

There are a small handful of solutions on the market right now addressing the problem of AI application security. Of those, only F5 AI runtime security solutions are explicitly focused on helping the security team mount an effective defense for internal and customer-facing GenAI-based applications.

About the Author

Related Blog Posts

The hidden cost of unmanaged AI infrastructure

AI platforms don’t lose value because of models. They lose value because of instability. See how intelligent traffic management improves token throughput while protecting expensive GPU infrastructure.

AI security through the analyst lens: insights from Gartner®, Forrester, and KuppingerCole

Enterprises are discovering that securing AI requires purpose-built solutions.

F5 secures today’s modern and AI applications

The F5 Application Delivery and Security Platform (ADSP) combines security with flexibility to deliver and protect any app and API and now any AI model or agent anywhere. F5 ADSP provides robust WAAP protection to defend against application-level threats, while F5 AI Guardrails secures AI interactions by enforcing controls against model and agent specific risks.

Govern your AI present and anticipate your AI future

Learn from our field CISO, Chuck Herrin, how to prepare for the new challenge of securing AI models and agents.

F5 recognized as one of the Emerging Visionaries in the Emerging Market Quadrant of the 2025 Gartner® Innovation Guide for Generative AI Engineering

We’re excited to share that F5 has been recognized in 2025 Gartner Emerging Market Quadrant(eMQ) for Generative AI Engineering.

Self-Hosting vs. Models-as-a-Service: The Runtime Security Tradeoff

As GenAI systems continue to move from experimental pilots to enterprise-wide deployments, one architectural choice carries significant weight: how will your organization deploy runtime-based capabilities?