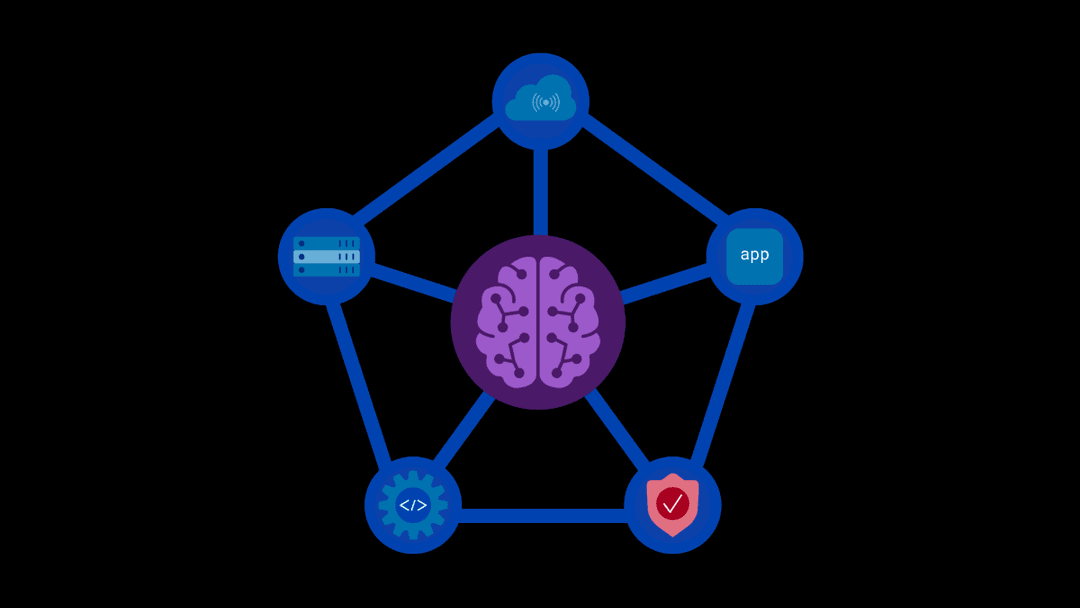

AI-dependent applications must be secure from the start if they are to protect the data they access or manipulate and earn the trust of their users. We’ve identified several best practices for secure AI application development.

Incorporate Security from the Beginning

Security must not be an afterthought in AI application development. Implementing security measures from the design phase, an approach known as “security by design,” ensures potential vulnerabilities are addressed early. It includes:

- Conducting threat modeling to identify and mitigate potential security risks.

- Defining security requirements alongside functional requirements.

- Ensuring secure coding practices are followed throughout development.

Example: A financial application using AI for fraud detection should include data encryption and secure data storage protocols from the outset to protect sensitive financial data.

Ensure Data Privacy and Compliance

AI applications that handle large amounts of data and are used across international boundaries must comply with relevant regulations, such as the General Data Protection Regulation (GDPR) and the recent EU AI Act for applications used in Europe. Key practices include:

- Data Minimization: Collect only the data the AI application needs to function.

- Anonymization and Pseudonymization: Protect personal data by anonymizing or pseudonymizing it, making it harder to trace back to individuals.

- User Consent: Ensure that users explicitly consent to having their data collected and processed.
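
The three practices above can be combined into a single preprocessing step that runs before data reaches the model. The following is a minimal sketch under stated assumptions, not a compliance recipe: the field names, the `ALLOWED_FIELDS` and `IDENTIFIER_FIELDS` sets, and the `prepare_record` helper are hypothetical, and the HMAC key is assumed to come from a secrets manager. Keyed hashing is pseudonymization, not anonymization; under GDPR, pseudonymized data is still personal data.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical allowlist: the only fields this illustrative model needs.
ALLOWED_FIELDS = {"age_band", "diagnosis_code", "visit_count"}

# Hypothetical direct identifiers: kept only as keyed pseudonyms, never raw.
IDENTIFIER_FIELDS = {"patient_id"}


def pseudonymize(value: str, key: bytes) -> str:
    """Return a keyed HMAC-SHA256 pseudonym (64 hex characters) for an identifier."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, key: bytes) -> Optional[dict]:
    """Apply consent, data minimization, and pseudonymization to one raw record."""
    # User consent: drop any record without an explicit opt-in.
    if record.get("consent") is not True:
        return None
    # Data minimization: keep only allowlisted fields (names, emails, etc. are dropped).
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    # Pseudonymization: replace direct identifiers with keyed pseudonyms.
    for field in IDENTIFIER_FIELDS:
        if field in record:
            cleaned[field] = pseudonymize(str(record[field]), key)
    return cleaned


if __name__ == "__main__":
    # Assumption: in production the key comes from a secrets manager, not source code.
    key = b"example-key-do-not-use"
    raw = {
        "patient_id": "12345",
        "name": "Jane Doe",
        "email": "jane@example.com",
        "age_band": "40-49",
        "diagnosis_code": "E11",
        "visit_count": 3,
        "consent": True,
    }
    print(prepare_record(raw, key))
```

A keyed HMAC is used instead of a plain hash because identifiers such as patient numbers are often guessable; without the key, an attacker cannot rebuild the mapping by hashing candidate values.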

Example: A healthcare AI application must anonymize patient data to comply with GDPR, ensuring that personal health information is protected and cannot be linked back to individual patients.

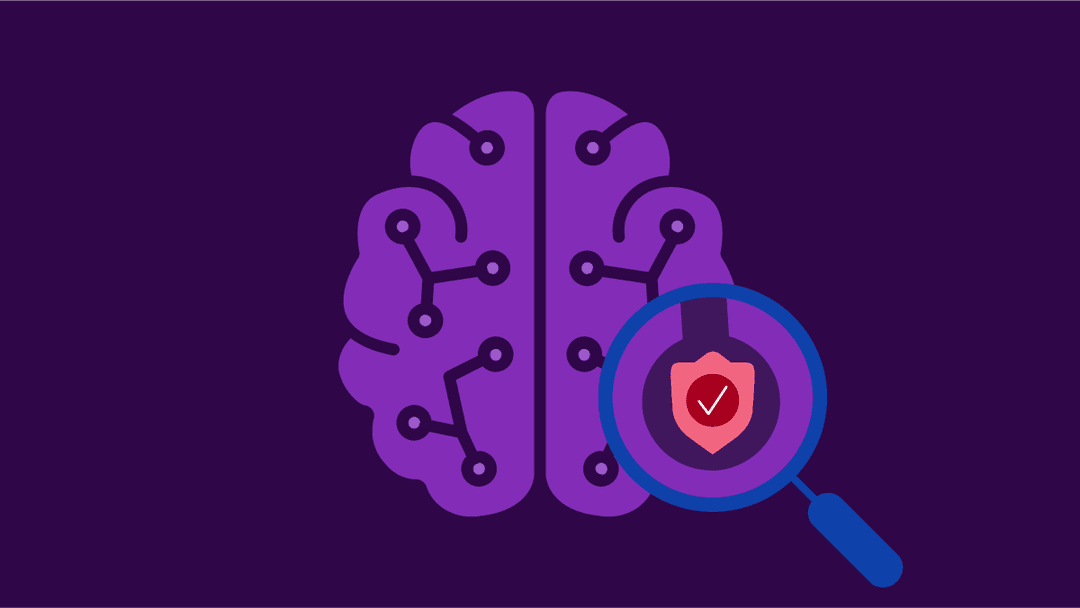

Implement Robust Model Security

AI models themselves can be targets for attacks, such as model inversion or adversarial attacks. Protecting your AI models involves:

- Access Control: Restrict access to your models, ensuring only authorized personnel can interact with or modify them. Our policy-based access controls ensure that models and data are protected from unauthorized access from inside or outside your organization.

- Model Monitoring: Continuously monitor your AI models for unusual activities or performance anomalies that might indicate an attack. Our security and enablement platform allows administrators to apply rate limits to mitigate the threat of model denial of service (DoS) attacks and provides end-to-end visibility into user interactions.

- Regular Updates: Keep your models and the underlying systems updated with the latest security patches.
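
Access control and rate limiting can be enforced together at a gateway that sits in front of the model. The following is a minimal sketch assuming a simple role-based permission table and a per-user token bucket. `ROLE_PERMISSIONS`, `TokenBucket`, `ModelGateway`, and the role and action names are hypothetical and do not describe any particular product's API.

```python
import time
from dataclasses import dataclass, field

# Hypothetical role-to-permission table; anything not listed is denied.
ROLE_PERMISSIONS = {
    "admin": {"query", "update_model"},
    "analyst": {"query"},
}


@dataclass
class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    capacity: float
    rate: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ModelGateway:
    """Checks permissions, then a per-user rate limit, before a request reaches the model."""

    def __init__(self, capacity: float = 5, rate: float = 1.0) -> None:
        self.capacity = capacity
        self.rate = rate
        self.buckets = {}  # user -> TokenBucket (in memory, single process)

    def authorize(self, user: str, role: str, action: str) -> bool:
        # Access control: the role must explicitly grant the requested action.
        if action not in ROLE_PERMISSIONS.get(role, set()):
            return False
        # Rate limiting: each user gets their own bucket to blunt request floods.
        if user not in self.buckets:
            self.buckets[user] = TokenBucket(self.capacity, self.rate)
        return self.buckets[user].allow()


if __name__ == "__main__":
    gateway = ModelGateway(capacity=3, rate=0.5)
    print(gateway.authorize("bob", "analyst", "update_model"))  # False: denied by role
    print([gateway.authorize("alice", "analyst", "query") for _ in range(5)])
```

A token bucket permits short bursts up to `capacity` while capping the sustained rate at `rate` requests per second. Checking permissions first means denied requests do not consume a user's quota. A multi-instance deployment would need shared state (for example, a central store) rather than this in-memory dictionary.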

Example: An AI-based chatbot for customer service should have restricted access controls and real-time monitoring to detect and respond to potential adversarial inputs designed to exploit the model.
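
For the monitoring side, one simple way to spot unusual activity is to compare each new value of an operational metric (for example, a chatbot user's requests per minute) against its recent baseline. This is a minimal rolling z-score sketch; the `RollingAnomalyDetector` class, the chosen metric, the window size, and the threshold are assumptions to be tuned. A statistical check like this complements dedicated adversarial-input detection rather than replacing it.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags metric values that deviate sharply from a rolling baseline (z-score)."""

    def __init__(self, window: int = 60, threshold: float = 3.0, min_samples: int = 10) -> None:
        self.values = deque(maxlen=window)  # most recent observations only
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Record one observation and return True if it looks anomalous."""
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma == 0:
                # Flat baseline: any change at all is unusual.
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.threshold
        # The value joins the baseline either way; a stricter design might exclude flagged values.
        self.values.append(value)
        return anomalous


if __name__ == "__main__":
    # Hypothetical requests-per-minute readings for one chatbot user.
    detector = RollingAnomalyDetector(window=30, threshold=3.0, min_samples=5)
    for rpm in [10, 11, 9, 10, 11, 10, 9, 100]:
        if detector.observe(rpm):
            print(f"alert: {rpm} requests/min is far outside the recent baseline")
```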

Secure Development and Deployment Practices

Following secure software development practices is key to ensuring your AI application lifecycle is safe from threats and vulnerabilities. These include:

- Code Reviews and Audits: Regularly conduct code reviews and security audits to identify and fix vulnerabilities.

- Automated Testing: Implement automated security testing tools to continuously check for security issues throughout development.

- Secure Deployment: Use secure deployment practices, such as containerization and secure configuration management, to protect your application in production.

- Gap analysis: Review protocols and practices regularly to identify any gaps that emerge due to new or updated tools.

Example: A machine learning model deployed in a cloud environment should run in containers, so that workloads are isolated and a security breach in one container is less likely to affect the entire system.
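
Secure configuration management can be backed by an automated check that runs in the CI pipeline before deployment. The sketch below assumes container settings shaped like Kubernetes `securityContext` fields (`privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`, `readOnlyRootFilesystem`). The `REQUIRED_SETTINGS` baseline and the helper names are hypothetical, and a real pipeline would parse the deployment manifests rather than use a hard-coded dictionary.

```python
# Hypothetical hardening baseline, modeled on Kubernetes container securityContext fields.
REQUIRED_SETTINGS = {
    "privileged": False,
    "allowPrivilegeEscalation": False,
    "runAsNonRoot": True,
    "readOnlyRootFilesystem": True,
}


def check_container(name: str, security_context: dict) -> list:
    """Return findings for one container; an empty list means the baseline is met."""
    findings = []
    for setting, expected in REQUIRED_SETTINGS.items():
        # Unset values are reported too, because several Kubernetes defaults are permissive.
        actual = security_context.get(setting)
        if actual != expected:
            findings.append(f"{name}: {setting} is {actual!r}, expected {expected!r}")
    return findings


def check_deployment(containers: dict) -> list:
    """Check every container in a {name: securityContext} mapping."""
    findings = []
    for name, context in containers.items():
        findings.extend(check_container(name, context))
    return findings


if __name__ == "__main__":
    deployment = {
        "inference": {
            "privileged": False,
            "allowPrivilegeEscalation": False,
            "runAsNonRoot": True,
            "readOnlyRootFilesystem": True,
        },
        "feature-loader": {"privileged": True},
    }
    problems = check_deployment(deployment)
    for problem in problems:
        print(problem)
    # In CI, a non-empty result would fail the build, e.g. via a non-zero exit code.
```

Treating missing settings as failures is a deliberate default-deny choice: a container passes only when every hardening setting is stated explicitly.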

Educate and Train Your Team

Security is a shared responsibility, and a workforce that doesn’t understand its role in your organization’s digital security is a significant vulnerability. Ensuring your development team is well-versed in secure AI development practices is critical. This involves:

- Regular Training: Conduct regular training sessions on the latest security threats and secure development practices.

- Security Champions: Designate employees who are knowledgeable and enthusiastic about security as “champions” within your team to advocate and enforce security best practices.

- Collaborative Culture: Foster a culture of collaboration where security is seen as a core component of development rather than a hindrance.

Example: Regular security workshops and training sessions can keep your team updated on the latest security trends and practices, ensuring they are equipped to handle novel and emerging threats.

Continuous Monitoring and Incident Response

Even with the best practices in place, security incidents can occur. Establishing robust monitoring and incident response protocols is important.

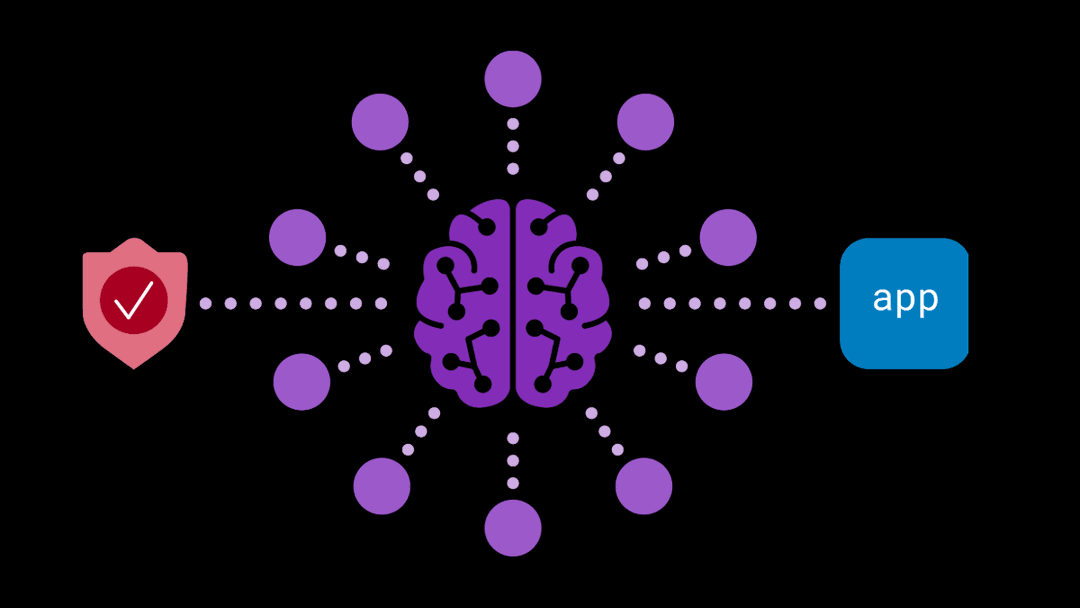

- Real-time Monitoring: Implement real-time monitoring tools to detect and respond promptly to security threats. F5’s model-agnostic security solutions monitor and record all user and administrator interactions with each model, enabling real-time auditing and response.

- Incident Response Plan: Develop and regularly update an incident response plan to ensure quick and effective action in the event of a security breach.

- Post-Incident Analysis: Conduct post-incident analyses to understand the root causes and improve your security measures and posture.
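
Real-time auditing and post-incident analysis both depend on interaction records that cannot be silently altered. One common technique is a hash chain, in which each audit entry stores the hash of the previous entry, so editing any earlier entry breaks verification. This is a minimal in-memory sketch; the `AuditLog` class and its field names are hypothetical. It detects tampering rather than preventing it: truncating the newest entries, or rewriting the whole log and recomputing every hash, would not be caught, so the log would also need to be shipped to write-once storage.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only, in-memory audit log whose entries are linked by SHA-256 hashes."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, actor: str, role: str, action: str, model: str) -> dict:
        """Append one interaction, chained to the hash of the previous entry."""
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"ts": time.time(), "actor": actor, "role": role,
                "action": action, "model": model, "prev": prev}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was altered, removed, or reordered."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("alice", "admin", "update_model", "fraud-detector")
    log.record("bob", "analyst", "query", "fraud-detector")
    print(log.verify())  # True
    log.entries[0]["actor"] = "mallory"  # simulate tampering with an earlier entry
    print(log.verify())  # False
```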

Example: A retail AI application should have an incident response plan that includes steps for data breach notification, root cause analysis, and mitigation to minimize the impact of any security incidents.

Developing secure AI applications requires a comprehensive approach that integrates security at every stage of the AI application development lifecycle. By following these best practices, from initial design to deployment and beyond, developers can ensure their AI applications are robust, compliant, and trustworthy.

Staying informed and proactive in your security measures is essential for navigating the complexities of secure AI application development. Contact us to find out how our GenAI security and enablement solutions can help your organization achieve its AI ambitions.
