Deploying generative AI (GenAI) models, particularly large language models (LLMs), across the enterprise with no specialist safeguards or security protocols in place is a high-risk, high-reward proposition for any organization. Exactly how your organization takes this big step into the GenAI landscape requires thoughtful planning.

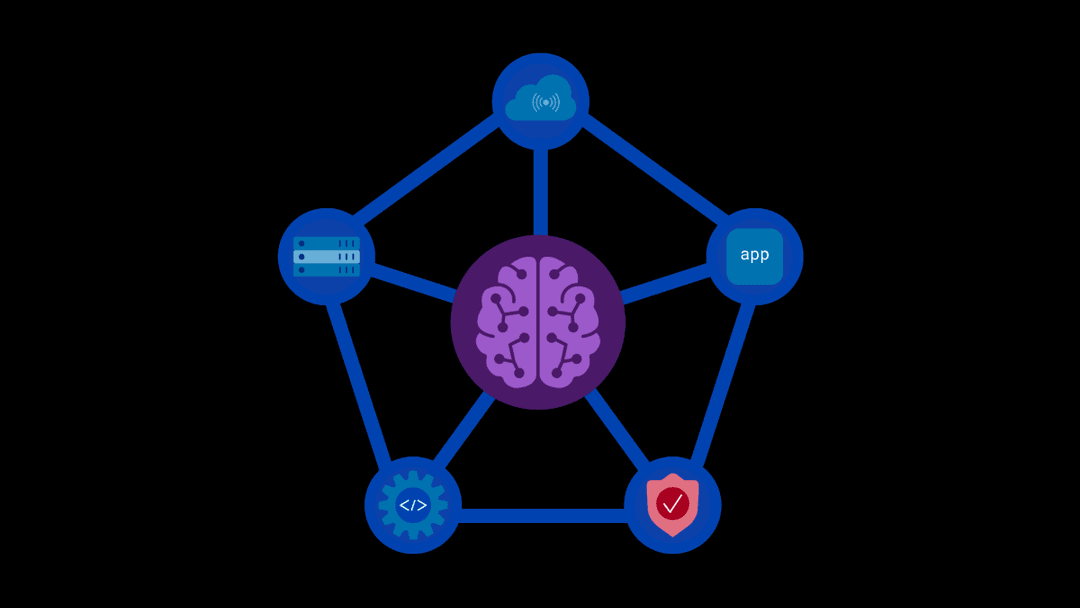

Perhaps it would be better organizationally to gain access to AI models through a provider, following the Software as a Service (SaaS) framework, to avoid any configuration or installation issues. Or it might work better to deploy the model on your organization’s private cloud or on the physical premises and enable your organization to control API configuration and management.

This series of blogs addresses the How? question: How should your organization deploy LLMs across the enterprise to achieve maximum return on investment? Each blog will provide information about the benefits and drawbacks of one common deployment framework, enabling you to consider it in light of your company’s organizational and business structure and specific business needs.

Defining the Cloud and APIs

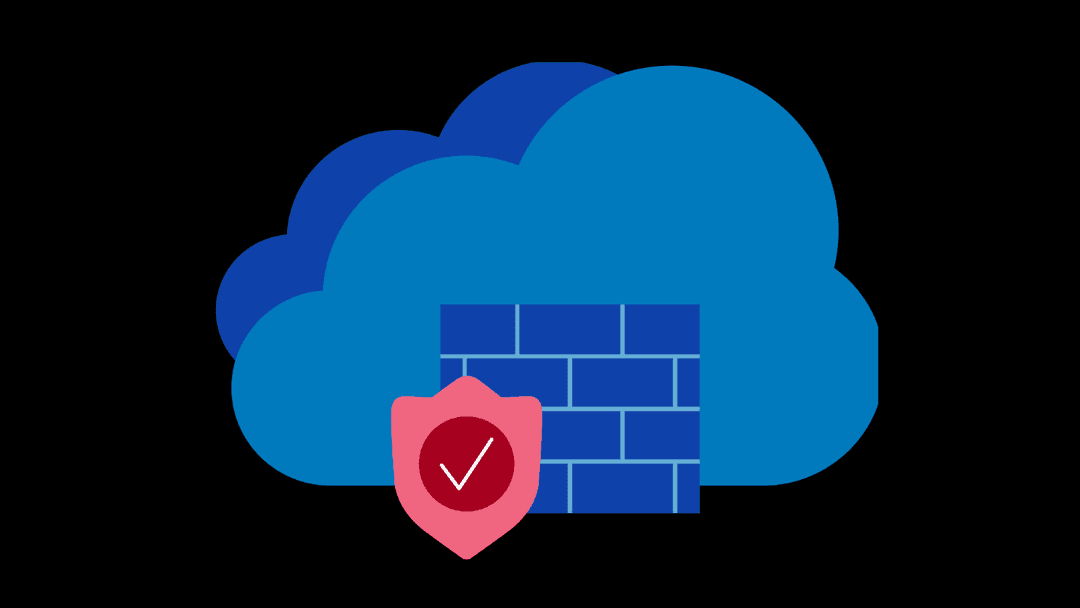

As a term, ‘the cloud’ is both commonly used and commonly misunderstood, so we want to be clear about what we mean when we discuss it. The cloud is a vast collection of physical servers located in myriad data centers around the globe. These remote servers handle multiple business-critical functions, such as running applications; storing or managing data; delivering content, such as social media; or providing services, such as streaming services or specialized software. Although each has unique functionality, they are networked and operate as components of a single, interdependent digital ecosystem that allows users—individuals and organizations—to access files and data from any device with an Internet connection.

Meanwhile, an application programming interface (API) is, in essence, a connection between two pieces of software, often running on separate devices, that enables them to send information back and forth. An API comprises definitions that identify what will be sent (e.g., the request from the client device and the response from the server device) and protocols for how that information is to be sent (e.g., the URL/endpoint of the receiving device and the syntax that both the request and the response must use).

This enables end users to, for instance, log in to applications to purchase items on a website, or schedule a rideshare, or, in reference to an LLM, to issue a query and receive a reply, without having to understand how or why the system works. APIs can be monitored and secured, so data on the client’s device is never completely exposed to the server device.
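As a rough illustration of the request/response exchange described above, the following Python sketch packages a prompt as an HTTP request and reads the reply out of a JSON response. The endpoint URL, the model name, and the `prompt` and `reply` field names are all hypothetical; a real provider defines its own, so treat this as a sketch of the pattern rather than any vendor's actual API.

```python
import json
import urllib.request

# Hypothetical endpoint, used only for illustration.
ENDPOINT = "https://llm.example.com/v1/generate"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Package a prompt as an HTTP POST request in an assumed JSON format."""
    body = json.dumps({"model": "example-model", "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the API key restricts who may call
        },
        method="POST",
    )


def parse_response(raw: bytes) -> str:
    """Extract the reply text from the server's (assumed) JSON response."""
    return json.loads(raw.decode("utf-8"))["reply"]


request = build_request("Summarize our Q3 sales notes.", api_key="demo-key")

# A real client would now send the request, e.g. urllib.request.urlopen(request).
# A canned response stands in for the server so the sketch runs offline.
canned = b'{"reply": "Sales rose in Q3."}'

print(request.get_method(), request.full_url)
print(parse_response(canned))
```

The caller only needs to know the endpoint and the agreed request and response formats; how the model produces the reply stays hidden behind the API.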

The Difference Between SaaS and Cloud-Based Services

The first blog in this series addressed the benefits and drawbacks of deploying LLMs across the enterprise using a Software-as-a-Service, or SaaS, framework. A SaaS-based deployment model is similar to a cloud-based deployment model in many ways, in that both use the cloud to access needed software rather than hosting applications on their own systems. However, the key distinction that sets them apart can be distilled to a very simple concept. By default, SaaS means your organization is paying for access to and use of a third-party software application that is hosted “in the cloud,” whereas cloud computing means that you are paying a third party to host and provide access to your organization’s data, which is stored “in the cloud.”

Benefits

Quick Deployment: Cloud services that host an LLM for your organization can be set up quickly, allowing you to start using the model with little delay.

Scalability: An LLM deployed in the cloud can be configured to auto-scale to meet demand, with no manual intervention required.

Reduced Operational Overhead: Setup, security, maintenance, updates, and system optimization are typically taken care of by the cloud provider, keeping your IT team and others available to focus on other tasks.

Accessibility: Cloud-based LLMs can be accessed from any device in any place that offers internet access, which provides flexibility for personnel who are traveling or working remotely.

Cost Efficiency: Cloud service providers typically offer a use-based pricing model, charging only for the resources you utilize, which can be important if your organizational demand fluctuates. Your organization has no need to purchase or maintain extensive compute resources or data storage capacity of its own.

Pre-Trained and Fine-Tuned Models: AI models are continually being upgraded and trained on bigger, better datasets, and can be fine-tuned for use with custom datasets.

Inherent Redundancy: The risk of data loss due to outages is significantly reduced by the layers of redundancy built into cloud environments.
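To make the Scalability and Cost Efficiency points above more concrete, here is a minimal Python sketch of how an auto-scaling rule and use-based billing might interact. The demand figures, per-replica capacity, replica limits, and hourly price are invented for illustration, and the scaling rule is a simplified stand-in for whatever policy a given cloud provider actually applies.

```python
import math


def desired_replicas(requests_per_sec: float, capacity_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Smallest replica count that covers demand, clamped to the set limits."""
    needed = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical figures: demand per hour (requests/sec), the load one model
# replica can serve, and an hourly price per replica.
hourly_demand = [5, 5, 40, 120, 90, 10]
CAPACITY = 25.0
PRICE_PER_REPLICA_HOUR = 3.00

# Auto-scaling: the replica count follows demand hour by hour.
replicas = [desired_replicas(d, CAPACITY) for d in hourly_demand]

# Use-based pricing: pay only for the replica-hours actually consumed.
usage_based_cost = sum(replicas) * PRICE_PER_REPLICA_HOUR

# Alternative: provision for the peak hour and keep that capacity all day.
fixed_peak_cost = max(replicas) * len(replicas) * PRICE_PER_REPLICA_HOUR

print(replicas)                           # [1, 1, 2, 5, 4, 1]
print(usage_based_cost, fixed_peak_cost)  # 42.0 90.0
```

Under these assumed numbers, paying per use costs less than half of what permanently provisioning for the peak would. The gap narrows as demand becomes steadier, which is why fluctuating demand is the case where use-based pricing matters most.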

Drawbacks

Latency: The definition of “real time” will depend on the location, speed, and compute power of the servers operated by your cloud service provider. While slightly delayed response times might not be noticed in some environments, they can compromise mission-critical activities in others.

Ongoing Costs: Cloud computing is not a low-budget endeavor and, as models keep getting bigger and faster, the costs for compute and storage resources rise accordingly.

Vendor Lock-In: Relying on a single provider comes with risks. The provider’s physical and cyber security posture and protocols are important considerations, as are issues such as changes in terms of service or other policies, price increases, and discontinued services.
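One way to judge whether the Latency drawback above matters for a given workload is to time a series of calls and compare the results against a response-time budget. The Python sketch below does this with a stand-in function in place of a real LLM request; the 5 ms simulated delay and the 200 ms budget are arbitrary assumptions, not figures from any provider.

```python
import statistics
import time


def measure_latency_ms(call, runs: int = 20) -> list[float]:
    """Time repeated invocations of `call`, returning durations in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def summarize(durations_ms: list[float]) -> dict[str, float]:
    """Report the median and the 95th-percentile latency."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    cuts = statistics.quantiles(durations_ms, n=20)
    return {"median_ms": statistics.median(durations_ms), "p95_ms": cuts[18]}


def fake_llm_call() -> None:
    """Stand-in for a network round trip to a cloud-hosted model."""
    time.sleep(0.005)


LATENCY_BUDGET_MS = 200.0  # assumed budget for an interactive application

summary = summarize(measure_latency_ms(fake_llm_call))
print(summary)
print("within budget:", summary["p95_ms"] <= LATENCY_BUDGET_MS)
```

Looking at the 95th percentile rather than the average is a deliberate choice: occasional slow responses are what tend to disrupt time-sensitive activities, and an average can hide them.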

Conclusion

Whether the benefits of deploying an LLM across your enterprise using a cloud-based service outweigh the drawbacks will be determined by serious consideration of your organization’s financial and technical resources, business needs, and security or other operational constraints. Some of our earlier blogs have focused on establishing internal and external systematic safeguards, AI governance programs, and other ways to safely deploy these transformative tools in your organization, and may be of help to you as you traverse this new path through the technosphere.

About the Author

Related Blog Posts

The hidden cost of unmanaged AI infrastructure

AI platforms don’t lose value because of models. They lose value because of instability. See how intelligent traffic management improves token throughput while protecting expensive GPU infrastructure.

AI security through the analyst lens: insights from Gartner®, Forrester, and KuppingerCole

Enterprises are discovering that securing AI requires purpose-built solutions.

F5 secures today’s modern and AI applications

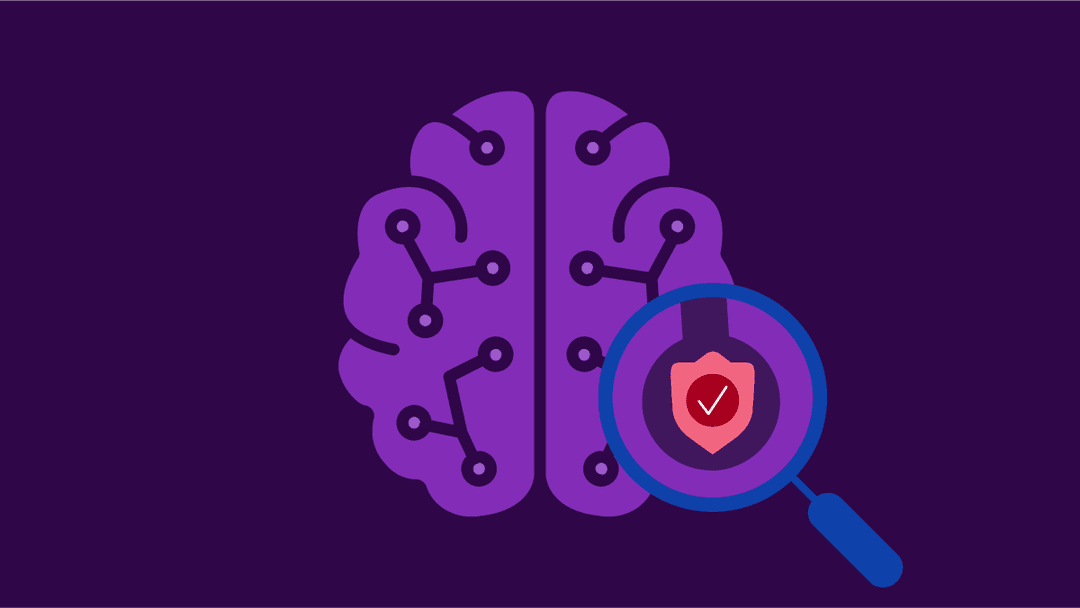

The F5 Application Delivery and Security Platform (ADSP) combines security with flexibility to deliver and protect any app and API and now any AI model or agent anywhere. F5 ADSP provides robust WAAP protection to defend against application-level threats, while F5 AI Guardrails secures AI interactions by enforcing controls against model and agent specific risks.

Govern your AI present and anticipate your AI future

Learn from our field CISO, Chuck Herrin, how to prepare for the new challenge of securing AI models and agents.

F5 recognized as one of the Emerging Visionaries in the Emerging Market Quadrant of the 2025 Gartner® Innovation Guide for Generative AI Engineering

We’re excited to share that F5 has been recognized in 2025 Gartner Emerging Market Quadrant(eMQ) for Generative AI Engineering.

Self-Hosting vs. Models-as-a-Service: The Runtime Security Tradeoff

As GenAI systems continue to move from experimental pilots to enterprise-wide deployments, one architectural choice carries significant weight: how will your organization deploy runtime-based capabilities?