Since the dawn of social media over two decades ago, people everywhere have grown steadily more comfortable sharing information, sometimes considerably beyond what’s necessary. Our collective journey from posting too much information (TMI) on MySpace and Facebook, to sharing Instagram and Pinterest photos of every minute of our lives, to having human-like, AI-powered conversations with large language models (LLMs) has been swift. Unfortunately, within the context of generative AI (GenAI), oversharing isn’t just a social faux pas; it’s a significant security risk, particularly for organizations.

GenAI models, such as LLMs, offer remarkable capabilities for generating all sorts of content, including accurate and relevant content, but they are the most porous of information sieves and pose a substantial risk when fed detailed, private, or sensitive information. The ease of interacting with LLMs can lull users into a false sense of security, leading to unintentional oversharing of critical data. In fact, it has become so common that it no longer raises eyebrows when reports surface of executives sharing strategic company documents, physicians entering patient details, or engineers uploading proprietary source code into a public model such as ChatGPT.

These are just a few examples of how sensitive information can be compromised unintentionally because the person sending it didn’t realize that it can be retained by the provider and, depending on the service’s settings, become part of the model’s knowledge base. Once that happens, there is no getting it back and there are no do-overs. It’s out there. Forever.

The key to leveraging the power of LLMs without compromising security lies in the art and science of creating prompts. A prompt must be detailed enough to elicit the desired response, yet discreet enough to protect sensitive information. Striking that balance between specificity and discretion takes a thoughtful approach. Some tips to impress upon users of AI models and applications are:

- Be Concise and Clear: Avoid including unnecessary details in prompts that could reveal confidential information. These could include personal or project names, dates, destinations, and other particulars.

- Use Hypothetical Scenarios: When seeking AI assistance for sensitive tasks, frame requests in hypothetical terms. Do not use real names of companies, people, projects, or places.

- Maintain Awareness: Reinforce the AI security training and education you provide to employees about the risks of oversharing with frequent reminders and helpful guidelines on best practices for using AI tools.

- Implement Oversight Mechanisms: Monitor the usage of AI tools to detect and prevent data leaks and to identify potential internal threats.
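To make the first two tips concrete, here is a minimal sketch of how a prompt could be genericized before it is sent to a model. It is an illustration under stated assumptions, not a complete safeguard: the function name `genericize`, the placeholder format, and the two regular expressions are all hypothetical, and a real deployment would need far broader coverage.

```python
import re

# Illustrative patterns only (an assumption, not an exhaustive list).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),  # ISO-style dates only
}


def genericize(prompt, sensitive_terms):
    """Swap pattern matches and known sensitive terms for neutral placeholders.

    Returns the rewritten prompt and a placeholder-to-term mapping that stays
    on the user's side, so the model's answer can be mapped back locally.
    """
    # Mask structured data first so terms embedded in it (for example a
    # company name inside an email address) are removed along with it.
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)

    mapping = {}
    # Replace longer terms first so a full project name is handled before
    # any shorter term that happens to be contained in it.
    for term in sorted(sensitive_terms, key=len, reverse=True):
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        if pattern.search(prompt):
            placeholder = f"[NAME_{len(mapping) + 1}]"
            prompt = pattern.sub(placeholder, prompt)
            mapping[placeholder] = term
    return prompt, mapping


if __name__ == "__main__":
    text = ("Draft a note to jane.doe@acme.com about Project Falcon "
            "launching 2025-03-01 at Acme.")
    safe_prompt, names = genericize(text, ["Acme", "Project Falcon"])
    print(safe_prompt)
    print(names)
```

The mapping is deliberately kept out of the prompt: the model only ever sees the hypothetical framing, while the user can restore the real names in the response afterward.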

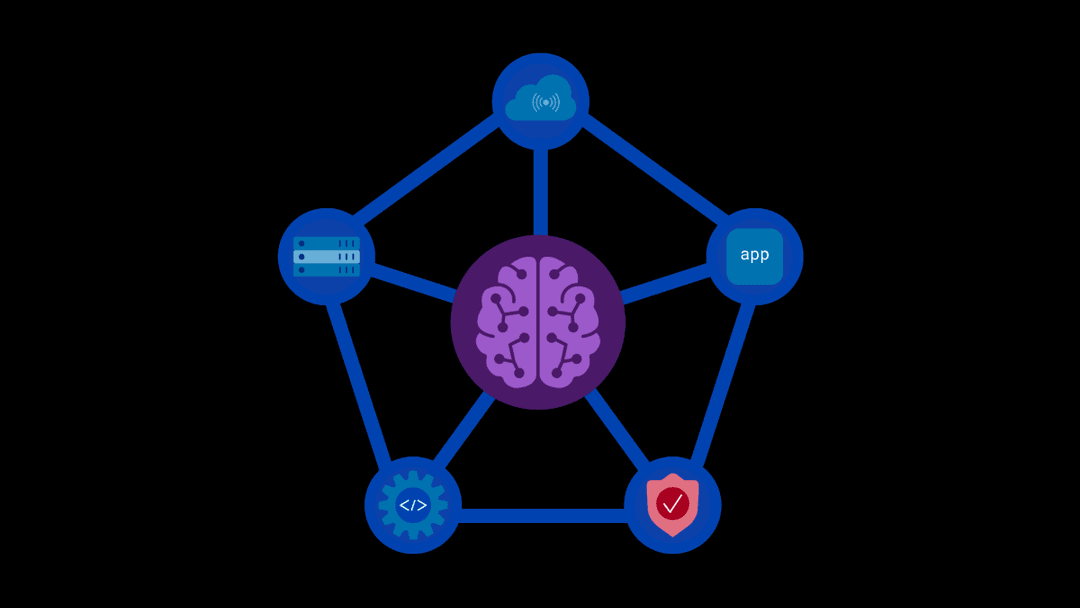

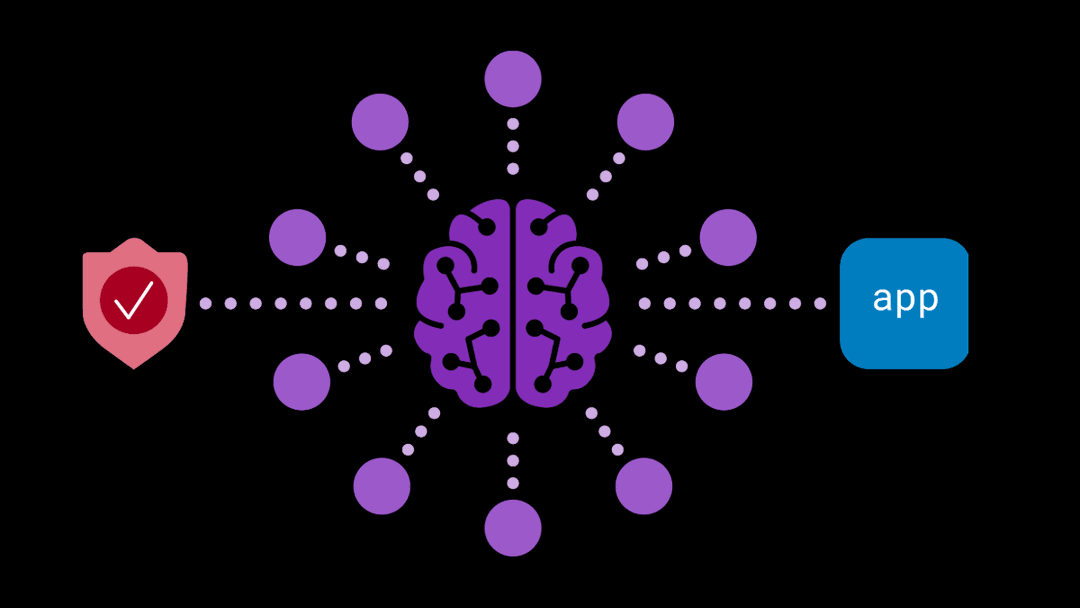

However, even the most diligent employee writing a carefully worded prompt can still cause security issues. That is why an automated trust layer with customizable content scanners can be the key to watertight data loss prevention (DLP). Our security and enablement platform for GenAI deployments reviews outgoing and incoming content to ensure confidential personal or company data doesn’t leave the organization and malicious, suspicious, or otherwise unacceptable content doesn’t get in. Other scanners review prompts for content that, while not detrimental to the company, is not aligned with company values or doesn’t conform to business use. All interactions executed on our model-agnostic platform are recorded for administrator review, auditability, and accountability purposes.
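As a rough illustration of the general idea behind content scanning with an audit trail, consider the sketch below. It is not how any particular product is implemented: the `TrustLayer` class, the scanner names, and the patterns are hypothetical, and production scanners typically go well beyond regular expressions.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Finding:
    scanner: str  # name of the scanner that fired
    match: str    # the text that triggered it


# Hypothetical scanners: each is a (name, compiled pattern) pair.
EXAMPLE_SCANNERS = [
    ("us_ssn", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("api_key", re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")),
    ("internal_marker", re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE)),
]


@dataclass
class TrustLayer:
    scanners: list
    audit_log: list = field(default_factory=list)

    def review(self, user, text):
        """Scan text, record the decision, and return (allowed, findings)."""
        findings = [
            Finding(name, m.group(0))
            for name, pattern in self.scanners
            for m in pattern.finditer(text)
        ]
        allowed = not findings
        # Log which scanners fired, but not the matched text itself, so the
        # audit trail does not become a second copy of the sensitive data.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "allowed": allowed,
            "scanners_triggered": sorted({f.scanner for f in findings}),
        })
        return allowed, findings


if __name__ == "__main__":
    layer = TrustLayer(EXAMPLE_SCANNERS)
    for who, prompt in [
        ("alice", "Summarize the benefits of unit testing."),
        ("bob", "My SSN is 123-45-6789, please fill in the form."),
    ]:
        ok, found = layer.review(who, prompt)
        print(who, "allowed" if ok else "blocked", [f.scanner for f in found])
```

The same `review` call could be applied to model responses before they reach the user, with a different scanner list for inbound content.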

As LLMs become more ingrained in our daily operations, the importance of managing how we interact with them cannot be overstated. Oversharing, whether intentional or accidental, can have far-reaching, deeply negative consequences. By adopting prudent practices in employee engagement with these powerful tools, your organization can reap the benefits of GenAI while safeguarding personal and professional information.
