NGINX and NGINX Plus provide a number of features that enable them to handle most SSL/TLS requirements. They use OpenSSL and the power of standard processor chips to provide cost‑effective SSL/TLS performance. As the power of standard processor chips continues to increase and as chip vendors add cryptographic acceleration support, the cost advantage of using standard processor chips over specialized SSL/TLS chips also continues to widen.

There are three major use cases for NGINX and NGINX Plus with SSL/TLS.

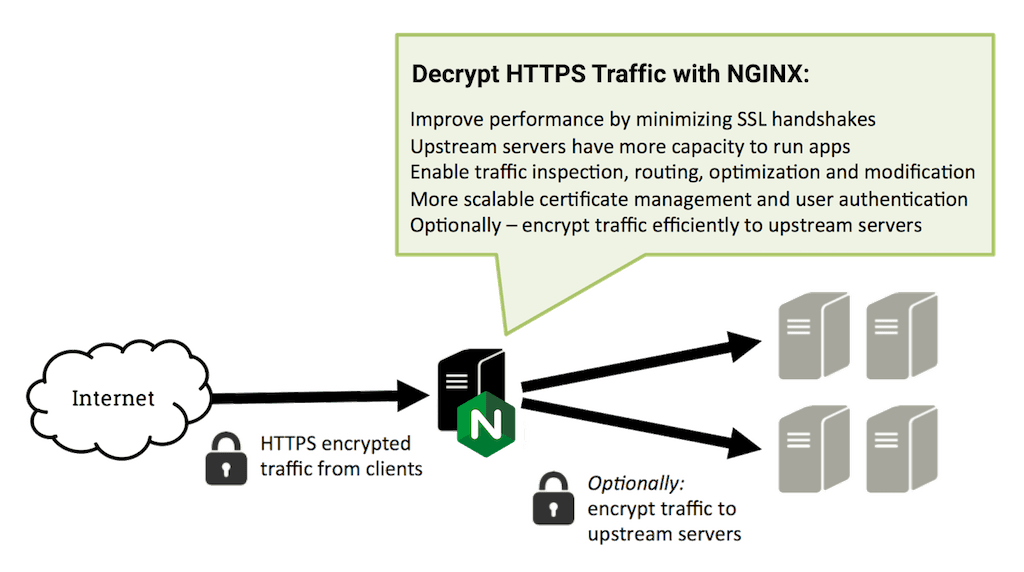

SSL/TLS Offloading

When NGINX is used as a proxy, it can offload SSL/TLS decryption from the backend servers. There are a number of advantages to doing decryption at the proxy:

- Improved performance – The biggest performance hit when doing SSL decryption is the initial handshake. To improve performance, the server doing the decryption caches SSL session IDs and manages TLS session tickets. If this is done at the proxy, all requests from the same client can use the cached values. If it’s done on the backend servers, then each time the client’s requests go to a different server the client has to perform a new full handshake. Sharing TLS session tickets across the backend servers can help mitigate this issue, but tickets are not supported by all clients and can be difficult to configure and manage.

- Better utilization of the backend servers – SSL/TLS processing is very CPU intensive, and is becoming more intensive as key sizes increase. Removing this work from the backend servers allows them to focus on what they are most efficient at: delivering content.

- Intelligent routing – By decrypting the traffic, the proxy has access to the request content, such as headers, URI, and so on, and can use this data to route requests.

- Certificate management – Certificates only need to be purchased and installed on the proxy servers and not all backend servers. This saves both time and money.

- Security patches – If vulnerabilities arise in the SSL/TLS stack, the appropriate patches need to be applied only to the proxy servers.
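
As a rough illustration of the session caching and intelligent routing points above, here is a minimal sketch of an offloading configuration. The upstream names (`api_backends`, `web_backends`), the server addresses, the certificate paths, and the cache size and timeout values are all placeholders chosen for the example.

```nginx
# All directives below belong in the http context of nginx.conf.

# Session reuse: one cache shared by every worker process, so a returning
# client can skip the full handshake regardless of which worker serves it.
ssl_session_cache   shared:SSL:10m;   # illustrative size
ssl_session_timeout 10m;              # illustrative lifetime
ssl_session_tickets on;               # the default, shown for clarity

# Hypothetical backend groups that receive decrypted (plain HTTP) traffic.
upstream api_backends {
    server 192.0.2.10:80;
}
upstream web_backends {
    server 192.0.2.20:80;
}

server {
    listen              443 ssl;
    server_name         www.example.com;
    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;   # placeholder path
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;   # placeholder path

    # Once the request is decrypted, the URI is visible and can drive routing.
    location /api/ {
        proxy_pass http://api_backends;
    }
    location / {
        proxy_pass http://web_backends;
    }
}
```

With this layout the certificate and key exist only on the proxy, which is what makes the certificate management and patching advantages possible.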

For more details, see NGINX SSL Termination in the NGINX Plus Admin Guide.

SSL/TLS Encryption to the Origin Servers

There are times you might need NGINX to encrypt traffic that it sends to backend servers. These requests can arrive at the NGINX server as plain text or as encrypted traffic that NGINX must decrypt in order to make a routing decision. Encryption is enabled simply by configuring NGINX to proxy to an “https” URL, so that it automatically encrypts traffic that is not already encrypted. Using a pool of keepalive connections to the backend servers minimizes the number of SSL/TLS handshakes and thus maximizes SSL/TLS performance.
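
A minimal sketch of a keepalive pool for encrypted upstream connections might look like the following. The group name `backends`, the addresses, and the pool size of 16 are assumptions made for the example.

```nginx
upstream backends {
    server 192.0.2.10:443;   # placeholder addresses
    server 192.0.2.11:443;
    keepalive 16;            # idle connections cached per worker process (illustrative value)
}

server {
    listen      80;
    server_name www.example.com;

    location / {
        proxy_pass https://backends;      # "https" scheme makes NGINX encrypt the upstream traffic

        # Both settings are needed for upstream keepalive to take effect:
        proxy_http_version 1.1;           # keepalive requires HTTP/1.1 to the upstream
        proxy_set_header Connection "";   # clear the default "Connection: close" header
    }
}
```

Because connections in the pool stay open between requests, the TLS handshake with each backend is paid once per connection instead of once per request.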

End-to-End Encryption

Because NGINX can do both decryption and encryption, you can achieve end‑to‑end encryption of all requests with NGINX still making Layer 7 routing decisions. In this case the clients communicate with NGINX over HTTPS, and it decrypts the requests and then re‑encrypts them before sending them to the backend servers. This can be desirable when the proxy server is not collocated in a data center with the backend servers. As more and more servers are being moved to the cloud, it is becoming more necessary to use HTTPS between a proxy and backend servers.
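
The following sketch shows one way end‑to‑end encryption could be configured, with NGINX also verifying the backend certificate. The file paths, the upstream addresses, and the name `backend.example.com` are hypothetical; the verification directives are optional and shown as an assumption about what a deployment across data centers would want.

```nginx
upstream backends {
    server 192.0.2.10:443;   # placeholder addresses
    server 192.0.2.11:443;
}

server {
    listen              443 ssl;                                  # decrypt client traffic
    server_name         www.example.com;
    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;       # placeholder path
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;       # placeholder path

    location / {
        proxy_pass https://backends;                              # re-encrypt toward the backends

        # Optional: verify that the backend presents a trusted certificate.
        proxy_ssl_verify              on;
        proxy_ssl_trusted_certificate /etc/nginx/ssl/backend-ca.crt;   # placeholder CA bundle
        proxy_ssl_name                backend.example.com;        # name expected in the backend certificate
        proxy_ssl_server_name         on;                         # send that name via SNI
    }
}
```

Layer 7 routing still works here because the request is in plain text inside NGINX between the decryption and re‑encryption steps.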

Client Certificates

NGINX can handle SSL/TLS client certificates and can be configured to make them optional or required. Client certificates are a way of restricting access to your systems to pre‑approved clients without requiring a password. You can withdraw access by adding a certificate to a certificate revocation list (CRL), which NGINX checks to determine whether a client certificate is still valid.
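
A minimal sketch of client certificate checking follows. The file paths, the upstream group, and the forwarded header names (`X-Client-Verify`, `X-Client-DN`) are hypothetical choices for the example.

```nginx
upstream backends {
    server 192.0.2.10:80;    # placeholder address
}

server {
    listen              443 ssl;
    server_name         www.example.com;
    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;   # placeholder path
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;   # placeholder path

    ssl_client_certificate /etc/nginx/ssl/client-ca.crt;      # CA that issued the client certificates
    ssl_crl                /etc/nginx/ssl/client-ca.crl;      # revoked certificates, PEM format
    ssl_verify_client      on;                                # use "optional" to request but not require

    location / {
        # Pass the verification result and subject DN to the application.
        proxy_set_header X-Client-Verify $ssl_client_verify;
        proxy_set_header X-Client-DN     $ssl_client_s_dn;
        proxy_pass http://backends;
    }
}
```

With `ssl_verify_client on`, a request without a valid certificate is rejected before it reaches the backend; with `optional`, the application can decide based on the forwarded verification result.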

Additional Security Features

There are a number of other features that help support these use cases, including (but not limited to) the following:

- Multiple certificates – A single NGINX instance can support many certificates for different domains and can scale up to support hundreds of thousands of certificates. It is a common use case to have an NGINX instance serving many IP addresses and domains with each domain requiring its own certificate.

- OCSP stapling – When this is enabled, NGINX includes a time‑stamped OCSP response signed by the certificate authority that the client can use to verify the server’s certificate, avoiding the performance penalty from contacting the OCSP server directly.

- SSL/TLS ciphers – You can specify which ciphers are enabled.

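- SSL/TLS protocols – You can specify which protocols are enabled, including SSLv2, SSLv3, TLSv1, TLSv1.1, and TLSv1.2. SSLv2 and SSLv3 are known to be insecure and should normally be left disabled; newer NGINX releases built against a recent OpenSSL also accept TLSv1.3.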

- Chained certificates – NGINX supports certificate chains, used when the website’s certificate is not signed directly by the root certificate of a CA (Certificate Authority), but rather by a series of intermediate certificates. The web server presents a ‘certificate chain’ containing the intermediate certificates, so that the web client can verify the chain of trust that links the website certificate to a trusted root certificate.

- HTTPS server optimizations – NGINX can be tuned to maximize its SSL/TLS performance by configuring the number of worker processes, using keepalive connections, and using an SSL/TLS session cache.
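
Several of the features in this list can be combined in one configuration. The sketch below is illustrative only: the second domain, the file paths, the resolver address, and the protocol and cipher choices are assumptions, not recommendations from this article.

```nginx
# Multiple certificates: one server block per domain, each with its own files.
server {
    listen      443 ssl;
    server_name www.example.com;

    # Chained certificate: the server certificate first, then the intermediates.
    ssl_certificate     /etc/nginx/ssl/www.example.com.chained.crt;   # placeholder path
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;           # placeholder path

    # Protocols and ciphers.
    ssl_protocols             TLSv1.2;          # add TLSv1.3 on builds that support it
    ssl_ciphers               HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    # OCSP stapling.
    ssl_stapling            on;
    ssl_stapling_verify     on;
    ssl_trusted_certificate /etc/nginx/ssl/ca-chain.crt;   # placeholder: root and intermediate CAs
    resolver                192.0.2.53;                    # placeholder DNS server for the OCSP responder lookup
}

server {
    listen      443 ssl;
    server_name www.example.org;                           # hypothetical second domain

    ssl_certificate     /etc/nginx/ssl/www.example.org.chained.crt;   # placeholder path
    ssl_certificate_key /etc/nginx/ssl/www.example.org.key;           # placeholder path
}
```

Both server blocks can share one IP address and port because NGINX selects the certificate from the hostname the client sends in the TLS handshake (SNI).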

For more details, check out these resources:

- NGINX SSL Termination in the NGINX Plus Admin Guide

- Configuring HTTPS Servers at nginx.org

- ngx_http_ssl_module reference documentation at nginx.org

Examples

Here are a few examples of NGINX’s security features. These examples assume a basic understanding of NGINX configuration.

The following configuration handles HTTP traffic for www.example.com and proxies it to an upstream group:
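
A minimal sketch of such a configuration is shown here. The group name `backends` and the two server addresses are placeholders.

```nginx
upstream backends {
    server 192.0.2.10:80;    # placeholder backend addresses
    server 192.0.2.11:80;
}

server {
    listen      80;
    server_name www.example.com;

    location / {
        proxy_pass http://backends;   # forward requests to the upstream group over HTTP
    }
}
```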

Now add HTTPS support, so that NGINX decrypts the traffic using the certificate and private key and communicates with the backend servers over HTTP:
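
A sketch of the HTTPS version, assuming the same placeholder upstream group and hypothetical certificate and key paths:

```nginx
upstream backends {
    server 192.0.2.10:80;    # placeholder backend addresses
    server 192.0.2.11:80;
}

server {
    listen      80;
    listen      443 ssl;     # the "ssl" parameter tells NGINX to decrypt traffic on this port
    server_name www.example.com;

    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;   # certificate file (placeholder path)
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;   # private key file (placeholder path)

    location / {
        proxy_pass http://backends;   # backends still receive plain HTTP
    }
}
```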

Or if you instead receive traffic over HTTP and send it to the backend servers over HTTPS:
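
A sketch of that variant, again with placeholder addresses. The backends are assumed to listen for HTTPS on port 443.

```nginx
upstream backends {
    server 192.0.2.10:443;   # placeholder backend addresses, HTTPS port
    server 192.0.2.11:443;
}

server {
    listen      80;
    server_name www.example.com;

    location / {
        proxy_pass https://backends;   # the "https" scheme tells NGINX to encrypt the traffic
    }
}
```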

To try NGINX Plus, start your free 30-day trial today or contact us to discuss your use cases.
