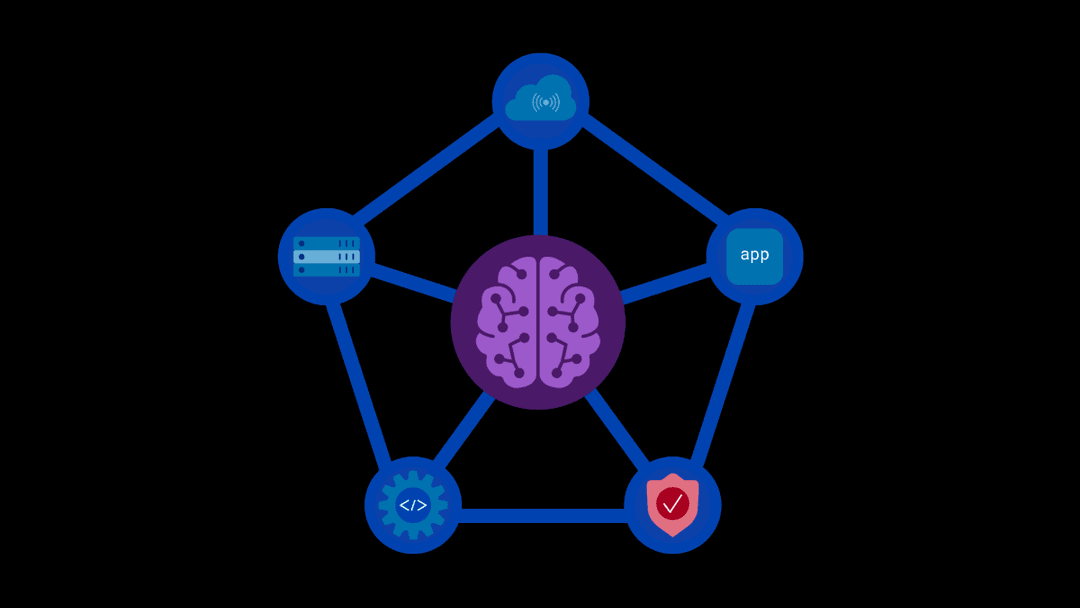

Agentic AI today is roughly where generative AI was a year ago. Most organizations with agentic projects are still in the pilot phase, but some early workloads are already beginning to interact with production systems. Teams are moving beyond simple model queries and are experimenting with orchestration agents, specialized worker agents, and tool-using workflows that can coordinate actions across applications and services.

As these agentic systems evolve, so do the risks. In traditional LLM interactions, failures often begin and end within a single prompt-response exchange between a human and the model. In agentic systems, failures can propagate across multi-step plans, tool usage, context management and reuse, and interactions between agents and existing applications.

The new OWASP Top 10 for Agentic Applications provides a structured view of where these risks emerge. It defines ten categories of failure that occur when models and agents are given goals, tools, context, and the ability to act across systems. The list builds on the earlier OWASP Top 10 for Large Language Model Applications and addresses agentic AI threats related to autonomy, tool use, context management, supply chains, and multi-agent interactions.

With this new OWASP Top 10, security leaders and compliance teams gain a shared language to discuss agentic AI risk, align security efforts, and determine where to focus first.

Why this matters for CISOs

The OWASP Agentic Top 10 highlights the failure modes shaping secure AI adoption. CISOs now need to apply this guidance to systems already moving into production.

F5 supports this through:

- F5 AI Guardrails, enforcing security policies at runtime to govern AI behavior as agentic systems move into production.

- F5 AI Red Team, evaluating GenAI systems to assess real-world resilience across model behavior, applications, and agentic execution paths.

- Comprehensive AI Security Index (CASI) and Agentic Resistance Scoring (ARS), delivered through F5 Labs, providing standardized benchmarks to measure model and system-level resilience and support informed security decisions.

As agentic AI accelerates, the organizations that benefit most will be those that embed resilience from the outset and treat security as foundational to how these systems are designed and operated.

OWASP Agentic Top 10 — How these risks manifest

ASI01 – Agent goal hijack

When an agent’s objectives are altered through prompt injection, poisoned context, or plan deviation.

- F5 AI Red Team tests indirect prompt injection scenarios drawn from its attack library. In these scenarios, attacker-controlled or untrusted content such as documents, web pages, emails, or tool outputs carries malicious instructions, and the tests assess how easily agent objectives can be shifted.

- F5 AI Guardrails enforces input validation and constrains unsafe or non-compliant reasoning paths during inference, reducing the risk of unintended downstream behavior.

- ARS measures resilience against sustained, agent-driven, multi-step attack sequences.

ASI02 – Tool misuse and exploitation

When an agent misuses tools or attackers leverage tool interfaces as pivot points.

- F5 AI Guardrails validates tool-related requests at runtime and constrains reasoning patterns that could lead to unsafe tool interactions.

- CASI and ARS reflect susceptibility to misuse across representative execution patterns.

ASI03 – Identity and privilege abuse

When agents inherit or misuse credentials, tokens, or elevated access.

- F5 AI Guardrails can detect secrets, credentials, or tokens within reasoning context and block or log them before a tool is invoked with implicit or explicit access.
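
To make the idea of catching credentials before a tool call more concrete, here is a minimal Python sketch. It is a generic illustration, not how F5 AI Guardrails is implemented: the pattern set, `find_secrets`, and `guard_tool_call` are hypothetical names chosen for this example, and a production detector would rely on a much broader rule set.

```python
import re

# Hypothetical patterns for illustration only; real detectors cover far more formats.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "bearer_token": re.compile(r"\bBearer\s+[A-Za-z0-9\-._~+/]{20,}=*"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def find_secrets(context: str) -> list[str]:
    """Return the names of all patterns that match somewhere in the context."""
    return [name for name, pattern in SECRET_PATTERNS.items() if pattern.search(context)]


def guard_tool_call(tool_name: str, context: str) -> bool:
    """Return True if the tool call may proceed, False if it should be blocked."""
    hits = find_secrets(context)
    if hits:
        # Log only the pattern names, never the matched secret itself.
        print(f"blocked call to {tool_name}: detected {', '.join(hits)}")
        return False
    return True
```

In this sketch the check runs on the agent's working context immediately before the tool is invoked, so a leaked key is stopped at the last point where the agent could still act on it.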

ASI04 – Agentic supply chain vulnerabilities

Risks arising from MCP servers, registries, remote prompt packs, and third-party tools that support agent workflows.

- F5 AI Guardrails detects and blocks hidden instructions or injected prompts embedded in data consumed by agents, including data from trusted or previously approved sources, at runtime during agent workflows.

- CASI and ARS measure risk at a system level, capturing how vulnerabilities propagate across models and execution patterns rather than treating each component in isolation.

ASI05 – Unexpected code execution

When agents generate, execute, or modify code, shells, or configurations in unsafe ways.

- F5 AI Guardrails applies fine-grained policies to code-related outputs, enforcing allow lists and blocking high-risk patterns.

- CASI and ARS capture these behaviors across representative execution paths.
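
As a rough illustration of allow lists combined with high-risk pattern blocking, the Python sketch below checks an agent-generated shell command before it runs. The allowed commands, the marker list, and `is_command_allowed` are assumptions made for this example and do not reflect any F5 policy format.

```python
import shlex

# Hypothetical allow list: the only executables the agent may run.
ALLOWED_COMMANDS = {"ls", "cat", "grep"}

# Hypothetical high-risk markers: chaining, redirection, and command substitution.
HIGH_RISK_MARKERS = (";", "|", "&", ">", "<", "`", "$(")


def is_command_allowed(command_line: str) -> bool:
    """Return True only if the command is allow-listed and has no high-risk markers."""
    try:
        tokens = shlex.split(command_line)
    except ValueError:
        # Unbalanced quotes or similar parse errors are rejected outright.
        return False
    if not tokens or tokens[0] not in ALLOWED_COMMANDS:
        return False
    return not any(marker in token for token in tokens for marker in HIGH_RISK_MARKERS)
```

The check is deliberately conservative: a harmless argument that happens to contain `|` or `;` is rejected too. For code the agent wrote itself, failing closed is usually the safer default.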

ASI06 – Context management and retrieval manipulation

When persistent or retrieved context is poisoned, manipulated, or allowed to drift.

- F5 AI Red Team evaluates scenarios where agents consume and reuse untrusted or misleading context supplied through normal runtime inputs, assessing how context degradation affects downstream reasoning and actions.

- F5 AI Guardrails inspects retrieved context for policy violations and detects prompt-injection payloads before they influence model behavior.

- CASI and ARS measure resilience to runtime context poisoning and injection-based propagation.

ASI07 – Insecure inter-agent communication

When agents exchange messages without channel protection, authentication, or integrity checks.

- F5 AI Guardrails can detect and flag agent delegation intent at runtime, including when an agent or agent manager evaluates which agent should be invoked to perform a task, and apply policy controls to constrain high-risk delegation reasoning.

- CASI and ARS provide visibility into how multi-step, multi-agent execution patterns increase systemic risk over time.
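
For readers who want to see what an integrity check on inter-agent messages can look like, here is a minimal Python sketch using an HMAC over a canonical JSON body. It illustrates the risk category itself rather than any F5 product behavior. The envelope layout, `sign_message`, and `verify_message` are hypothetical, and the sketch assumes both agents already share a secret key through some secure channel.

```python
import hashlib
import hmac
import json
from typing import Optional


def sign_message(payload: dict, key: bytes) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(envelope: dict, key: bytes) -> Optional[dict]:
    """Return the payload if the tag is valid, otherwise None."""
    expected = hmac.new(key, envelope["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, envelope["tag"]):
        return None
    return json.loads(envelope["body"])
```

A receiving agent that drops any envelope for which `verify_message` returns `None` will not act on messages altered in transit or sent by a party without the key. This sketch does not cover replay protection or confidentiality, which need additional measures such as nonces and transport encryption.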

ASI08 – Cascading failures

When multiple risks compound across steps, agents, or systems.

- F5 AI Guardrails limits blast radius through quotas, policy controls, and runtime enforcement.

- CASI measures resilience across diverse attack vectors, while ARS evaluates how far failures can propagate across sustained, multi-step, agentic execution.
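
One simple way to picture a quota that limits blast radius is a per-agent cap on tool calls. The Python sketch below is a generic illustration under that assumption; `ToolCallQuota` and its methods are hypothetical names and are not part of any F5 API.

```python
class ToolCallQuota:
    """Caps how many tool calls each agent may make within one task."""

    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.calls: dict[str, int] = {}

    def try_consume(self, agent_id: str) -> bool:
        """Record one call for the agent; return False once its budget is spent."""
        used = self.calls.get(agent_id, 0)
        if used >= self.max_calls:
            return False
        self.calls[agent_id] = used + 1
        return True
```

An orchestrator would call `try_consume` before dispatching each tool request and halt or escalate to a human when it returns `False`. That way a looping or compromised agent can do only a bounded amount of damage before someone has to look.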

ASI09 – Human-agent trust exploitation

When agents exploit human trust through convincing or misleading instructions.

- F5 AI Guardrails tags and blocks unsafe content and logs decisions for audit.

- F5 AI Red Team simulates social-engineering patterns to strengthen workflow design and operator awareness.

ASI10 – Rogue agents

When compromised or drifting agents persist inside complex systems without detection.

- F5 AI Guardrails enforces policies consistently as agent behavior evolves, blocking unsafe execution patterns or data exfiltration attempts.

- CASI and ARS detect behavioral drift by tracking measurable changes in risk signals over time.

How F5 delivers AI security for agentic workflows

AI security is a core part of the F5 Application Delivery and Security Platform (ADSP). Agentic workflows introduce new risks in how prompts, tools, context, and agents interact at the application and API layer, but these interactions still traverse the same web, API, data plane, and control plane pathways that organizations already protect today.

By extending security controls and visibility into inference-time behavior, F5 enables organizations to address agentic risks while building on the application security foundations they already rely on.

F5 continues to support the broader community effort to strengthen secure AI practices, including the work of the OWASP GenAI Security Project.

Learn more about F5 AI Red Team and F5 AI Guardrails.
