When the first large language models (LLMs) emerged from developers’ sandboxes into the wild, the business world’s responses ranged from fear to euphoria, and both emotions lingered for quite a while. It didn’t take long before all sorts of models began flooding the landscape: small LLMs (yes, they are a thing), retrieval-augmented generation (RAG) systems, and bespoke, internal, fine-tuned, public, private, and open-source models. And the list continues to grow. Reality has since set in, however, calming things down and allowing organizations to identify their needs and determine which models will best meet them.

Before choosing and deploying any generative AI (GenAI) models, organizations must develop a proactive, flexible approach. They need to fully understand the features, tools, and add-ins that will best support their business use cases, and then prioritize their in-house requirements and the solutions to adopt. For instance, leadership committees must determine how many models will be needed, what types, and who will have access to them. They must identify the person or team responsible for deciding how the new AI tools fit into the company’s broader digital infrastructure. They will also need to answer questions such as: Are off-the-shelf solutions enough, or is it necessary to customize features and parameters to meet organizational, and even departmental, use cases? Is an audit trail for prompts and responses important? What about verifying the accuracy of model-provided information? Observability? Convenience? Ease of use?

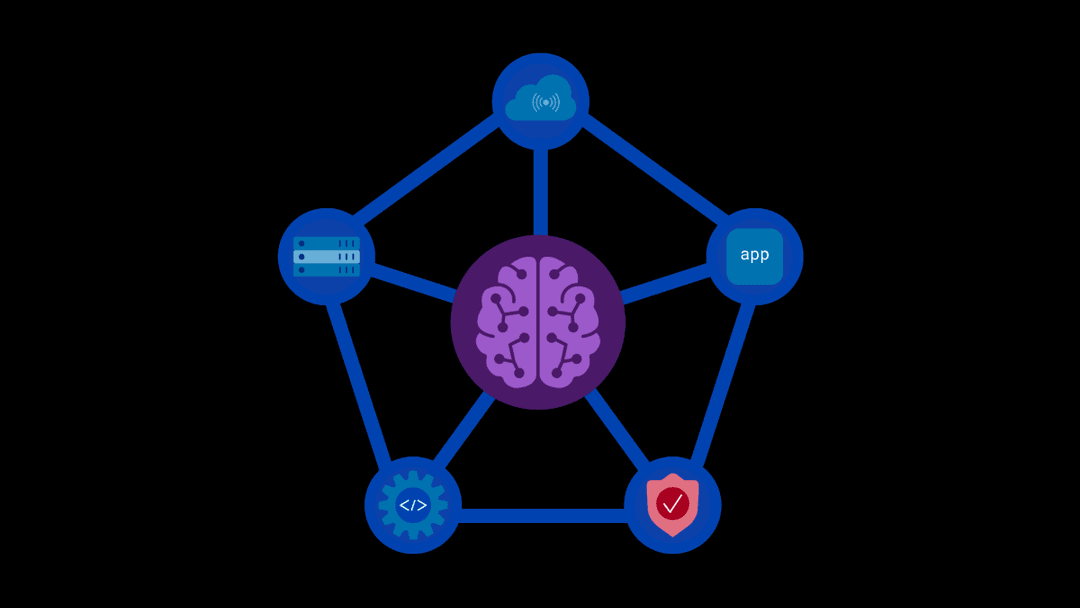

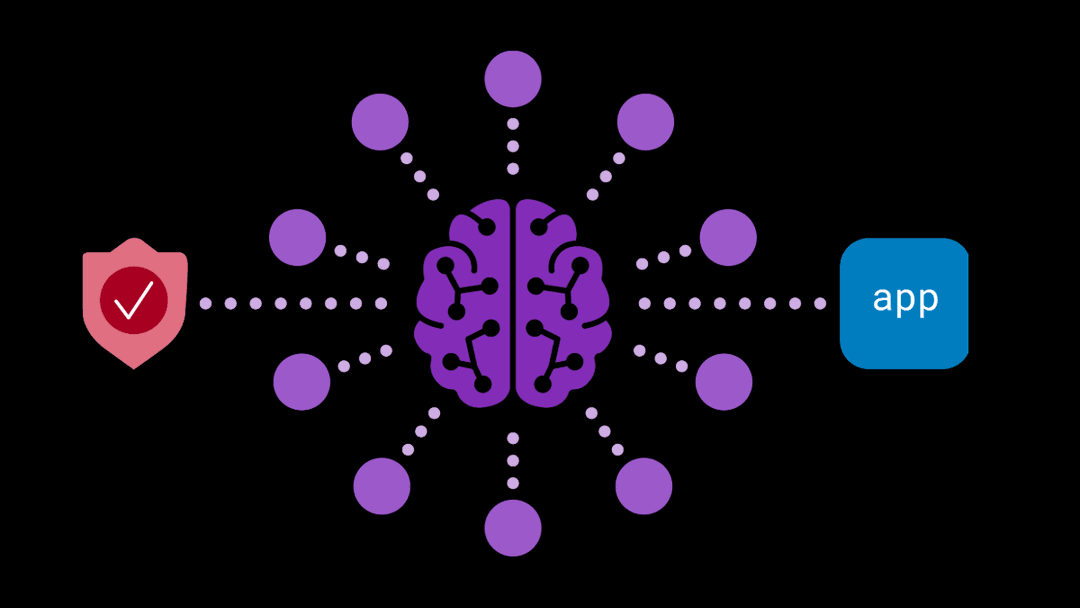

Faced with such questions, one easy decision to make early in the process is to employ a model-agnostic solution that enables flexibility and adaptability—essential product characteristics for organizations seeking to harness the full potential of AI. A model-agnostic approach to AI deployment involves using solutions that are compatible with a wide range of AI models, regardless of the models’ specific architecture or design. Such flexibility allows organizations to adapt quickly to new developments, tools, and innovations without being tethered to a single model or provider. A model-agnostic solution can also sharpen a firm’s competitive edge, enabling it to pivot quickly and efficiently when responding to new challenges and opportunities. At F5, we created the first model-agnostic model orchestration solution to enhance security and enablement for GenAI deployments. Our AI runtime security solutions are designed to integrate seamlessly with a wide variety of AI models, offering:

- Universal Compatibility: Our solutions work across different AI models, providing a consistent trust layer regardless of the underlying technology. A single query can be run on all models to compare and assess the depth and accuracy of responses.

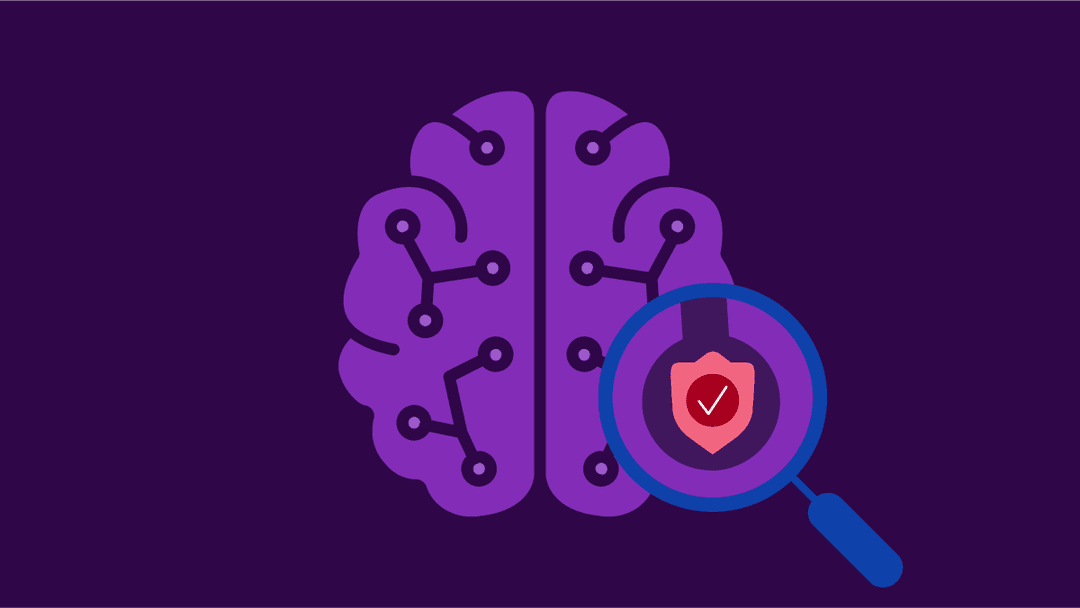

- Adaptive Security Measures: Because they interface with multiple models, F5 solutions provide robust security and observability while adapting to the unique needs of each model. Customizable, policy-based access controls allow admins to determine who has access to each model and under what circumstances.

- Ease of Integration: F5’s API-driven, SaaS-enabled design reduces integration to a few lines of code, cutting the time and complexity typically required to secure AI deployments.
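To make the model-agnostic idea more concrete, here is a minimal Python sketch of the general pattern. It is not F5’s implementation or API. Every model sits behind one hypothetical `ChatModel` interface. A per-role policy decides who may use which model, and each call is recorded in an audit log of prompts and responses. `fan_out` sends a single query to every permitted model so the answers can be compared. The names `Orchestrator`, `StubModel`, the roles, and the model names are illustrative assumptions. The stub models stand in for real provider SDK calls so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol, Tuple


class ChatModel(Protocol):
    """Hypothetical common interface that every model adapter implements."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    """Stand-in for a real provider adapter (a real one would call a vendor SDK)."""
    name: str
    transform: Callable[[str], str]

    def complete(self, prompt: str) -> str:
        return self.transform(prompt)


@dataclass
class Orchestrator:
    """Routes prompts to any registered model and enforces a per-role access policy."""
    models: Dict[str, ChatModel] = field(default_factory=dict)
    policy: Dict[str, List[str]] = field(default_factory=dict)  # role -> allowed model names
    audit_log: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def register(self, model: ChatModel) -> None:
        self.models[model.name] = model

    def ask(self, role: str, model_name: str, prompt: str) -> str:
        # Policy check happens here, independent of which model is behind the name.
        if model_name not in self.policy.get(role, []):
            raise PermissionError(f"role {role!r} may not use {model_name!r}")
        response = self.models[model_name].complete(prompt)
        # Audit trail: who asked which model what, and what came back.
        self.audit_log.append((role, model_name, prompt, response))
        return response

    def fan_out(self, role: str, prompt: str) -> Dict[str, str]:
        """Send one prompt to every model the role may use, for side-by-side comparison."""
        return {
            name: self.ask(role, name, prompt)
            for name in self.models
            if name in self.policy.get(role, [])
        }


# Usage with two stub "models" and two roles (all hypothetical).
orch = Orchestrator(policy={"analyst": ["model-a", "model-b"], "intern": ["model-a"]})
orch.register(StubModel("model-a", str.upper))
orch.register(StubModel("model-b", lambda p: p[::-1]))

print(orch.fan_out("analyst", "hello"))  # {'model-a': 'HELLO', 'model-b': 'olleh'}

try:
    orch.ask("intern", "model-b", "hello")
except PermissionError as err:
    print(err)  # role 'intern' may not use 'model-b'
```

In this sketch, the access check and the audit logging live in the orchestrator rather than in each adapter. Adding or swapping a model therefore only requires a new small adapter class; the security logic stays unchanged.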

In practical terms, a model-agnostic approach allows organizations to explore a broader range of AI applications. Whether the goal is predictive analytics, customer service enhancements, or advanced research, the ability to seamlessly switch or combine AI models without compromising security or functionality is invaluable. As AI continues to advance, a model-agnostic approach will become increasingly necessary, both to keep organizations current with AI developments and to position them to lead and innovate. With our security, enablement, and model orchestration solutions, organizations can navigate this evolving ecosystem while enjoying enhanced productivity and increased competitive advantage. Contact us to find out how our AI Runtime Security solutions can help you achieve your AI ambitions.
