The rapid adoption of large language models (LLMs) and other AI tools across enterprises is changing how businesses operate and raising productivity. However, these tools also pose significant security and compliance challenges because they expand the organizational attack surface. Organizations have historically prioritized utility and speed-to-market over robust governance and control measures, and AI adoption is no exception. As enterprises integrate AI and machine learning (ML) capabilities into more tasks, they need a comprehensive AI governance framework that addresses these new risks and supports both security and compliance with internal policies and external regulations.

The Necessity of AI Governance

As AI/ML tools gain traction across functions such as Operations, Sales, Legal, and Human Resources, the organization’s security leaders (who could include CISOs, CIOs, CTOs, CSOs, CDOs, Compliance Officers, and the General Counsel) must develop and implement an enterprise-wide AI governance framework. This process is pivotal in balancing the increasing demand for AI tools with the need to protect the expanding attack surface.

This task involves assembling a cross-functional team with representatives from all major organizational functions to achieve consensus on governance principles and secure buy-in from stakeholders including the C-suite. Limiting the team to six to eight participants can streamline decision-making and implementation processes. The security leader most involved with the technology should lead; however, the role is not that of a subject matter expert, but that of a facilitator who demonstrates neutrality and avoids bias.

Current Landscape and Challenges

The surge in LLMs and other generative AI (GenAI) products gives enterprises many opportunities to enhance business processes, improve customer experiences, and boost professional productivity. These opportunities include introducing custom-developed, in-house models that rely on proprietary data to satisfy industry- or company-specific use cases. The pace of adoption, together with the large array of models and the many ways to customize them, places a cognitive burden on users who might never have used such sophisticated digital tools before.

Security leaders must educate stakeholders (employees, contractors, customers, clients, and any others) about the risks, responsibilities, results, and rewards of using these tools.

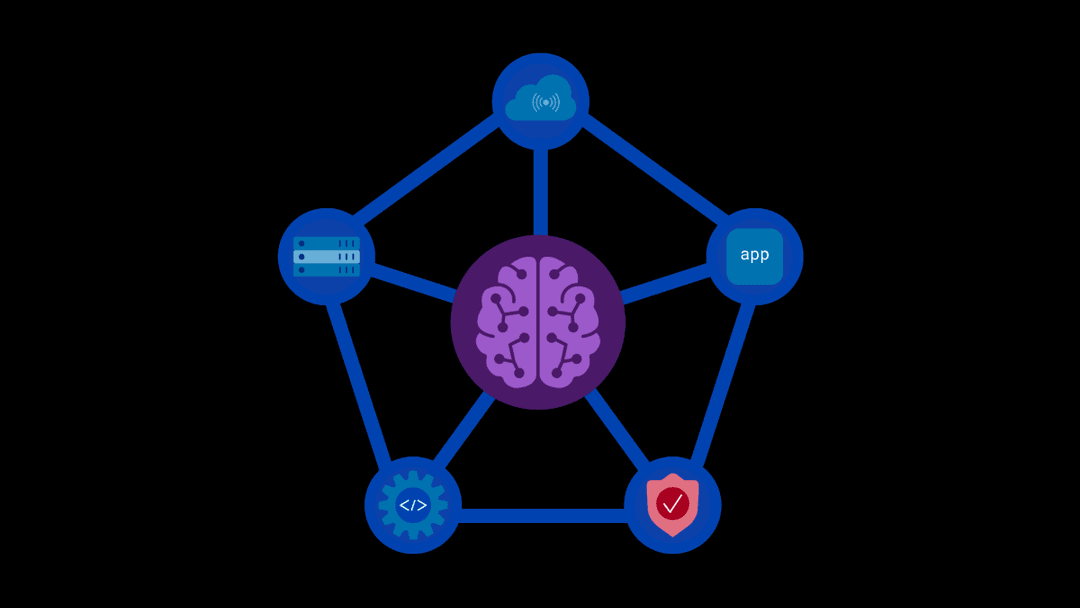

Framework for AI Governance

When establishing an AI governance framework, security leaders should focus on several key areas, including:

- People: The security leaders must educate department, team, and practice leaders across functions about the importance of consensus-driven AI governance.

- Principles: Governance principles should align with organizational values and brand reputation. They give AI-specific policies a clear business rationale and address issues such as acceptable use and the consequences of misuse. Examples of principles include mandating human review of all LLM outputs and requiring identification of LLM use in internal communications. Establishing these principles early makes it easier to amend existing company policies and create new ones.

- Regulation: AI governance must account for regional, national, and global regulations, including those shaped by existing privacy laws such as the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Executives responsible for privacy and compliance must be integral to the governance framework, ensuring that AI-aligned or AI-dependent models, tools, and practices meet regulatory standards for consent, data anonymization, and data deletion.

- Ethics: AI governance is labor-intensive, focusing on how people work rather than the tools they use. Ethical considerations must be integrated into AI development, with humans serving as arbiters of ethical judgment in technology design.

- Controls: AI governance should incorporate three tiers of human input: human-in-the-loop (HITL), human-on-the-loop (HOTL), and human-in-control (HIC). Doing so ensures human oversight and accountability across AI operations, mitigating risks associated with autonomous AI decision-making.
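
To make the Principles and Controls items more concrete, the following is a minimal Python sketch of how a team might encode an oversight tier per use case and label LLM-assisted text before it is released. Everything in it is an assumption made for illustration: the names `Oversight`, `UseCase`, `required_oversight`, and `release` are hypothetical, the two risk flags are placeholders for whatever criteria the governance team agrees on, and the one-line definitions of HITL, HOTL, and HIC in the comments are working definitions rather than ones given in this article.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Oversight(Enum):
    # Working definitions assumed for this sketch, not taken from the article.
    HOTL = "human-on-the-loop"   # a person monitors outputs and can intervene
    HITL = "human-in-the-loop"   # a person approves each output before release
    HIC = "human-in-control"     # a named person owns the decision to use AI at all


@dataclass(frozen=True)
class UseCase:
    name: str
    affects_customers: bool      # hypothetical risk flag
    uses_personal_data: bool     # hypothetical risk flag


def required_oversight(use_case: UseCase) -> Oversight:
    """Pick an oversight tier from the two hypothetical risk flags."""
    if use_case.affects_customers and use_case.uses_personal_data:
        return Oversight.HIC
    if use_case.affects_customers or use_case.uses_personal_data:
        return Oversight.HITL
    return Oversight.HOTL


def release(text: str, tier: Oversight, reviewer: Optional[str] = None) -> str:
    """Label LLM output and refuse to release it without a required reviewer."""
    if tier is not Oversight.HOTL and not reviewer:
        raise PermissionError(f"{tier.value} requires a named human reviewer")
    label = f"reviewed by {reviewer}" if reviewer else "monitored, not pre-reviewed"
    return f"{text}\n[LLM-assisted; {label}]"


if __name__ == "__main__":
    case = UseCase("customer support reply", affects_customers=True, uses_personal_data=False)
    tier = required_oversight(case)
    print(tier.value)
    print(release("Thanks for contacting us.", tier, reviewer="j.doe"))
```

An organization that adopts the stricter example principle of human review for all LLM outputs would simply require a reviewer at every tier. The point of the sketch is only that the agreed principles can be written down as explicit, testable rules rather than left as guidance.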

The Business Case for AI Governance

Implementing AI governance requires investment in technology and labor. However, the productivity gains and labor cost savings from using LLMs in core business workflows can be significant enough to justify these investments. Establishing an accountability model, in which identified employees are responsible for AI outputs, can minimize the need for additional support personnel. When such a framework is in place and has both executive and employee buy-in, the enterprise can efficiently, effectively, and safely manage the risks of its expanded attack surface and achieve measurable benefits aligned with company values and policies.
