Every major shift in application architecture forces the network to adapt. This is not a new or even revolutionary idea. We’ve seen it before, repeatedly. And each time, the same pattern played out. A new dominant application type emerged and “the network” adapted to support it, because those applications demand capabilities the existing stack cannot provide. That pressure is exactly what we are seeing now with AI inference.

Inference is not training. It is not experimentation. It is not a data science exercise. Inference is production runtime behavior, and it behaves like an application tier. It has users, traffic patterns, latency sensitivity, failure modes, cost constraints, and security risks. Treating it like a backend service or a specialized workload misses what is actually happening.

Inference is a new application type.

Our annual research makes this reality obvious. Nearly 80% of organizations are operating inference themselves. Only a small minority rely exclusively on public AI services. Most organizations are running inference inside their own environments, across multiple clouds, alongside traditional applications and APIs. They are already dealing with routing, availability, performance, and security problems at inference time.

That is the key point. The problems show up during inference; not before, not after, and certainly not during training.

Traditional delivery techniques are not sufficient here. Load balancing assumes interchangeable backends. Health checks assume binary availability. Static traffic steering assumes predictable behavior. None of those assumptions hold when requests vary in cost, quality, latency, and risk based on prompts, tokens, and model behavior.

Therefore, ergo, and thusly, “the network” must change again.

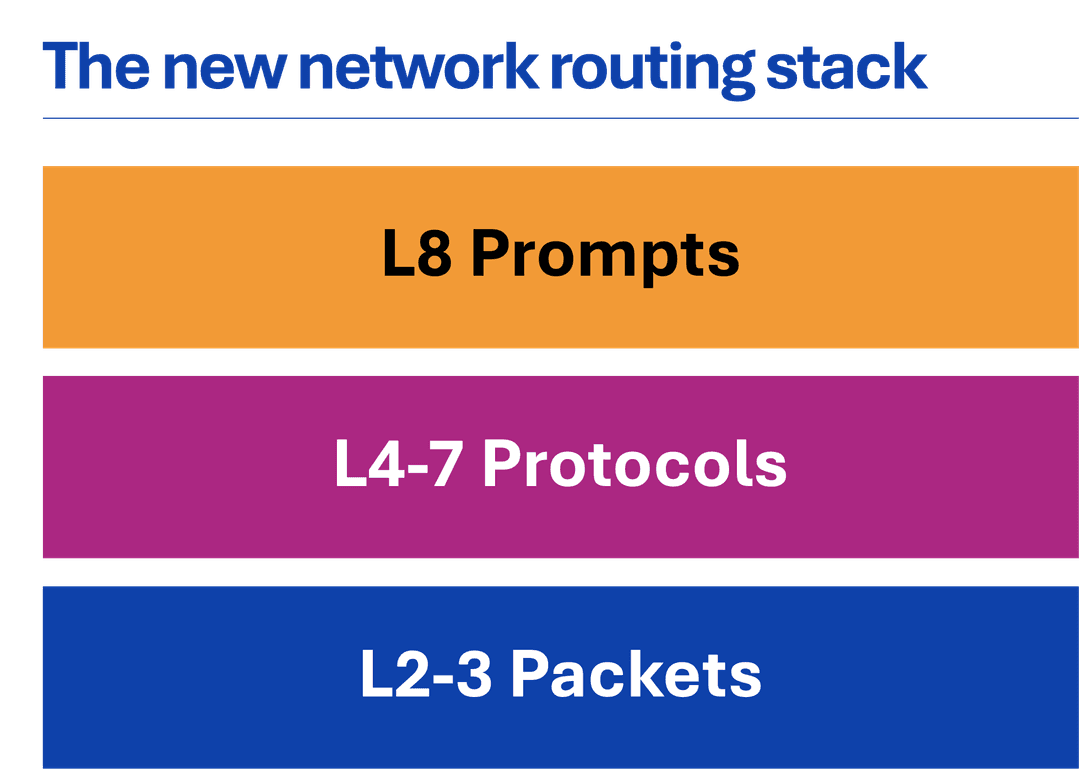

The new network stack

For decades, the network stack has been described in terms of packets and protocols. Layers 2 and 3 handled packets. Layers 4 through 7 handled sessions, protocols, and applications. That framing worked because applications expressed intent and purpose through protocols. Inference does not.

Inference expresses intent and purpose through prompts.

That is why we are now seeing the emergence of a new dominant layer. Layer 8. The prompt layer.

Now wait a minute, Lori, you might be thinking. We’ve been routing based on parts of the payload for a long time, so what makes this so different?

Thanks for asking!

The difference is actually quite profound, especially given that URIs don’t map to “functions” with inferencing, and what is being invoked requires extraction of intent from…the prompt. This is fundamentally why payload-based traffic steering did not rise to the level of a new layer. It didn’t radically change the game. Inference? Oh, inference not only changes the game, it rewrites the rules on a regular basis.

Now, the big deal is that at “the prompt layer,” routing decisions are no longer based solely on IPs, ports, URIs, or even pieces of the payload. They are based on who is asking, what they are asking for, how expensive it is, how risky it is, and what outcome quality is required. Together with output moderation and token control, this layer of the stack was identified by our latest research as being the layer where app delivery and security have the greatest impact. Throw in model routing (also layer 8) and you’ve got a majority of organizations who are already recognizing how important it is to securing and scaling AI inferencing.

Prompt inspection, token control, output moderation, and model routing are not optional features. They are table stakes for operating inference in production.

Never fear, the layers below it do not disappear any more than L2-3 disappeared when L4-7 became the top of the stack. They simply become foundational. Packet routing still matters. Protocol handling still matters.

But make no mistake, the value is now at layer 8.

The response to inference confirms the shift

Application Delivery Controllers emerged because networks built for packets could not support applications. API gateways emerged because networks built for web applications could not support APIs. Inference support is emerging now because networks built for APIs cannot support autonomous, probabilistic, and cost-variable applications.

The market response confirms this shift. Startups are forming around model routing, inference gateways, token governance, and prompt control. Hyperscalers are quietly building the same capabilities internally.

The enterprise response confirms this shift. Ninety percent of organizations are using inference services, and 70% of them are using two or more different inferencing services, including operating their own. They are implementing multi-model strategies, experimenting with agentic AI, and adopting AI-driven automation in operations.

This is not hype. This is structural.

Inference is not just another workload. Architectures that remain anchored at packets and protocols will continue to function, but they will not scale efficiently. Network stacks that stop at APIs will struggle to control autonomous systems.

Inference moved the point of control up the stack. The rest is inevitable.

About the Author

Related Blog Posts

From packets to prompts: Inference adds a new layer to the stack

Inference is not training. It is not experimentation. It is not a data science exercise. Inference is production runtime behavior, and it behaves like an application tier.

Compression isn’t about speed anymore, it’s about the cost of thinking

In the AI era, compression reduces the cost of thinking—not just bandwidth. Learn how prompt, output, and model compression control expenses in AI inference.

The efficiency trap: tokens, TOON, and the real availability question

Token efficiency in AI is trending, but at what cost? Explore the balance of performance, reliability, and correctness in formats like TOON and natural-language templates.

Programmability is the only way control survives at AI scale

Learn why data and control plane programmability are crucial for scaling and securing AI-driven systems, ensuring real-time control in a fast-changing environment.

The top five tech trends to watch in 2026

Explore the top tech trends of 2026, where inference dominates AI, from cost centers and edge deployment to governance, IaaS, and agentic AI interaction loops.