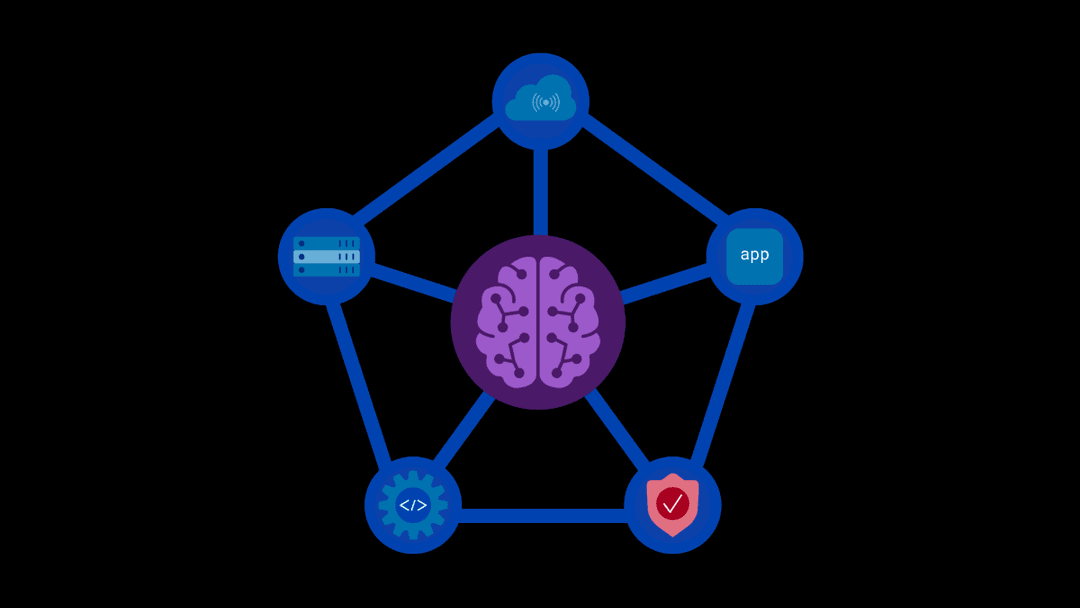

In an era when GenAI is becoming a critical tool to maintain competitive advantage—enhancing business processes as well as customer experiences—ensuring its safety is paramount. While AI drives innovation, it also introduces a new attack surface and unique risks that cannot be effectively managed with traditional security tools. Enter red teaming for AI—a proactive, offense-driven approach to identifying vulnerabilities and strengthening defenses before adversaries strike.

What is Red Teaming for AI?

AI red teaming involves simulating adversarial tactics to identify vulnerabilities in models and applications before malicious actors can exploit them. This process isn’t about identifying theoretical risks, it’s about testing the system’s real-world resilience against a wide array of threats, including prompt injections, jailbreaks, data poisoning, information leakage and unauthorized data extraction.

Why Red Teaming is Essential for AI Security

Hidden vulnerabilities in AI systems create an ever-expanding attack surface. These risks demand more than periodic testing in order to stay ahead of bad actors. By continuously testing AI models and applications, companies will achieve a critical edge in today’s AI-driven landscape through:

- Proactive Risk Mitigation: AI red teaming surfaces vulnerabilities that may otherwise remain undetected until exploited.

- Bridging the Gap in Security Testing for AI: Red teaming has long been a critical component of traditional information security, but when up against AI systems, existing methods fall short in complexity and scope. By focusing on tailored adversarial testing that incorporates a comprehensive attack suite of static, agentic, and operational attacks, organizations can close critical security gaps and maintain control over their expanding AI landscape.

- Building Trust: Proactive testing reassures stakeholders, governance groups, and regulators that AI systems are safe and aligned with both internal and external policies.

These benefits empower security teams to confidently demonstrate how they are mitigating AI-related risks in a way that ultimately drives innovation forward.

The Foundations of a Practical Red Teaming Strategy

A strong red teaming strategy extends beyond simple “one-and-done” tests—it involves continuous iteration and evaluation to keep up with the dynamic nature of AI. Key components for an effective red teaming strategy include:

- Comprehensive Attack Simulations:

- Systematically testing for weaknesses in single-turn model responses, targeting common vulnerabilities like violence, toxicity, illegal acts, and misinformation.

- Simulating real-world adversarial interactions by engaging a model in multi-turn conversations in order to adapt dynamically, uncovering deeper vulnerabilities that surface only during prolonged interactions.

- Tailored testing that supports user-defined malicious prompts and intents to exploit model-specific weaknesses and unique organizational risks.

- Identifying vulnerabilities in how models handle API requests and code-level inputs to ensure robustness beyond content-based interactions.

- Meaningful Insights: Detailed reports should clearly identify weaknesses and provide guidance for actionable improvements.

- Scalability: Red teaming exercises must scale across multiple models, applications and scenarios to ensure extensive testing.

This approach ensures that no stone is left unturned in identifying vulnerabilities, enabling organizations to fortify their AI systems before adversaries exploit them.

Preparing for What’s Next

With years of defense-focused insights, F5 has gained extensive knowledge into what it takes to protect against AI’s ever-evolving threat landscape. Core to an effective security strategy is an offense-driven approach. Learn more about our best-in-class red teaming solution, designed to empower proactive AI security that drives innovation.

About the Author

Related Blog Posts

The hidden cost of unmanaged AI infrastructure

AI platforms don’t lose value because of models. They lose value because of instability. See how intelligent traffic management improves token throughput while protecting expensive GPU infrastructure.

AI security through the analyst lens: insights from Gartner®, Forrester, and KuppingerCole

Enterprises are discovering that securing AI requires purpose-built solutions.

F5 secures today’s modern and AI applications

The F5 Application Delivery and Security Platform (ADSP) combines security with flexibility to deliver and protect any app and API and now any AI model or agent anywhere. F5 ADSP provides robust WAAP protection to defend against application-level threats, while F5 AI Guardrails secures AI interactions by enforcing controls against model and agent specific risks.

Govern your AI present and anticipate your AI future

Learn from our field CISO, Chuck Herrin, how to prepare for the new challenge of securing AI models and agents.

F5 recognized as one of the Emerging Visionaries in the Emerging Market Quadrant of the 2025 Gartner® Innovation Guide for Generative AI Engineering

We’re excited to share that F5 has been recognized in 2025 Gartner Emerging Market Quadrant(eMQ) for Generative AI Engineering.

Self-Hosting vs. Models-as-a-Service: The Runtime Security Tradeoff

As GenAI systems continue to move from experimental pilots to enterprise-wide deployments, one architectural choice carries significant weight: how will your organization deploy runtime-based capabilities?