Adopting AI, analytics, and cloud-native development is now a baseline requirement for enterprises that want to stay competitive and pursue use cases such as retrieval-augmented generation (RAG), multimodal AI, and agentic AI. Underpinning these capabilities is scalable, resilient, high-performance object storage.

Here's why: applications across distributed environments now generate and consume petabytes of data, with exabytes not far off, and that data needs to be readily available to support real-time workflows. AI requests and API calls traverse numerous environments, including cloud, on-premises, and edge, accumulating latency that slows down services and time to insights. Even a few seconds’ delay in an AI application’s responses can lead to significant user drop-off.

Efficient, effective, and scalable object storage allows enterprises to remove barriers for current AI projects and be better positioned to make the most of future AI opportunities. Together, Dell ObjectScale and F5 BIG-IP meet these demands and change how S3-compatible workloads are delivered across distributed infrastructures.

How F5 and Dell enhance storage scalability

Dell, a global leader in object storage, offers ObjectScale as a container-based, software-defined storage platform that supports Amazon S3 and other protocols. This platform is purpose-built for large-scale data collection, backup, analytics, and generative AI across distributed environments. The latest update includes capabilities such as S3 event notifications and automated, push-based updates to external applications, which enable real-time processing for AI/ML pipelines.
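To make the event-driven pattern concrete, here is a minimal sketch of a consumer that picks newly created objects out of a notification so a pipeline can process them. It assumes the payload follows the AWS S3 event message structure (a `Records` list with `eventName` and `s3.bucket` / `s3.object` fields); whether ObjectScale emits exactly this shape is an assumption to verify against Dell's documentation, and the function name is hypothetical.

```python
import json
from urllib.parse import unquote_plus


def extract_new_objects(notification_body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for object-created events in a notification.

    Assumes the AWS S3 event message structure; this is an illustration,
    not a documented ObjectScale payload.
    """
    message = json.loads(notification_body)
    created = []
    for record in message.get("Records", []):
        # In the AWS format, event names look like "ObjectCreated:Put".
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # In the AWS format, object keys arrive URL-encoded.
        key = unquote_plus(s3_info["object"]["key"])
        created.append((bucket, key))
    return created
```

A pipeline could call `extract_new_objects` on each incoming message and queue the returned keys for embedding, indexing, or training jobs instead of polling the bucket with LIST requests.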

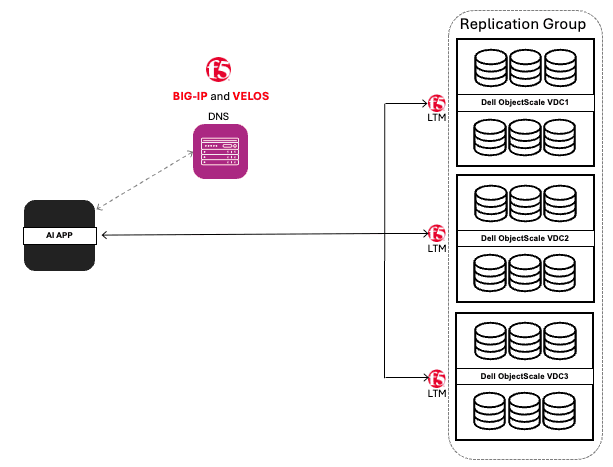

F5 BIG-IP, within the F5 Application Delivery and Security Platform, complements and enhances ObjectScale with intelligent traffic management that ensures seamless operations for AI data delivery and ingestion across hybrid multicloud environments. The F5 AI data delivery solution includes two key offerings:

- F5 BIG-IP Local Traffic Manager (LTM) distributes S3 API traffic (PUT, GET, DELETE, and LIST) across ObjectScale nodes using real-time metrics like connection count and server health.

- F5 BIG-IP DNS routes traffic based on geolocation, latency, and availability, ensuring optimal performance across multi-site deployments.

F5 BIG-IP LTM and BIG-IP DNS work together to route traffic to healthy, available ObjectScale storage instances or virtual data centers (VDCs) wherever they are hosted, preventing the network congestion and latency that degrade read/write speeds. Organizations that combine the full set of F5 solutions with ObjectScale benefit from seamless horizontal scaling, high availability, and consistent performance for multi-petabyte workloads, even in geographically distributed or hybrid environments. These enhancements are especially critical for AI workloads, where data mobility and responsiveness directly impact model training and inference cycles.
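The two-tier decision described above can be modeled in a few lines. The sketch below is a simplified illustration, not BIG-IP code or configuration: a global step picks the lowest-latency site that still has a healthy node (roughly the BIG-IP DNS role), and a local step picks the healthy node with the fewest active connections (roughly the BIG-IP LTM role). All class names, field names, and numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    healthy: bool
    active_connections: int


@dataclass
class Site:
    name: str
    latency_ms: float  # illustrative latency as seen from the client
    nodes: list[Node] = field(default_factory=list)

    def healthy_nodes(self) -> list[Node]:
        return [n for n in self.nodes if n.healthy]


def pick_site(sites: list[Site]) -> Site:
    """Global tier: lowest-latency site that has at least one healthy node."""
    available = [s for s in sites if s.healthy_nodes()]
    if not available:
        raise RuntimeError("no site has a healthy node")
    return min(available, key=lambda s: s.latency_ms)


def pick_node(site: Site) -> Node:
    """Local tier: healthy node with the fewest active connections."""
    return min(site.healthy_nodes(), key=lambda n: n.active_connections)


def route(sites: list[Site]) -> tuple[str, str]:
    """Choose a site, then a node, and count the new connection on that node."""
    site = pick_site(sites)
    node = pick_node(site)
    node.active_connections += 1
    return site.name, node.name
```

Separating the two steps mirrors the division of labor in the text: site selection reacts to availability and latency across locations, while node selection balances load inside the chosen site.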

Architectural flexibility for AI workloads

BIG-IP goes beyond load balancing to enable adaptive traffic orchestration with granular control. For example, F5 iRules dynamically steer traffic to the nearest or healthiest ObjectScale VDC, optimizing response times and read/write operations. Reads are intelligently routed to the original write site, reducing latency while maintaining strong data consistency.
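As a rough model of that steering logic, the sketch below sends writes to the nearest healthy VDC, remembers which VDC took each write, and sends later reads back to that VDC when it is healthy. Real iRules are written in Tcl and run on BIG-IP; this Python class only illustrates the decision, and how an actual iRule identifies the original write site is not covered here. The class, method, and site names are hypothetical.

```python
class WriteSiteRouter:
    """Route writes to the nearest healthy site and reads to the write site."""

    def __init__(self, sites_by_distance: list[str]):
        # Sites ordered nearest-first for this client (illustrative).
        self.sites_by_distance = list(sites_by_distance)
        self.healthy = set(sites_by_distance)
        self.write_site: dict[str, str] = {}  # object key -> site that took the write

    def mark_down(self, site: str) -> None:
        self.healthy.discard(site)

    def mark_up(self, site: str) -> None:
        if site in self.sites_by_distance:
            self.healthy.add(site)

    def _nearest_healthy(self) -> str:
        for site in self.sites_by_distance:
            if site in self.healthy:
                return site
        raise RuntimeError("no healthy site available")

    def route(self, method: str, key: str) -> str:
        if method == "PUT":
            site = self._nearest_healthy()
            self.write_site[key] = site  # remember where the object was written
            return site
        # Other requests prefer the original write site while it is healthy.
        owner = self.write_site.get(key)
        if owner in self.healthy:
            return owner
        return self._nearest_healthy()
```

For example, a key written to `vdc-west` while `vdc-east` was down continues to be read from `vdc-west` after `vdc-east` recovers, and falls back to the nearest healthy site only if `vdc-west` becomes unavailable.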

Deployment options for both FastL4 and standard configurations give organizations flexibility to match their priorities. FastL4 boosts performance with minimal payload inspection and hardware acceleration for layer 4 traffic, getting data where it needs to be faster. The standard configuration provides greater control and security with layer 7 features such as TLS termination, HTTP customization, and F5 iRules that organizations can choose and apply as they see fit.

By segmenting traffic and applying each configuration profile where it’s most effective, organizations can get the best of both worlds: raw throughput for reads and writes to ObjectScale instances with FastL4, and granular control with app-layer logic and features like a web application firewall (WAF) with the standard configuration, all within the same BIG-IP system. Integration with F5 VELOS and F5 rSeries appliances further extends scalability, supporting large-scale throughput and concurrent connections.
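One way to reason about the segmentation is as a simple planning rule: a traffic class that needs any layer 7 capability gets the standard configuration, and everything else gets FastL4. The sketch below encodes that rule. The feature labels and traffic class names are hypothetical placeholders, not BIG-IP configuration keywords.

```python
# Layer 7 capabilities the text associates with the standard configuration.
# The labels are illustrative, not BIG-IP keywords.
LAYER7_FEATURES = {"tls_termination", "http_customization", "http_irules", "waf"}


def choose_profile(required_features: set[str]) -> str:
    """Return "standard" if any layer 7 feature is needed, else "fastl4"."""
    return "standard" if required_features & LAYER7_FEATURES else "fastl4"


def plan_virtual_servers(traffic_classes: dict[str, set[str]]) -> dict[str, str]:
    """Map each traffic class name to the configuration type it would use."""
    return {name: choose_profile(needs) for name, needs in traffic_classes.items()}
```

Under this rule, a bulk ingest path with no inspection requirements would map to FastL4, while an externally exposed S3 endpoint that needs TLS termination and a WAF would map to the standard configuration.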

Efficient AI/ML workloads at petabyte scale

With scalability at its core, ObjectScale is built to eliminate bottlenecks in the storage layer while BIG-IP leverages intelligent traffic management to eliminate bottlenecks in the network layer. Combined, these solutions provide secure, scalable, connected storage that supports S3 protocols and works consistently across distributed environments. Real-time, multicloud AI with global availability is now simpler to achieve.

Interested in reading more? Read the Dell white paper.

Also, check out my recent technical article on F5 DevCentral.
