This post is one of four tutorials that help you put into practice concepts from Microservices March 2023: Start Delivering Microservices:

- How to Deploy and Configure Microservices (this post)

- How to Securely Manage Secrets in Containers

- How to Use GitHub Actions to Automate Microservices Canary Releases

- How to Use OpenTelemetry Tracing to Understand Your Microservices

All apps require configuration, but the considerations when configuring a microservice may not be the same as for a monolithic app. We can look to Factor 3 (Store config in the environment) of the twelve‑factor app for guidance applicable to both types of apps, but that guidance can be adapted for microservices apps. In particular, we can adapt the way we define the service configuration, provide the configuration to a service, and make a service available as a configuration value for other services that may depend on it.

For a conceptual understanding of how to adapt Factor 3 for microservices – specifically the best practices for configuration files, databases, and service discovery – read Best Practices for Configuring Microservices Apps on our blog. This post is a great way to put that knowledge into practice.

Note: Our intention in this tutorial is to illustrate some core concepts, not to show the right way to deploy microservices in production. While it uses a real “microservices” architecture, there are some important caveats:

- The tutorial does not use a container orchestration framework such as Kubernetes or Nomad. This is so that you can learn about microservices concepts without getting bogged down in the specifics of a certain framework. The patterns introduced here are portable to a system running one of these frameworks.

- The services are optimized for ease of understanding rather than software engineering rigor. The point is to look at a service’s role in the system and its patterns of communication, not the specifics of the code. For more information, see the README files of the individual services.

Tutorial Overview

This tutorial illustrates how Factor 3 concepts apply to microservices apps. In four challenges, you’ll explore some common microservices configuration patterns and deploy and configure a service using those patterns:

- In Challenge 1 and Challenge 2 you explore the first pattern, which concerns where you locate the configuration for a microservices app. There are three typical locations:

  - The application code

  - The deployment script for the application

  - Outside sources accessed by the deployment script

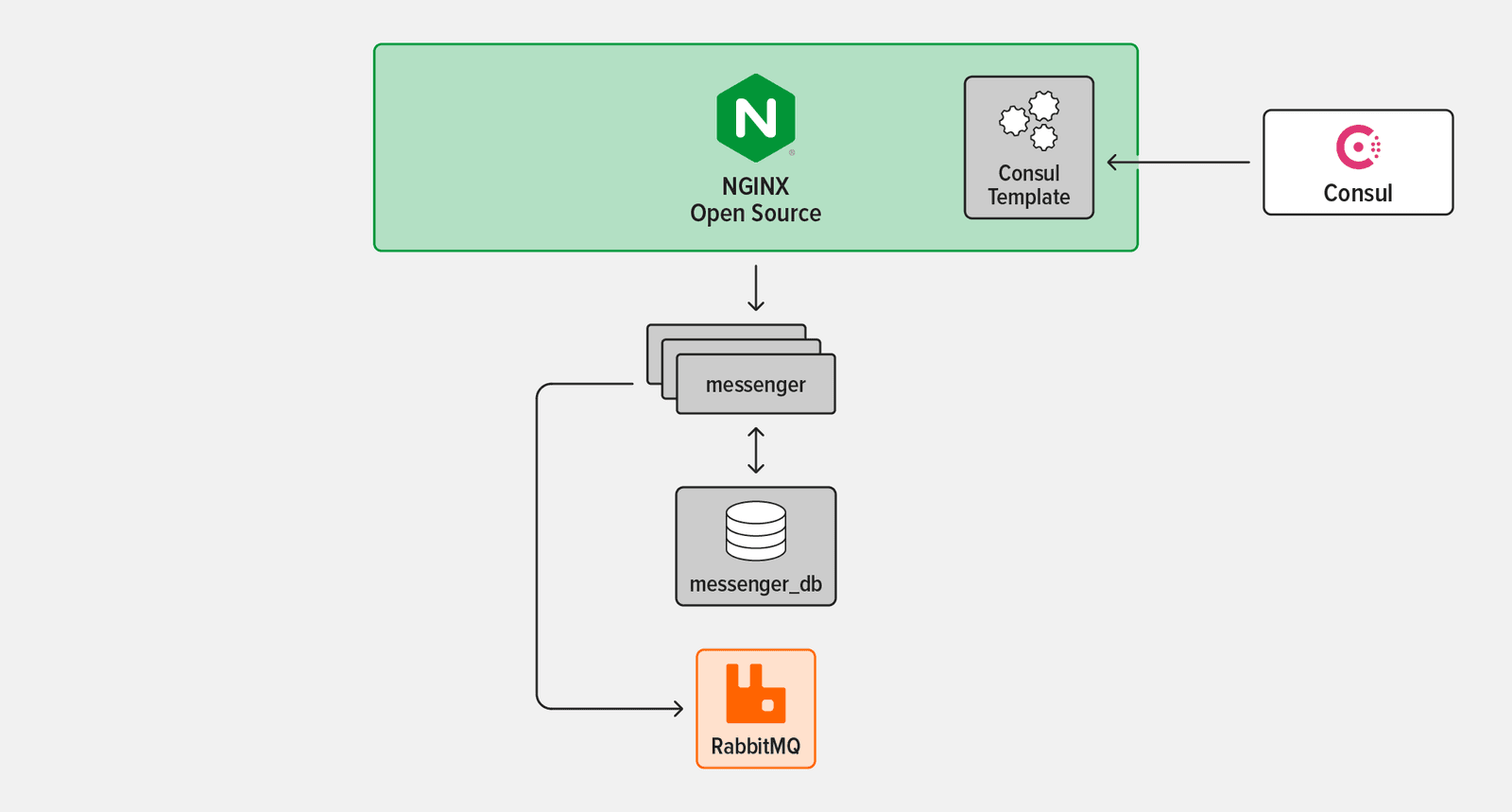

- In Challenge 3 you set up two more patterns: exposing the app to the outside world via NGINX as a reverse proxy and enabling service discovery using Consul.

- In Challenge 4 you implement the final pattern: using an instance of your microservice as a “job runner” that performs a one-off action different from its usual function (in this case emulating a database migration).

The tutorial uses four technologies:

- messenger – A simple chat API with message storage capabilities, created for this tutorial

- NGINX Open Source – An entry point to the messenger service and the wider system at large

- Consul – A dynamic service registry and key‑value store

- RabbitMQ – A popular open source message broker that enables services to communicate asynchronously

Watch this video to get an overview of the tutorial. The steps do not exactly match this post, but it helps in understanding the concepts.

Prerequisites and Set Up

Prerequisites

To complete the tutorial in your own environment, you need:

- A Linux/Unix‑compatible environment

- Basic familiarity with the Linux command line, JavaScript, and bash (but all code and commands are provided and explained, so you can still succeed with limited knowledge)

- Docker and Docker Compose

- Node.js 19.x or later

- curl (already installed on most systems)

- The four technologies listed in Tutorial Overview: messenger (you’ll download it in the next section), NGINX Open Source, Consul, and RabbitMQ.

Set Up

- Start a terminal session (subsequent instructions will refer to this as the app terminal).

- In your home directory, create the microservices-march directory and clone the GitHub repositories for this tutorial into it. (You can also use a different directory name and adapt the instructions accordingly.)

  Note: Throughout the tutorial the prompt on the Linux command line is omitted, to make it easier to copy and paste the commands into your terminal. The tilde (~) represents your home directory.

- Change to the platform repository and start Docker Compose:

  This starts both RabbitMQ and Consul, which will be used in subsequent challenges.

  - The -d flag instructs Docker Compose to detach from the containers when they have started (otherwise the containers will remain attached to your terminal).

  - The --build flag instructs Docker Compose to rebuild all images on launch. This ensures that the images you are running stay updated through any potential changes to files.

- Change to the messenger repository and start Docker Compose:

  This starts the PostgreSQL database for the messenger service, which we’ll refer to as the messenger-database for the remainder of the tutorial.

Challenge 1: Define Application-Level Microservices Configuration

In this challenge you set up configuration in the first of the three locations we’ll look at in the tutorial: the application level. (Challenge 2 illustrates the second and third locations, deployment scripts and outside sources.)

The twelve‑factor app specifically excludes application‑level configuration, because such configuration doesn’t need to change between different deployment environments (which the twelve‑factor app calls deploys). Nonetheless, we cover all three types for completeness – the way you deal with each category as you develop, build, and deploy a service is different.

The messenger service is written in Node.js, with the entrypoint in app/index.mjs in the messenger repo. The line of that file that registers the Express JSON middleware (a call to the express.json function) is an example of application‑level configuration. It configures the Express framework to deserialize request bodies that are of type application/json into JavaScript objects.

This logic is tightly coupled to your application code and isn’t what the twelve‑factor app considers “configuration”. However, in software everything depends on your situation, doesn’t it?

In the next two sections, you modify this line to implement two examples of application‑level configuration.

Example 1

In this example, you set the maximum size of a request body accepted by the messenger service. This size limit is set by the limit argument to the express.json function, as discussed in the Express API documentation. Here you add the limit argument to the configuration of the Express framework’s JSON middleware discussed above.
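To make the effect of the limit concrete, here is a minimal sketch of a byte-size check in plain JavaScript. It is not how Express implements the check: parseLimit and isWithinLimit are hypothetical helpers written for this illustration, the sample bodies are made up, and only the b and kb suffixes are handled. In Express itself the limit is passed as an option, for example express.json({ limit: "20b" }).

```javascript
// Hypothetical helper: convert a limit such as "20b" or "100kb" into bytes.
function parseLimit(limit) {
  if (typeof limit === "number") return limit;
  const match = /^(\d+)\s*(b|kb)$/i.exec(String(limit).trim());
  if (!match) throw new Error(`Unsupported limit: ${limit}`);
  const multiplier = match[2].toLowerCase() === "kb" ? 1024 : 1;
  return Number(match[1]) * multiplier;
}

// Hypothetical helper: true if the raw request body fits within the limit.
function isWithinLimit(rawBody, limit) {
  return Buffer.byteLength(rawBody, "utf8") <= parseLimit(limit);
}

// Made-up request bodies, used only to exercise the check.
const shortBody = JSON.stringify({ text: "hi" });           // 13 bytes
const longBody = JSON.stringify({ text: "hello, world" });  // 23 bytes

console.log(isWithinLimit(shortBody, "20b")); // true
console.log(isWithinLimit(longBody, "20b"));  // false
console.log(isWithinLimit(longBody, "27b"));  // true
```

The size is measured in bytes rather than characters, which is why the sketch uses Buffer.byteLength instead of the string length.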

- In your preferred text editor, open app/index.mjs and add a limit argument to the express.json call that sets the maximum request body size to 20 bytes.

- In the app terminal (the one you used in Set Up), change to the app directory and start the messenger service:

- Start a second, separate terminal session (which subsequent instructions call the client terminal) and send a POST request to the messenger service. The error message indicates that the request was successfully processed, because the request body was under the 20-byte limit set in Step 1, but that the content of the JSON payload is incorrect:

- Send a slightly longer message body (again in the client terminal). There’s much more output than in Step 3, including an error message that indicates this time the request body exceeds 20 bytes:

Example 2

This example uses convict, a library that lets you define an entire configuration “schema” in a single file. It also illustrates two guidelines from Factor 3 of the twelve‑factor app:

- Store configuration in environment variables – You modify the app so that the maximum body size is set using an environment variable (JSON_BODY_LIMIT) instead of being hardcoded in the app code.

- Clearly define your service configuration – This is an adaptation of Factor 3 for microservices. If you’re unfamiliar with this concept, we recommend that you take a moment to read about it in Best Practices for Configuring Microservices Apps on our blog.

The example also sets up some “plumbing” you’ll take advantage of in Challenge 2: the messenger deployment script you’ll create in that challenge sets the JSON_BODY_LIMIT environment variable which you insert into the app code here, as an illustration of configuration specified in a deployment script.
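The two guidelines can be sketched without any library. The following is a hand-rolled imitation of a configuration schema, not the convict API: loadConfig is a hypothetical helper, and the port key and both default values are assumptions added for illustration. Each schema entry names the environment variable it reads, the expected type, and a fallback.

```javascript
// Schema sketch: every setting the service accepts is declared in one place.
// The shape loosely imitates a convict schema but is NOT the convict library.
const schema = {
  jsonBodyLimit: {
    doc: "Maximum size of an accepted JSON request body",
    format: "string",
    default: "20b", // assumed default, for illustration only
    env: "JSON_BODY_LIMIT",
  },
  port: {
    doc: "Port the service listens on (hypothetical key)",
    format: "number",
    default: 4000, // assumed default, for illustration only
    env: "PORT",
  },
};

// Hypothetical loader: pull each value from the environment, coerce it,
// check its type, and expose it under the schema key.
function loadConfig(schemaDef, env) {
  const config = {};
  for (const [key, spec] of Object.entries(schemaDef)) {
    const raw = env[spec.env];
    let value = raw === undefined ? spec.default : raw;
    if (spec.format === "number") value = Number(value);
    if (typeof value !== spec.format || Number.isNaN(value)) {
      throw new Error(`${spec.env} must be a ${spec.format}`);
    }
    config[key] = value;
  }
  return config;
}

// In a real service you would pass process.env here.
const config = loadConfig(schema, { JSON_BODY_LIMIT: "27b" });
console.log(config.jsonBodyLimit); // 27b
console.log(config.port);          // 4000
```

Keeping the schema in a single file means a reader can see every setting the service accepts, and where each one comes from, without searching the code.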

- Open the convict configuration file, app/config/config.mjs, and add a new jsonBodyLimit key after the amqpport key. The convict library takes care of parsing the JSON_BODY_LIMIT environment variable when you use it to set the maximum body size on the command line in Step 3 below. It:

  - Pulls the value from the correct environment variable

  - Checks the variable’s type (String)

  - Enables access to it in the application under the jsonBodyLimit key

- In app/index.mjs, replace the hardcoded limit passed to express.json with the value of the jsonBodyLimit configuration key.

- In the app terminal (where you started the messenger service in Step 2 of Example 1), press Ctrl+c to stop the service. Then start it again, using the JSON_BODY_LIMIT environment variable to set the maximum body size to 27 bytes:

  This is an example of modifying the configuration method when doing so makes sense for your use case – you’ve switched from hardcoding a value (in this case a size limit) in the app code to setting it with an environment variable, as recommended by the twelve-factor app. As mentioned above, in Challenge 2 the use of the JSON_BODY_LIMIT environment variable will become an example of the second location for configuration, when you use the messenger service’s deployment script to set the environment variable rather than setting it on the command line.

- In the client terminal, repeat the curl command from Step 4 of Example 1 (with the larger request body). Because you’ve now increased the size limit to 27 bytes, the request body no longer exceeds the limit and you get the error message that indicates the request was processed, but that the content of the JSON payload is incorrect:

  You can close the client terminal if you wish. You’ll issue all commands in the rest of the tutorial in the app terminal.

- In the app terminal, press Ctrl+c to stop the messenger service (you stopped and restarted the service in this terminal in Step 3 above).

- Stop the messenger-database (run the command at the root of the messenger repo). You can safely ignore the error message shown, as the network is still in use by the infrastructure elements defined in the platform repository.

Challenge 2: Create Deployment Scripts for a Service

“Configuration should be strictly separated from code (otherwise how can it vary between deploys?)” – From Factor 3 of the twelve‑factor app

At first glance, you might interpret this as saying “do not check configuration in to source control”. In this challenge, you implement a common pattern for microservices environments that may seem to break this rule, but in reality respects the rule while providing valuable process improvements that are critical for microservices environments.

In this challenge, you create deployment scripts to mimic the functionality of infrastructure-as-code and deployment manifests which provide configuration to a microservice, modify the scripts to use external sources of configuration, set a secret, and then run the scripts to deploy services and their infrastructure.

You create the deployment scripts in a newly created infrastructure directory in the messenger repo. A directory called infrastructure (or some variation of that name) is a common pattern in modern microservice architectures, used to store things like:

- Infrastructure as code (think Terraform, AWS CloudFormation, Google Cloud Deployment Manager, and Azure Resource Manager)

- Configuration for the container orchestration system (for example, Helm charts and Kubernetes manifests)

- Any other files related to the deployment of applications

The benefits of this pattern include:

- It assigns ownership of the service deployment and the deployment of service‑specific infrastructure (such as databases) to the team that owns the service.

- The team can ensure changes to any of these elements go through its development process (code review, CI, etc.).

- The team can easily make changes to how the service and its supporting infrastructure are deployed without depending on outside teams doing work for them.

As mentioned previously, our intention for the tutorial is not to show how to set up a real system, and the scripts you deploy in this challenge do not resemble a real production system. Rather, they illustrate some core concepts and problems solved by tool‑specific configuration when dealing with microservices‑related infrastructure deployment, while also abstracting the scripts to the minimum amount of specific tooling possible.

Create Initial Deployment Scripts

- In the app terminal, create an infrastructure directory at the root of the messenger repo and create files to contain the deployment scripts for the messenger service and the messenger-database. Depending on your environment, you might need to prefix the

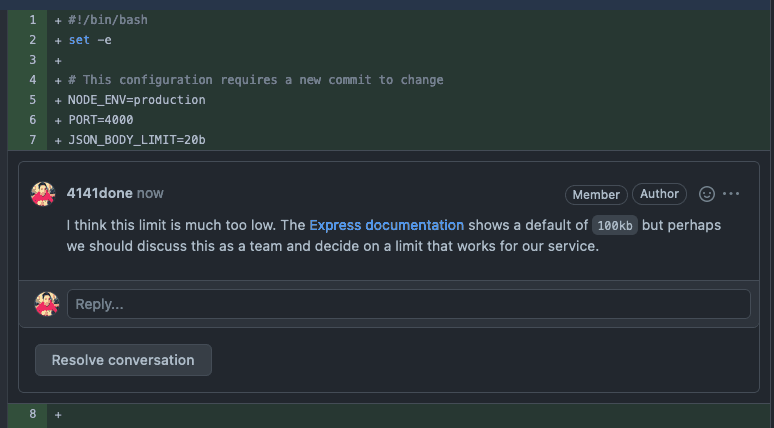

chmodcommands withsudo: - In your preferred text editor, open messenger-deploy.sh and add the following to create an initial deployment script for the messenger service:

This script isn’t complete at this point, but it illustrates a couple concepts:

- It assigns a value to environment variables by including that configuration directly in the deployment script.

- It uses the

-eflag on thedockerruncommand to inject environment variables into the container at runtime.

It may seem redundant to set the value of environment variables this way, but it means that – no matter how complex this deployment script becomes – you can take a quick look at the very top of the script and understand how configuration data is being provided to the deployment.
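The same idea can be sketched in JavaScript: declare the settings once at the top, then turn each one into a -e NAME=value pair for docker run. This is an illustration of the pattern, not the tutorial's bash script. The dockerRunArgs helper, the image name messenger, and the NODE_ENV and PORT values are assumptions.

```javascript
// Configuration declared up front, so a reader sees it at a glance.
// NODE_ENV and PORT values are assumed for illustration.
const settings = {
  NODE_ENV: "production",
  PORT: "4000",
  JSON_BODY_LIMIT: "20b",
};

// Hypothetical helper: build the argument list for `docker run`,
// adding one `-e NAME=value` pair per setting.
function dockerRunArgs(image, env, { detach = true } = {}) {
  const args = ["run"];
  if (detach) args.push("-d"); // run the container in the background
  for (const [name, value] of Object.entries(env)) {
    args.push("-e", `${name}=${value}`);
  }
  args.push(image);
  return args;
}

const command = ["docker", ...dockerRunArgs("messenger", settings)].join(" ");
console.log(command);
// docker run -d -e NODE_ENV=production -e PORT=4000 -e JSON_BODY_LIMIT=20b messenger
```

Because the settings live in one object, changing a value or adding a new one never requires touching the part of the script that launches the container.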

Additionally, although a real deployment script may not explicitly invoke the docker run command, this sample script is meant to convey the core problems being solved by something like a Kubernetes manifest. When using a container orchestration system like Kubernetes, a deployment starts a container and the application configuration derived from your Kubernetes configuration files is made available to that container. Thus, we can consider this sample deployment file to be a minimal version of a deployment script that plays the same role as framework‑specific deployment files like Kubernetes manifests.

In a real development environment, you might check this file into source control and put it through code review. This gives the rest of your team an opportunity to comment on your settings and thus helps avoid incidents where misconfigured values lead to unexpected behavior. For example, in this screenshot a team member is rightly pointing out that a limit of 20 bytes for incoming JSON request bodies (set with JSON_BODY_LIMIT) is too low.

Modify Deployment Scripts to Query Configuration Values from External Sources

In this part of the challenge, you set up the third location for a microservice’s configuration: an external source that is queried at deployment time. Dynamically registering values and fetching them from an outside source at deployment time is a much better practice than hardcoding values, which must be updated constantly and can cause failures. For a discussion, see Best Practices for Configuring Microservices Apps on our blog.

At this point two infrastructure components are running in the background to provide auxiliary services required by the messenger service:

- RabbitMQ, owned by the Platform team in a real deployment (started in Step 3 of Set Up)

- The messenger-database, owned by your team in a real deployment (started in Step 4 of Set Up)

The convict schema for the messenger service in app/config/config.mjs defines the required environment variables corresponding to these pieces of external configuration. In this section you set up these two components to provide configuration by setting the values of the variables in a commonly accessible location so that they can be queried by the messenger service when it deploys.

The required connection information for RabbitMQ and the messenger-database is registered in the Consul Key/Value (KV) store, which is a common location accessible to all services as they are deployed. The Consul KV store is not a standard place to store this type of data, but this tutorial uses it for simplicity’s sake.
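Registering a value and querying it later can be sketched against the Consul KV HTTP API, which stores a value with PUT /v1/kv/<key> and returns the bare value with GET /v1/kv/<key>?raw=true. The sketch below is an assumption-laden illustration: createKvClient and fakeConsul are hypothetical helpers, the key name messenger-db-port and its value are made up, and the fetch function is injectable so the example runs without a Consul agent.

```javascript
// Hypothetical client for the Consul KV HTTP API.
// Pass a real fetch (Node.js 18+) to talk to an actual Consul agent.
function createKvClient(baseUrl, fetchImpl = fetch) {
  const url = (key) => `${baseUrl}/v1/kv/${key}`;
  return {
    async set(key, value) {
      const res = await fetchImpl(url(key), { method: "PUT", body: String(value) });
      if (!res.ok) throw new Error(`Failed to set ${key}: ${res.status}`);
    },
    async get(key) {
      // ?raw=true asks for the bare value instead of base64-encoded JSON.
      const res = await fetchImpl(`${url(key)}?raw=true`);
      if (!res.ok) throw new Error(`Failed to get ${key}: ${res.status}`);
      return res.text();
    },
  };
}

// In-memory stand-in for a Consul agent, used only to exercise the sketch.
function fakeConsul() {
  const store = new Map();
  return async (url, options = {}) => {
    const key = new URL(url).pathname.replace("/v1/kv/", "");
    if (options.method === "PUT") {
      store.set(key, options.body);
      return { ok: true, status: 200, text: async () => "true" };
    }
    const found = store.has(key);
    return { ok: found, status: found ? 200 : 404, text: async () => store.get(key) ?? "" };
  };
}

async function demo() {
  const kv = createKvClient("http://localhost:8500", fakeConsul());
  await kv.set("messenger-db-port", 5432); // role of the database deployment
  return kv.get("messenger-db-port");      // role of the service deployment
}

demo().then((port) => console.log(port)); // 5432
```

The deployment that owns a piece of infrastructure writes its connection details, and any deployment that depends on it reads them, so neither side hardcodes the other's location.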

- Replace the contents of infrastructure/messenger-deploy.sh (created in Step 2 of the previous section) with the following:

  This script exemplifies two types of configuration:

  - Configuration specified directly in the deployment script – It sets the deployment environment (NODE_ENV) and port (PORT), and changes JSON_BODY_LIMIT to 100 KB, a more realistic value than 20 bytes.

  - Configuration queried from external sources – It fetches the values of the POSTGRES_USER, PGPORT, PGHOST, AMQPHOST, and AMQPPORT environment variables from the Consul KV store. You set the values of the environment variables in the Consul KV store in the following two steps.

- Open messenger-db-deploy.sh and add the following to create an initial deployment script for the messenger-database:

  In addition to defining configuration that can be queried by the messenger service at deployment time, the script illustrates the same two concepts as the initial script for the messenger service (from Create Initial Deployment Scripts):

  - It specifies certain configuration directly in the deployment script, in this case to tell the PostgreSQL database the port on which to run and the username of the default user.

  - It runs Docker with the -e flag to inject environment variables into the container at runtime. It also sets the name of the running container to messenger-db, which becomes the hostname of the database in the Docker network you created when you launched the platform service in Step 3 of Set Up.

- In a real deployment, it’s usually the Platform team (or similar) that handles the deployment and maintenance of a service like RabbitMQ in the platform repo, like you do for the messenger-database in the messenger repo. The Platform team then makes sure that the location of that infrastructure is discoverable by services that depend on it. For the purposes of the tutorial, set the RabbitMQ values yourself:

  (You might wonder why amqp is used to define RabbitMQ variables – it’s because AMQP is the protocol used by RabbitMQ.)

Set a Secret in the Deployment Scripts

There is only one (critical) piece of data missing in the deployment scripts for the messenger service – the password for the messenger-database!

Note: Secrets management is not the focus of this tutorial, so for simplicity the secret is defined in deployment files. Never do this in an actual environment – development, test, or production – it creates a huge security risk.

To learn about proper secrets management, check out Unit 2, Microservices Secrets Management 101 of Microservices March 2023. (Spoiler: a secrets management tool is the only truly secure method for storing secrets).

- Replace the contents of infrastructure/messenger-db-deploy.sh with the following to store the password secret for the messenger-database in the Consul KV store:

- Replace the contents of infrastructure/messenger-deploy.sh with the following to fetch the messenger-database password secret from the Consul KV store:

Run the Deployment Scripts

- Change to the app directory in the messenger repo and build the Docker image for the messenger service:

- Verify that only the containers that belong to the platform service are running:

- Change to the root of the messenger repository and deploy the messenger-database and the messenger service:

  The messenger-db-deploy.sh script starts the messenger-database and registers the appropriate information with the system (which in this case is the Consul KV store). The messenger-deploy.sh script then starts the application and pulls the configuration registered by messenger-db-deploy.sh from the system (again, the Consul KV store).

  Hint: If a container fails to start, remove the second parameter to the docker run command (the -d \ line) in the deployment script and run the script again. The container then starts in the foreground, which means its logs appear in the terminal and might identify the problem. When you resolve the problem, restore the -d \ line so that the actual container runs in the background.

- Send a simple health‑check request to the application to verify that deployment succeeded:

  Oops, failure! As it turns out, you are still missing one critical piece of configuration and the messenger service is not exposed to the wider system. It’s running happily inside the mm_2023 network, but that network is only accessible from within Docker.

- Stop the running container in preparation for creating a new image in the next challenge:

Challenge 3: Expose a Service to the Outside World

In a production environment, you don’t generally expose services directly. Instead, you follow a common microservices pattern and place a reverse proxy service in front of your main service.

In this challenge, you expose the messenger service to the outside world by setting up service discovery: the registration of new service information and dynamic updating of that information as accessed by other services. To do this, you use these technologies:

- Consul, a dynamic service registry, and Consul template, a tool for dynamically updating a file based on Consul data

- NGINX Open Source, as a reverse proxy and load balancer that exposes a single entry point for your messenger service which will be composed of multiple individual instances of the application running in containers

To learn more about service discovery, see Making a Service Available as Configuration in Best Practices for Configuring Microservices Apps on our blog.

Set Up Consul

The app/consul/index.mjs file in the messenger repo contains all the code necessary to register the messenger service with Consul at startup and deregister it at graceful shutdown. It exposes one function, register, which registers any newly deployed service with Consul’s service registry.
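For orientation, registration and deregistration with a Consul agent come down to two HTTP requests: PUT /v1/agent/service/register with a JSON description of the instance, and PUT /v1/agent/service/deregister/<id>. The sketch below only builds those requests and does not reproduce the code in app/consul/index.mjs. The helper names, the instance ID format, and the sample address and port are assumptions.

```javascript
// Hypothetical helper: describe the request that registers one service
// instance with a Consul agent.
function registrationRequest({ consulHost, consulPort, serviceName, address, port }) {
  const id = `${serviceName}-${address}-${port}`; // assumed ID format, unique per instance
  return {
    id,
    method: "PUT",
    url: `http://${consulHost}:${consulPort}/v1/agent/service/register`,
    body: JSON.stringify({ ID: id, Name: serviceName, Address: address, Port: port }),
  };
}

// Hypothetical helper: describe the request that removes the instance again,
// for example from a shutdown signal handler.
function deregistrationRequest({ consulHost, consulPort }, id) {
  return {
    method: "PUT",
    url: `http://${consulHost}:${consulPort}/v1/agent/service/deregister/${id}`,
  };
}

const consul = { consulHost: "localhost", consulPort: 8500 };
const registration = registrationRequest({
  ...consul,
  serviceName: "messenger",
  address: "172.18.0.5", // made-up container address
  port: 4000,            // made-up service port
});

console.log(registration.url);
// http://localhost:8500/v1/agent/service/register
console.log(deregistrationRequest(consul, registration.id).url);
// http://localhost:8500/v1/agent/service/deregister/messenger-172.18.0.5-4000
```

Sending either request is then a single call such as fetch(request.url, { method: request.method, body: request.body }). Every instance registers under the same Name but a different ID, which is what lets a load balancer treat them as one service.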

- In your preferred text editor, open app/index.mjs and add the following snippet after the other import statements, to import the register function from app/consul/index.mjs:

  Then modify the SERVER START section at the end of the script as shown, to call registerConsul() after the application has started:

- Open the convict schema in app/config/config.mjs and add the following configuration values after the jsonBodyLimit key you added in Step 1 of Example 2.

  This configures the name under which a new service is registered and defines the hostname and port for the Consul client. In the next step you modify the deployment script for the messenger service to include this new Consul connection and service registration information.

- Open infrastructure/messenger-deploy.sh and replace its contents with the following to include in the messenger service configuration the Consul connection and service registration information you set in the previous step:

  The main things to note are:

  - The CONSUL_SERVICE_NAME environment variable tells the messenger service instance what name to use as it registers itself with Consul.

  - The CONSUL_HOST and CONSUL_PORT environment variables are for the Consul client running at the location where the deployment script runs.

  Note: In a real‑world deployment, this is an example of configuration which must be agreed upon between teams – the team responsible for Consul must provide the CONSUL_HOST and CONSUL_PORT environment variables in all environments since a service cannot query Consul without this connection information.

- In the app terminal, change to the app directory, stop any running instances of the messenger service, and rebuild the Docker image to bake in the new service registration code:

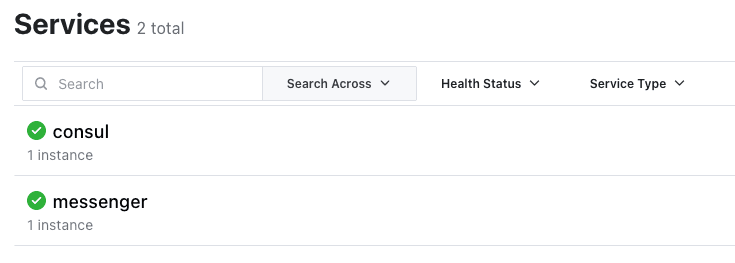

- Navigate to http://localhost:8500 in a browser to see the Consul UI in action (though nothing interesting is happening yet).

- At the root of the messenger repository, run the deployment script to start an instance of the messenger service:

- In the Consul UI in the browser, click Services in the header bar to verify that a single messenger service is running.

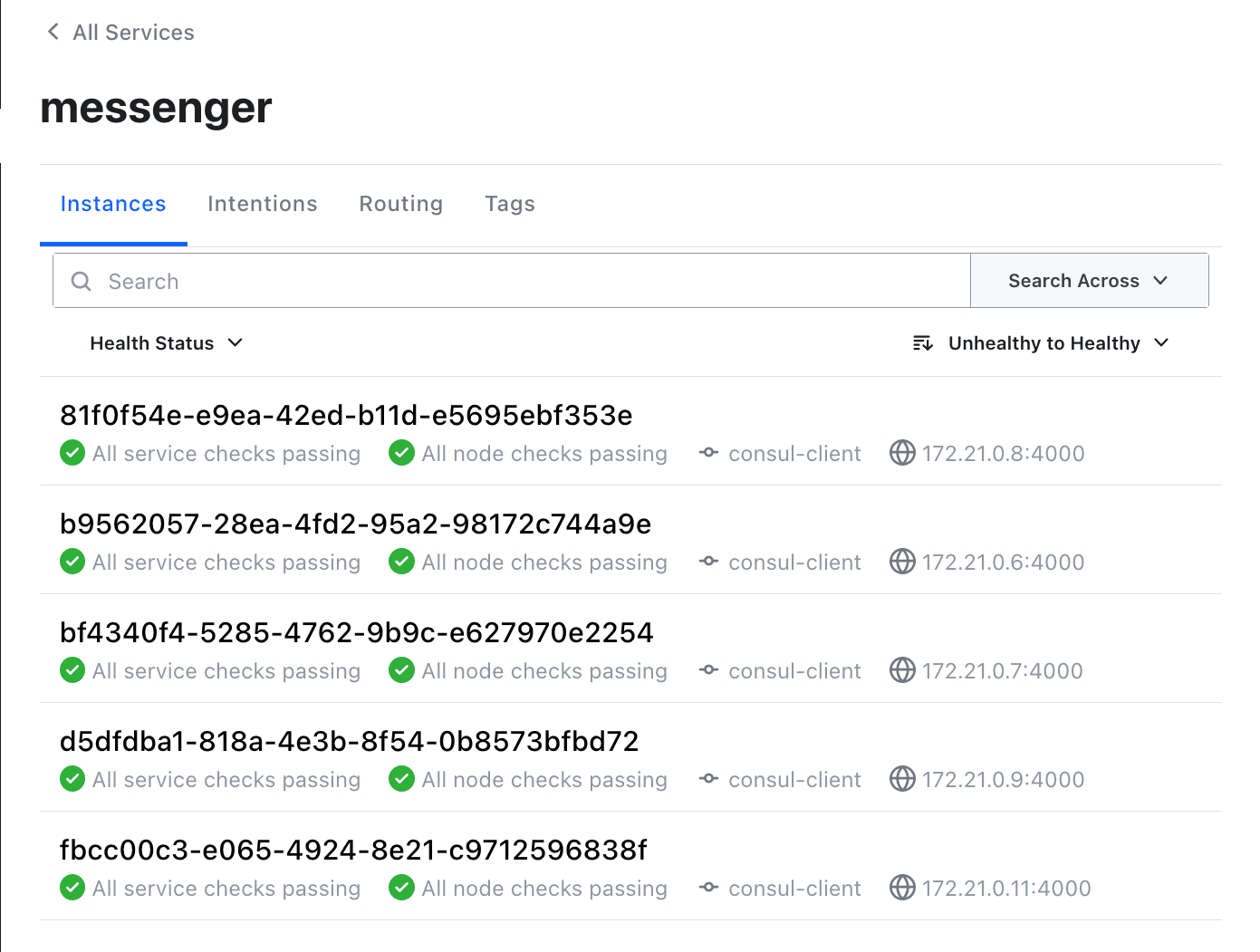

- Run the deployment script a few more times to start more instances of the messenger service. Verify in the Consul UI that they’re running.

Set Up NGINX

The next step is to add NGINX Open Source as a reverse proxy and load balancer to route incoming traffic to all the running messenger instances.

- In the app terminal, change directory to the root of the messenger repo and create a directory called load-balancer and three files:

  The Dockerfile defines the container where NGINX and Consul template run. Consul template uses the other two files to dynamically update the NGINX upstreams when the messenger service changes (service instances come up or go down) in its service registry.

- Open the nginx.ctmpl file created in Step 1 and add the following NGINX configuration snippet, which Consul template uses to dynamically update the NGINX upstream group:

  This snippet adds the IP address and port number of each messenger service instance as registered with Consul to the NGINX messenger_service upstream group. NGINX proxies incoming requests to the dynamically defined set of upstream service instances.

- Open the consul-template-config.hcl file created in Step 1 and add the following config:

  This config for Consul template tells it to re‑render the source template (the NGINX configuration snippet created in the previous step), place it at the specified destination, and finally run the specified command (which tells NGINX to reload its configuration). In practice this means that a new default.conf file is created every time a service instance is registered, updated, or deregistered in Consul. NGINX then reloads its configuration with no downtime, ensuring NGINX has an up-to-date, healthy set of servers (messenger service instances) to which it can send traffic.

- Open the Dockerfile file created in Step 1 and add the following contents, which build the NGINX service. (You don’t need to understand the Dockerfile for the purposes of this tutorial, but the code is documented in‑line for your convenience.)

- Build a Docker image:

- Change to the root of the messenger directory and create a file named messenger-load-balancer-deploy.sh as a deployment file for the NGINX service (just like with the rest of the services you have deployed throughout the tutorial). Depending on your environment, you might need to prefix the chmod command with sudo:

- Open messenger-load-balancer-deploy.sh and add the following contents:

- Now that you have everything in place, deploy the NGINX service:

- See if you can access the messenger service externally:

  It works! NGINX is now load balancing across all instances of the messenger service that have been created. You can tell because the X-Forwarded-For header is showing the same messenger service IP addresses as the ones in the Consul UI in Step 8 of the previous section.
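The re-rendering that Consul template performs for the upstream group can be pictured with a small JavaScript sketch. It imitates the outcome only and is not Consul template or its template syntax. The renderUpstream helper, the registry contents, and the other-service name are made up for illustration.

```javascript
// Hypothetical renderer: produce an NGINX upstream block listing every
// instance currently registered under one service name.
function renderUpstream(name, instances) {
  const servers = instances.map(({ address, port }) => `    server ${address}:${port};`);
  return [`upstream ${name} {`, ...servers, "}"].join("\n");
}

// Made-up snapshot of a service registry.
const registry = [
  { service: "messenger", address: "172.18.0.5", port: 4000 },
  { service: "messenger", address: "172.18.0.6", port: 4000 },
  { service: "other-service", address: "172.18.0.7", port: 5000 },
];

// Only instances registered under the messenger name go into rotation.
const messengerInstances = registry.filter((entry) => entry.service === "messenger");
const upstream = renderUpstream("messenger_service", messengerInstances);
console.log(upstream);
// upstream messenger_service {
//     server 172.18.0.5:4000;
//     server 172.18.0.6:4000;
// }
```

Each time the registry changes, the block is rendered again and NGINX reloads it, so the set of upstream servers follows the set of registered instances without manual edits.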

Challenge 4: Migrate a Database Using a Service as a Job Runner

Large applications often make use of “job runners” with small worker processes that can be used to do one‑off tasks like modify data (examples are Sidekiq and Celery). These tools often require additional supporting infrastructure such as Redis or RabbitMQ. In this case, you use the messenger service itself as a “job runner” to run one‑off tasks. This makes sense because it’s so small already, is fully capable of interacting with the database and other pieces of infrastructure on which it depends, and is running completely separately from the application that is serving traffic.

There are three benefits to doing this:

- The job runner (including the scripts it runs) goes through exactly the same checks and review process as the production service.

- Configuration values such as database users can easily be changed to make the production deployment more secure. For example, you can run the production service with a “low privilege” user that can only write and query from existing tables. You can configure a different service instance to make changes to the database structure as a higher‑privileged user able to create and remove tables.

- Some teams run jobs from instances that are also handling service production traffic. This is dangerous because issues with the job can impact the other functions the application in the container is performing. Avoiding things like that is why we’re doing microservices in the first place, isn’t it?

In this challenge you explore how an artifact can be modified to fill a new role: you change some database configuration values, migrate the messenger database to use the new values, and test its performance.

Migrate the messenger Database

For a real‑world production deployment, you might create two distinct users with different permissions: an “application user” and a “migrator user”. For simplicity’s sake, in this example you use the default user as the application user and create a migrator user with superuser privileges. In a real situation, it’s worth spending more time deciding which specific minimal permissions are needed by each user based on its role.
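The idea that one artifact fills two roles purely through configuration can be sketched as follows. The envForRole helper, the PGUSER values, and the shared settings are hypothetical. The service names messenger and messenger-migrator are the ones used in this tutorial.

```javascript
// Per-role settings. The image is identical for both roles; only the
// injected configuration differs. PGUSER values are made up.
const roles = {
  application: { CONSUL_SERVICE_NAME: "messenger", PGUSER: "app_user" },
  migrator: { CONSUL_SERVICE_NAME: "messenger-migrator", PGUSER: "migrator_user" },
};

// Hypothetical helper: merge shared settings with the role-specific ones.
function envForRole(role, shared) {
  if (!Object.prototype.hasOwnProperty.call(roles, role)) {
    throw new Error(`Unknown role: ${role}`);
  }
  return { ...shared, ...roles[role] };
}

const shared = { NODE_ENV: "production", PGHOST: "messenger-db" };
const migratorEnv = envForRole("migrator", shared);

console.log(migratorEnv.PGUSER);              // migrator_user
console.log(migratorEnv.CONSUL_SERVICE_NAME); // messenger-migrator
console.log(migratorEnv.PGHOST);              // messenger-db
```

Because the migrator registers under a different service name, anything that selects instances by the name messenger, such as the load balancer, never sends it traffic.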

- In the app terminal, create a new PostgreSQL user with superuser privileges:

- Open the database deployment script (infrastructure/messenger-db-deploy.sh) and replace its contents to add the new user’s credentials. Note: Let’s take the time to reiterate – for a real‑world deployment, NEVER put secrets like database credentials in a deployment script or anywhere other than a secrets management tool. For details, see Unit 2: Microservices Secrets Management 101 of Microservices March 2023. This change just adds the migrator user to the set of users that is set in Consul after the database deploys.

- Create a new file in the infrastructure directory called messenger-db-migrator-deploy.sh (again, you might need to prefix the chmod command with sudo):

- Open messenger-db-migrator-deploy.sh and add the following:

  This script is quite similar to the infrastructure/messenger-deploy.sh script in its final form, which you created in Step 3 of Set Up Consul. The main difference is that the CONSUL_SERVICE_NAME is messenger-migrator instead of messenger, and the PGUSER corresponds to the “migrator” superuser you created in Step 1 above.

  It’s important that the CONSUL_SERVICE_NAME is messenger-migrator. If it were set to messenger, NGINX would automatically put this service into rotation to receive API calls and it’s not meant to be handling any traffic.

  The script deploys a short‑lived instance in the role of migrator. This prevents any issues with the migration from affecting the serving of traffic by the main messenger service instances.

- Redeploy the PostgreSQL database. Because you are using bash scripts in this tutorial, you need to stop and restart the database service. In a production application, you typically just run an infrastructure-as-code script instead, to add only the elements that have changed.

- Deploy the PostgreSQL database migrator service:

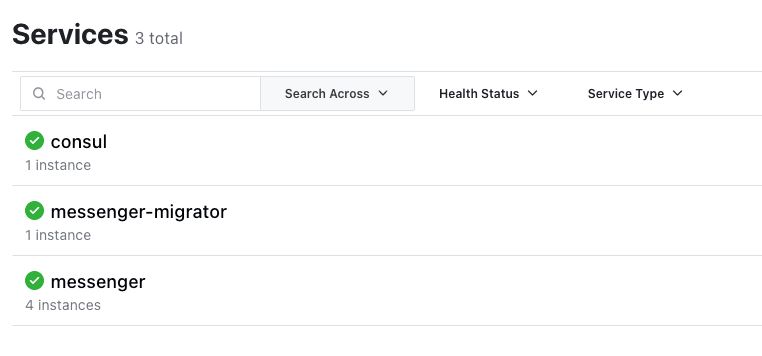

- Verify that the instance is running as expected:

  You can also verify in the Consul UI that the database migrator service has correctly registered itself with Consul as messenger-migrator (again, it doesn’t register under the messenger name because it doesn’t handle traffic):

- Now for the final step – run the database migration scripts! These scripts don’t resemble any real database migration scripts, but they do use the messenger-migrator service to run database‑specific scripts. Once the database has been migrated, stop the messenger-migrator service:

Test the messenger Service in Action

Now that you have migrated the messenger database to its final format, the messenger service is finally ready for you to watch in action! To do this, you run some basic curl queries against the NGINX service (you configured NGINX as the system’s entry point in Set Up NGINX).

Some of the following commands use the jq library to format the JSON output. You can install it as necessary or omit it from the command line if you wish.

- Create a conversation:

- Send a message to the conversation from a user with ID 1:

- Reply with a message from a different user (with ID 2):

- Fetch the messages:

Clean Up

You have created a significant number of containers and images throughout this tutorial! Use the following commands to remove any Docker containers and images you don’t want to keep.

- To remove any running Docker containers:

- To remove the platform services:

- To remove all Docker images used throughout the tutorial:

Next Steps

You might be thinking “This seems like a lot of work to set up something simple” – and you’d be right! Moving into a microservices‑focused architecture requires meticulousness around how you structure and configure services. Despite all the complexity, you made some solid progress:

- You set up a microservices‑focused configuration that is easily understandable by other teams.

- You set up the microservices system to be somewhat flexible both in terms of scaling and usage of the various services involved.

To continue your microservices education, check out Microservices March 2023. Unit 2, Microservices Secrets Management 101, provides an in‑depth but user‑friendly overview of secrets management in microservices environments.
