This blog is the second in a series of blogs that cover various aspects of what it took for us to build and operate our SaaS service:

- Control plane for distributed Kubernetes PaaS

- Global service mesh for distributed applications

- Platform security for distributed infrastructure, apps, and data

- Application and network security of distributed clusters

- Observability across a globally distributed platform

- Operations & SRE of a globally distributed platform

- Golang service framework for distributed microservices

In an earlier blog, we provided some background on the needs that led us to build a new platform. Using this platform, our customers build a complex and diverse set of applications, such as smart manufacturing, video forensics for public safety, algorithmic trading, and telco 5G networks.

The first blog in this series covered a distributed control plane that delivers multiple multi-tenant, Kubernetes-based application clusters in public clouds, our private network PoPs, and edge sites.

This blog tackles the challenges of connecting and securing these distributed application clusters from three points of view: application to application, user/machine to application, and application to the public internet. We introduce a brand-new, fully integrated L3-L7+ datapath, a globally distributed control plane, and our high-performance global network. Together, these three deliver a comprehensive set of network and security services at the edge, within any cloud VPC, or in our global network PoPs. After running in production with 35+ customers for over a year, we have started to scale the deployment and felt that now is a good time to share our journey.

TL;DR (Summary)

- Delivering high-performance, reliable, and secure connectivity for distributed application clusters required us to build a new global network. While there are many service providers that connect and secure consumers to applications (e.g., Akamai, Cloudflare) and employees to applications (e.g., Zscaler, Netskope), no service provider addresses the need for application-to-application connectivity and security.

- In addition to a global network to connect distributed applications, these application clusters require networking, reliability, and security services (API routing, load balancing, security, and network routing) with a consistent configuration and operational model. These services need to run in multiple cloud provider locations, in resource-constrained edge locations, and/or in our global network.

- To deliver these networking and security services, we decided to build a new, fully integrated L3-L7+ network datapath with consistent configuration, policy, and observability irrespective of where it runs: in our network, in many public clouds, or in edge locations. Since many of these datapaths can be deployed across multiple sites, we also had to build a globally distributed control plane to orchestrate them.

- The combination of a global network, a new network datapath, and a distributed control plane has given us a “globally distributed application gateway” that delivers zero-trust security, application connectivity, and cluster reliability without granting network access across this global network. This “global service mesh” also simplifies the work of our security and compliance team.

Where are the Cables? Why did we build a Global Network?

As our customers build fairly complex and mission-critical applications (smart manufacturing, video forensics for public safety, algorithmic trading, telco 5G transition), we need to deliver an always-on, connected, and reliable experience for these applications and their end users. Some of these applications run data pipelines across clusters within a cloud, back up data across cloud providers, need reliable delivery of requests from a global user base to app backends, or need high-performance connectivity from their machines to backends running in centralized clouds.

In order to meet these needs, there were two requirements from the physical network:

R1 (Cloud to Cloud): High-performance, on-demand connectivity for applications across public or private cloud locations around the globe.

R2 (Internet to Cloud): To get devices and machines in edge locations to connect reliably to the application backend, we needed to provide multiple redundant paths and to terminate connections as close to these users as possible, instead of backhauling traffic all the way to the backends over the internet.

We could have met requirements R1 and R2 by going to a network service provider like AT&T, but they could not have provided us with a seamless network experience and integration with our application services: API-based configuration, streaming telemetry, the ability to easily add our own or our customers’ IP prefixes, API-based network services, etc. In addition, getting global coverage from service providers is very difficult or prohibitively expensive. We could have gone with two or three different service providers to solve all these problems, but then we would have ended up with a mess of systems that behave differently and have different failure modes, SLAs, and support systems, as well as different APIs (or none at all).

So we ended up needing to build our own network and this meant that the network needed to have three properties:

- Solving R1 meant that we needed to build a private network across multiple cloud providers and across the globe to connect all the regions. The best approach is to peer with cloud providers in major cloud regions and to use a mix of direct connect links at major colocation facilities. Peering does not come with SLA guarantees, whereas direct connect links do provide SLA guarantees within a cloud region.

- Solving R2 for machines in edge locations meant that we needed to support multiple active-active links from each edge location, where the links can be low-cost internet links (over cellular or cable/fiber) and/or higher-cost dedicated links (over fiber/ethernet). For performance and reliability, traffic needs to be load balanced and terminated as close to the edge location as possible instead of being hauled over the unreliable internet. In industry parlance, this capability is called SD-WAN.

- Solving R2 for internet users and edge applications meant that we needed to terminate TLS traffic at the edge, as close to the user as possible. This required building network edge locations with dense peering with mobile operators and content providers, and bringing in traffic over multiple transit providers to ensure optimal routing.

To meet these three properties, we needed to do many things; we will highlight five big buckets:

- Global Network PoPs with our Network Gear: we ended up building multiple network PoPs in major metro markets. During the early days of our platform build, we started with multiple cities in Europe (Paris, London, Amsterdam, and Luxembourg) and one PoP in the US (NYC), and then expanded to Seattle, San Jose, Ashburn, Frankfurt, Singapore, and Tokyo. This gave us global coverage and put us close to all major cloud providers. We chose Equinix as our global partner and augmented it with Interxion, Telehouse/KDDI, and Westin in locations where Equinix is not the optimal choice. Because we believe in automation, we picked Juniper Networks for switching/routing gear and Adva for 400G and 100G metro fiber interconnectivity.

- Private Backbone: to connect all of these PoPs with high performance and reliability, we decided not to build an overlay network on top of multiple transit providers and instead built a global private backbone using multiple 100G waves from providers like Zayo, CenturyLink, EuNetworks, etc. While many CDN providers (e.g., Cloudflare, Fastly) and security providers (e.g., Zscaler) rely entirely on transit and peering connections because they serve downstream traffic, we believe there are good reasons to combine a private backbone with transit, since we are dealing with east-west application-to-application traffic. This is not unique to us: all major cloud providers have a global private backbone connecting their cloud locations.

- Internet Peering and Transit Providers: to increase path diversity and achieve the best performance in reaching users and content or SaaS providers, the ideal solution is to peer directly with as many operators and content providers as possible. We peer at multiple exchanges, as listed in PeeringDB (ASN-35280). Not all traffic can be served through peering connections, and that is where transit connections come into play. Transit links and peering ports need to be monitored very carefully to maintain quality; we selected the best Tier-1 providers, such as NTT, CenturyLink, and Telia, to serve us at various global locations.

- Cloud Peering and Interconnects: we recently started peering directly with cloud providers, but they do not guarantee any SLAs for these connections. For example, at our peering point in Seattle (Westin facility), we can reach all three major cloud providers directly. Even though these connections are better than going over transit providers, we started to augment our network with direct connections to these cloud providers (AWS Direct Connect, Azure ExpressRoute, and GCP Dedicated Interconnect), as these come with SLAs and additional features. While these links are expensive and only allow access to internal VPC/VNET subnets, they provide the best connectivity.

- Software Gateways: in cloud or edge locations that are not directly connected to our PoPs, we install our software gateways. We cover the advanced functionality of these gateways later in this blog, but one function that pertains to the transport layer is that these gateways automatically create secure VPN (IPsec or SSL) tunnels to multiple network PoPs for redundancy and load-balance traffic across them. In addition, in a cloud deployment, these gateways create an outbound connection through the cloud provider’s NAT gateway to our nearest PoP, providing an on-ramp to our global private network. There is no need to traverse public networks or to connect application clusters across cloud locations using public IP addresses.
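To make the redundancy and load-balancing behavior of the software gateways more concrete, here is a minimal Python sketch. It assumes (the text above does not say how it is done) that a gateway keeps tunnels to the k lowest-latency PoPs and spreads flows across healthy tunnels with rendezvous (highest-random-weight) hashing, so each flow stays pinned to one tunnel and only the flows on a failed tunnel move. The `Tunnel` type, PoP names, and the `k=2` default are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass
class Tunnel:
    pop: str              # hypothetical PoP identifier, e.g. "par1"
    rtt_ms: float         # measured round-trip time from the gateway to the PoP
    healthy: bool = True  # result of tunnel keepalives / health probes


def pick_tunnels(candidates: List[Tunnel], k: int = 2) -> List[Tunnel]:
    """Keep the k lowest-latency PoPs as redundant tunnel endpoints."""
    return sorted(candidates, key=lambda t: t.rtt_ms)[:k]


def _weight(flow_key: str, pop: str) -> int:
    # Stable pseudo-random weight for a (flow, PoP) pair.
    digest = hashlib.sha256(f"{flow_key}|{pop}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def tunnel_for_flow(flow_key: str, tunnels: List[Tunnel]) -> Tunnel:
    """Rendezvous hashing over healthy tunnels: each flow goes to the tunnel
    with the highest weight, so unaffected flows keep their tunnel on failure."""
    healthy = [t for t in tunnels if t.healthy]
    if not healthy:
        raise RuntimeError("no healthy tunnel to any PoP")
    return max(healthy, key=lambda t: _weight(flow_key, t.pop))


candidates = [Tunnel("par1", 12.0), Tunnel("ams1", 9.5), Tunnel("fra1", 15.2)]
active = pick_tunnels(candidates)  # the two closest PoPs: ams1, par1
flow = "10.0.0.5:43512->10.1.0.9:443/tcp"
print(tunnel_for_flow(flow, active).pop)
```

Rendezvous hashing is just one common way to get sticky, failure-tolerant spreading; a production gateway could equally use weighted or latency-aware balancing across its tunnels.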

We realized very early in our development that building and operating a global network requires time and skills, and we needed to bring in this expertise. After months of discussions with the founders of Acorus Networks (a security provider based in Paris, France), we ended up merging Acorus with Volterra. This solved the problem of our global infrastructure and network rollout. Acorus had bootstrapped a great network and an operations team that had fully automated physical network configuration using Ansible and built external APIs for telemetry and configuration that our software team could use.

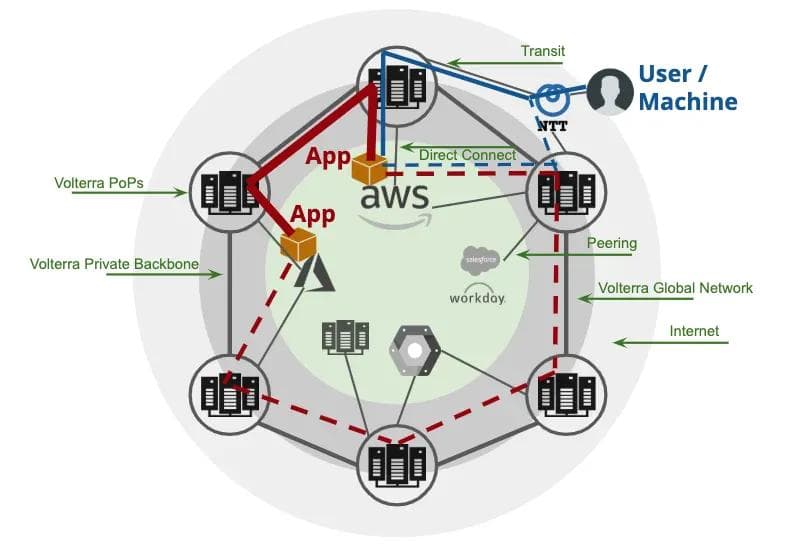

Now that we had a team focused on building out a global private network with high reliability and performance, we could easily solve the problems of both application to application and user/machine to application traffic across edge or cloud locations as shown in Figure 1. In addition, compute and storage infrastructure in our Network PoPs also allowed us to add API termination, app security, and workload offload (from edge or cloud) to our network. This will enable us to continue to evolve our capabilities for a long time to come and easily expand our network footprint and service catalog based on demand.

Network Services for Applications — Multi-Cloud, Private, Edge?

Once we had a functioning global network, we needed to start adding application clusters and our workloads. These workloads need their own set of application-level connectivity and security services.

As we explained in our earlier blog, our goal with the platform was to improve productivity and reduce complexity for our teams that are managing workloads running across multiple environments (cloud, edge, and our network PoPs).

Every cloud provider requires configuring, managing, and monitoring many different cloud resources for secure connectivity to application clusters. For example, in Google Cloud, configuring an end-to-end network service for an application cluster requires us to manage many different sets of services:

Wide Area Network (WAN) Services

Application Networking Services

- Application Load Balancers, Network Load Balancers (Public APIs)

- Application Load Balancers, Network Load Balancers (Internal)

- Service Mesh (App to App)

Network Routing and Isolation Services

While it would have been a big challenge, we could have tackled this by building a configuration and operations layer to harmonize across cloud providers. However, we knew that this would not solve our problems, because the cloud providers’ services did not meet all our needs (e.g., application and API security are missing at CSPs), are not best of breed, and are continuously changing.

In addition, what about our network PoPs and edge sites? We would have had to get similar capabilities from hardware or software vendors and integrate them all together: e.g., Cisco/Juniper for routing, NAT, and VPN, F5 for load balancing and firewall, etc. At the edge, the problem is compounded, as neither the cloud providers nor the large network vendors could solve the network services problem for a resource-constrained environment. Another option for the edge could have been to move all application connectivity, security, and network services into our global network, but it was clear that this would not work, as app-to-app traffic within the same edge site also needed some of these services.

After many discussions and a survey of the landscape, we realized that integrating across many service providers and vendors (configuration, telemetry, monitoring, alerting) and keeping up with their roadmaps and API changes ran counter to our goal of simplicity and speed.

Another problem that we needed to solve across the platform was multi-tenancy. This was easier for WAN services and network routing, but challenging for application networking services, given that most load balancers on the market have little or no support for multi-tenancy.

A New L3-L7+ Network Datapath

As a result, we decided to take on this challenge and build a high-performance network datapath that provides not only network routing but also application, security, and wide-area services. This would allow us to unify the service portfolio across our hybrid and distributed cloud environment and to depend on only a minimal set of native services in public clouds.

Given that the team had strong networking experience and had dealt with such problems in our previous lives with Contrail/Juniper, we decided the best route was to start with a clean slate and build a brand-new networking solution that integrates L3 to L7+ services in a single datapath that can run in any cloud or edge location while being managed centrally by our platform.

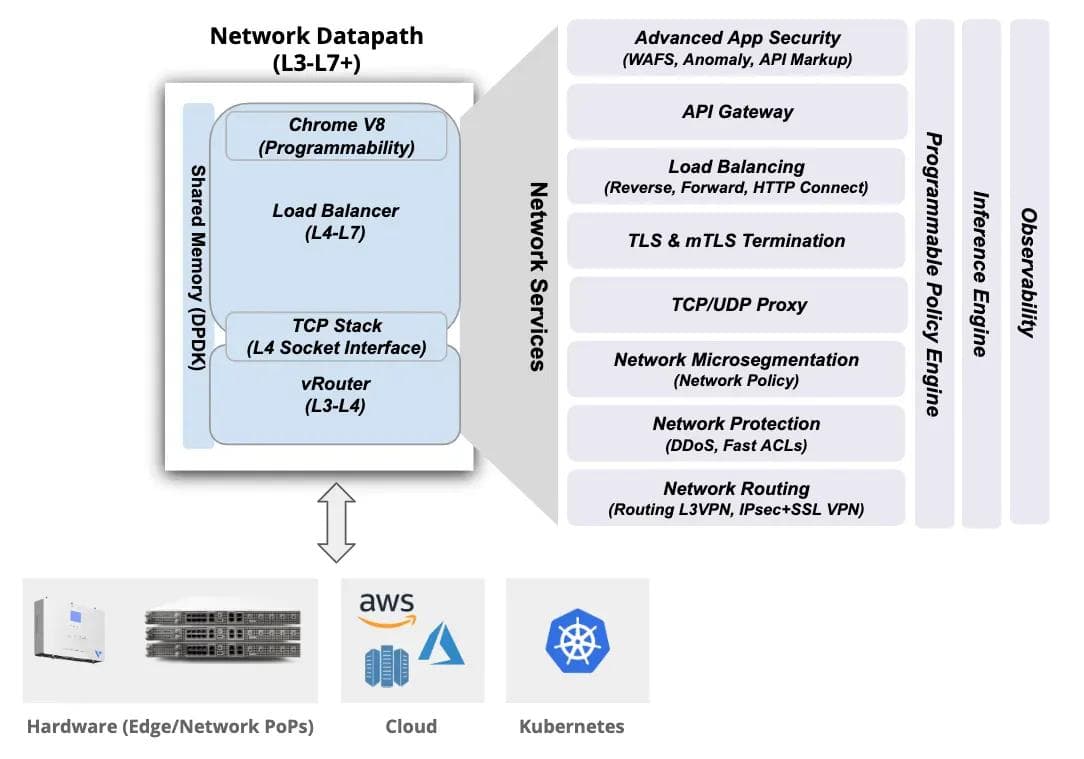

We picked the best projects to start the design of this new datapath: the OpenContrail vRouter (for L3-L4), which we had already spent 6 years developing, and Envoy for L7, as it has huge community backing. That said, we had to make several changes and additions to build a new unified L3-L7+ datapath: multi-tenancy for Envoy, DPDK for Envoy, a user-space DPDK TCP stack, network policy, SSL/IPsec VPNs, HTTP CONNECT, dynamic reverse proxy, transparent forward proxy, API gateway, programmable application policy, fast ACLs for DDoS protection, PKI identity integration, the Chrome V8 engine, application and network security, etc.

This datapath (as shown in Figure 2) is deployed as an ingress/egress gateway for our application clusters, as a software gateway at the edge, to deliver service-mesh capability within a cluster or across multiple clusters, or to deliver network services from our global PoPs.

To align with the multi-tenancy needs of the platform (covered in the previous blog), we also needed to deliver full multi-tenancy for this network datapath. This was relatively straightforward for the routing layer, as the vRouter already supported VRFs; however, we had to make changes to Envoy to make it multi-tenant. Since we were not using the kernel TCP stack, we changed Envoy’s TCP socket interface and added VRF awareness.
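The idea behind VRF awareness in the socket layer can be illustrated with a small Python model. This is not Envoy's socket interface or the vRouter API; it is a hypothetical sketch, assuming every listener and connection is keyed by a VRF ID in addition to its addresses, so two tenants can reuse the same IP space without colliding. `VrfConnectionTable`, `FiveTuple`, and the VRF numbering are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, NamedTuple, Optional, Tuple


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str


@dataclass
class Connection:
    tenant: str
    upstream: str


class VrfConnectionTable:
    """Listener and connection lookups scoped by VRF: the same address or
    5-tuple in two VRFs refers to two different tenants' objects."""

    def __init__(self) -> None:
        self._listeners: Dict[Tuple[int, str, int], str] = {}
        self._conns: Dict[Tuple[int, FiveTuple], Connection] = {}

    def listen(self, vrf: int, ip: str, port: int, tenant: str) -> None:
        key = (vrf, ip, port)
        if key in self._listeners:
            raise ValueError(f"{ip}:{port} already in use in VRF {vrf}")
        self._listeners[key] = tenant

    def accept(self, vrf: int, ft: FiveTuple, upstream: str) -> Connection:
        tenant = self._listeners.get((vrf, ft.dst_ip, ft.dst_port))
        if tenant is None:
            raise LookupError(f"no listener for {ft.dst_ip}:{ft.dst_port} in VRF {vrf}")
        conn = Connection(tenant, upstream)
        self._conns[(vrf, ft)] = conn
        return conn

    def lookup(self, vrf: int, ft: FiveTuple) -> Optional[Connection]:
        return self._conns.get((vrf, ft))


table = VrfConnectionTable()
# Two tenants bind the same overlapping address, each in its own VRF.
table.listen(1, "10.0.0.10", 443, "tenant-a")
table.listen(2, "10.0.0.10", 443, "tenant-b")
ft = FiveTuple("10.0.0.5", 40000, "10.0.0.10", 443, "tcp")
table.accept(1, ft, "svc-a")
table.accept(2, ft, "svc-b")
```

Without the VRF component in the keys, the second `listen` call would fail as a duplicate bind, which is the kind of collision that overlapping tenant address spaces cause in a tenant-unaware proxy.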

This datapath also needed to perform API security, application firewalls, and network security — we used a combination of algorithmic techniques and machine inference for this and will cover this topic in an upcoming blog post.

We got many advantages by building this network datapath:

- Complete L3-L7+ capabilities with uniform configuration, control, and observability

- Support for scale-out within a single cluster, which lets us cover everything from very small-footprint, lower-performance edge devices up to 100Gbps+ of capacity in our network or public cloud locations

- The ability to rapidly add new capabilities to the network datapath without depending on any network vendor and/or cloud provider

- A common solution with similar failure and performance characteristics across any environment, which is very important for our operations teams

Now that we could deploy multiple app clusters with this new network datapath, it was critical to build a globally distributed control plane to configure, control, and monitor them.

Purpose-built Control Plane for Distributed Network

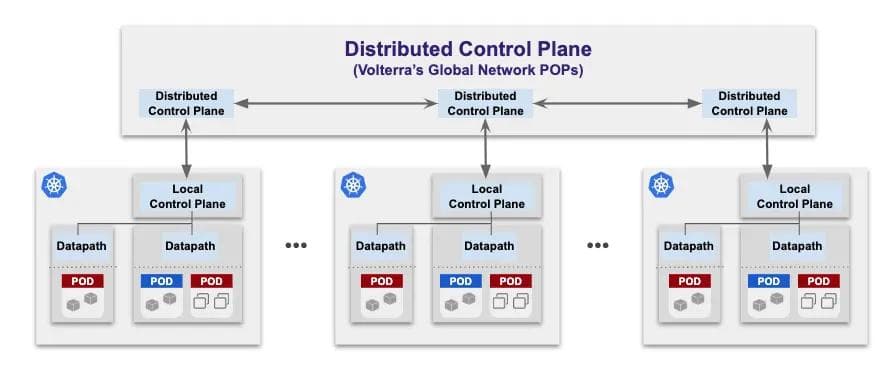

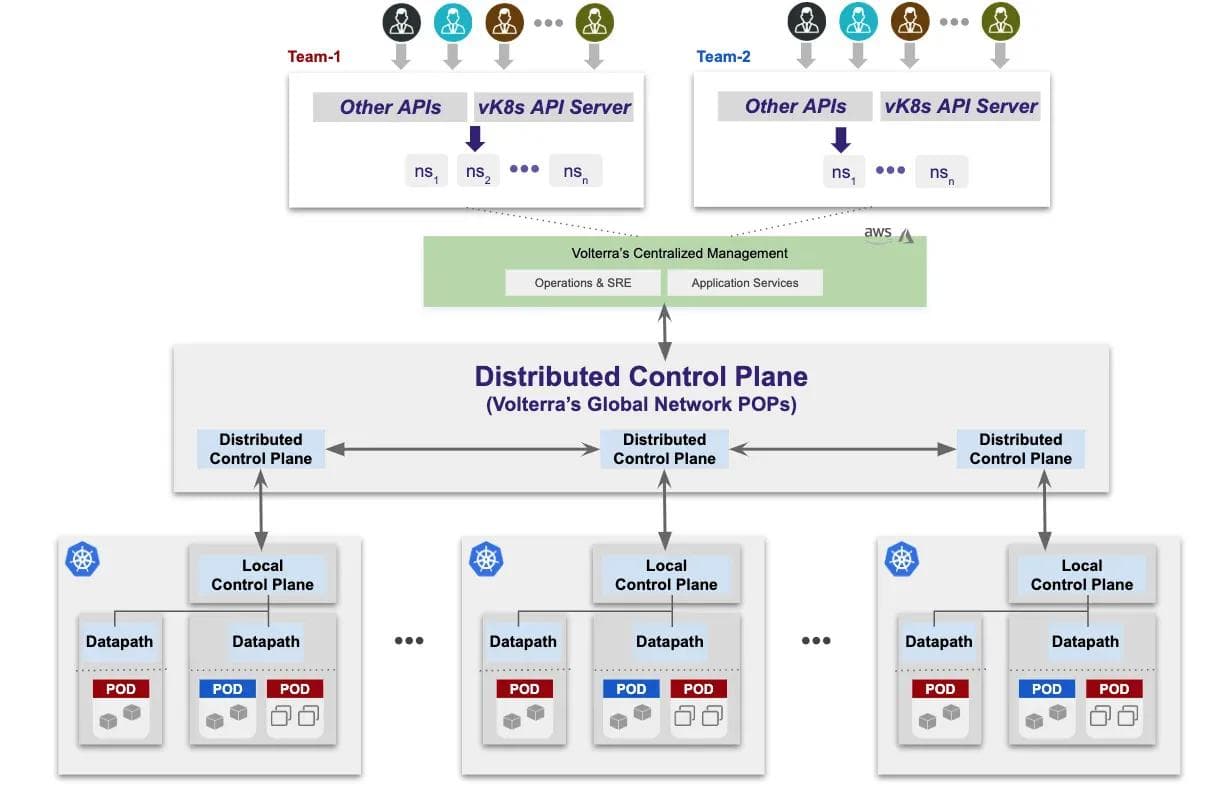

There are multiple issues that our platform team had to tackle in building a distributed control plane to manage a large number of distributed datapath processing nodes. Since we built a new datapath, there was no out-of-the-box control plane that could be leveraged, so we had to write our own:

- Local Control Plane to manage multiple datapaths running within a single cluster (cloud, edge, or network PoP). These datapath nodes needed a local control plane to manage configuration changes, handle route updates, perform health checks and service discovery, peer with other network devices using a protocol like BGP, aggregate metrics and access logs, etc.

- Distributed Control Plane to manage multiple local control planes. A distributed control plane runs in our global network PoPs (Figure 3) and is responsible for configuration management of the local control planes, distribution of operational state across the local control planes, and data collection from each of the nodes. To distribute operational state, we decided to use BGP, as it is eventually consistent and robust. Since we had built a very high-scale, multi-threaded implementation of BGP as part of OpenContrail, we leveraged it and added extensions for load balancing: health-check distribution, HTTP/API endpoints, policy propagation, etc.
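The property that made BGP attractive for distributing operational state, eventual consistency, can be shown with a toy model. The sketch below is not BGP: it assumes a simplified scheme in which each local control plane stamps its endpoint-health updates with a per-endpoint version number and every replica keeps only the newest version, so replicas that receive the same updates in any order end up with the same table. `HealthUpdate`, `OperationalStateTable`, and the site names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class HealthUpdate:
    site: str       # local control plane that originated the update
    endpoint: str   # hypothetical "service/ip:port" identifier
    healthy: bool
    version: int    # increases monotonically per (site, endpoint)


class OperationalStateTable:
    """One replica of the distributed operational state."""

    def __init__(self) -> None:
        self.state: Dict[Tuple[str, str], HealthUpdate] = {}

    def apply(self, update: HealthUpdate) -> bool:
        """Keep only the newest version per (site, endpoint); drop stale or duplicate updates."""
        key = (update.site, update.endpoint)
        current = self.state.get(key)
        if current is not None and current.version >= update.version:
            return False
        self.state[key] = update
        return True

    def apply_all(self, updates: Iterable[HealthUpdate]) -> None:
        for update in updates:
            self.apply(update)

    def healthy_endpoints(self) -> List[str]:
        return sorted(u.endpoint for u in self.state.values() if u.healthy)


updates = [
    HealthUpdate("pa2", "api/10.0.0.7:8080", True, 1),
    HealthUpdate("pa2", "api/10.0.0.7:8080", False, 2),
    HealthUpdate("ny8", "api/10.9.0.3:8080", True, 1),
]
# Two replicas receive the same updates in different orders and still converge.
replica_a, replica_b = OperationalStateTable(), OperationalStateTable()
replica_a.apply_all(updates)
replica_b.apply_all(reversed(updates))
print(replica_a.state == replica_b.state)  # True
print(replica_a.healthy_endpoints())       # ['api/10.9.0.3:8080']
```

Real BGP achieves a similar outcome through per-session implicit withdrawal and best-path selection rather than explicit version numbers; the versioned merge is only a compact way to show why order of arrival does not matter.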

In addition, a centralized management plane running in two cloud regions (AWS and Azure) is used alongside the distributed control plane to deliver multi-tenancy, as shown in Figure 4. Our SRE team can create multiple tenants, and each tenant is completely isolated from every other tenant across our global network; tenants can only reach each other over the public network. Within a tenant, services across namespaces can talk to each other based on HTTP/API/network routing and security rules.
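The isolation rules in the previous paragraph reduce to a small routing check. The Python sketch below assumes, beyond what the text states, that services in the same namespace may always talk to each other and that cross-namespace traffic inside a tenant is allowed only for explicitly listed namespace pairs; cross-tenant traffic is allowed only when the destination is exposed on the public network. `Service`, `TenantPolicy`, `can_route`, and the tenant names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass(frozen=True)
class Service:
    tenant: str
    namespace: str
    name: str


@dataclass
class TenantPolicy:
    # Hypothetical rule set: (source namespace, destination namespace) pairs
    # allowed to communicate inside one tenant.
    allowed_ns_pairs: Set[Tuple[str, str]] = field(default_factory=set)


def can_route(src: Service, dst: Service, policies: Dict[str, TenantPolicy],
              dst_is_public: bool = False) -> bool:
    if src.tenant != dst.tenant:
        # Tenants are fully isolated; only a publicly exposed service is reachable.
        return dst_is_public
    if src.namespace == dst.namespace:
        return True  # assumption: same-namespace traffic is allowed by default
    policy = policies.get(src.tenant)
    return policy is not None and (src.namespace, dst.namespace) in policy.allowed_ns_pairs


policies = {"acme": TenantPolicy({("web", "data")})}
web = Service("acme", "web", "frontend")
db = Service("acme", "data", "orders-db")
other = Service("globex", "api", "billing")
print(can_route(web, db, policies))     # True: allowed namespace pair
print(can_route(db, web, policies))     # False: the rule is one-directional
print(can_route(web, other, policies))  # False: different tenant, not public
```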

The control planes run as Kubernetes workloads in a separate tenant and isolated namespace that is under the control of only our SRE teams. As a result, no developer and/or customer can disrupt this service. In addition, since the control plane is distributed, an outage of a control plane in one location does not impact the overall service.

Globally Distributed Application Gateway?

Once we had figured out the needs for our global network and had defined the requirements for L3-L7+ network services for app clusters (a new datapath and distributed control plane), our product team came up with more requirements that made our life even more exciting. Basically, they wanted us to deliver a global, inter-cluster service mesh with the following features:

- Deliver application connectivity (routing, control, and observability) across the global network, but not network connectivity (at least not by default). The reason was simple: connecting networks creates all kinds of security vulnerabilities that can only be addressed with complex firewall rules, which makes change management harder.

- Improve edge load balancers in the network PoPs with endpoint health and reliability. With current edge load balancers (e.g., AWS Global Accelerator or Akamai’s global load balancer), the routing decision from the edge location to the backend is based on the health of the origin server, which in most cases is yet another load balancer rather than the actual serving endpoint. Our team wanted each of our edge load balancers to manage traffic based on the health of all endpoints across all clusters, not just the health of origin load balancers.

- Improve edge site (network PoP) selection as part of GSLB decision-making. Today, anycast or GSLB routes the user/client to the closest edge site for ingress. However, for various reasons (e.g., load on the backbone and/or transit providers), it might be better to send the user to a different ingress edge site.
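The third requirement can be sketched as a cost function for choosing the ingress PoP. In this Python example, which is an illustration rather than our GSLB algorithm, each candidate PoP's cost is the client's latency to it plus a penalty that grows once the PoP's backbone/transit utilization passes a threshold. The `knee` (0.7) and `penalty_ms` (100 ms) values and the `PopState` fields are assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PopState:
    name: str
    rtt_ms: float        # estimated client-to-PoP latency
    utilization: float   # 0.0-1.0, load on the PoP's backbone/transit links


def effective_cost(pop: PopState, knee: float = 0.7, penalty_ms: float = 100.0) -> float:
    """Latency plus a penalty that grows linearly once utilization exceeds the knee."""
    overload = max(0.0, pop.utilization - knee) / (1.0 - knee)
    return pop.rtt_ms + penalty_ms * overload


def select_ingress(pops: List[PopState], **kwargs) -> PopState:
    return min(pops, key=lambda p: effective_cost(p, **kwargs))


pops = [PopState("fr2", 8.0, 0.95), PopState("ams1", 14.0, 0.40)]
# fr2 is closer, but its links are congested (cost ~91 ms vs 14 ms for ams1).
print(select_ingress(pops).name)  # ams1
```

Plain anycast or nearest-site GSLB corresponds to `penalty_ms=0`, which would always pick `fr2` here; the penalty term is what lets a farther but less loaded PoP win.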

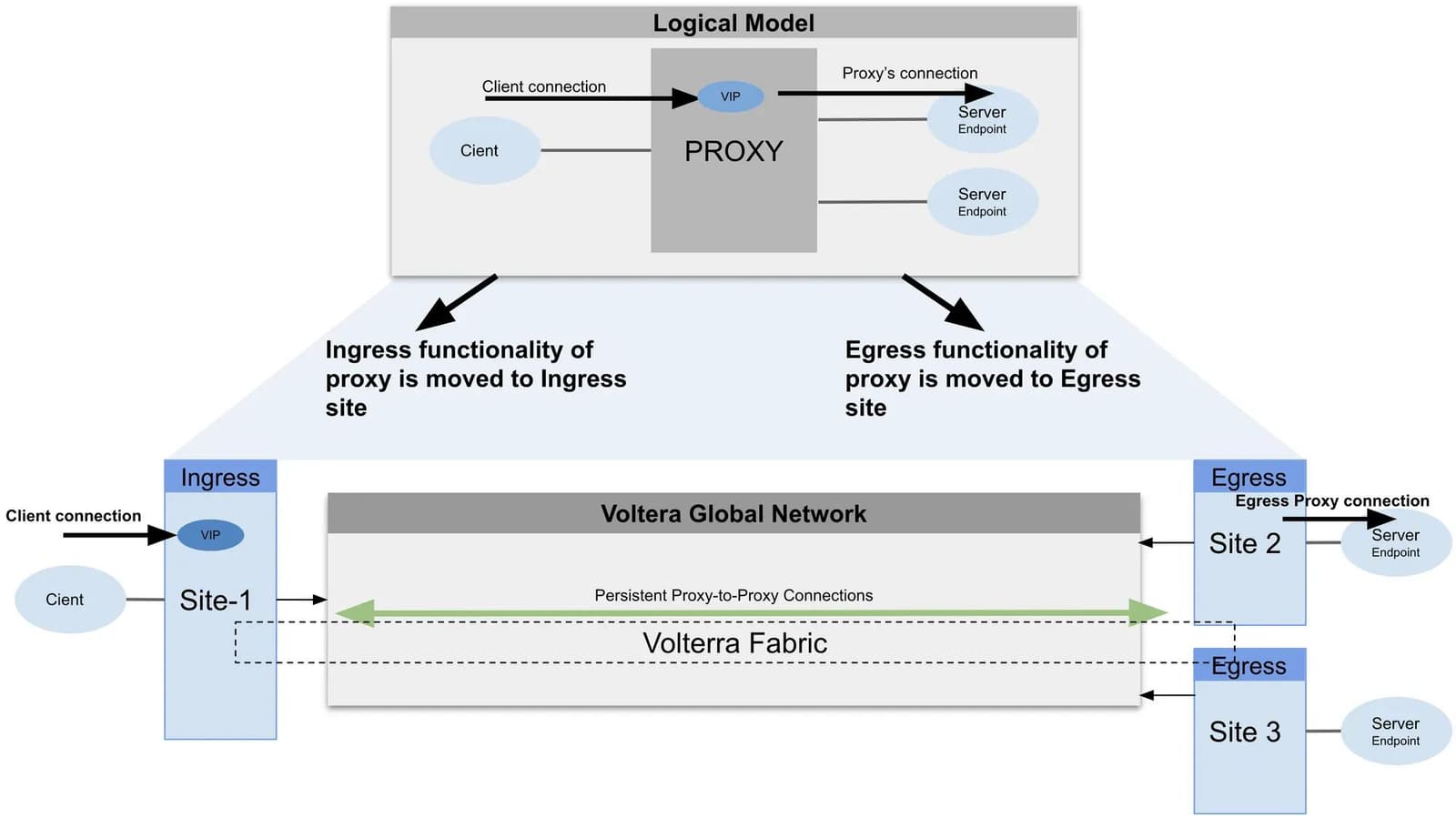

With requirements #2 and #3, the goal was to improve the response time and performance of client-to-server connectivity, which is critical in application-to-application communication and for large-scale SaaS applications. To meet requirements #2 and #3, it was clear that the best approach was to build a distributed proxy, consisting of an ingress proxy and an egress proxy, that routes based on application addressing rather than network addressing. In addition, we decided to distribute the health of serving endpoints (or servers) by leveraging the distributed control plane and the BGP extensions described earlier. This implementation of the distributed proxy is shown in Figure 5.
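The difference between routing on origin-load-balancer health and routing on endpoint health (requirement #2) shows up clearly in a small Python sketch. It assumes the ingress proxy knows the health of every endpoint in every cluster (distributed through the control plane) and, as an illustrative policy only, picks the lowest-latency cluster that has at least one healthy endpoint, then the endpoint with the fewest in-flight requests. `Cluster`, `Endpoint`, and both routing functions are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    name: str
    rtt_ms: float               # latency from this ingress PoP to the cluster
    origin_lb_up: bool = True   # all that an origin-health-only balancer sees


@dataclass
class Endpoint:
    cluster: str
    address: str
    healthy: bool
    in_flight: int = 0          # outstanding requests from this proxy


def route_by_origin_health(clusters: List[Cluster]) -> Optional[str]:
    """Conventional behavior: nearest cluster whose origin load balancer is up."""
    up = [c for c in clusters if c.origin_lb_up]
    return min(up, key=lambda c: c.rtt_ms).name if up else None


def route_by_endpoint_health(clusters: List[Cluster],
                             endpoints: List[Endpoint]) -> Optional[Endpoint]:
    """Nearest cluster with at least one healthy endpoint, then least in-flight."""
    for cluster in sorted(clusters, key=lambda c: c.rtt_ms):
        healthy = [e for e in endpoints if e.cluster == cluster.name and e.healthy]
        if healthy:
            return min(healthy, key=lambda e: e.in_flight)
    return None


clusters = [Cluster("aws-us-east", 12.0), Cluster("azure-eu-west", 80.0)]
endpoints = [
    Endpoint("aws-us-east", "10.0.1.4:8080", healthy=False),
    Endpoint("aws-us-east", "10.0.1.5:8080", healthy=False),
    Endpoint("azure-eu-west", "10.8.0.2:8080", healthy=True, in_flight=3),
    Endpoint("azure-eu-west", "10.8.0.3:8080", healthy=True, in_flight=1),
]
print(route_by_origin_health(clusters))                      # aws-us-east
print(route_by_endpoint_health(clusters, endpoints).address)  # 10.8.0.3:8080
```

In this example, an origin-health balancer keeps sending traffic to `aws-us-east` because its load balancer is still up, even though every endpoint behind it has failed; endpoint-aware routing sends the request to a healthy endpoint in another cluster instead.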

Since it became a distributed proxy with a complete L3-L7+ network stack, we call it a globally distributed application gateway. All the functionality available in our network datapath is now available across our global network: for example, HTTP- or API-based load balancing and routing, policy enforcement at the edge, API transformation, TLS termination closer to the user, etc.

Gains delivered by Distributed Application Gateway

There are multiple gains that the team was able to realize with the implementation of a globally distributed application gateway. These gains were in the areas of operational improvements, security, performance, and TCO:

- Security and Compliance: the ability to provide application access across sites without network access has significantly improved security and compliance across application clusters in edge or cloud locations. It has also reduced the need for complex firewall configuration management, as there is no network access across sites and the application clusters can be connected while remaining completely behind NAT in each site.

- Operational Overhead: a common set of network services across our network PoPs and edge/cloud sites has removed the need for our SRE or DevOps teams to integrate configuration and observability across different sets of services.

- Performance and Reliability Improvements: since we route from our network PoP to the most optimal healthy endpoint rather than to the most optimal load balancer, the platform is able to deliver much higher performance for application-to-application or user-to-application traffic than any other solution on the market.

- TCO Gains: for large-scale SaaS applications, it is common to have hundreds of replicas of a service served from multiple data centers across the globe. To do this effectively, a SaaS provider has to build complex capabilities that are now available to all our customers through this distributed application gateway.

To Be Continued…

This series of blogs will cover various aspects of what it took for us to build and operate our globally distributed SaaS service with many application clusters in public cloud, our private network PoPs, and edge sites. Next up is Platform and Data Security…
