Flight: Convergent Evolution in Practice

Most of us have dreamt of being able to fly, and, as it turns out, so has evolution. The ability to fly, whether to evade predators, search more efficiently for food, or migrate long distances, has repeatedly proven to be of great utility to a wide variety of animals. So much so, in fact, that evolution keeps finding some path to flight. Today, we see three distinct groups of animals that have independently developed the ability to fly: insects, birds, and mammals (bats). Each of these groups followed a different evolutionary path to flight, and its current form of flight reflects its ancestral, pre-flight form. Consider insects: ancestral insects had exoskeletons, which are low density and laminated, resulting in different tensile strengths along different dimensions. As a consequence, insect evolution toward flight leveraged deformable wings, an approach that took advantage of the low-density, variable-tensile-strength building block at hand. For birds, on the other hand, the crucial "technology" on the journey toward flight was feathers. In this case, feathers were a happy coincidence, driven by the needs of a set of warm-blooded dinosaurs that required a fur-like material to retain heat; only later did feathers turn out to be a fortuitous enabler of flight. Finally, bats, the most recent group to develop flight, use an approach based on incremental evolution from gliding mammals, but do not yet have the benefit of eons of evolutionary adaptation.

An interesting and noteworthy complement to this story is that, when humans finally achieved practical flight using machines, they applied completely different approaches: either lighter-than-air lift or fast-moving airfoils propelled by gasoline-powered engines.

That is interesting, but what does it have to do with the edge?

From an evolutionary perspective, the paths that application delivery has taken over the last 20 years are also a story of convergent evolution. More specifically, these years have seen the development of multiple delivery technologies that, in hindsight, were the initial, nascent evolutionary steps toward what is becoming the application edge.

The precursor to many of these ancestral technologies was the Content Delivery Network (CDN). This technology was motivated (its "evolutionary niche," so to speak) by the need to deliver a large amount of fairly static data, typically large images and streaming audio/video, to a sizable consumer client community. The solution was to place cached copies of the large data files in multiple geographically dispersed locations, closer to the client devices. This approach distributed the delivery workload, in terms of both compute and bandwidth, away from a single central data center, while also providing lower and more predictable latency.

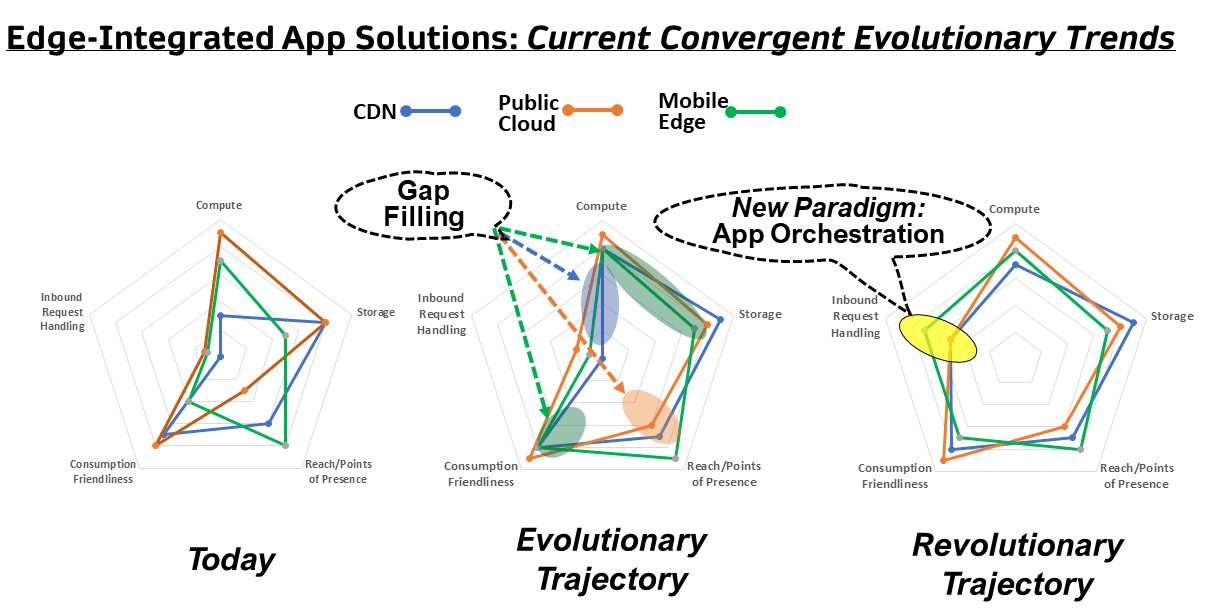

Today, as applications have advanced and become tightly integrated into our everyday routines, the need to improve the customer's application experience continues to drive demand for lower latency and more bandwidth. However, the ground rules have changed. Just as climate and geography continuously drive adaptation in nature, changes in application delivery requirements drive changes in the solutions required. Modern application delivery needs extend well beyond the basic CDN scenario: today's more sophisticated use cases must do more than deliver simple static content, because they also have requirements for computation and interactivity. Examples of applications with these more advanced needs are videoconferencing and electronic trading. More broadly, the general trend toward a Single Page Application (SPA) paradigm, in which an application aggregates a number of disparate web services, requires a more robust set of distributed resources than mere static storage to maintain a rich user experience. As the environment has "evolved" toward these new use cases, we see multiple incumbent market solutions converging as they adapt to better fit this new and lucrative "evolutionary niche," the technology analogue of convergent evolution.

The first of these pre-existing solutions is the aforementioned CDN. Its evolutionary incumbency "strength" is its substantial existing infrastructure, a large number of deployed CDN nodes; its "evolutionary" path is to enhance those nodes from a pure focus on cache-like storage toward a more balanced mix of storage technologies (files, databases, object stores) coupled to compute resources that can operate on that storage.

The second of the incumbent market solutions is the public cloud providers. Their area of "strength" is a robust ecosystem of compute, storage, and bandwidth resources, along with flexible consumption models for those resources. For example, AWS offers multiple forms of database technology, makes compute available through either a server-based or serverless model, has a rich set of technologies for handling identity and authentication, and provides a diverse set of ancillary services such as logging/reporting, visualization, and data modeling. However, their "evolutionary gap" is that they have fewer points of presence than the incumbent CDN vendors, so they do not enjoy the same breadth of distribution.

At the other end of the points-of-presence scale are the Mobile Service Providers (MSPs), especially as they roll out their 5G infrastructure. The large MSPs plan to have tens of thousands of mobile access points, each of which is an entry point onto the core network. In addition to the "strength" of distribution and scale, they have computational and some limited storage capacity at these access points; however, until recently, that compute and storage capacity has been limited in scope to the MSPs' own infrastructure needs. Therefore, the "gap" they need to address is migrating to a computing paradigm that exposes this computing infrastructure to external applications, and augmenting it with additional storage capabilities.

Getting back to flight—and why humans used a different approach

The journey just outlined maps naturally onto an incremental evolutionary process. However, sometimes there are evolutionary "game changers[1]": events that disrupt evolution and force a reassessment of the linear progression. For humans pursuing flight, systems that could quickly deliver a large amount of power (e.g., gasoline-powered engines), coupled with materials and engineering technologies that could fabricate strong, lightweight alloys, allowed us to reimagine how the challenge of flight might be tackled, "changing the game" relative to how nature developed flight.

Relating this story back to the edge, the application delivery narrative thus far has focused on offloading "server-side" work, that is, the part of the delivery chain that begins with the server receiving a request. Specifically, because of the "evolutionary predisposition" of the preexisting solutions (whose value came from lightening the burden on the application server), the focus has implicitly been on offloading and distributing the computation and content delivery performed in response to a customer's ("client") request.

However, consider what happens when we think of these geographically dispersed points of presence not only as compute nodes and caches that unburden the server ("application proxies"), but also as control points that pay equal attention to incoming client requests, mapping those requests onto the infrastructure and compute/cache nodes appropriate for each application's requirements. For example, a latency-tolerant but high-bandwidth application, such as cloud file backup, might be routed differently than a low-bandwidth, latency-sensitive application like online gaming. Banking applications that need a central data clearinghouse might be steered to a central data center. This concept, which I think of as an "application orchestrator," follows naturally once you think of the edge not as something that merely offloads the server, but as an element whose role is to be the on-ramp to the generalized server/access-node environment.
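The routing idea in the paragraph above can be sketched as a simple rule table. This is a minimal, hypothetical illustration in Python, not an F5 or vendor API: the `Target` tiers, the `AppProfile` fields, the `steer()` function, and the choice to send latency-tolerant bulk traffic to CDN points of presence are all assumptions made for illustration. A real orchestrator would presumably consult live telemetry rather than static flags.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    """Hypothetical tiers of infrastructure an orchestrator could steer to."""
    ACCESS_NODE = "5G access-point compute"  # closest to the client
    CDN_NODE = "CDN point of presence"       # regional cache plus compute
    CENTRAL_DC = "central data center"       # single source of truth


@dataclass(frozen=True)
class AppProfile:
    """Hypothetical per-application requirements attached to a client request."""
    name: str
    latency_sensitive: bool
    high_bandwidth: bool
    needs_central_clearing: bool = False


def steer(profile: AppProfile) -> Target:
    """Map an incoming request onto infrastructure that fits the app's needs."""
    # Apps that must reconcile against one authoritative ledger go central.
    if profile.needs_central_clearing:
        return Target.CENTRAL_DC
    # Latency-sensitive traffic is served as close to the client as possible.
    if profile.latency_sensitive:
        return Target.ACCESS_NODE
    # Assumption: latency-tolerant bulk transfers use regional PoPs.
    if profile.high_bandwidth:
        return Target.CDN_NODE
    # Assumed default when no requirement points elsewhere.
    return Target.CENTRAL_DC


if __name__ == "__main__":
    for app in (
        AppProfile("cloud-backup", latency_sensitive=False, high_bandwidth=True),
        AppProfile("online-gaming", latency_sensitive=True, high_bandwidth=False),
        AppProfile("banking", latency_sensitive=False, high_bandwidth=False,
                   needs_central_clearing=True),
    ):
        print(f"{app.name}: {steer(app).value}")
    # cloud-backup: CDN point of presence
    # online-gaming: 5G access-point compute
    # banking: central data center
```

The rules are checked in priority order, so a hard constraint such as central clearing always wins over a performance preference.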

Let’s build a plane, not an Ornithopter

Just as humans finally achieved practical flight not through a mechanized, bird-like approach (da Vinci’s famed "Ornithopter") but by thinking about how to best leverage the most appropriate technologies at hand (airfoils coupled to gasoline-powered engines), we should think about how application infrastructure can be reimagined with the advent of a pervasive, distributed, service-rich edge. As the power of this emerging capability becomes more apparent and more consumable, application owners and operators will begin the next step in the application evolution journey. The resulting "game-changing" event, the disruptive evolution of the edge from merely a proxy for the application server or an application cache on steroids into a full-blown application orchestrator, will open the application ecosystem up to an explosion of new possibilities, which will be the subject of future articles.

Previewing that road ahead, I intend to share a vision of how embracing the edge’s role as application orchestrator will enable a more declarative, intent-driven specification of each application’s intended behavior, driving optimization of both the application’s infrastructure and the customer experience of the application. For example, one application, perhaps one emphasizing augmented reality technology, might specify a policy that prioritizes latency and bandwidth, whereas a financial application may prioritize reliability or centralization, and yet another consumer IoT application might focus on OpEx management. More broadly, adopting a mindset that expands the role of the edge and leverages it as the application orchestrator also positions the edge as the logical location to implement security policies and visibility, fulfilling another dream of many application owners: infrastructure-agnostic application controls.
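An intent-driven specification could look something like the following minimal Python (3.9+) sketch. Everything here is hypothetical: the `Site` attributes and their 0 to 1 values are made up, the weights in `INTENTS` are invented to mirror the augmented reality, financial, and IoT examples above, and weighted scoring is just one simple way to turn declared priorities into a placement decision. The point is that each application declares what it cares about, and the orchestrator decides where it runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    """A hypothetical candidate location with made-up, pre-normalized scores.

    Every attribute is in 0..1, where higher is better for the application.
    """
    name: str
    latency: float      # 1.0 = lowest latency to the client
    bandwidth: float    # 1.0 = most available bandwidth
    reliability: float  # 1.0 = highest availability / centralization
    cost: float         # 1.0 = cheapest to operate (OpEx)


# Declarative intents: each app states WHAT it prioritizes, as weights,
# not WHERE it should run. All names and values are hypothetical.
INTENTS = {
    "ar-app": {"latency": 0.6, "bandwidth": 0.4},
    "finance-app": {"reliability": 1.0},
    "iot-app": {"cost": 0.8, "reliability": 0.2},
}

# Illustrative candidate sites; the numbers are not measurements.
SITES = [
    Site("access-node", latency=1.0, bandwidth=0.6, reliability=0.3, cost=0.4),
    Site("cdn-pop", latency=0.7, bandwidth=0.9, reliability=0.6, cost=0.6),
    Site("central-dc", latency=0.2, bandwidth=0.7, reliability=1.0, cost=0.9),
]


def score(site: Site, intent: dict[str, float]) -> float:
    """Weighted sum of the site attributes that the intent cares about."""
    return sum(weight * getattr(site, attr) for attr, weight in intent.items())


def place(app: str) -> str:
    """Pick the site whose attributes best satisfy the app's declared intent."""
    intent = INTENTS[app]
    return max(SITES, key=lambda s: score(s, intent)).name


if __name__ == "__main__":
    for app in INTENTS:
        print(app, "->", place(app))
    # ar-app -> access-node
    # finance-app -> central-dc
    # iot-app -> central-dc
```

With these invented numbers the IoT intent lands on the central data center simply because it was given the best cost score; changing a site's attributes or an app's weights changes the placement without touching the application itself, which is the appeal of a declarative policy.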

[1] The development of oxygen-based metabolism is a good example, but I’ll have to save that story for another time.
