One of the often-mentioned benefits of cloud – private or public, on-prem or off – is speed. Speed to provision, speed to scale, speed to ultimately get an app delivered to the users who desire or demand its functionality.

To get that speed, much of cloud is standardized. Since the earliest days of assembly-line automation, standardization has been the golden key to achieving faster results, and that remains true in cloud environments. Amazon's APIs are not interchangeable with those of Azure or Google or even OpenStack, but Amazon is standardized across all its own services, which means all applications deployed atop its infrastructure share a great deal in their day-to-day operational procedures.

The same is true for OpenStack, which has a standard deployment with standard services but enables bespoke environments through its plug-in architecture. That means you can mix and match load balancers, web application firewalls, and compute to meet your specific requirements.

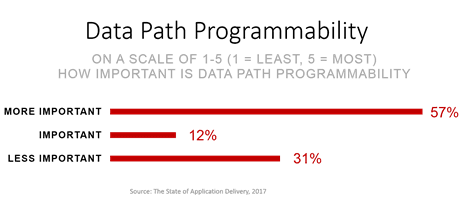

What OpenStack doesn’t do, however, is extend that bespoke approach beyond the basics without additional code. For example, you can plug in a different load balancer, but you are limited to the algorithms and features available through OpenStack. So if a load balancer has advanced capabilities – say data path programmability (which our surveys tell us is pretty important to NetOps folks, at least), SSL termination, or support for distinct client-side and server-side TCP profiles – you can’t really use the OpenStack API to turn them on and off, because they aren’t “standard” features across all the load balancers you might plug in.

That’s fair. What’s not fair is forcing you to choose between the benefits of a standardized cloud platform like OpenStack and the advanced features that make apps faster and safer.

That’s where open source once again comes to the rescue, and it shows why 90% of respondents in the State of Open Source survey cite “innovation” as one of the things open source improves.

To give those relying on F5 BIG-IP for load balancing and secure app delivery access to its advanced features – without breaking OpenStack – we’ve made it possible to seamlessly define and include those features in an OpenStack implementation.

The F5 OpenStack LBaaSv2 Enhanced Service Definition (ESD) provides a simple, JSON-based mechanism for specifying the provisioning of advanced profiles on F5 BIG-IP in an OpenStack environment. With ESDs, you can deploy OpenStack load balancers customized for specific applications.

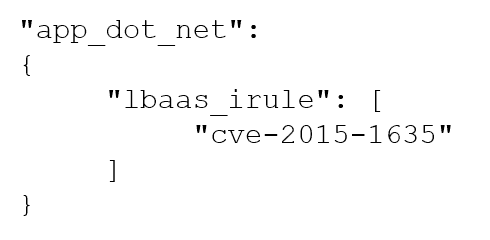

In a nutshell, an ESD is a standard JSON file with key-value pairs describing the profiles, policies, or iRules you want applied to specific applications.

For example, the JSON in the image above tells the BIG-IP to apply an iRule named “cve-2015-1635” to the virtual server (the application) named “app_dot_net”.

That’s it. This simple little snippet gives an app protection against a real CVE using some data path scripting magic and a little open source innovation.
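To make that structure concrete, here is a minimal Python sketch that builds the same one-entry ESD and writes it out as JSON. It assumes the iRule tag is spelled `lbaas_irule`, which is our reading of the driver's ESD documentation. The helper names `build_irule_esd` and `write_esd` and the output file name are hypothetical, and where the agent expects ESD files to live is left to the driver docs.

```python
import json
from typing import Dict, List

# ASSUMPTION: "lbaas_irule" is the ESD tag for attaching iRules, per our
# reading of the F5 LBaaSv2 driver documentation. Verify before use.
IRULE_TAG = "lbaas_irule"


def build_irule_esd(esd_name: str, irules: List[str]) -> Dict[str, Dict[str, List[str]]]:
    """Return a one-entry ESD that attaches the given iRules under esd_name."""
    return {esd_name: {IRULE_TAG: list(irules)}}


def write_esd(esd: Dict[str, Dict[str, List[str]]], path: str) -> None:
    """Serialize the ESD as pretty-printed JSON (hypothetical helper)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(esd, f, indent=2, sort_keys=True)
        f.write("\n")


if __name__ == "__main__":
    esd = build_irule_esd("app_dot_net", ["cve-2015-1635"])
    # Show the JSON that would go into the ESD file.
    print(json.dumps(esd, indent=2))
    write_esd(esd, "app_dot_net_esd.json")  # hypothetical file name
```

Generating the file rather than hand-editing it keeps ESD names and iRule names in one place, which can help when the same iRule is attached to many applications.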

Profiles and policies, too, can be applied the same way using the appropriate tags, all documented here.
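Because ESD files are plain JSON, a misspelled tag is easy to miss. The sketch below is a hypothetical pre-deployment check in Python that flags tags it doesn't recognize. The `KNOWN_TAGS` set (client- and server-side TCP and SSL profiles, iRules, policies, persistence) reflects the tags as we understand them from the F5 ESD documentation; treat it as an assumption and reconcile it with the docs for your driver version. The profile and policy names in the sample (`tcp-mobile-optimized`, `tcp-lan-optimized`, `my_policy`) are illustrative only.

```python
import json
from typing import Dict, List

# ASSUMPTION: tag names as we understand them from the F5 LBaaSv2 ESD
# documentation. Verify against the docs for your driver version.
KNOWN_TAGS = {
    "lbaas_ctcp",              # client-side TCP profile
    "lbaas_stcp",              # server-side TCP profile
    "lbaas_cssl_profile",      # client-side SSL profile
    "lbaas_sssl_profile",      # server-side SSL profile
    "lbaas_irule",             # list of iRules
    "lbaas_policy",            # list of policies
    "lbaas_persist",           # persistence profile
    "lbaas_fallback_persist",  # fallback persistence profile
}


def validate_esd(text: str) -> Dict[str, List[str]]:
    """Parse ESD JSON text; return {esd_name: [unrecognized tags]} for any problems."""
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("an ESD file must contain a JSON object")
    problems: Dict[str, List[str]] = {}
    for name, tags in data.items():
        if not isinstance(tags, dict):
            raise ValueError(f"ESD {name!r} must map to a JSON object of tags")
        # Iterating a dict yields its keys, i.e. the tag names.
        unknown = sorted(set(tags) - KNOWN_TAGS)
        if unknown:
            problems[name] = unknown
    return problems


# Illustrative ESD: profile and policy names are hypothetical, and
# "lbaas_polcy" is a deliberate typo for the validator to catch.
SAMPLE = """
{
  "app_dot_net": {
    "lbaas_ctcp": "tcp-mobile-optimized",
    "lbaas_stcp": "tcp-lan-optimized",
    "lbaas_irule": ["cve-2015-1635"],
    "lbaas_polcy": ["my_policy"]
  }
}
"""

if __name__ == "__main__":
    print(validate_esd(SAMPLE))  # prints {'app_dot_net': ['lbaas_polcy']}
```

A check like this only catches unknown tag names; whether a referenced profile, policy, or iRule actually exists on the BIG-IP is still something to confirm on the device.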

The F5 OpenStack LBaaSv2 Driver for BIG-IP is open source and available on GitHub, so you can extend it, too, if you like. That said, ESDs offer the same extensibility as having access to the code and doing it yourself.

But hey, it’s open source, so whatever trips your trigger and, more importantly, delivers your apps.

You can read more about ESDs and grab the F5 OpenStack LBaaSv2 Driver for BIG-IP on GitHub here:
